If you missed the first 🧵 on Decision Trees you can read it here 🔽

We know that for the best result, we need to ask great questions.

How do we know if a question is good?

We do measurements and comparisons.

For Decision Trees, we usually use the Gini Impurity Index, or Gini Index for short.

Let's see how Gini Index works:

1/8

Gini Index will tell us how diverse the dataset is.

If a set contains mostly similar elements, it has a low Gini Index.

Let's consider two sets:

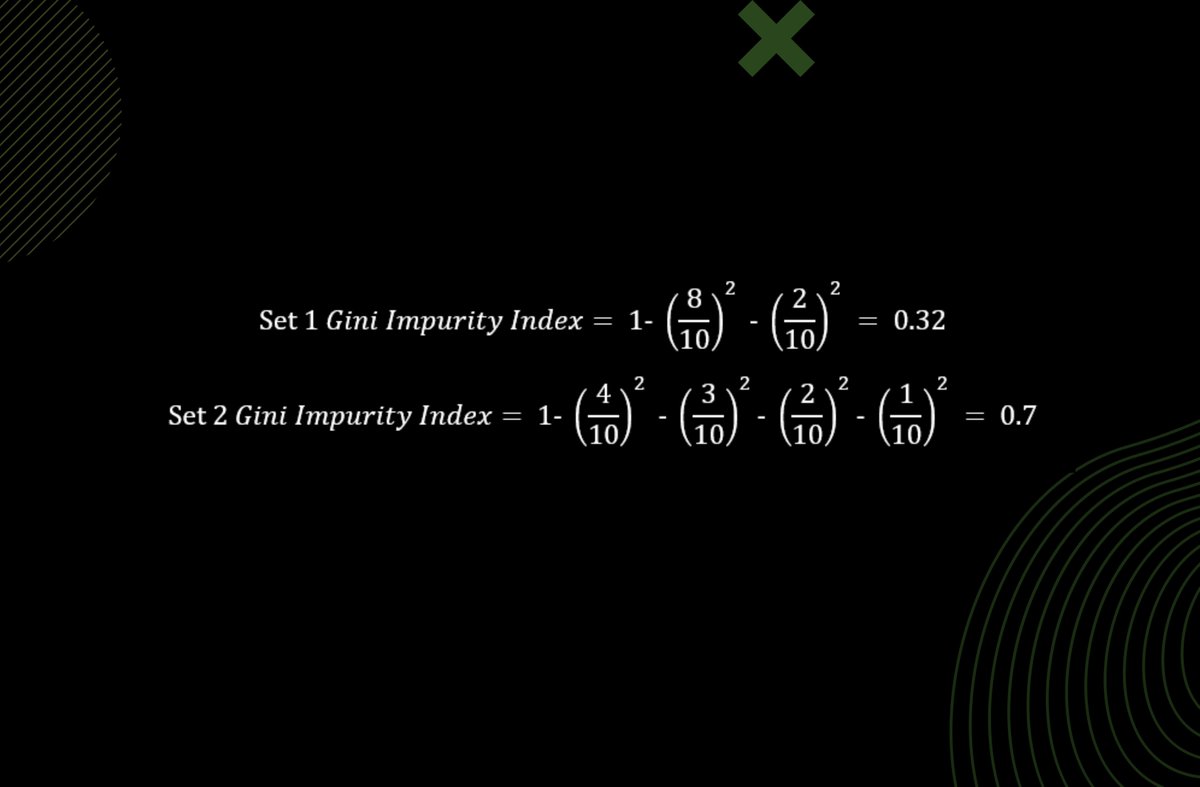

Set 1: 8 🍎 , 2 🍌

Set 2: 4 🍎 , 3 🍌 , 2 🍉 , 1 🍋

Set 1 seems more pure, meaning it has less diversity.

2/8

Set 1 contains mostly 🍎 , while Set 2 has many different fruits.

We can see this difference, but we need to use numbers for easy comparison - we need a measurement.

Impurity is based on probability.

Let's see what is happening in the background.

3/8

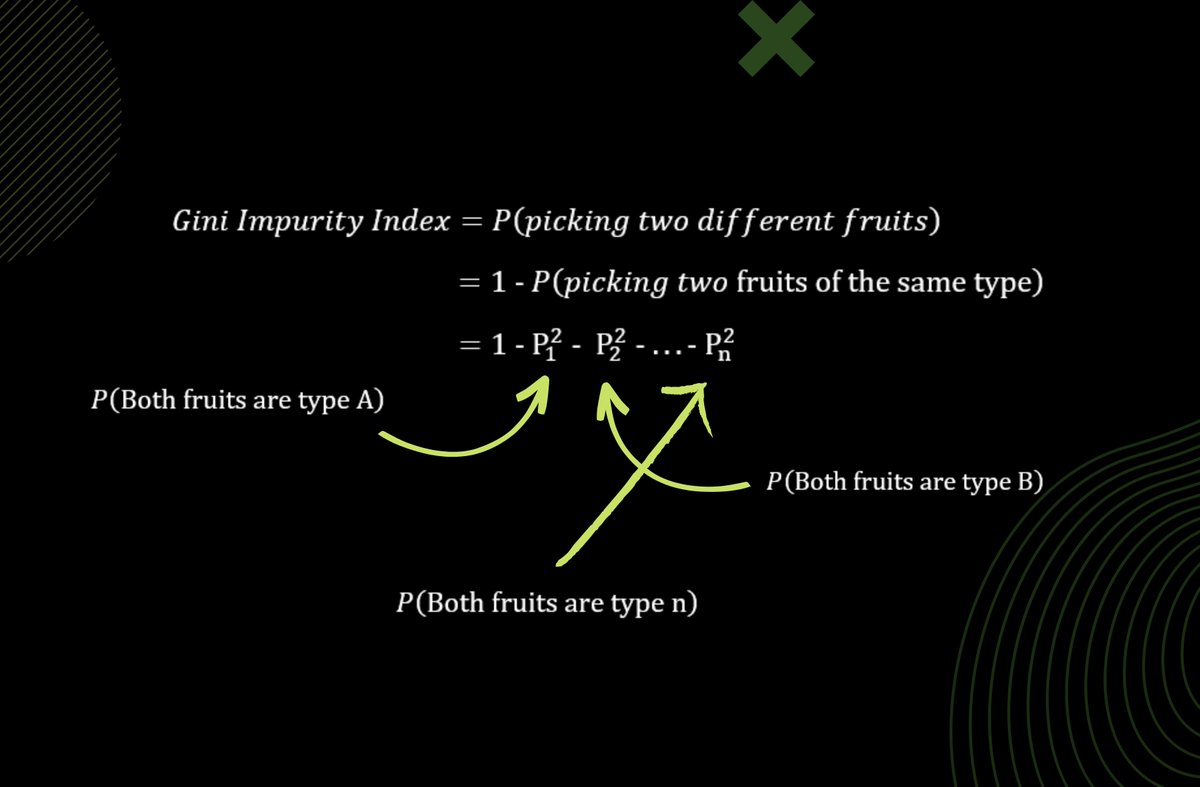

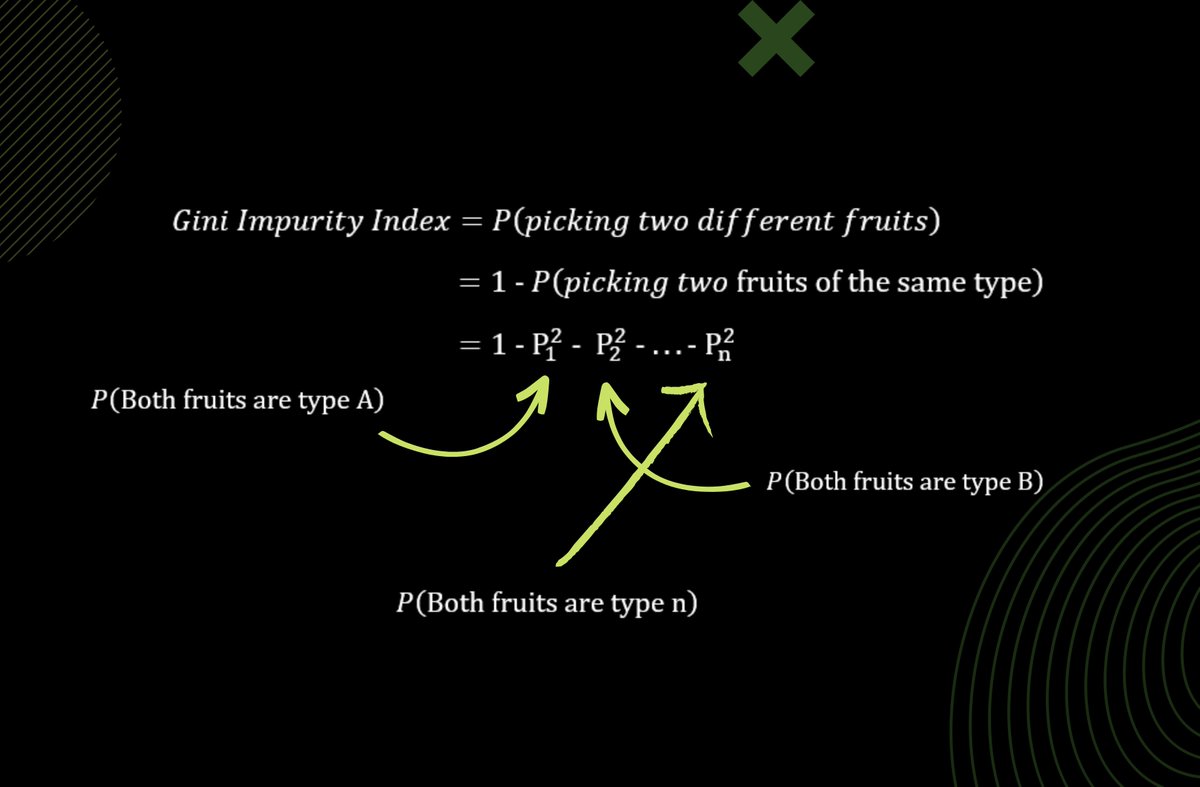

If we pick 2 fruits at random from a set, what is the probability that they will be different?

- For Set 1, the probability is low, since it contains mainly 🍎

- For Set 2, the probability is higher, because it is more diverse.
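
To put numbers on the two sets, here is a minimal Python sketch. It assumes the usual Gini formula, 1 minus the sum of squared class proportions, which is the chance that two random picks differ when the first fruit is put back before the second pick. The function name `gini_impurity` and the fruit labels are just illustrative choices, not something from the thread.

```python
from collections import Counter


def gini_impurity(items):
    """Chance that two random picks (with replacement) are different."""
    total = len(items)
    if total == 0:
        return 0.0  # treat an empty set as perfectly pure
    counts = Counter(items)
    # 1 - sum of squared proportions of each kind of fruit
    return 1.0 - sum((n / total) ** 2 for n in counts.values())


# The two sets from the example above
set_1 = ["apple"] * 8 + ["banana"] * 2
set_2 = ["apple"] * 4 + ["banana"] * 3 + ["watermelon"] * 2 + ["lemon"] * 1

print(round(gini_impurity(set_1), 2))  # 0.32
print(round(gini_impurity(set_2), 2))  # 0.7
```

Set 1 scores about 0.32 and Set 2 about 0.70, which matches the intuition that Set 2 is more diverse.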

4/8

But how do we use the Gini Index to decide which is the best question?

1. We calculate the Gini Index for every leaf in the tree

2. Take the average of the leaves, weighted by leaf size, to get the Gini Index for the tree

3. Choose the tree (question) with the lowest Gini Index

Lower Gini Index = Better split!
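
The steps above could look roughly like this in Python. This is only a sketch: the two questions and the way they split the fruits are made up for illustration, and the leaves are averaged weighted by their size (the common CART-style choice), so a tiny leaf does not count as much as a big one. `gini_impurity` and `split_gini` are hypothetical helper names.

```python
from collections import Counter


def gini_impurity(items):
    """1 - sum of squared class proportions (0.0 for an empty leaf)."""
    total = len(items)
    if total == 0:
        return 0.0
    return 1.0 - sum((n / total) ** 2 for n in Counter(items).values())


def split_gini(leaves):
    """Size-weighted average of the Gini Index of each leaf."""
    total = sum(len(leaf) for leaf in leaves)
    return sum(len(leaf) / total * gini_impurity(leaf) for leaf in leaves)


# Hypothetical questions applied to Set 2 (4 apples, 3 bananas, 2 watermelons, 1 lemon)
questions = {
    "Is it yellow?": [
        ["banana"] * 3 + ["lemon"],           # yes
        ["apple"] * 4 + ["watermelon"] * 2,   # no
    ],
    "Is it a watermelon?": [
        ["watermelon"] * 2,                              # yes
        ["apple"] * 4 + ["banana"] * 3 + ["lemon"],      # no
    ],
}

# Steps 1 and 2: score every question; step 3: keep the lowest score
scores = {q: split_gini(leaves) for q, leaves in questions.items()}
best_question = min(scores, key=scores.get)

for q, score in scores.items():
    print(q, round(score, 3))
print("Best:", best_question)
```

With this made-up data, "Is it yellow?" gets the lower score, so it would be the better question to ask first.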

7/8

That's it for today.

I hope you've found this thread helpful.

Like/Retweet the first tweet below for support and follow @levikul09 for more Data Science threads.

Thanks 😉

8/8
