I'd like to tell you about one of the biggest and most effortful studies on behavior change ever conducted, and about its strange findings (which may not be obvious even if you read the paper - I only realized their implications on my second reading!)

Let's begin:

[study megathread]🧵

The study had 61,000 participants, all with gym memberships. 30 scientists worked in small teams to develop interventions, each aiming to increase gym attendance over 4 weeks. This resulted in 53 interventions to test. A promising setup to figure out what truly changes behavior!

To interpret the results, it's important to understand the study's design. Participants were randomized into different groups:

1. Control group - these participants received points at the study start (convertible into small sums of money on Amazon), and that's all

2. Baseline group - these participants received 3 things:

• planning - participants planned the dates and times when they'd work out

• small incentives - they earned points (usable on Amazon) for every workout

• reminders - they got a text 30 mins before each scheduled workout

3. The remaining participants were each assigned to one of 52 other interventions.

These 52 interventions incorporated all of the elements that the baseline group got (planning + incentives + reminders) but then added extra stuff on top to test different strategies.

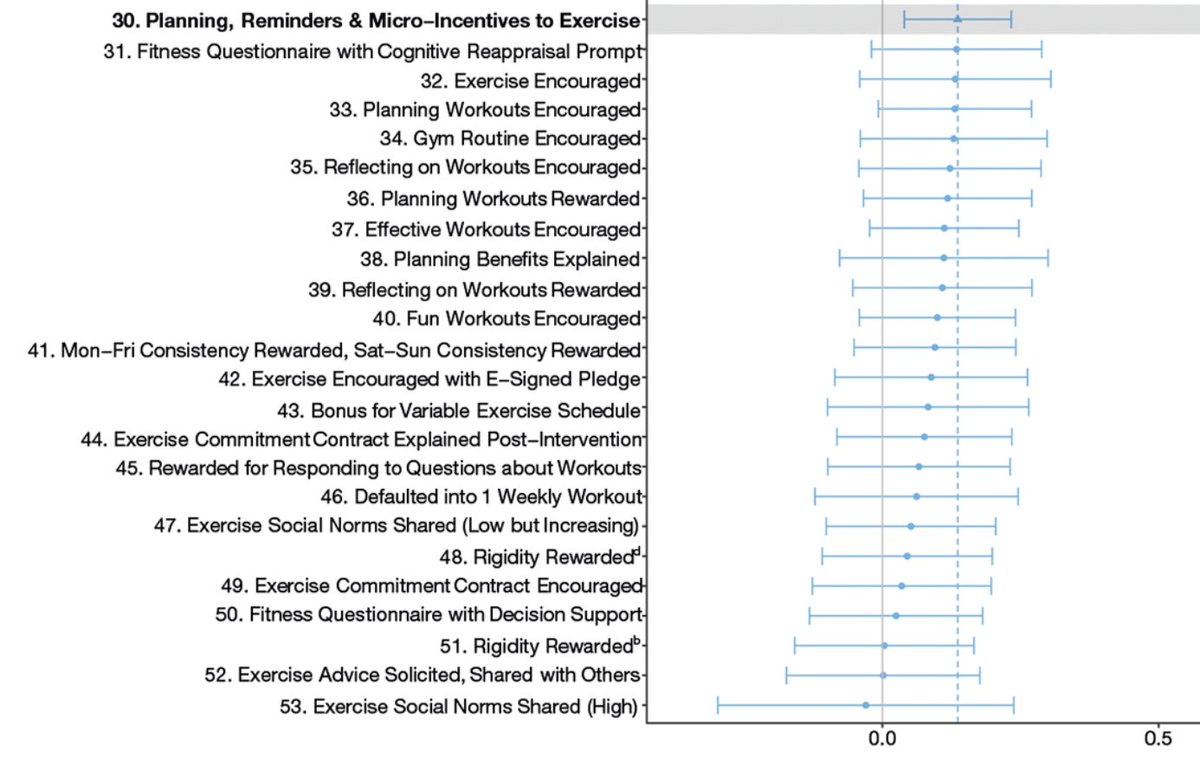

What about the other 52 interventions?

The paper abstract says: "45% of these interventions significantly increased weekly gym visits by 9% to 27%."

Later in the paper, we learn this means 45% achieved p<0.05 compared to the control.

But that's a confusing comparison.

The 52 interventions each incorporated the elements of the baseline and added extra stuff on top of the baseline.

We know the baseline intervention beats the control.

So, of course, many interventions beat the control since they incorporate the baseline!

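To see why beating the control says little on its own, here's a minimal power calculation in Python. Every number in it (a standard deviation of 1.5 weekly visits, 1,000 people per arm, a baseline effect of 0.3 extra visits per week) is made up for illustration, not taken from the paper, and it assumes a simple two-sample z-test on roughly normal visit counts. It asks: if an add-on does literally nothing beyond the baseline, how often does it still reach p < 0.05?

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal distribution (mean 0, sd 1)


def power_two_sample(true_diff: float, sd: float, n_per_arm: int, alpha: float = 0.05) -> float:
    """Chance that a two-sided, two-sample z-test gives p < alpha, when the true
    difference in means is `true_diff` and both arms share the same sd and size."""
    se = sd * (2 / n_per_arm) ** 0.5            # standard error of the difference in means
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    shift = true_diff / se                      # expected test statistic, in SE units
    return STD_NORMAL.cdf(shift - z_crit) + STD_NORMAL.cdf(-shift - z_crit)


# Hypothetical numbers, NOT from the paper:
SD_VISITS = 1.5        # sd of weekly gym visits
N_PER_ARM = 1000       # participants per arm
BASELINE_EFFECT = 0.3  # baseline (planning + incentives + reminders) vs. control, visits/week
ADD_ON_EFFECT = 0.0    # an add-on that adds nothing beyond the baseline
N_ARMS = 52

# Each arm contains the baseline, so versus control its true effect is baseline + add-on.
vs_control = power_two_sample(BASELINE_EFFECT + ADD_ON_EFFECT, SD_VISITS, N_PER_ARM)
# Versus the baseline group, only the add-on's own effect is being tested.
vs_baseline = power_two_sample(ADD_ON_EFFECT, SD_VISITS, N_PER_ARM)

print(f"P(useless add-on reaches p<0.05 vs. control):  {vs_control:.3f}")
print(f"P(useless add-on reaches p<0.05 vs. baseline): {vs_baseline:.3f}")
print(f"Expected 'significant' arms out of {N_ARMS}: "
      f"{N_ARMS * vs_control:.1f} vs. control, {N_ARMS * vs_baseline:.1f} vs. baseline")
```

Under these made-up numbers, a do-nothing add-on clears p < 0.05 against the control more than 99% of the time, purely because it carries the baseline's effect along, while against the baseline it does so only about 5% of the time (the false-positive rate).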

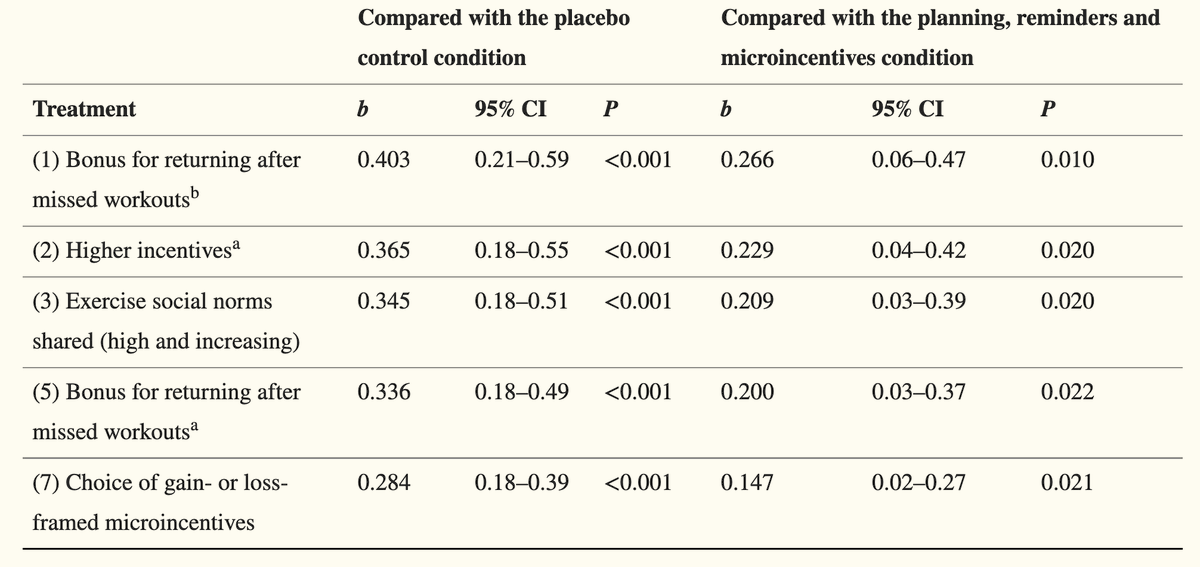

(2) Bigger incentives - simply paying more points each time people went to the gym.

(3) Information about what's normal - telling participants "that a majority of Americans exercise frequently and that the rate is increasing"

(4) Choice of gain/loss frame - participants learned they could choose to earn points each day they visited the gym OR to start with all the points and lose points each day they did not visit the gym. They were told that their earnings would be the same in both programs.
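Why would the two frames pay the same? A quick arithmetic check, assuming (hypothetically) a 28-day program and the same fixed number of points per day in both frames; the paper's exact point values aren't given here, so 10 points/day is an arbitrary stand-in.

```python
# Hypothetical parameters, NOT from the paper: 28 program days (4 weeks)
# and an arbitrary 10 points per day.
TOTAL_DAYS = 28
POINTS_PER_DAY = 10


def gain_frame_points(visit_days: int) -> int:
    """Gain frame: start at 0 and earn points on each day with a gym visit."""
    return visit_days * POINTS_PER_DAY


def loss_frame_points(visit_days: int) -> int:
    """Loss frame: start with the maximum and lose points on each day without a visit."""
    starting_points = TOTAL_DAYS * POINTS_PER_DAY
    missed_days = TOTAL_DAYS - visit_days
    return starting_points - missed_days * POINTS_PER_DAY


# The two frames pay out identically for every possible number of visit days.
for visits in range(TOTAL_DAYS + 1):
    assert gain_frame_points(visits) == loss_frame_points(visits)

print("Payout for 12 visit days:", gain_frame_points(12), "points in either frame")
```

Since `start - missed * p` equals `visits * p`, only the framing differs, which is what makes it interesting that offering a choice between them seemed to matter.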

It may be no surprise that bigger incentives increased gym attendance, but I find the other 3 findings quite interesting: bonuses after messing up, information about what's normal, and a choice between a gain frame and a loss frame all seemed to work!

It's worth noting, however, that the effects of even these best interventions were modest (fewer than 0.27 additional days of exercise per week, on average).

Furthermore, since we selected these from a wide set of interventions based on their apparent effectiveness, we should anticipate regression to the mean. That is, we should predict that the effect size of these best interventions, modest as it is, is likely inflated.
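Here's a rough simulation of that "winner's curse", again with invented numbers: 52 interventions whose true effects are drawn from a normal distribution (sd 0.05 visits/week), each measured with independent noise (sd 0.07), after which we pick the top 4 by measured effect. It's a sketch under those assumptions, not a model of the actual study.

```python
import random
import statistics


def winners_curse(n_arms=52, n_top=4, true_sd=0.05, noise_sd=0.07, n_reps=2000, seed=0):
    """Average amount by which the measured effect of the top-n_top arms
    exceeds their true effect, across n_reps simulated studies.
    All default parameters are hypothetical."""
    rng = random.Random(seed)  # seeded for reproducibility
    inflation = []
    for _ in range(n_reps):
        # True effect of each intervention, in extra visits/week.
        true_effects = [rng.gauss(0.0, true_sd) for _ in range(n_arms)]
        # What the study measures: true effect plus sampling noise.
        observed = [t + rng.gauss(0.0, noise_sd) for t in true_effects]
        # Select the "best" interventions based on their measured effects.
        top = sorted(range(n_arms), key=lambda i: observed[i], reverse=True)[:n_top]
        measured_mean = statistics.fmean(observed[i] for i in top)
        true_mean = statistics.fmean(true_effects[i] for i in top)
        inflation.append(measured_mean - true_mean)
    return statistics.fmean(inflation)


print(f"Average inflation of the top 4 (noisy):     {winners_curse():.3f} visits/week")
print(f"Average inflation of the top 4 (no noise):  {winners_curse(noise_sd=0.0):.3f} visits/week")
```

With no measurement noise the inflation is exactly zero; the noisier the measurements are relative to the real differences between interventions, the more the selected "winners" overstate their true effects.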

Takeaway #3: this leads us to our next big learning from this study, which is that behavior change is incredibly hard! About 30 scientists who study behavior change worked to develop more than 50 interventions, and at most, 4 of them meaningfully beat the baseline! Wow!

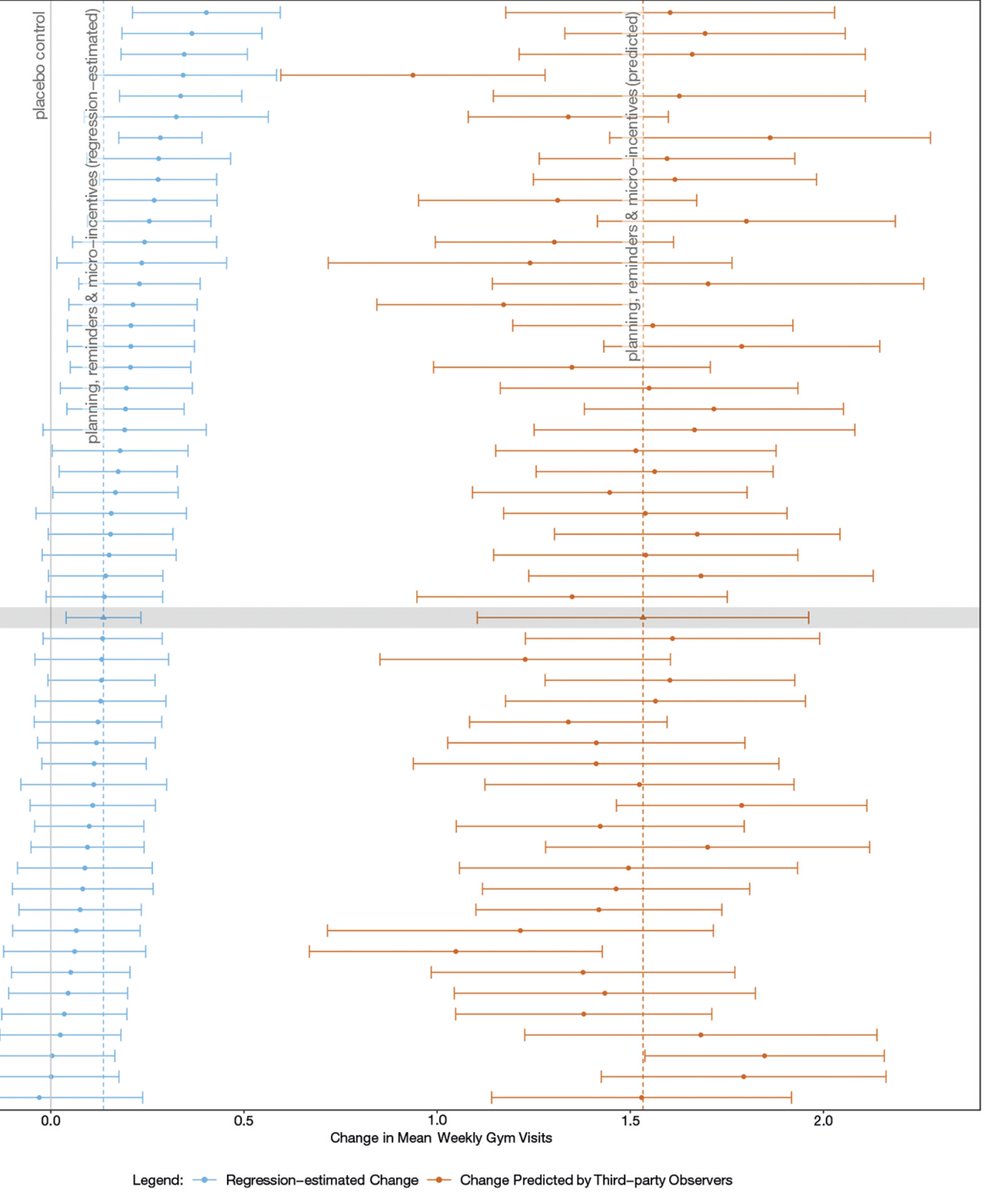

Another fascinating thing about this paper is that the researchers tested whether different groups could predict which interventions would increase gym attendance (in 3 separate studies).

For one study, they had 301 ordinary people make predictions about which would work. Another had 156 professors from the top 50 schools of public health making predictions. A final study used 90 practitioners recruited from companies specializing in applied behavioral science.

Takeaway #4: It's really hard to predict what will work with regard to behavior change!

Overall, I learned a lot from reading this paper, and I have great respect for the research team that conducted these studies through a herculean effort - including @katy_milkman, @angeladuckw, and many others. I hope they conduct many more megastudies like this one!

If you'd like to read the original paper, it's "Megastudies improve the impact of applied behavioural science":

bit.ly

And if you found this thread interesting, I'd appreciate a follow. Or you can subscribe to my weekly newsletter:

mailchi.mp