This explanation is courtesy of @TivadarDanka, who allowed me to republish it.

3 years ago, he started writing a book about the mathematics of Machine Learning.

It's the best book you'll ever read:

tivadardanka.com

Nobody explains complex ideas like he does.

2/15

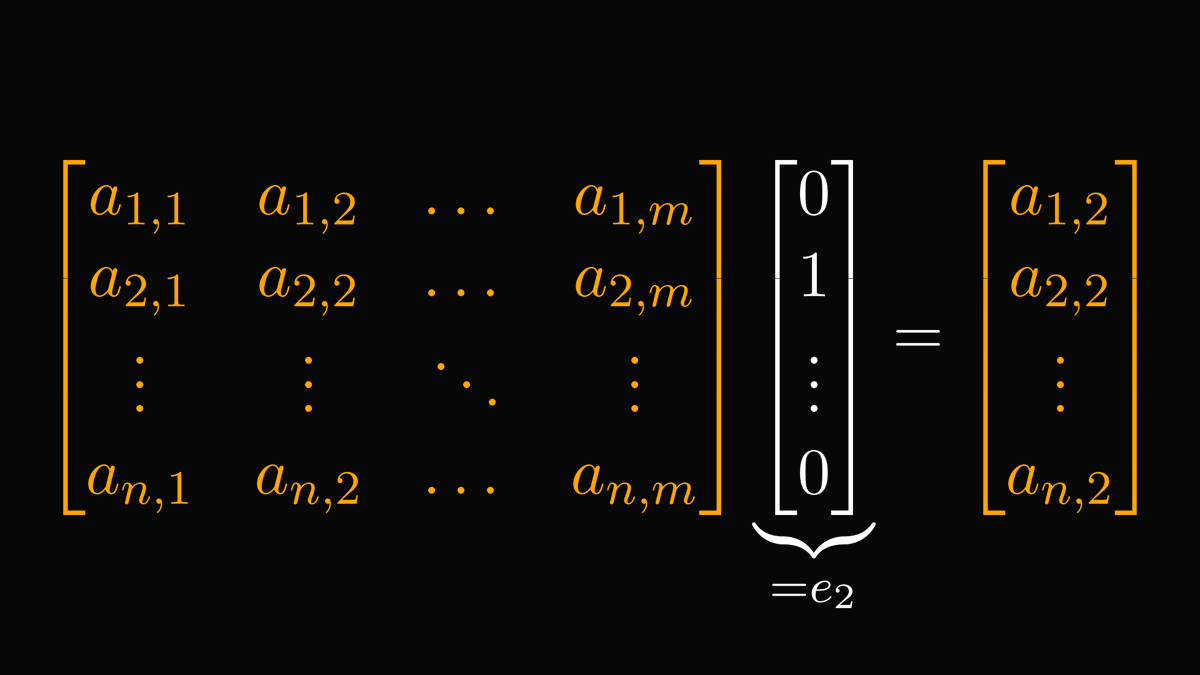

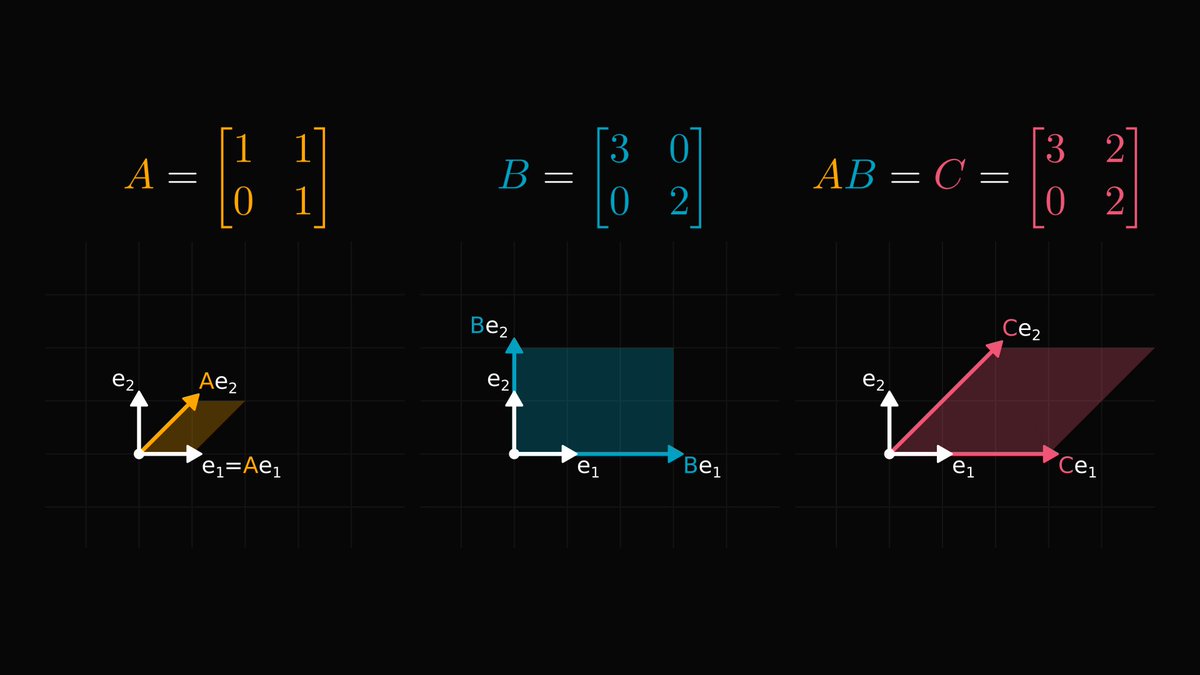

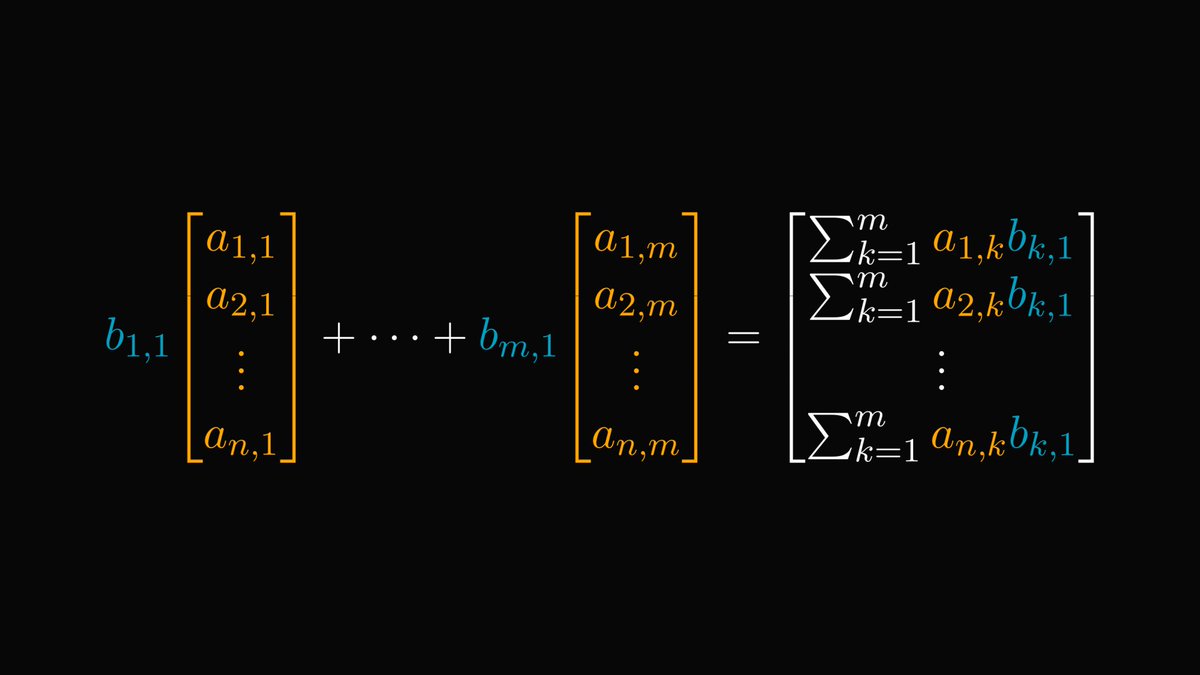

The power of Linear Algebra is abstracting away the complexity of manipulating data structures like vectors and matrices.

Instead of explicitly dealing with arrays and convoluted sums, we can use simple expressions like AB.

That's a huge deal!

14/15
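
To make that concrete, here is a minimal sketch in plain Python. It is not taken from the book: `matmul_explicit` and the tiny `Matrix` wrapper are hypothetical names made up for this illustration. The first function spells out the arrays and sums by hand; the wrapper hides them so the product reads as `A @ B`, which is Python's spelling of AB.

```python
def matmul_explicit(a, b):
    """Multiply two matrices stored as nested lists, spelling out every sum."""
    n, k, m = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions must match")
    c = [[0] * m for _ in range(n)]
    for i in range(n):          # rows of a
        for j in range(m):      # columns of b
            for p in range(k):  # the "convoluted sum" over the shared index
                c[i][j] += a[i][p] * b[p][j]
    return c


class Matrix:
    """Hypothetical minimal wrapper so the product can be written as A @ B."""

    def __init__(self, rows):
        self.rows = [list(r) for r in rows]

    def __matmul__(self, other):
        # Same computation as above, hidden behind the @ operator.
        return Matrix(matmul_explicit(self.rows, other.rows))

    def __repr__(self):
        return f"Matrix({self.rows})"


A = Matrix([[1, 2], [3, 4]])
B = Matrix([[5, 6], [7, 8]])
C = A @ B
print(C)  # Matrix([[19, 22], [43, 50]])
```

In real code you would likely reach for NumPy arrays, which support the same `@` operator, rather than writing a wrapper like this.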

The book this came from is crazy good and 100% focused on the math required in machine learning.

You won't find better explanations anywhere else:

tivadardanka.com

Trust me on this one. This is the book you want to read.

15/15
