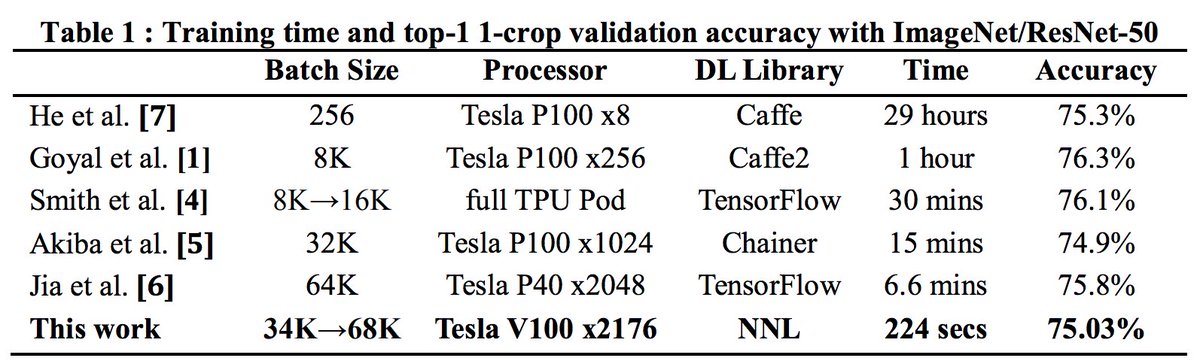

so... if this rate keeps up then around 2020 we'd be training ImageNet to 75% accuracy in 0.5 seconds :)

last fun thing to think about is that we're doing 1.28M images over 90 epochs with a batch size of 68K, so the entire optimization takes only ~1700 updates to converge. How lucky for us that our Universe allows us to trade that much serial compute for parallel compute in training neural nets
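For anyone who wants to check the ~1700 figure, here's a quick back-of-the-envelope sketch. It assumes "1.28M" means exactly 1,280,000 images and "68K" means a batch size of exactly 68,000 (both are rounded in the text above), and the function names are made up for illustration:

```python
import math


def approx_updates(num_images: int, epochs: int, batch_size: int) -> float:
    """Total samples processed divided by samples per update."""
    return num_images * epochs / batch_size


def updates_with_partial_batches(num_images: int, epochs: int, batch_size: int) -> int:
    """Same count, but each epoch's last partial batch counts as a full step."""
    return math.ceil(num_images / batch_size) * epochs


# Assumed values: rounded figures taken at face value.
NUM_IMAGES = 1_280_000
EPOCHS = 90
BATCH_SIZE = 68_000

smooth = approx_updates(NUM_IMAGES, EPOCHS, BATCH_SIZE)
stepped = updates_with_partial_batches(NUM_IMAGES, EPOCHS, BATCH_SIZE)

print(f"{smooth:.0f} updates (fractional batches)")  # 1694
print(f"{stepped} updates (19 steps per epoch)")     # 1710
```

Either way of counting lands at roughly 1700 serial steps, so the rounding in the inputs doesn't change the picture.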
