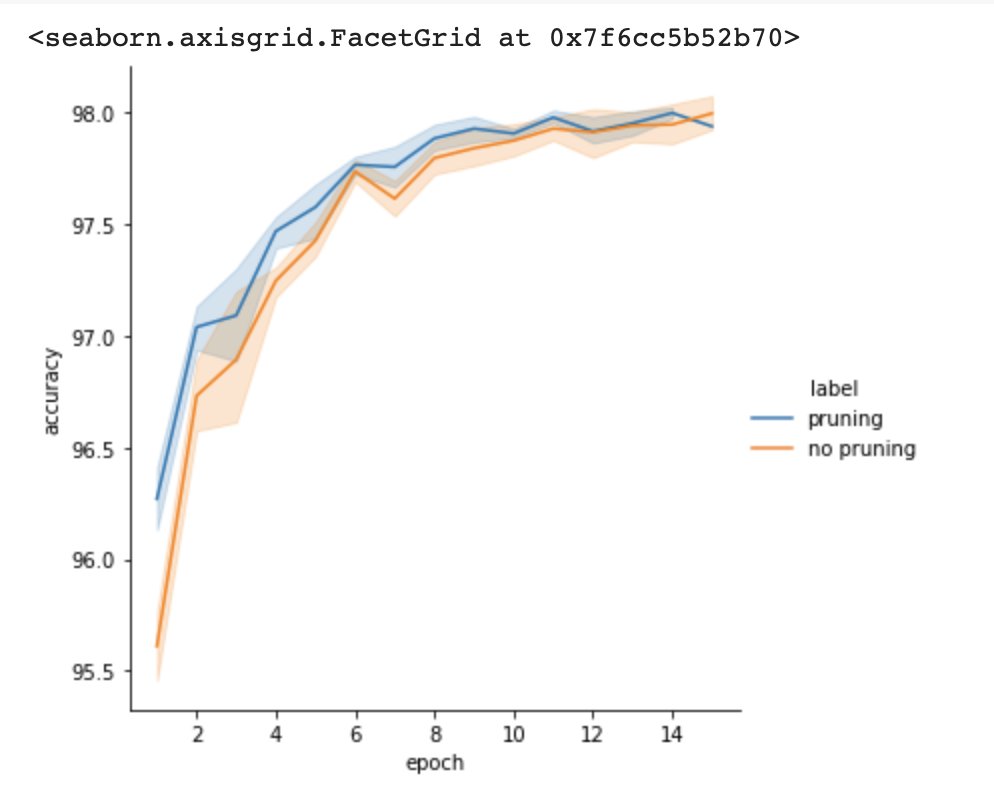

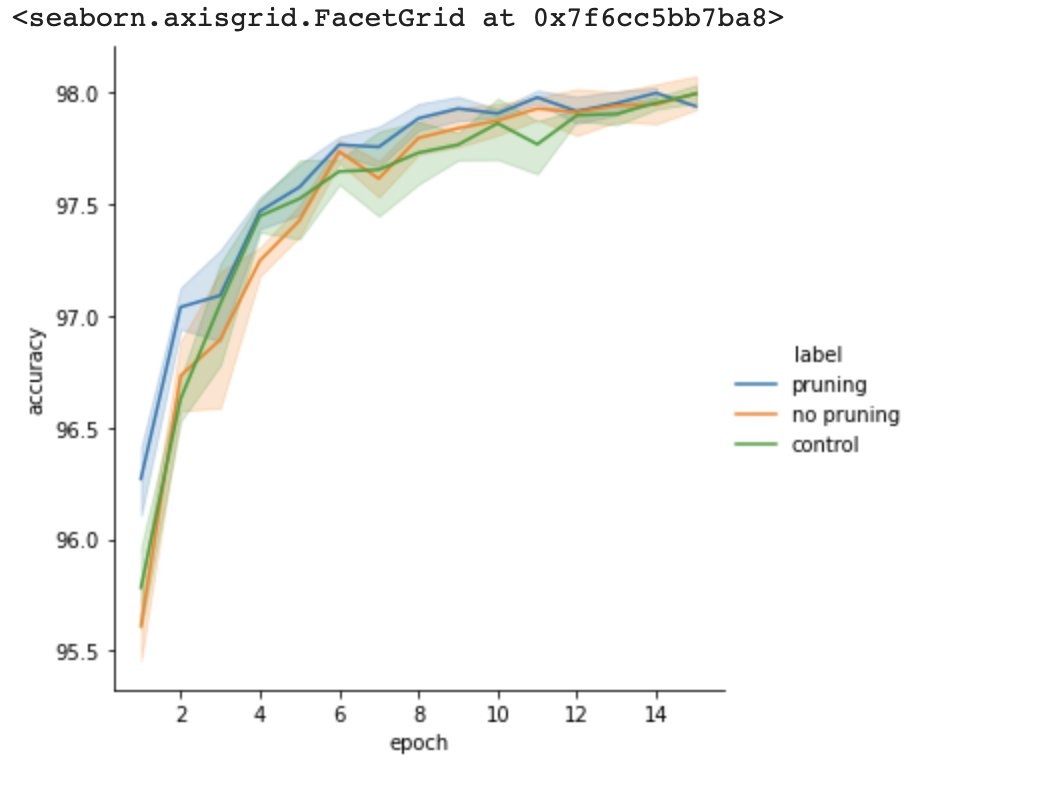

The ML research ecosystem can be amazing. A few hours ago, I wondered: do pruned neural networks converge to high accuracies faster than the original networks? I'm sure I could find an answer in one of the many lottery ticket hypothesis papers, but I wanted to explore it myself. (1/5)

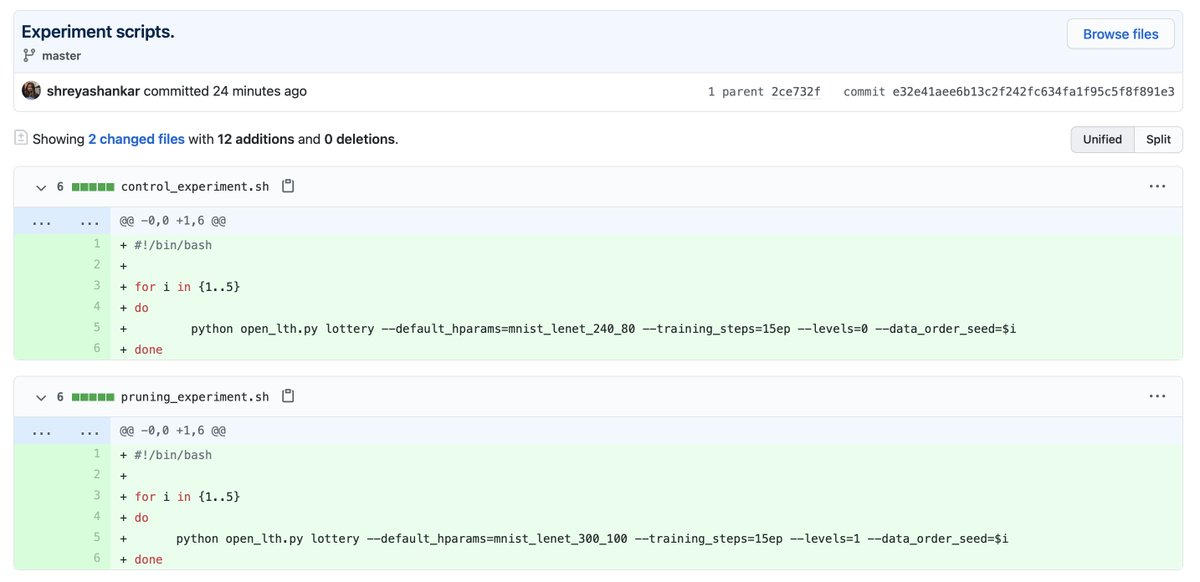

None of this is intended to be "true science" or "research" by any means, but I'm amazed that I was able to think of a question and find the resources to explore it. The codebase was so easy to use -- I literally only added a few lines of code: github.com (4/5)

There's a lot of ML research crap out there, but it's important to highlight when ML research shines. This story is truly a testament to the infrastructure that some ML researchers painstakingly develop. I'm lucky to be in such a field, even if I'm not a researcher. (5/5)
