If you liked this thread and want to read more about self-driving cars and machine learning, give me a follow! 👍

I have many more threads like this planned 😃

I have many more threads like this planned 😃

@pythops @comma_ai They are also still missing some important features, for example traffic light control.

The truth is probably somewhere between modular and end-to-end. I recommend this talk by Raquel Urtasun, which covers the topic quite well:

youtube.com

@mrsahoo You can then easily generate a lot of training data by taking the trajectory the car actually drove and correcting it for your own (ego) motion.

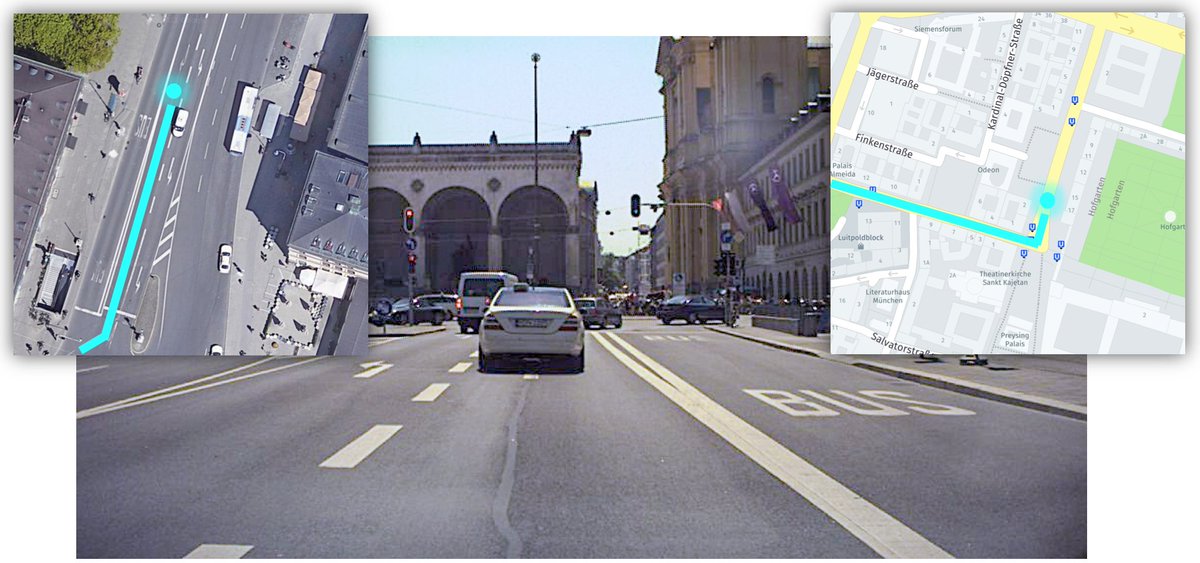
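
A minimal sketch of that idea, assuming you have logged 2D world-frame poses `(x, y, yaw)` at a fixed rate. For each time step, the poses the car drove next are transformed into the car's own frame at that step, and that ego-frame trajectory becomes the label. The pose format and the function names `to_ego_frame` and `trajectory_labels` are hypothetical; a real pipeline would use full 3D poses from the localization system.

```python
import math
from typing import List, Tuple

Pose = Tuple[float, float, float]  # (x, y, yaw) in a fixed world frame, yaw in radians


def to_ego_frame(ref: Pose, point: Tuple[float, float]) -> Tuple[float, float]:
    """Express a world-frame point in the vehicle frame defined by `ref` (x forward, y left)."""
    rx, ry, ryaw = ref
    dx, dy = point[0] - rx, point[1] - ry
    c, s = math.cos(ryaw), math.sin(ryaw)
    # Rotate the offset by -yaw to remove the car's own heading.
    return (c * dx + s * dy, -s * dx + c * dy)


def trajectory_labels(poses: List[Pose], horizon: int) -> List[List[Tuple[float, float]]]:
    """For each step t, return the next `horizon` driven positions expressed in the ego frame at t."""
    labels = []
    for t in range(len(poses) - horizon):
        ref = poses[t]
        future = poses[t + 1 : t + 1 + horizon]
        labels.append([to_ego_frame(ref, (p[0], p[1])) for p in future])
    return labels
```

Because the labels come from where the car actually went, no manual annotation is needed. The ego-motion correction only changes the coordinate frame, so the same log yields one training sample per time step.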

@donkosee What you need to do is use additional landmarks that you can detect in the world and have stored in the map to refine your position and work through GPS outages.
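
Assuming the detected landmarks have already been matched to their map entries and everything is treated as 2D, the refinement can be written as a least-squares rigid alignment: find the yaw and translation that best map the landmarks measured in the vehicle frame onto their stored map positions. The function `refine_pose` is a hypothetical sketch. In practice this measurement is usually fused with odometry and GPS in a filter rather than used on its own.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def refine_pose(detected: List[Point], mapped: List[Point]) -> Tuple[float, float, float]:
    """Least-squares 2D vehicle pose (x, y, yaw) from matched landmark pairs.

    detected[i]: landmark i as measured in the vehicle frame
    mapped[i]:   the same landmark's stored position in the map (world) frame
    Needs at least two matched, non-coincident landmarks.
    """
    if len(detected) != len(mapped) or len(detected) < 2:
        raise ValueError("need at least two matched landmark pairs")
    n = len(detected)
    # Centroids of both point sets.
    pcx = sum(p[0] for p in detected) / n
    pcy = sum(p[1] for p in detected) / n
    qcx = sum(q[0] for q in mapped) / n
    qcy = sum(q[1] for q in mapped) / n
    # Accumulate dot and cross products of the centered pairs.
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(detected, mapped):
        px, py, qx, qy = px - pcx, py - pcy, qx - qcx, qy - qcy
        dot += px * qx + py * qy
        cross += px * qy - py * qx
    yaw = math.atan2(cross, dot)  # rotation that best aligns detected onto mapped
    c, s = math.cos(yaw), math.sin(yaw)
    # The translation moves the rotated detected centroid onto the map centroid.
    # The vehicle sits at the origin of its own frame, so this is its map position.
    x = qcx - (c * pcx - s * pcy)
    y = qcy - (s * pcx + c * pcy)
    return x, y, yaw
```

Since the landmarks are stored in the map, this works even when GPS is unavailable. The vehicle only needs to see enough of them.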

There are different approaches to which landmarks to use.

@donkosee Waymo, for example, relies on dense lidar point clouds, but there are also solutions with sparser maps that contain landmarks such as traffic signs.
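
With a sparse map of, say, traffic signs, each detected sign first has to be matched to a stored one before it can refine the position. Below is a minimal greedy nearest-neighbour sketch. It assumes 2D positions, a rough prior pose from GPS or odometry, and a hypothetical dictionary map; `associate` and the 2 m gate are illustrative, not taken from any specific system.

```python
import math
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]


def associate(
    detections: List[Point],
    prior_pose: Tuple[float, float, float],
    landmark_map: Dict[str, Point],
    gate: float = 2.0,
) -> List[Tuple[int, Optional[str]]]:
    """Match each vehicle-frame detection to the nearest map landmark within `gate` meters."""
    x0, y0, yaw = prior_pose
    c, s = math.cos(yaw), math.sin(yaw)
    matches = []
    for i, (dx, dy) in enumerate(detections):
        # Project the detection into the map frame using the prior pose.
        wx, wy = x0 + c * dx - s * dy, y0 + s * dx + c * dy
        best_id, best_dist = None, gate
        for lm_id, (mx, my) in landmark_map.items():
            d = math.hypot(mx - wx, my - wy)
            if d <= best_dist:
                best_id, best_dist = lm_id, d
        matches.append((i, best_id))  # None: no map landmark inside the gate
    return matches
```

This greedy version can assign two detections to the same landmark. Real systems typically also compare the sign type and use a more robust assignment step.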

@dspkiran

- Reprocess all sensors: run a new software version on the raw data to generate the outputs again

- Reprocess all the function logic with a new software version or a bug fix

- Look at the behavior, compute KPIs, run automated tests, etc.

@dspkiran This can be done either in our data center or on a local developer machine if you want in-depth debugging capabilities.
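
As a toy illustration of reprocessing function logic, the sketch below replays the same recorded frames through an old and a new version of a decision function and compares simple KPIs. Everything here is hypothetical, including `Frame`, the time-to-collision braking rules `brake_v1` and `brake_v2`, and `reprocess`. A real pipeline replays raw sensor recordings through the full software stack.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Frame:
    """One hypothetical logged frame: distance to lead vehicle (m) and closing speed (m/s)."""
    distance: float
    closing_speed: float


Decision = Callable[[Frame], bool]  # True means "brake"


def brake_v1(f: Frame) -> bool:
    # Hypothetical old logic: brake when time-to-collision drops below 2.0 s.
    return f.closing_speed > 0 and f.distance / f.closing_speed < 2.0


def brake_v2(f: Frame) -> bool:
    # Hypothetical new logic (e.g. after a bug fix): threshold raised to 2.5 s.
    return f.closing_speed > 0 and f.distance / f.closing_speed < 2.5


def reprocess(recording: List[Frame], old: Decision, new: Decision) -> Dict[str, int]:
    """Replay the same recorded frames through both versions and compute simple KPIs."""
    old_out = [old(f) for f in recording]
    new_out = [new(f) for f in recording]
    return {
        "frames": len(recording),
        "brakes_old": sum(old_out),
        "brakes_new": sum(new_out),
        "changed_decisions": sum(a != b for a, b in zip(old_out, new_out)),
    }
```

Because the input is identical for both versions, any KPI difference is caused by the software change alone. That is what makes reprocessing recorded data useful for regression testing.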