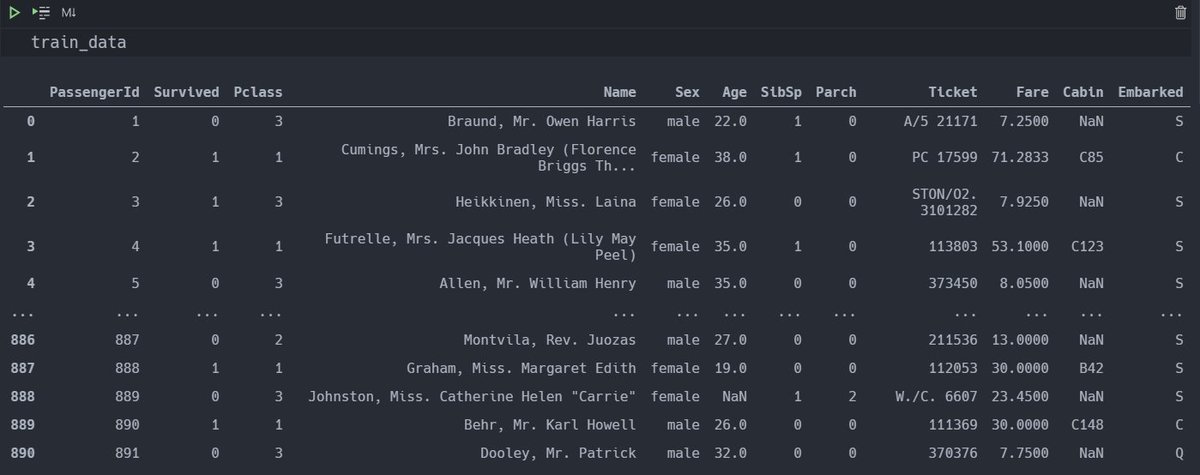

Kaggle challenges are a great way to practice your machine learning skills.

In this thread, we'll go through each step of solving the beginner-friendly Titanic disaster challenge.


These are the key steps that we will go over:

- Cleaning the data

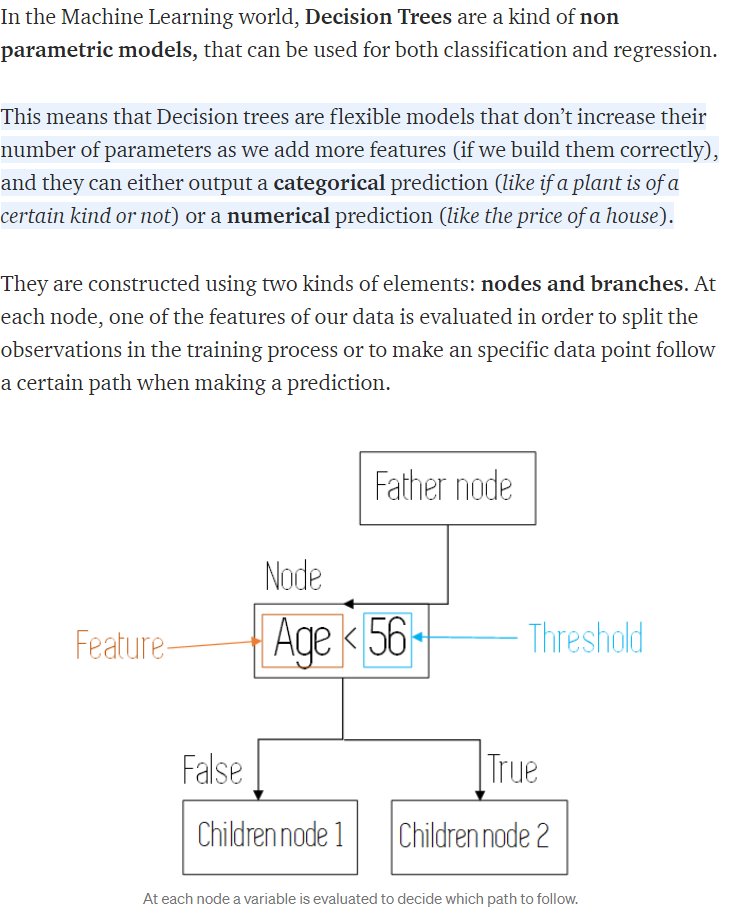

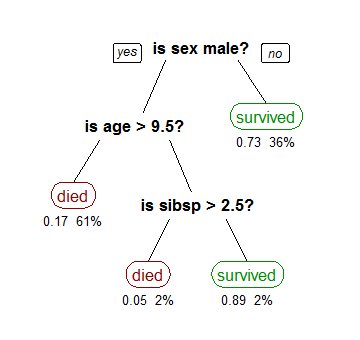

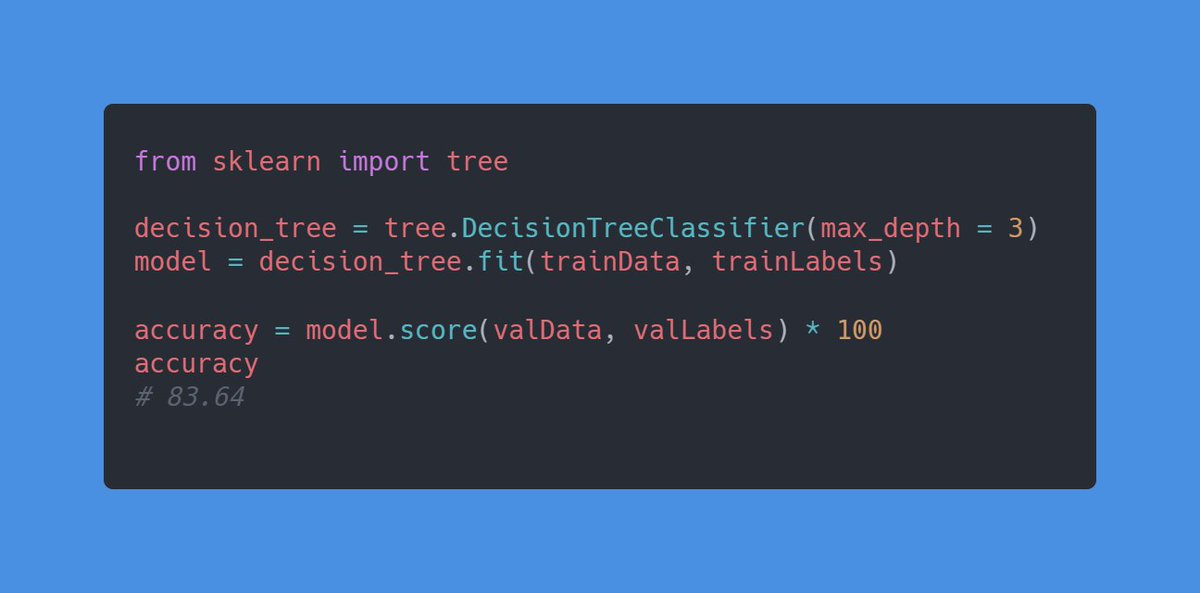

- Training a machine learning model using decision trees in Sklearn

- Making a submission to Kaggle using the predictions from our training model


We will be using:

- Jupyter notebooks/Google Colab

- Python with scikit-learn and pandas

Keep in mind that this is just one way of solving this challenge. There are many others, but I find this one intuitive and easy to understand.


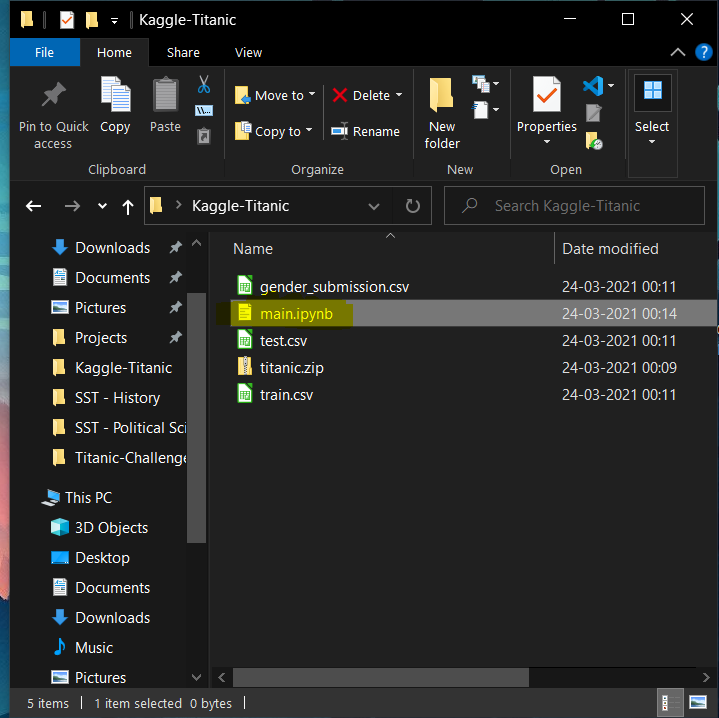

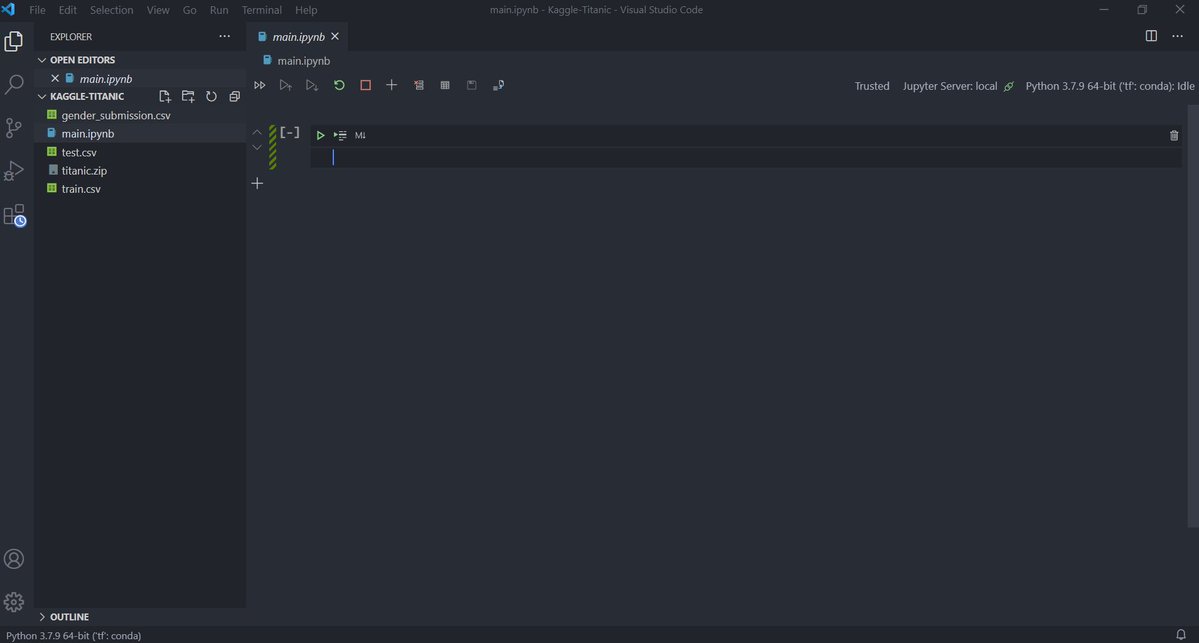

Now open this folder in VS Code.

It has a good built-in editor for Jupyter notebooks, which is what we'll be using.

For this, you need to install the Python extension from the VS Code marketplace.

🔗 marketplace.visualstudio.com


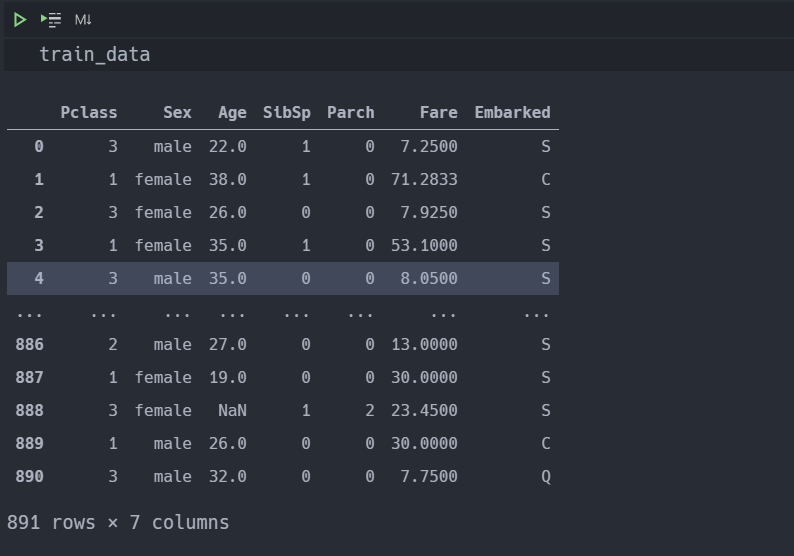

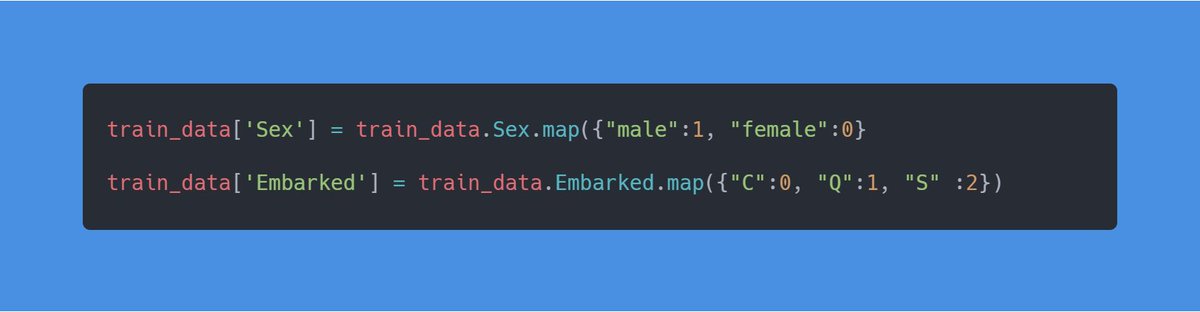

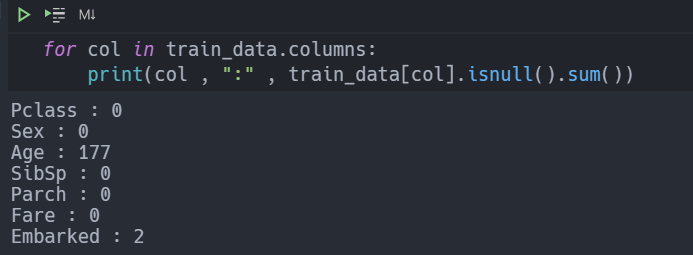
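The loading code in the screenshots isn't available as text, so here is a minimal sketch of reading the data with pandas, assuming Kaggle's `train.csv` and `test.csv` sit in the folder you opened. The `FEATURES` list and the helper names `load_titanic` and `select_features` are my own assumptions; the columns kept in the thread may differ.

```python
import os

import pandas as pd

# Assumption: the feature columns kept for the model. Free-text columns such as
# Name, Ticket and Cabin are dropped here; the thread's selection may differ.
FEATURES = ["Pclass", "Sex", "Age", "SibSp", "Parch", "Fare", "Embarked"]


def load_titanic(folder: str) -> tuple[pd.DataFrame, pd.DataFrame]:
    """Read Kaggle's train.csv and test.csv from the given folder."""
    train = pd.read_csv(os.path.join(folder, "train.csv"))
    test = pd.read_csv(os.path.join(folder, "test.csv"))
    return train, test


def select_features(df: pd.DataFrame) -> pd.DataFrame:
    """Return a copy that keeps only the feature columns."""
    return df[FEATURES].copy()
```

For example, `train, test = load_titanic(".")` followed by `X = select_features(train)` and `y = train["Survived"]` gives the inputs and labels for training.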

We need to do 2 more things before we can feed this data to our machine learning model.

1. Map all the categorical data to numerical values

2. Fill the NaN values


If you look at the columns labelled Sex and Embarked, you'll notice they contain categories rather than numbers: Sex is either male or female, and Embarked is the port letter (C, Q or S).

Our machine learning model will only accept numerical values as input.
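One way to do the mapping is with pandas' `Series.map` and a dictionary per column. This is a sketch, not necessarily the thread's exact code; the integer codes chosen below are an assumption, and any consistent mapping works for a decision tree.

```python
import pandas as pd

# Assumption: arbitrary but consistent integer codes for each category.
SEX_MAP = {"male": 0, "female": 1}
EMBARKED_MAP = {"S": 0, "C": 1, "Q": 2}


def encode_categoricals(df: pd.DataFrame) -> pd.DataFrame:
    """Replace the text categories in Sex and Embarked with integers."""
    out = df.copy()
    out["Sex"] = out["Sex"].map(SEX_MAP)
    # Values missing from the dict (including NaN) become NaN; they are
    # handled by the NaN-filling step.
    out["Embarked"] = out["Embarked"].map(EMBARKED_MAP)
    return out


# Usage on a tiny hand-made frame (not real passenger data):
sample = pd.DataFrame({"Sex": ["male", "female"], "Embarked": ["S", None]})
print(encode_categoricals(sample))
```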

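For the second step, filling the NaN values, a common approach is to compute fill values on the training set and reuse them for the test set. The sketch below assumes the median for numeric columns and the most frequent code for Embarked; the thread may use a different strategy, and `fill_missing` is a hypothetical helper name.

```python
import pandas as pd


def fill_missing(train: pd.DataFrame, test: pd.DataFrame) -> tuple[pd.DataFrame, pd.DataFrame]:
    """Fill NaNs in both frames using statistics from the training set only."""
    # Assumption: the median is a reasonable default for numeric columns like Age and Fare.
    fill_values = train.median(numeric_only=True)
    # Embarked is a category code, so use its most common value instead of the median.
    if "Embarked" in train.columns:
        fill_values["Embarked"] = train["Embarked"].mode().iloc[0]
    # Labels in fill_values that are not columns of a frame (e.g. Survived in test) are ignored.
    return train.fillna(fill_values), test.fillna(fill_values)
```

Computing the statistics on the training data only keeps information from the test set out of the preprocessing.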

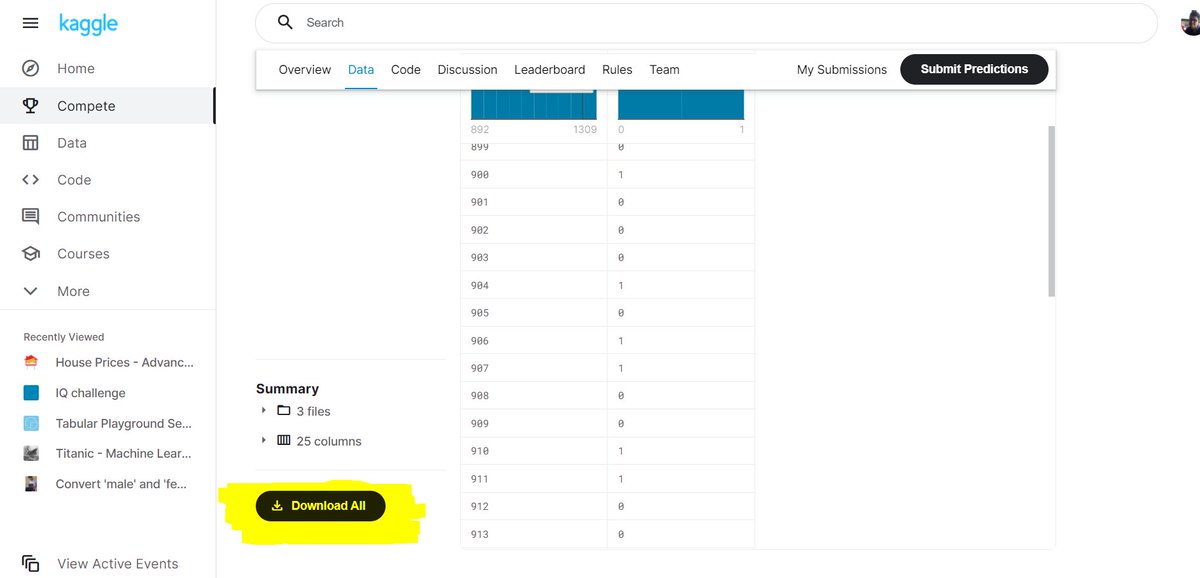

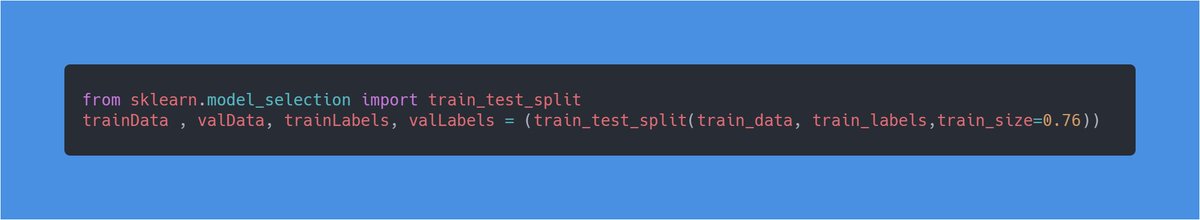

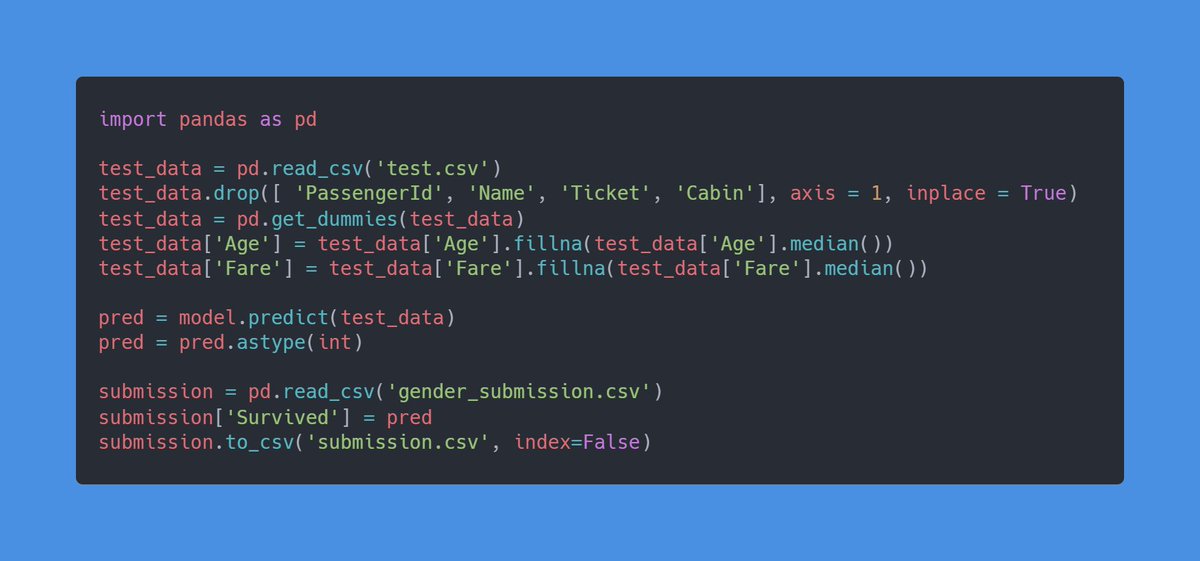
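Training a decision tree in scikit-learn comes down to `DecisionTreeClassifier(...).fit(X, y)`. The sketch below also holds out 20% of the rows to estimate accuracy before refitting on everything; the `max_depth=5` limit, the split size and the helper name `train_tree` are assumptions, not values taken from the thread.

```python
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier


def train_tree(X: pd.DataFrame, y: pd.Series, max_depth: int = 5, seed: int = 0):
    """Estimate accuracy on a held-out split, then fit on all labelled rows."""
    X_train, X_val, y_train, y_val = train_test_split(
        X, y, test_size=0.2, random_state=seed, stratify=y
    )
    # Assumption: limiting depth to reduce overfitting; tune as needed.
    val_model = DecisionTreeClassifier(max_depth=max_depth, random_state=seed)
    val_model.fit(X_train, y_train)
    val_accuracy = val_model.score(X_val, y_val)

    # Refit on every labelled row before predicting on Kaggle's test set.
    model = DecisionTreeClassifier(max_depth=max_depth, random_state=seed).fit(X, y)
    return model, val_accuracy
```

The validation accuracy is only a rough guide; the score Kaggle reports on the hidden test labels can differ.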

Now we can use this model to make predictions on the test data and submit them to Kaggle.
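Kaggle's Titanic competition expects a CSV with two columns, `PassengerId` and `Survived`. A minimal sketch of building that file from a fitted model follows; the helper name `make_submission` and the default file name are assumptions.

```python
import pandas as pd


def make_submission(model, X_test: pd.DataFrame, passenger_ids: pd.Series,
                    path: str = "submission.csv") -> pd.DataFrame:
    """Predict survival for the test passengers and write the submission CSV."""
    predictions = model.predict(X_test)
    submission = pd.DataFrame({
        "PassengerId": passenger_ids.to_numpy(),
        "Survived": predictions.astype(int),
    })
    # index=False stops pandas from writing an extra unnamed index column.
    submission.to_csv(path, index=False)
    return submission
```

The test features must go through the same column selection, category mapping and NaN filling as the training data, and `passenger_ids` would be `test["PassengerId"]` from the original test frame.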

kaggle.com


That's the end of the thread. If you liked it, follow me for more content like this.

In the next thread, we will train a neural network to classify images of dogs and cats.

