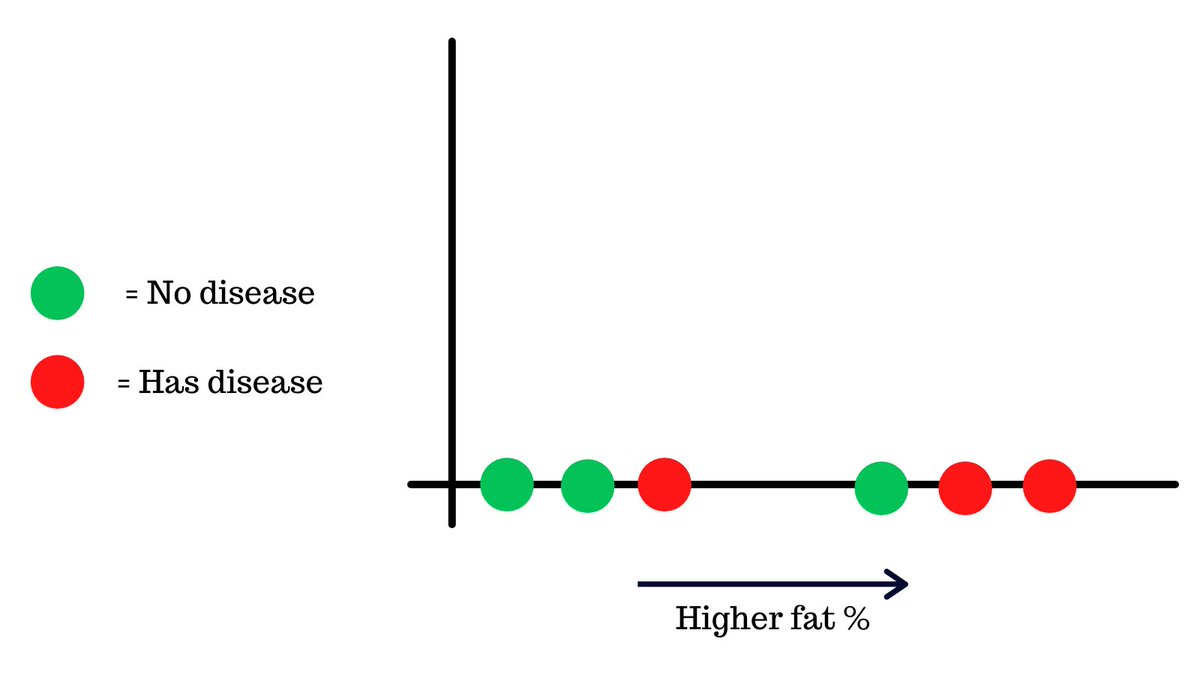

You see, this is a special disease that affects only a very small group of people and the victims show no symptoms.

It is caused by several factors such as the amount of fat in someone's body, muscle density, etc.

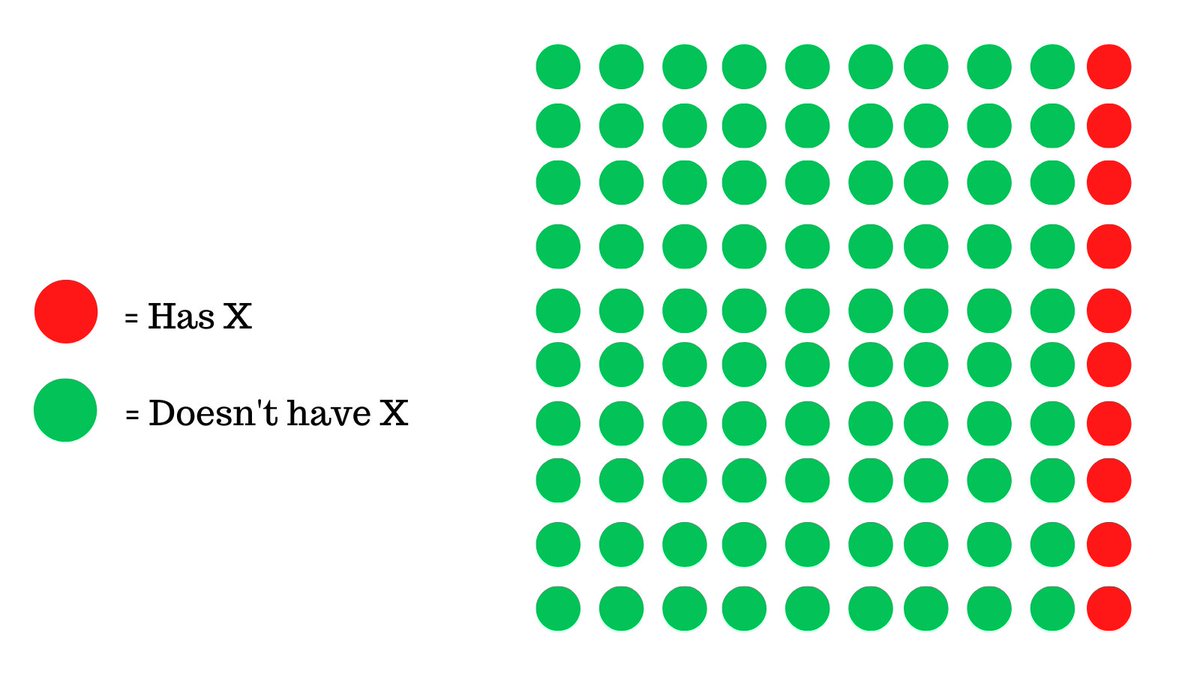

For every person that we caught with X, we falsely deemed 7 people who did not have X as being positive.
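
To put a number on that, here's a tiny sketch of the arithmetic. The only inputs are the 1 and the 7 from the sentence above; the variable names are just for illustration.

```python
# Counts taken from the sentence above: 1 real case caught per 7 false alarms.
true_positives = 1
false_positives = 7

# Precision: of everyone the model flags as positive, the fraction that really has X.
precision = true_positives / (true_positives + false_positives)
print(f"precision = {precision:.3f}")  # precision = 0.125
```

So only about 1 in 8 positive predictions is actually correct.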

This is problematic for one main reason:

The model is essentially useless: it predicts almost everyone as positive.

Why is this happening and how can we fix this?🤔

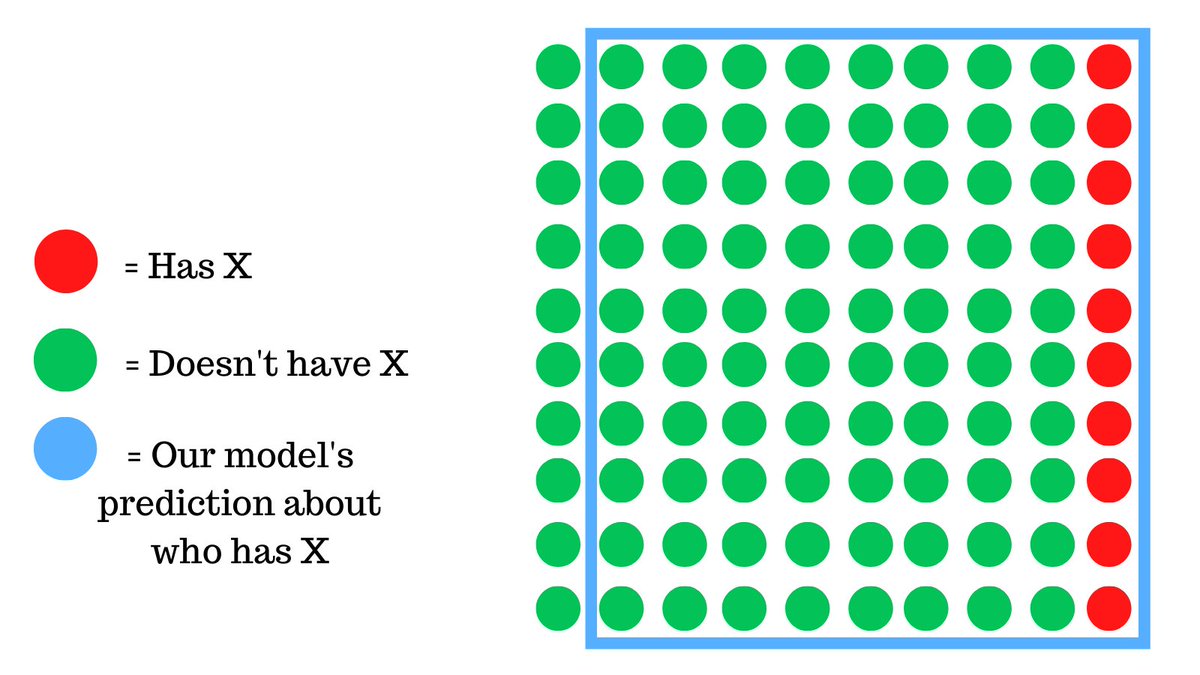

What we need to realize is that this is certainly not the best model we could've trained. Binary classifiers like this one are hard to evaluate.

Let me explain.

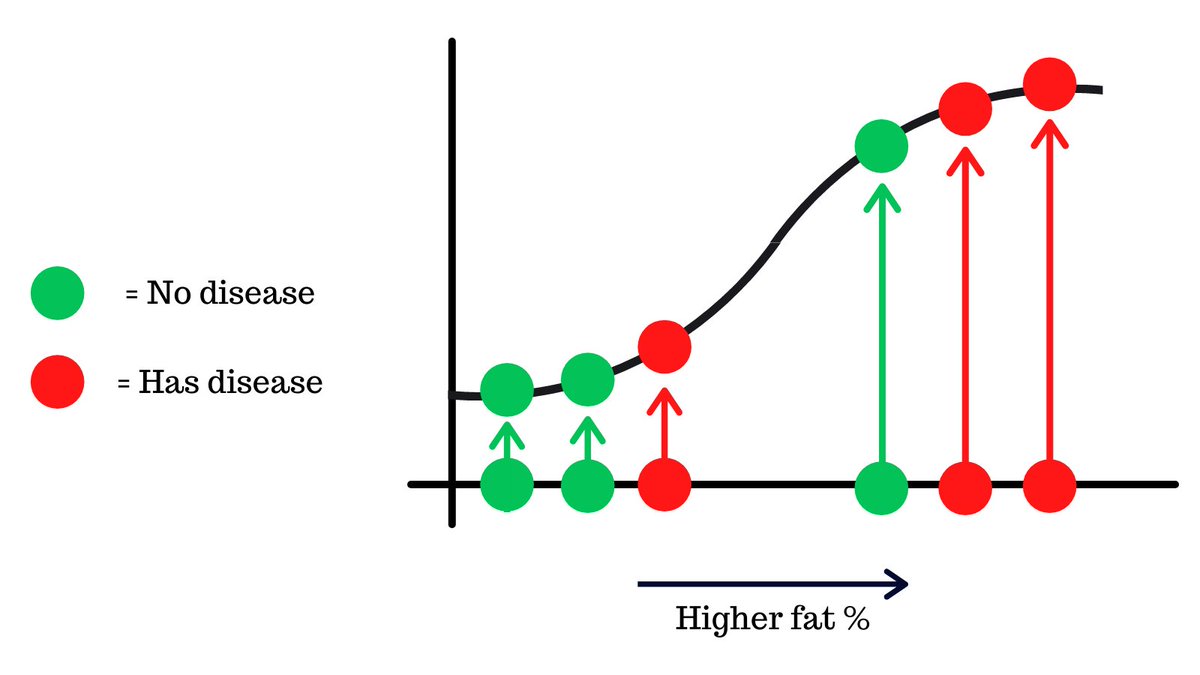

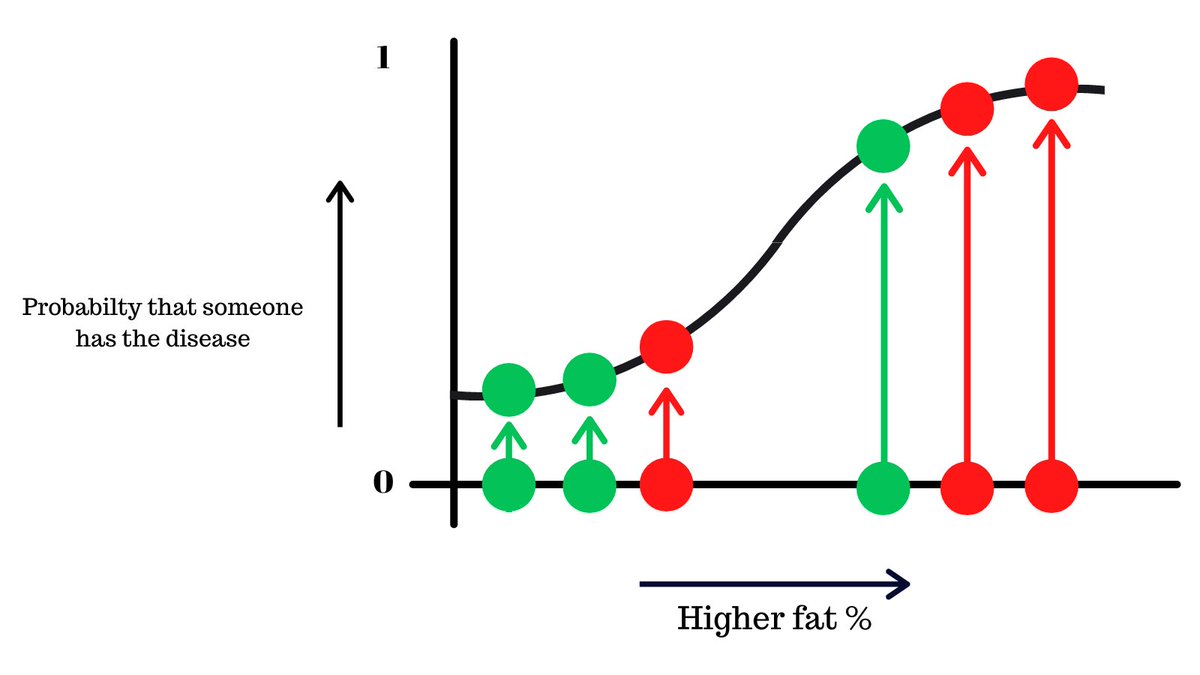

This is a binary classification problem. Basically, there are two possibilities: either the person has the disease or they don't.

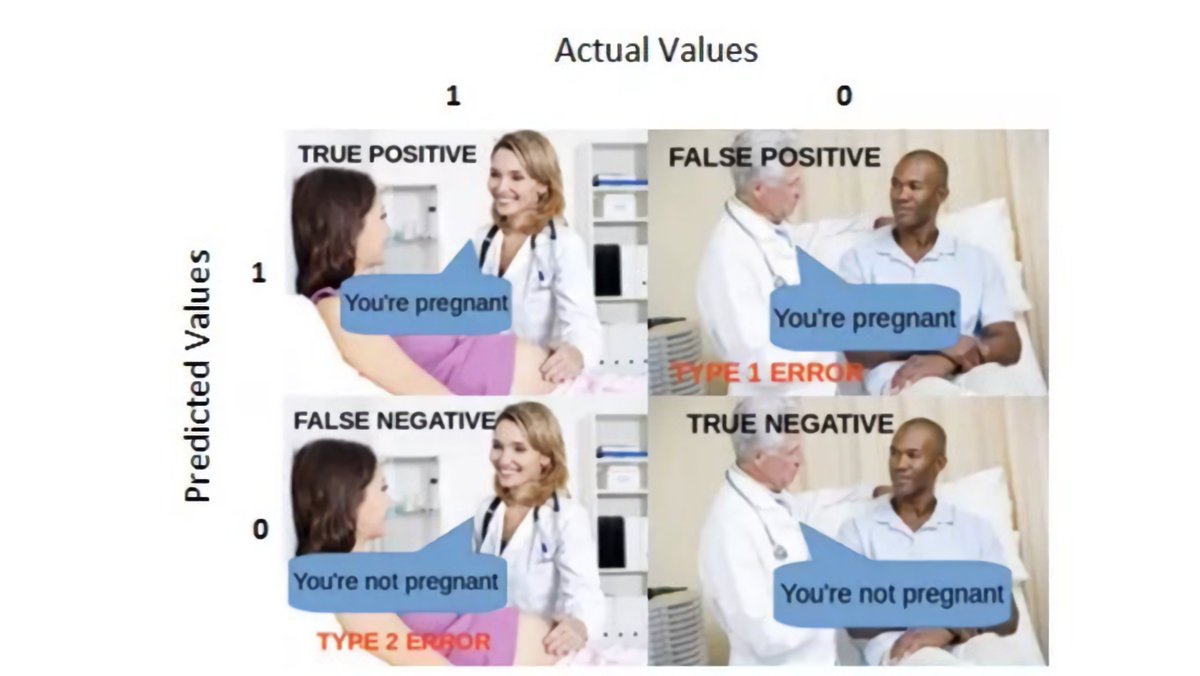

Then there are the 2 possible predictions by the model, which mirror the two possibilities above. This gives 4 cases in total.

- Case 1: Our model predicts someone has X when they actually do (TP - True Positive)

- Case 2: Our model predicts someone has X when they don't (FP - False Positive)

- Case 3: Our model predicts someone doesn't have X when they actually do (FN - False Negative)

- Case 4: Our model predicts someone doesn't have X when they don't (TN - True Negative)
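
If it helps to see the 4 cases as code, here's a minimal sketch that counts them from two lists of labels. The function name `confusion_counts` and the sample labels are made up for illustration; they're not from the real dataset.

```python
def confusion_counts(actual, predicted):
    """Count the four outcomes for binary labels (1 = has X, 0 = does not)."""
    counts = {"TP": 0, "FP": 0, "FN": 0, "TN": 0}
    for a, p in zip(actual, predicted):
        if p == 1 and a == 1:
            counts["TP"] += 1   # predicted X, really has X
        elif p == 1 and a == 0:
            counts["FP"] += 1   # predicted X, does not have X
        elif p == 0 and a == 1:
            counts["FN"] += 1   # predicted no X, really has X
        else:
            counts["TN"] += 1   # predicted no X, does not have X
    return counts

# Made-up labels for illustration only.
actual    = [1, 1, 0, 0, 1, 0]
predicted = [1, 0, 1, 0, 1, 0]
print(confusion_counts(actual, predicted))
# {'TP': 2, 'FP': 1, 'FN': 1, 'TN': 2}
```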

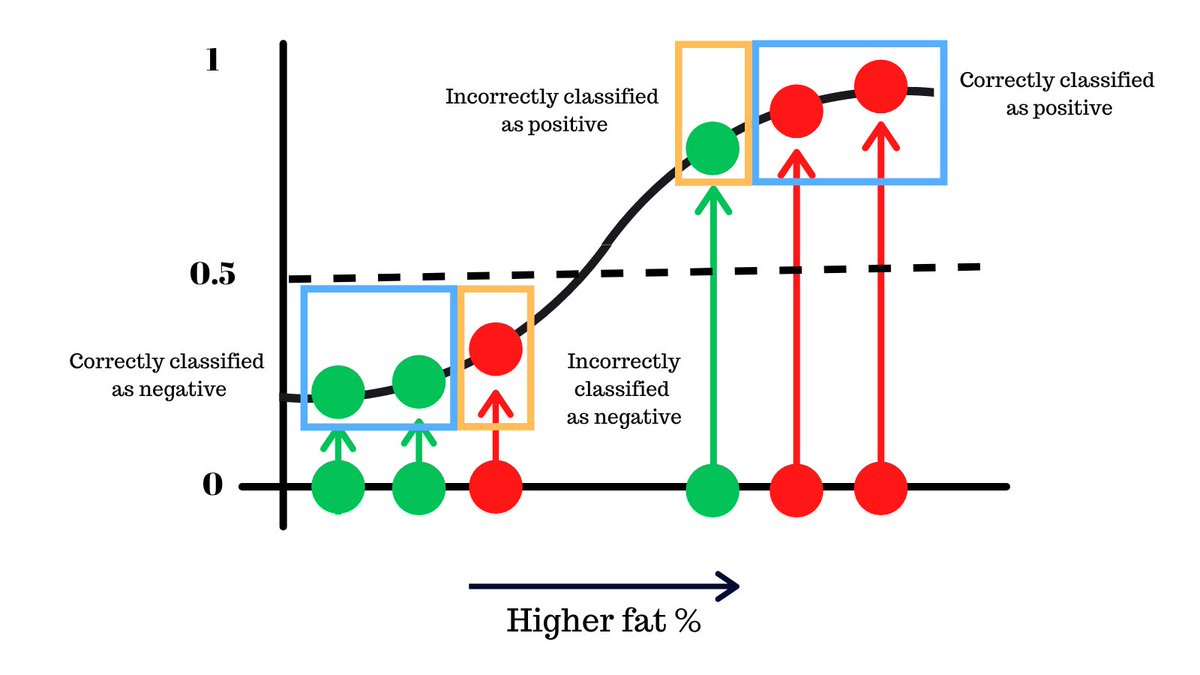

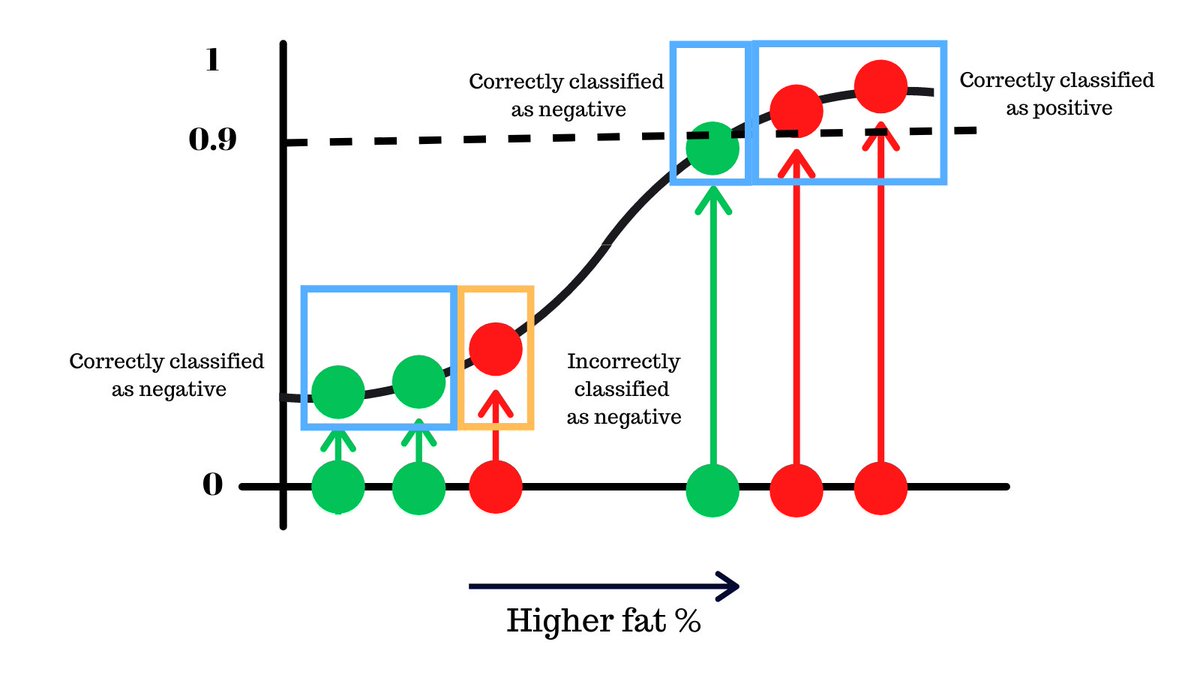

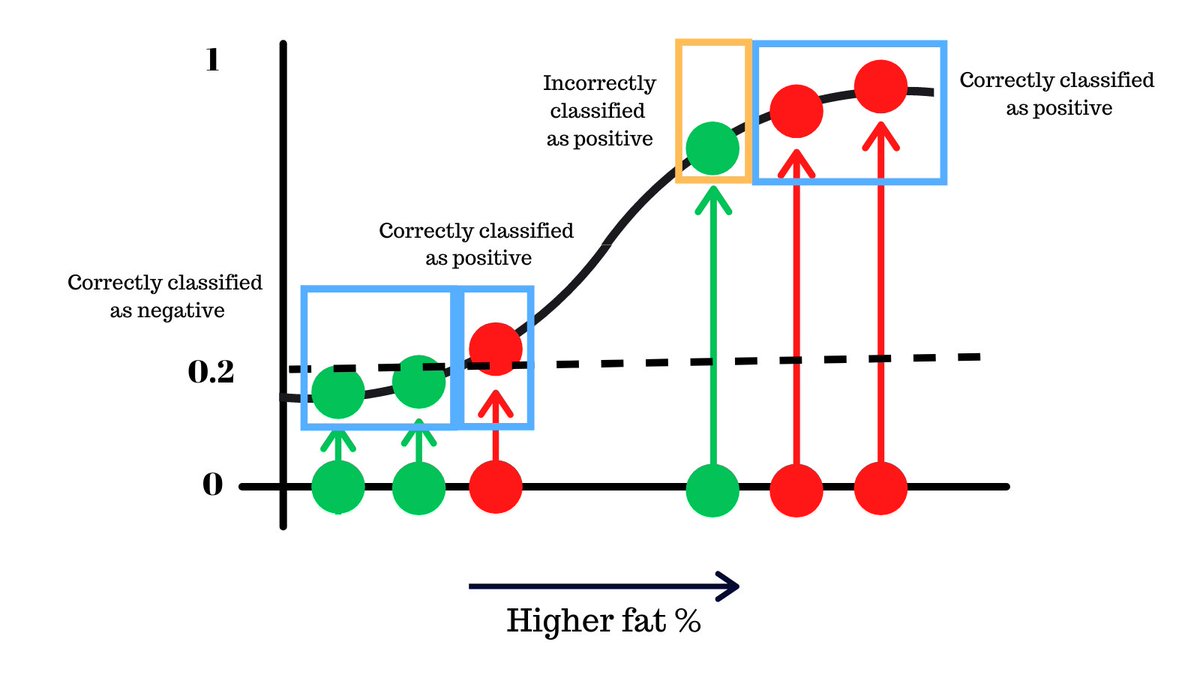

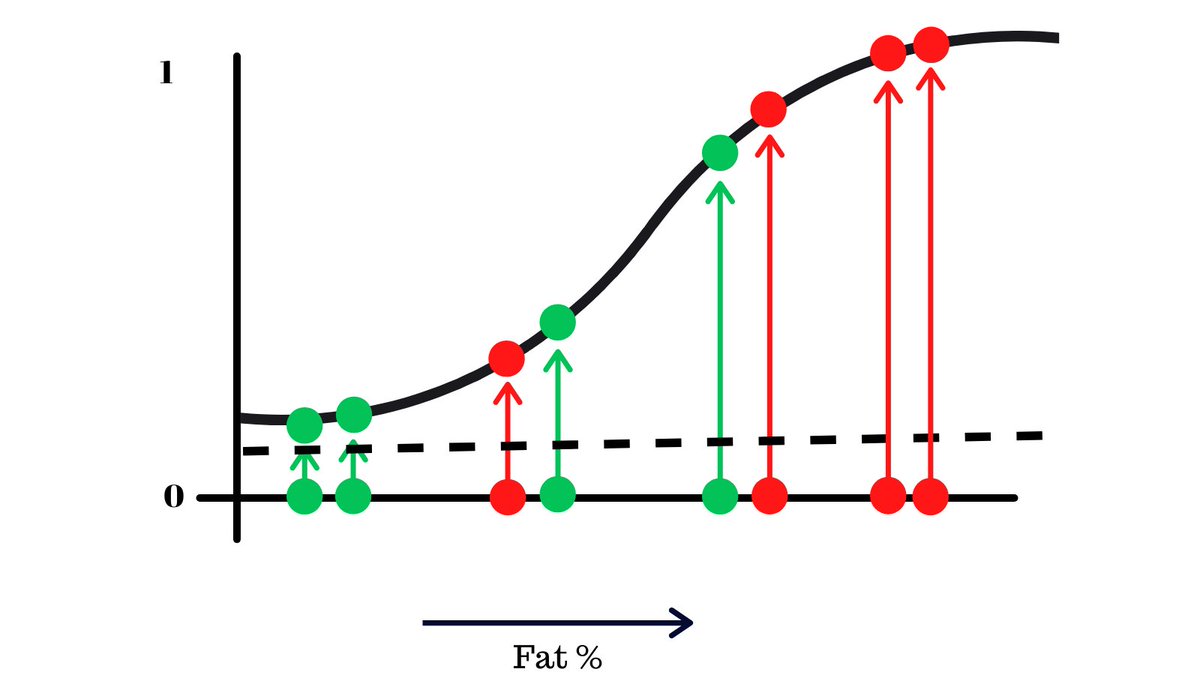

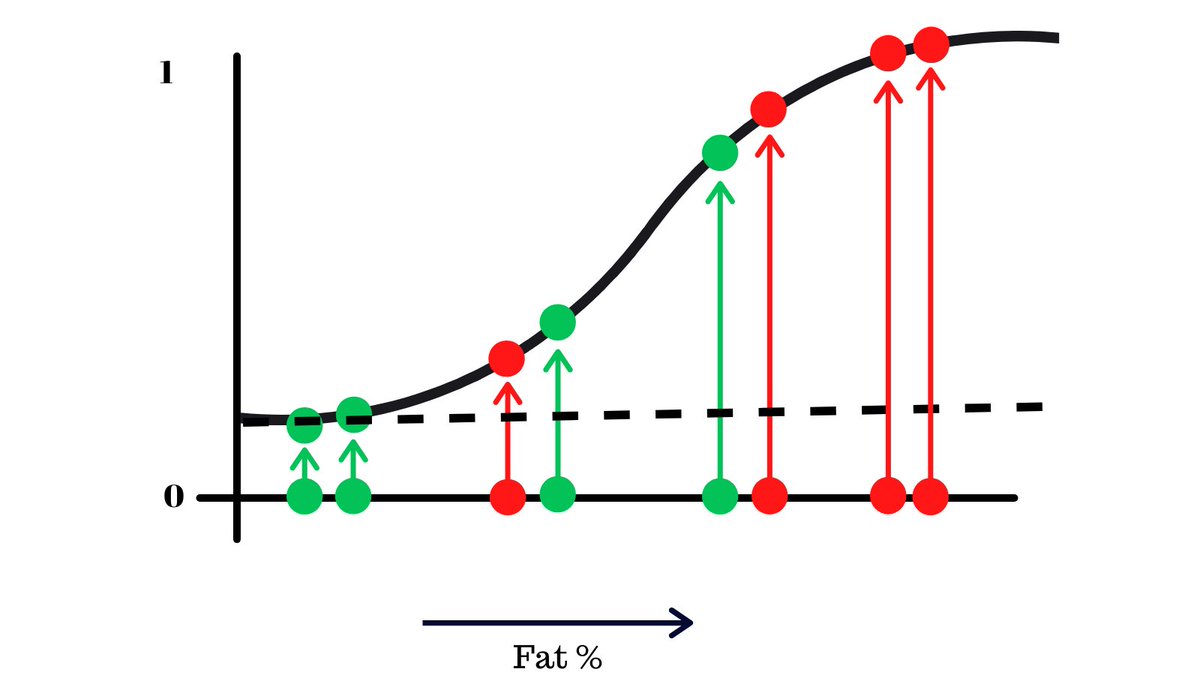

Since this is a binary classification problem (either a yes or a no), what would be the optimal threshold at which we decide whether someone has the disease or not?

This will make more sense as we move on.

There are 2 things to keep in mind here:

- We must catch people that actually have the disease correctly

- We must not falsely classify people without X as positive, otherwise the model just ends up being useless

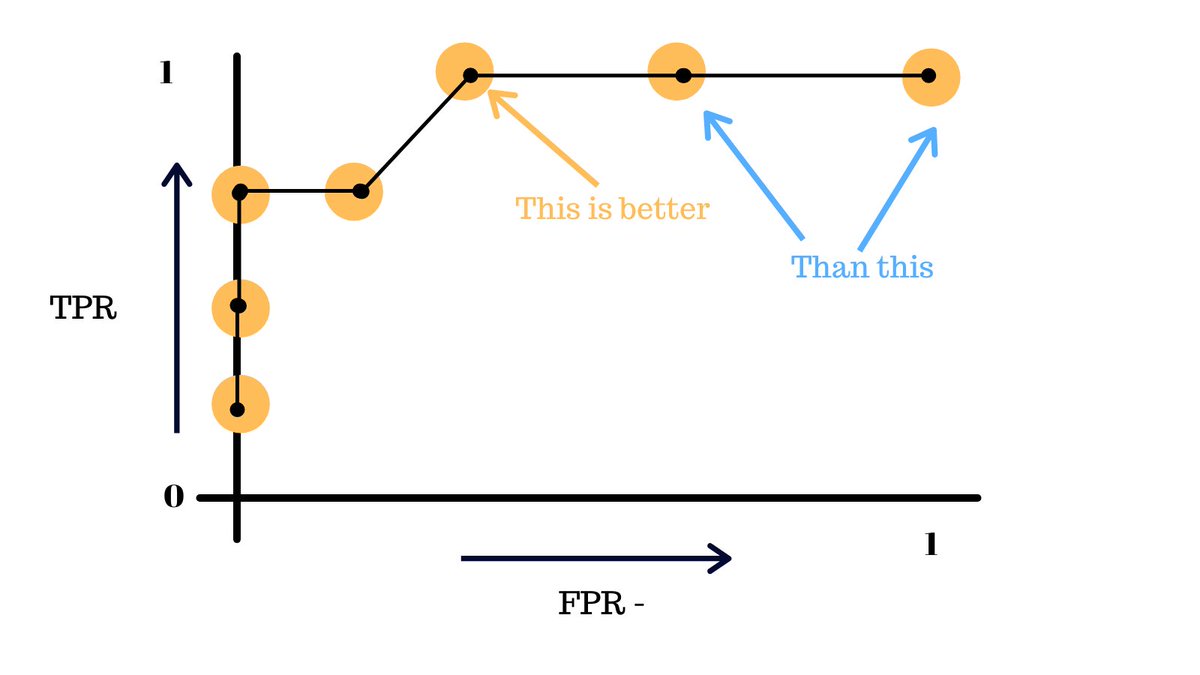

As you can see, just by changing this threshold, we can reduce the mistakes that our classifier model makes.
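
Here's a rough sketch of that idea in code. The probabilities and labels below are hypothetical (I made them up so the effect is easy to see), and `mistakes` is just a helper name for this example.

```python
# Hypothetical predicted probabilities and true labels (1 = has X, 0 = does not).
probs  = [0.10, 0.30, 0.35, 0.45, 0.55, 0.60, 0.80, 0.90]
labels = [0,    0,    0,    1,    0,    1,    1,    1]

def mistakes(threshold):
    """Return (false positives, false negatives) when predicting X for p >= threshold."""
    fp = sum(1 for p, y in zip(probs, labels) if p >= threshold and y == 0)
    fn = sum(1 for p, y in zip(probs, labels) if p < threshold and y == 1)
    return fp, fn

for t in (0.2, 0.4, 0.5, 0.7):
    fp, fn = mistakes(t)
    print(f"threshold={t}: {fp} false positives, {fn} false negatives")
# threshold=0.2: 3 false positives, 0 false negatives
# threshold=0.4: 1 false positives, 0 false negatives
# threshold=0.5: 1 false positives, 1 false negatives
# threshold=0.7: 0 false positives, 2 false negatives
```

A low threshold flags almost everyone, a high one misses real cases, and somewhere in between the total number of mistakes is smallest.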

Now imagine thousands of data points on the logistic regression curve. How would you find the optimal threshold in this case?

With our previous method of trial and error, this would be a long and painstaking process. Fortunately, there is a better solution.

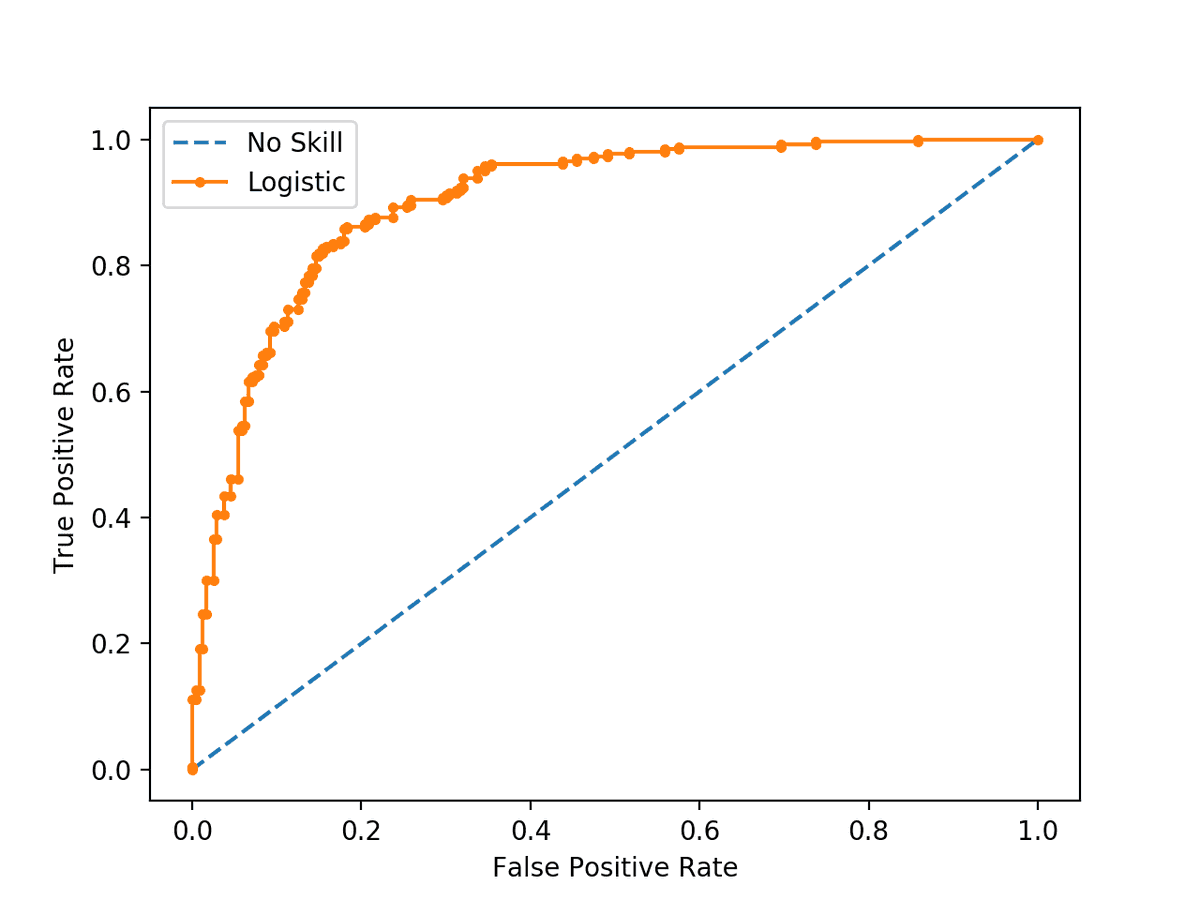

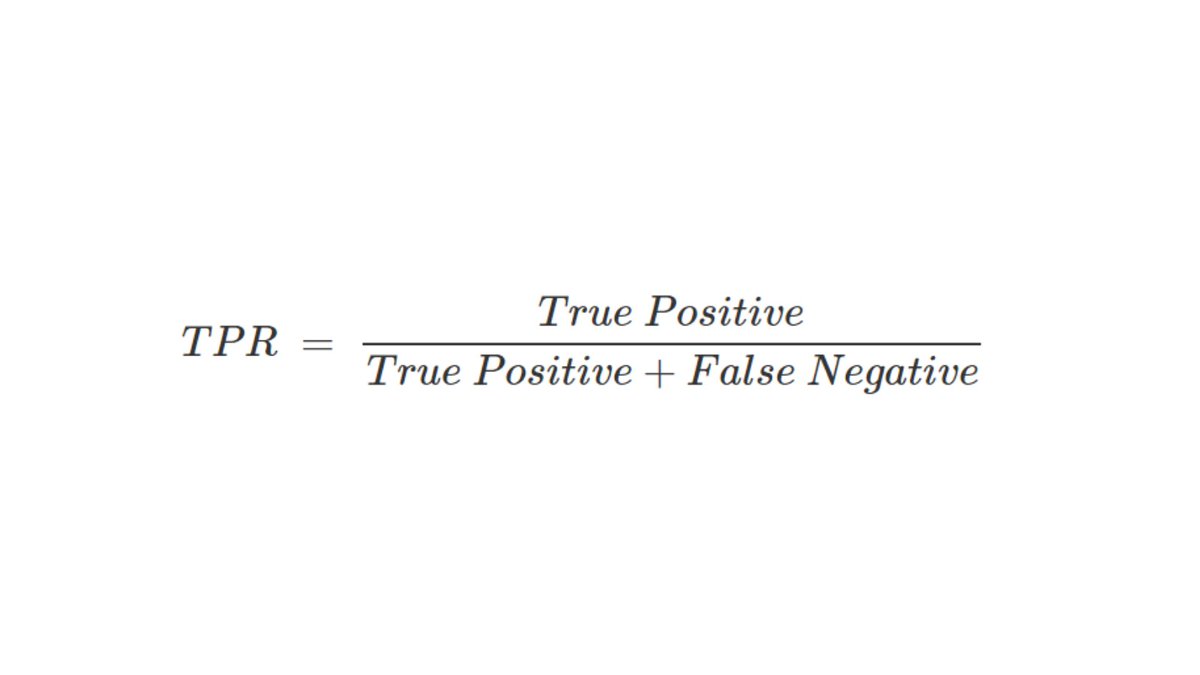

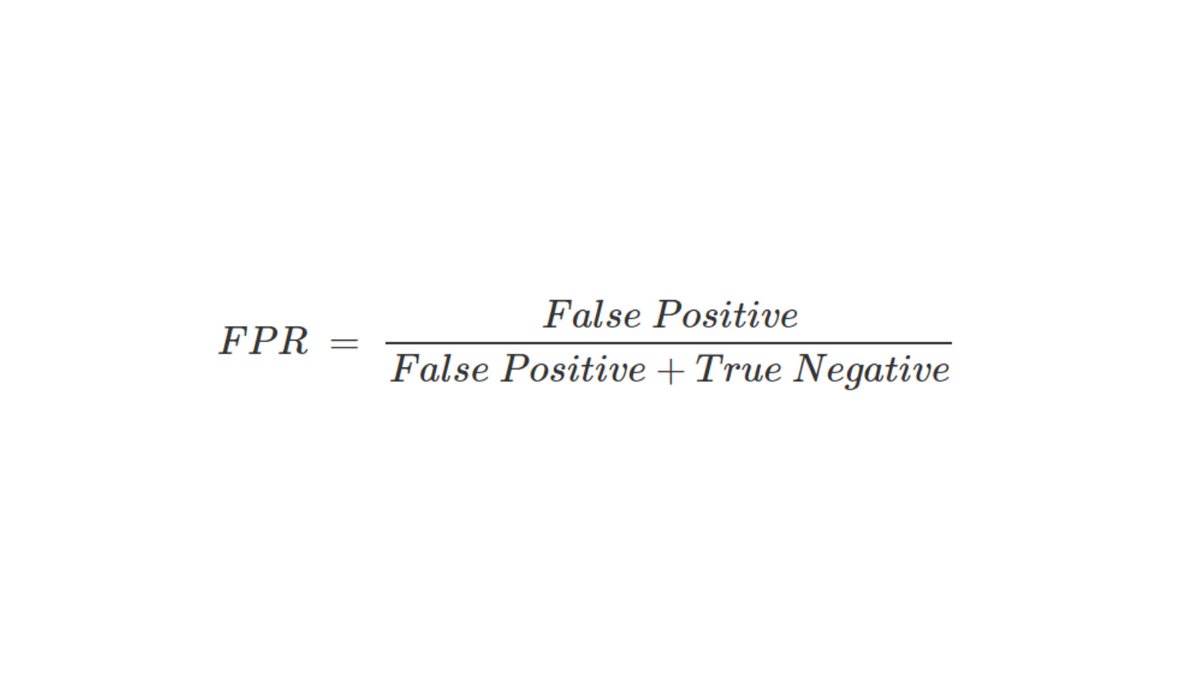

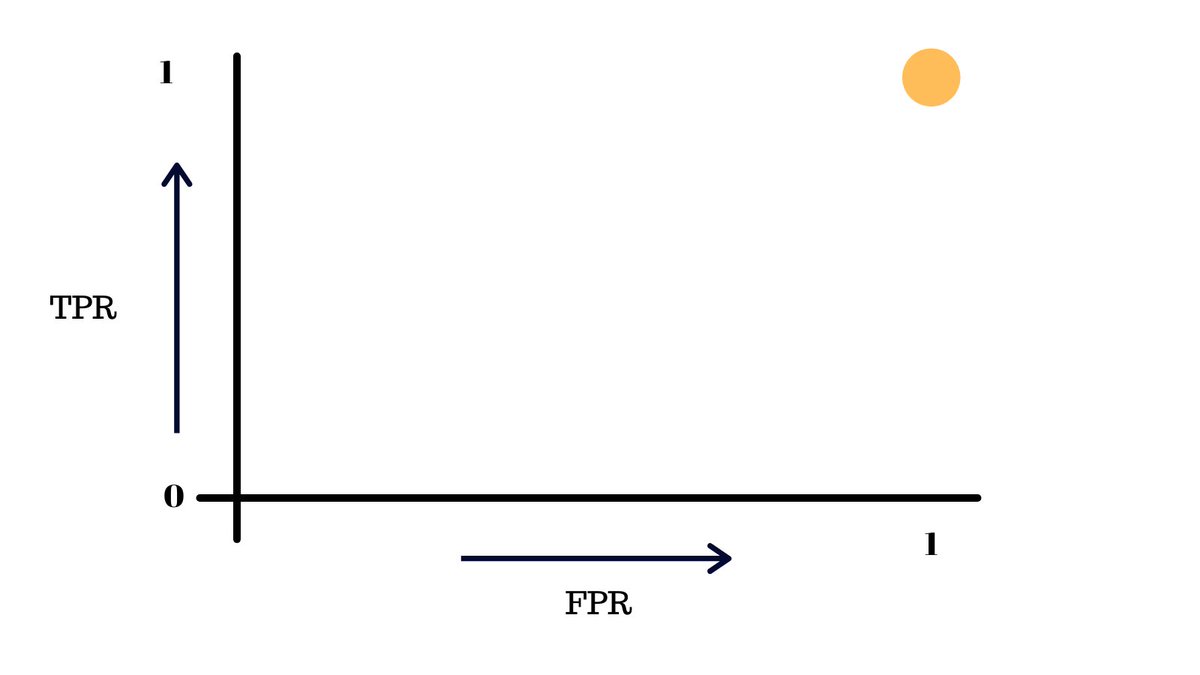

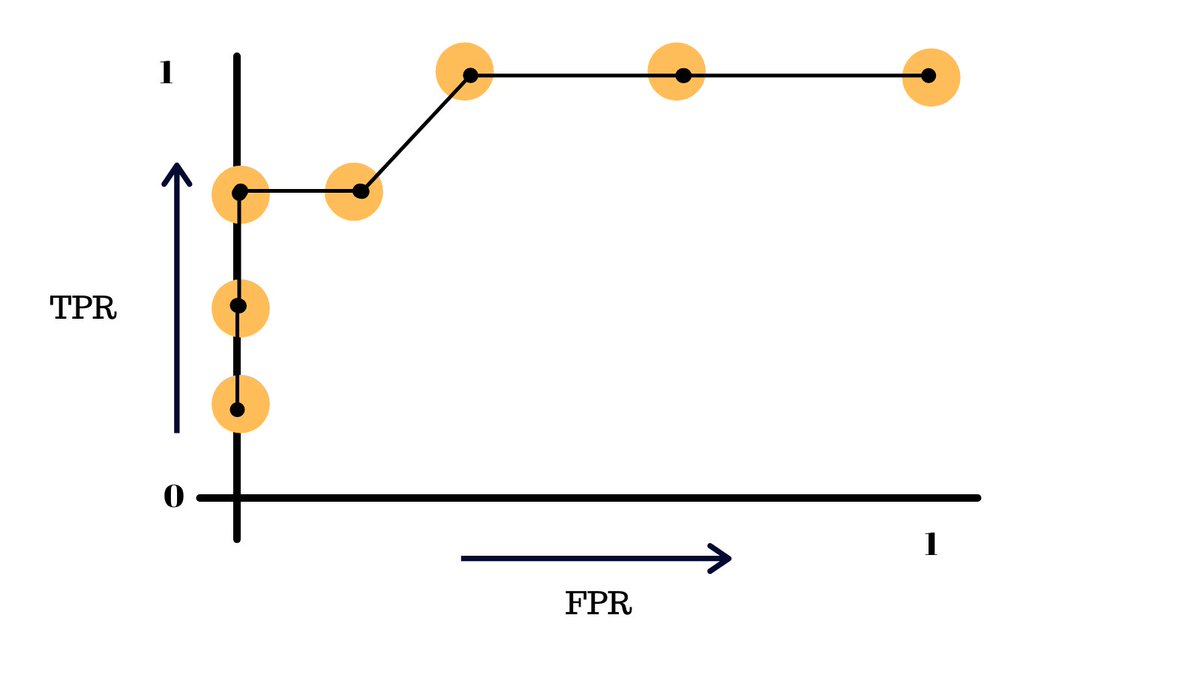

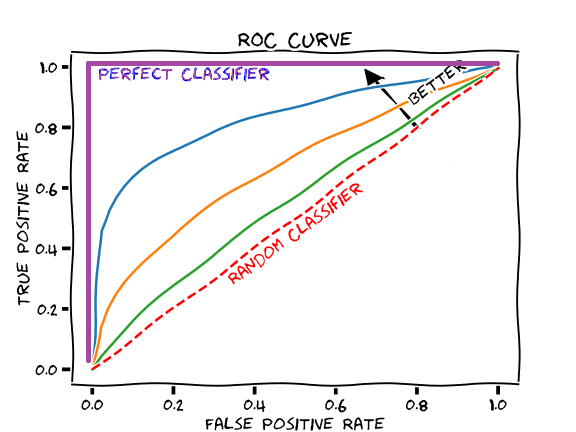

The ROC curve compares the True Positive Rate (TPR) and the False Positive Rate (FPR) of a binary classification model at different thresholds.

Anything below the line will be predicted by the model as people without X, and anything above it as people with X. We’ll now find the FPR and TPR of this model at this threshold and plot them on another graph.

We have 👇

- 4 True Positive: People infected and predicted correctly

- 0 False Negatives: People infected but not predicted correctly

- 4 False Positives: People not infected but predicted as infected

- 0 True Negatives: People not infected and predicted correctly
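
Plugging those four counts into the standard definitions gives the point we plot for this threshold. A quick sketch, using only the counts listed above:

```python
# Counts for this threshold, as listed above.
tp, fn, fp, tn = 4, 0, 4, 0

# TPR: share of infected people the model catches.
tpr = tp / (tp + fn)
# FPR: share of non-infected people the model wrongly flags.
fpr = fp / (fp + tn)

print(f"TPR = {tpr}, FPR = {fpr}")  # TPR = 1.0, FPR = 1.0
```

So this threshold lands at the point (FPR, TPR) = (1, 1): we catch everyone with X, but we also flag everyone without it.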

By simply using the optimal threshold, we can drastically improve the accuracy of a binary classifier.
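
One common way to pick a threshold from the ROC points is to maximize TPR minus FPR (often called Youden's J). That rule is my assumption here, not necessarily what was used for this model, and the scores below are hypothetical. A minimal sketch:

```python
# Hypothetical predicted probabilities and true labels (1 = has X, 0 = does not).
probs  = [0.10, 0.30, 0.35, 0.45, 0.55, 0.60, 0.80, 0.90]
labels = [0,    0,    0,    1,    0,    1,    1,    1]

def roc_point(threshold):
    """Return (FPR, TPR) when predicting X for every score >= threshold."""
    tp = sum(1 for p, y in zip(probs, labels) if p >= threshold and y == 1)
    fp = sum(1 for p, y in zip(probs, labels) if p >= threshold and y == 0)
    positives = sum(labels)
    negatives = len(labels) - positives
    return fp / negatives, tp / positives

# Try every distinct score as a cut-off and keep the one with the largest TPR - FPR.
# (Assumed selection rule: Youden's J. Ties keep the lowest threshold.)
best_threshold, best_j = None, -1.0
for t in sorted(set(probs)):
    fpr, tpr = roc_point(t)
    j = tpr - fpr
    if j > best_j:
        best_threshold, best_j = t, j

print(best_threshold, best_j)  # 0.45 0.75
```

Other rules are possible, for example weighting false negatives more heavily when missing a sick person is costlier than a false alarm.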

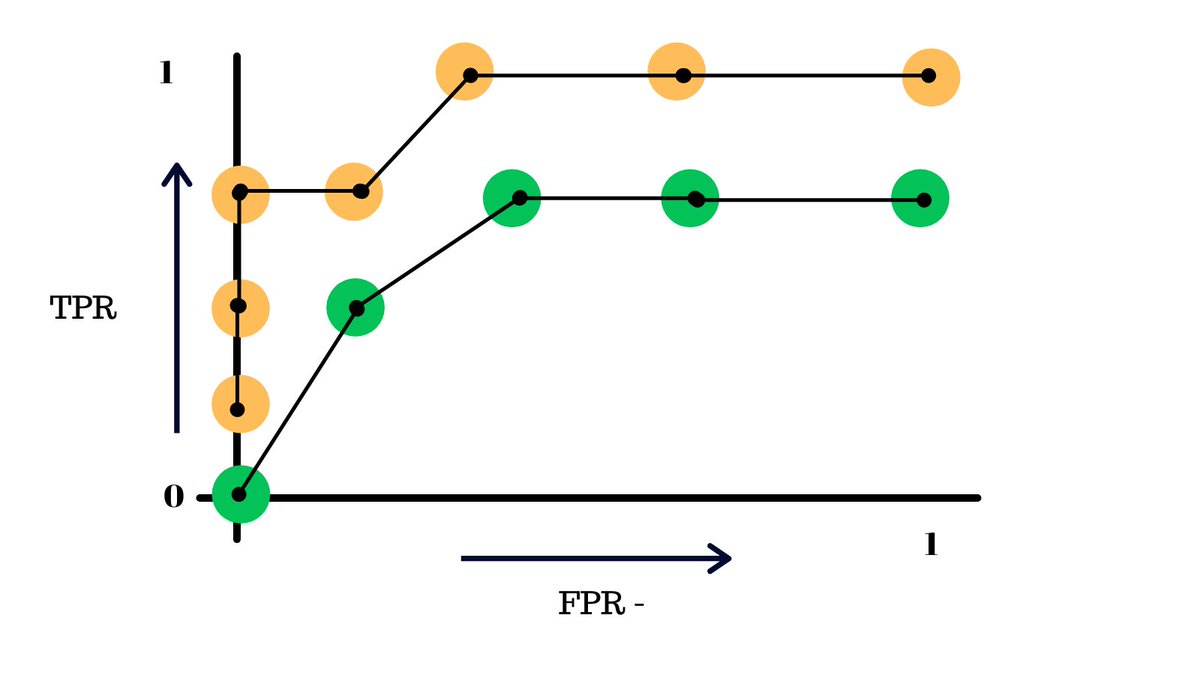

Another topic related to the ROC curve is AUC (Area Under the Curve), a metric that helps us compare the performance of 2 models.
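
Here's a small sketch of how AUC can be computed with the trapezoid rule and used to compare two models. Both sets of scores (`model_a`, `model_b`) are made up for illustration, and the helper names are mine.

```python
def roc_points(probs, labels):
    """ROC points (FPR, TPR) from the strictest threshold to the loosest."""
    positives = sum(labels)
    negatives = len(labels) - positives
    points = [(0.0, 0.0)]  # threshold above every score: nobody flagged
    for t in sorted(set(probs), reverse=True):
        tp = sum(1 for p, y in zip(probs, labels) if p >= t and y == 1)
        fp = sum(1 for p, y in zip(probs, labels) if p >= t and y == 0)
        points.append((fp / negatives, tp / positives))
    return points

def auc(points):
    """Area under the ROC curve using the trapezoid rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area

# Hypothetical true labels and scores from two hypothetical models.
labels  = [0, 0, 0, 1, 0, 1, 1, 1]
model_a = [0.10, 0.30, 0.35, 0.45, 0.55, 0.60, 0.80, 0.90]
model_b = [0.40, 0.70, 0.20, 0.30, 0.60, 0.50, 0.90, 0.35]

print(auc(roc_points(model_a, labels)))  # 0.9375
print(auc(roc_points(model_b, labels)))  # 0.5
```

An AUC of 1.0 means the model ranks every person with X above every person without it, while 0.5 is no better than guessing, so model A is the better one here.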

And all of this is what helped the engineers make a better model.

Follow @PrasoonPratham for more content like this, and do retweet the first tweet in the thread to spread the love of machine learning.
