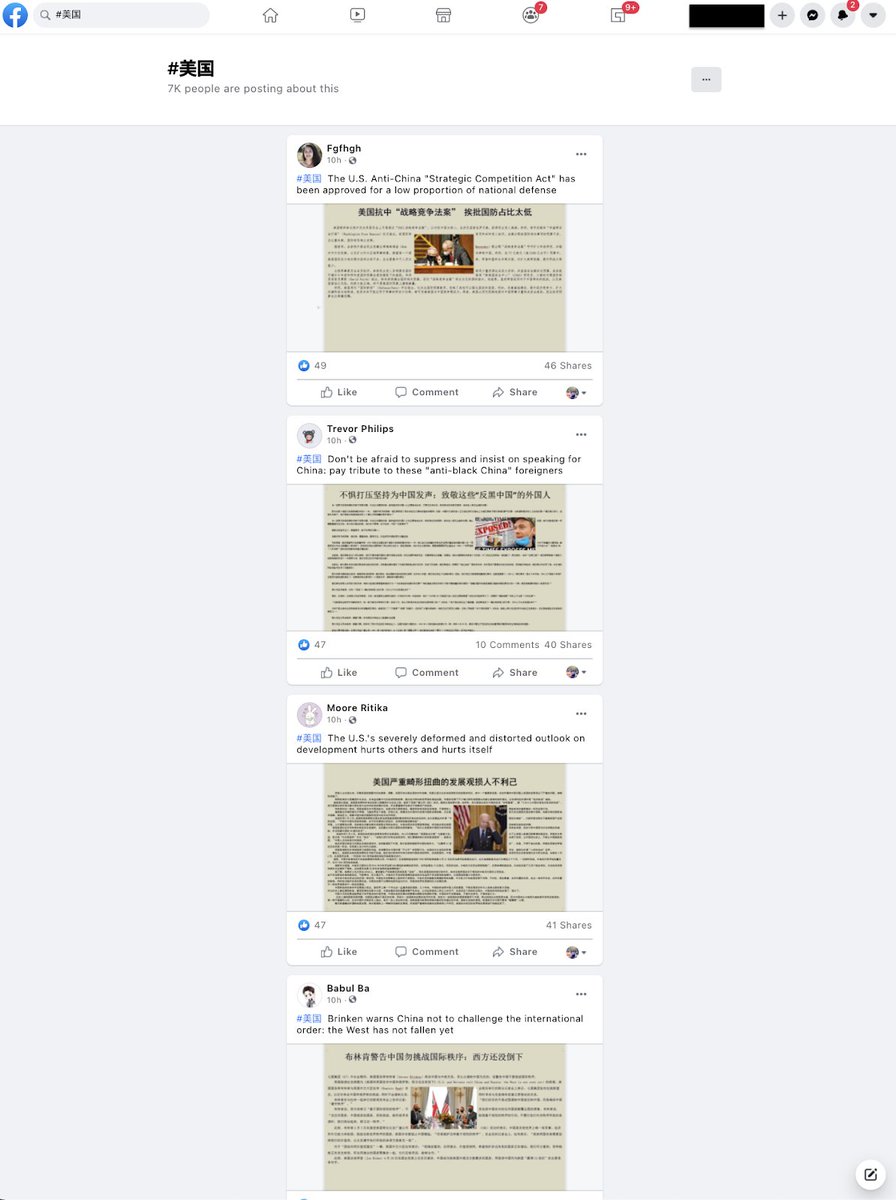

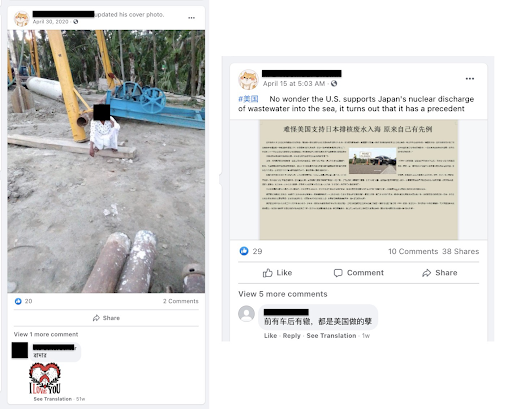

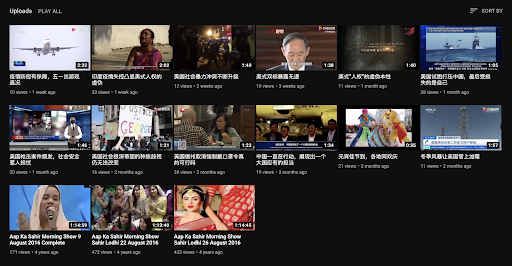

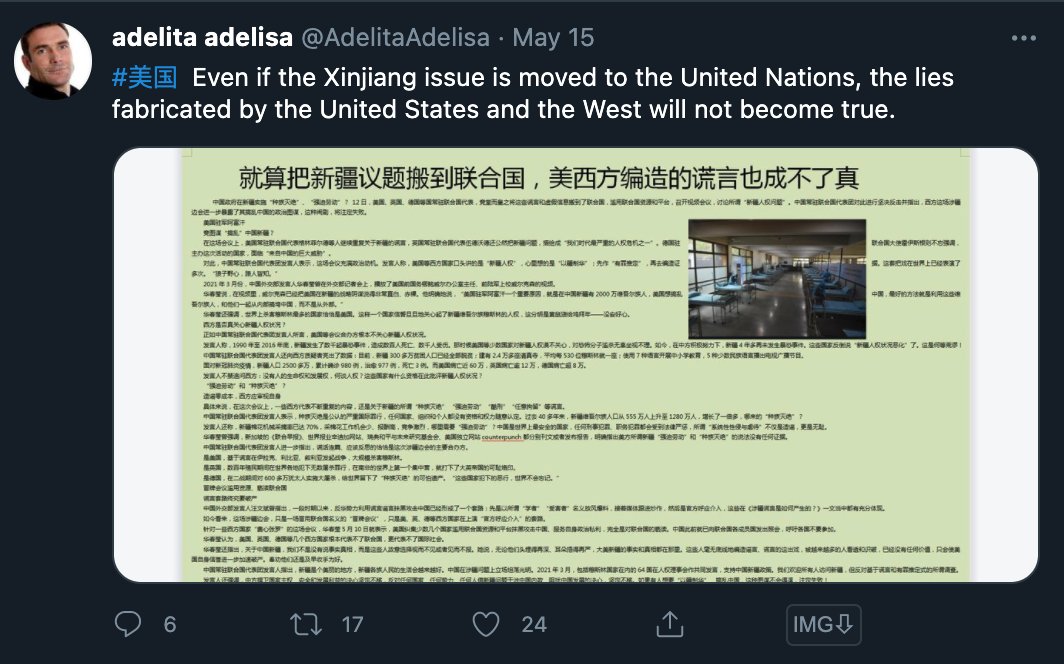

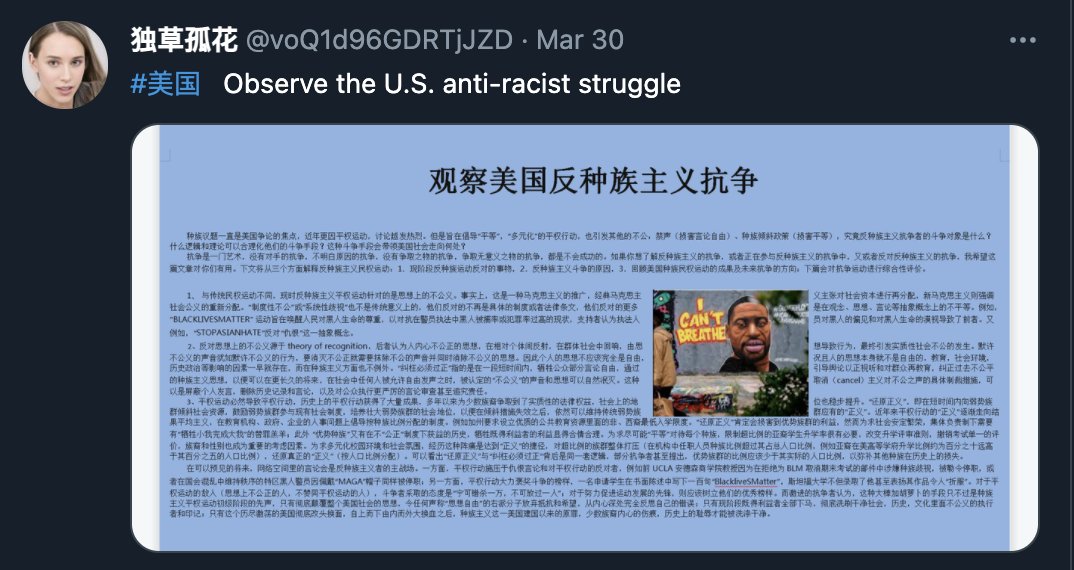

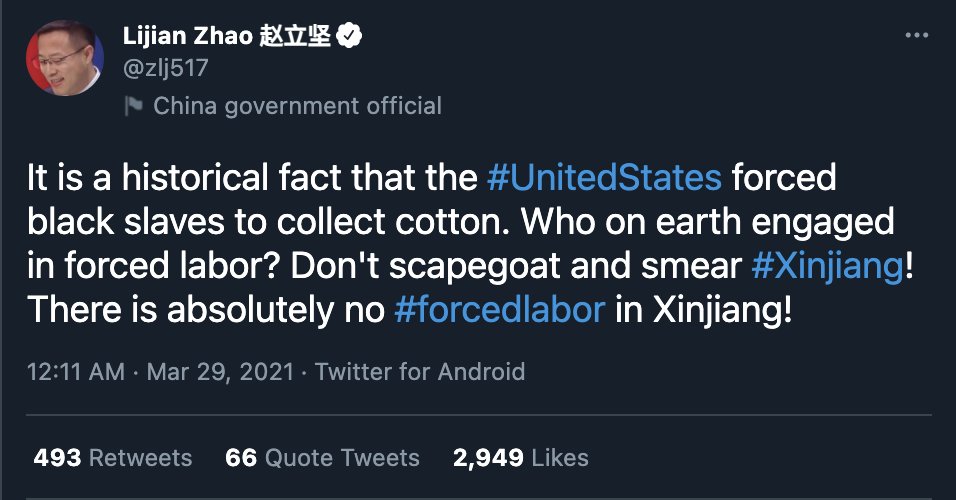

A coordinated network of accounts is using major social platforms to deny human rights abuses, distort narratives on significant issues and elevate China’s reputation.

This is a thread of what we found & how we found it. Full report @Cen4infoRes: info-res.org

🧵👇

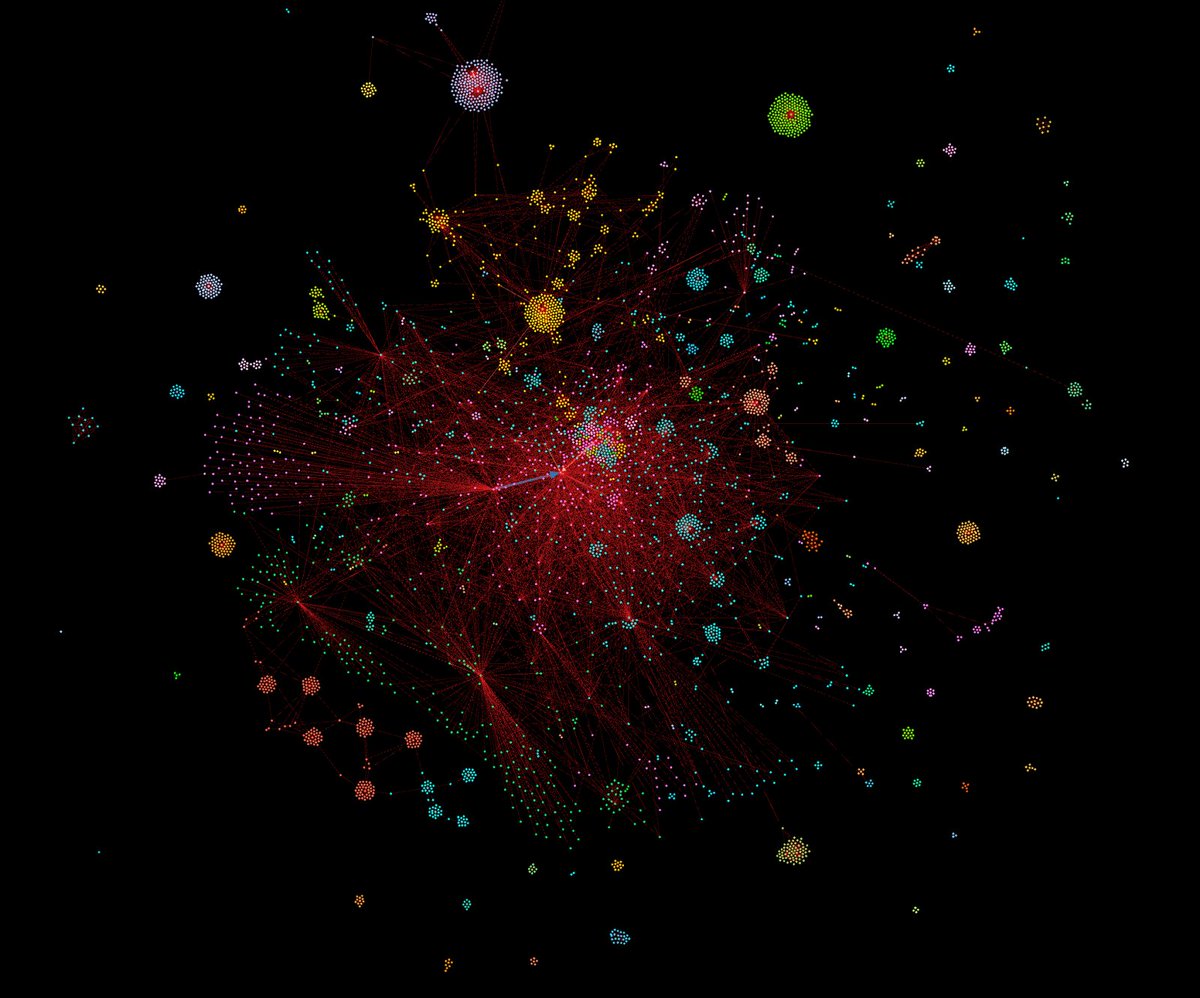

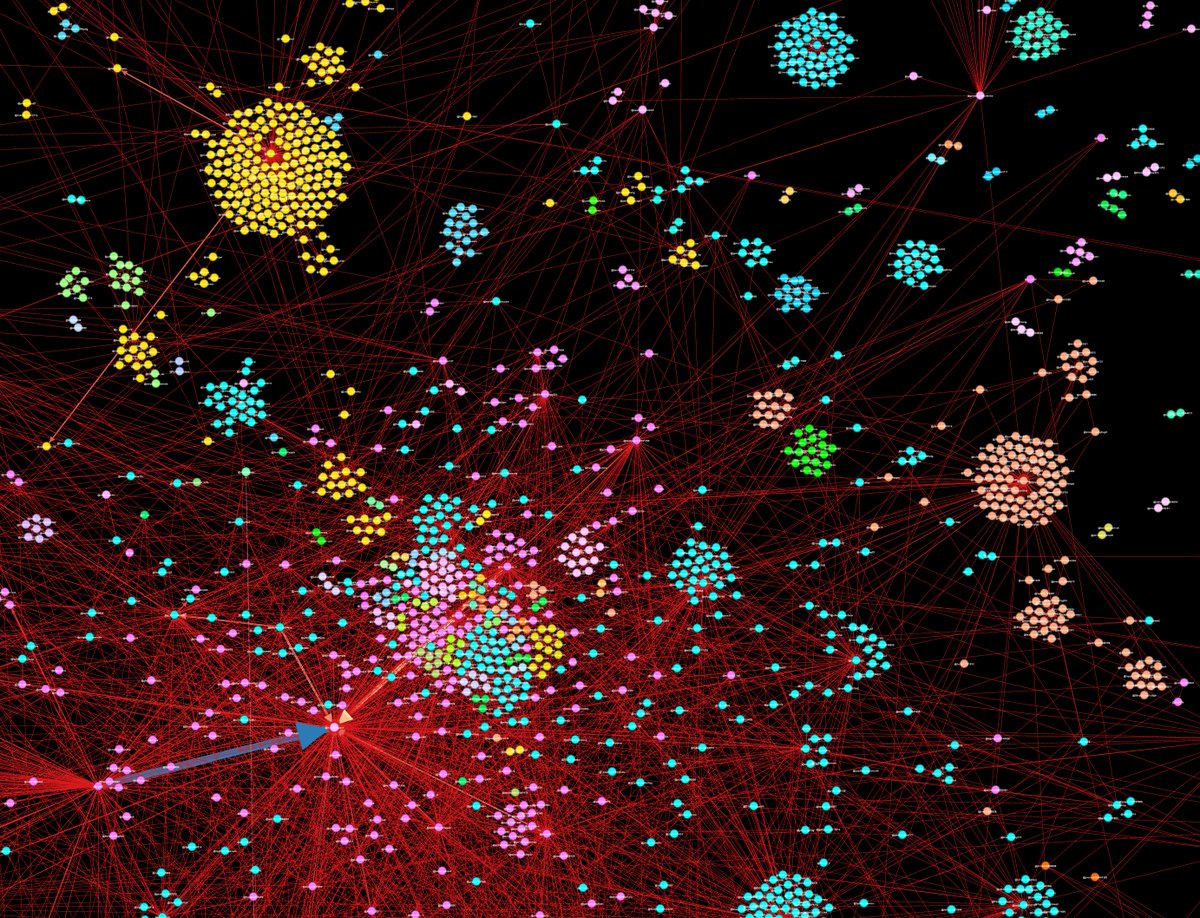

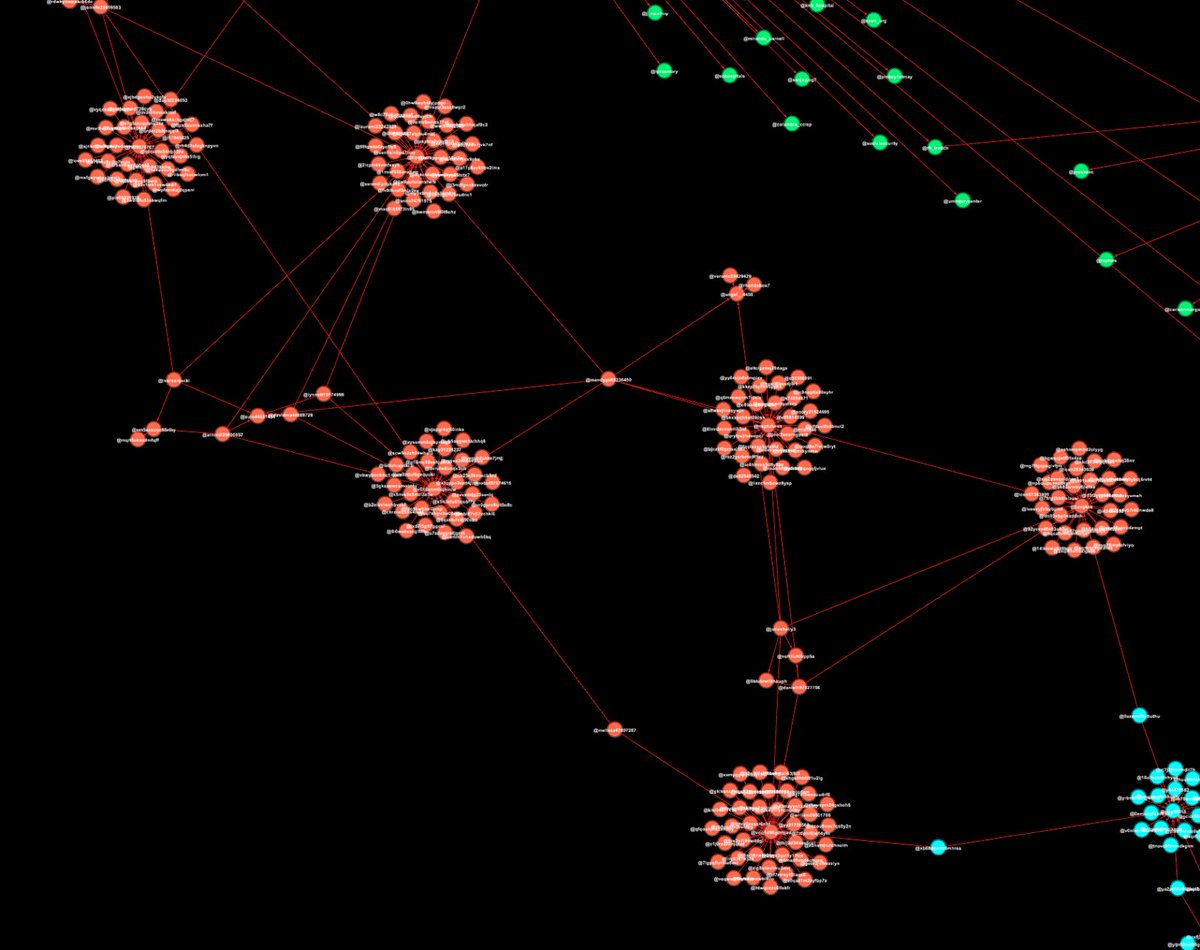

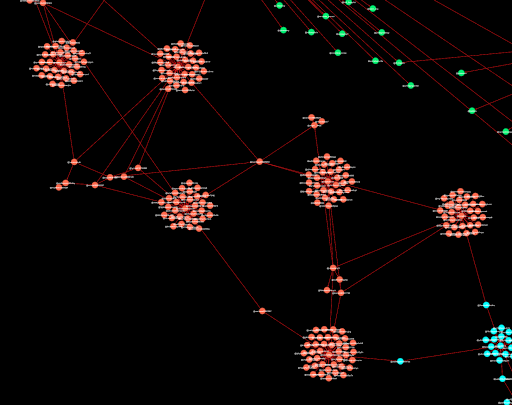

For those new to these visualisations, what are we looking at? It's a map of the 'conversations' between accounts using the hashtags above.

- The dots are nodes (accounts)

- The lines are edges (retweets, mentions or likes)

By visualising this data in @Gephi, we can identify trends.
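A minimal sketch of the data-preparation step, assuming the interactions have already been collected as (source, target, type) records. Repeated interactions between the same two accounts are collapsed into one weighted, directed edge and written as CSV text with the Source, Target, Type and Weight columns that Gephi's spreadsheet import recognises. The account names and records are hypothetical, and this is not necessarily how the data for this report was processed.

```python
import csv
import io
from collections import Counter

# Hypothetical interaction records: (source account, target account, interaction type).
# Real records would come from posts collected for the hashtags being studied.
interactions = [
    ("acct_a", "acct_b", "retweet"),
    ("acct_a", "acct_b", "like"),
    ("acct_c", "acct_b", "mention"),
    ("acct_d", "acct_a", "retweet"),
]


def build_edge_list(records):
    """Collapse repeated interactions into weighted, directed edges (source -> target)."""
    return Counter((src, dst) for src, dst, _kind in records)


def to_gephi_csv(edges):
    """Write edges as CSV text with Source/Target/Type/Weight columns for Gephi's import."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["Source", "Target", "Type", "Weight"])
    for (src, dst), weight in sorted(edges.items()):
        writer.writerow([src, dst, "Directed", weight])
    return buf.getvalue()


edges = build_edge_list(interactions)
print(to_gephi_csv(edges))
```

This sketch weights retweets, mentions and likes equally; keeping the interaction type as a separate column, or building one graph per type, would let you compare how the network amplifies content versus how it talks to itself.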

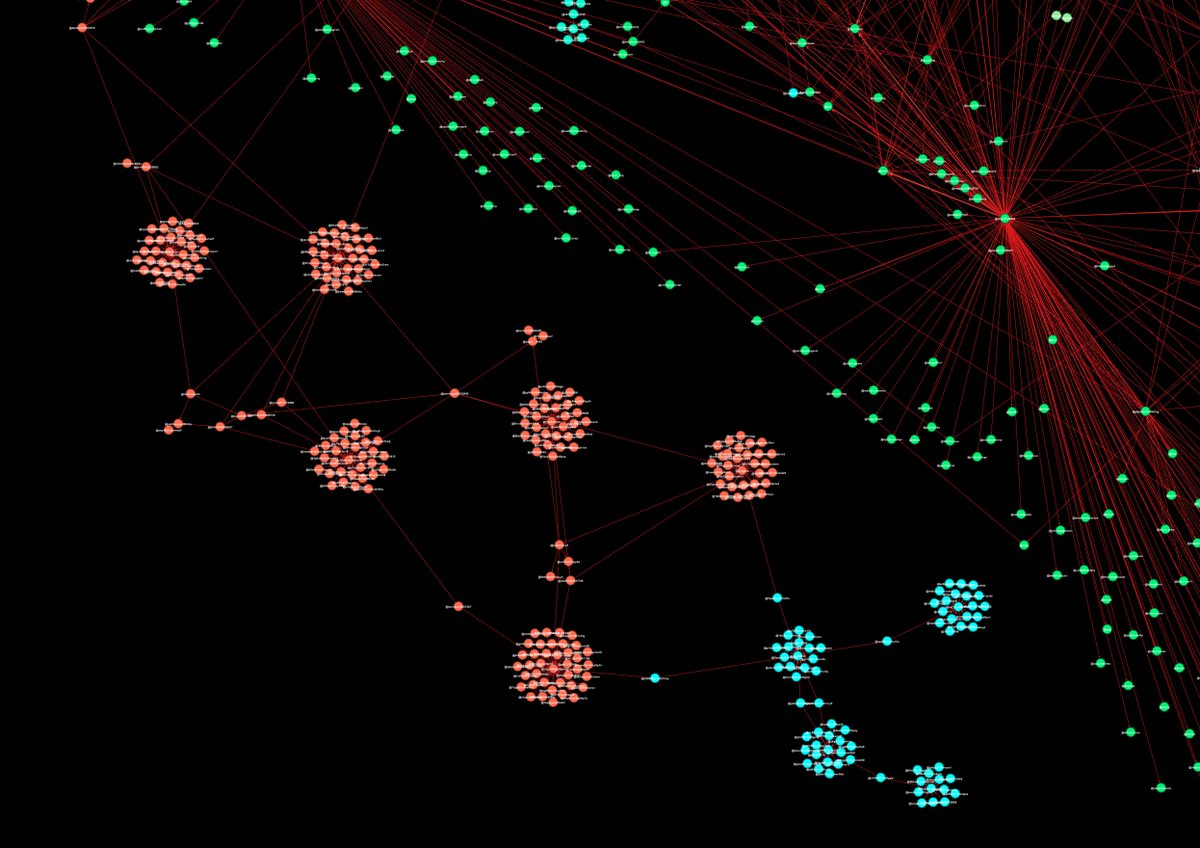

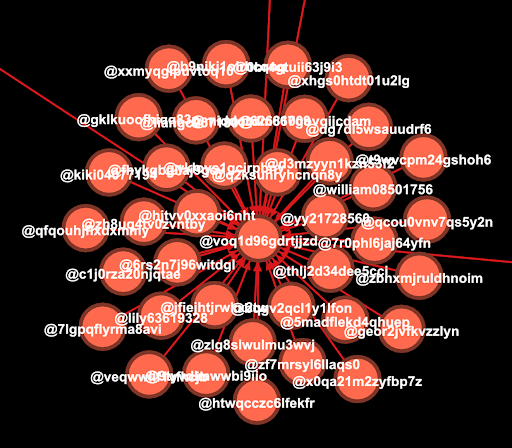

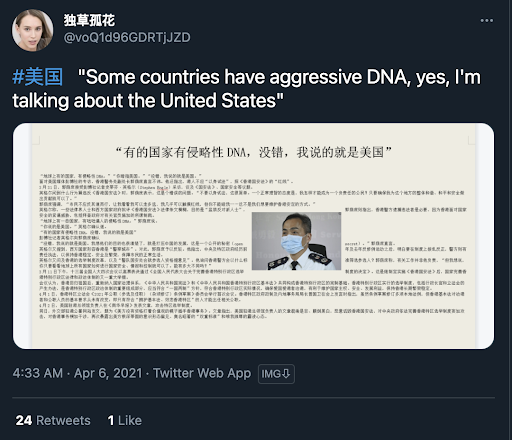

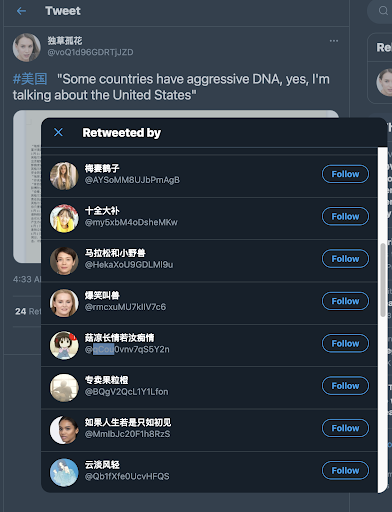

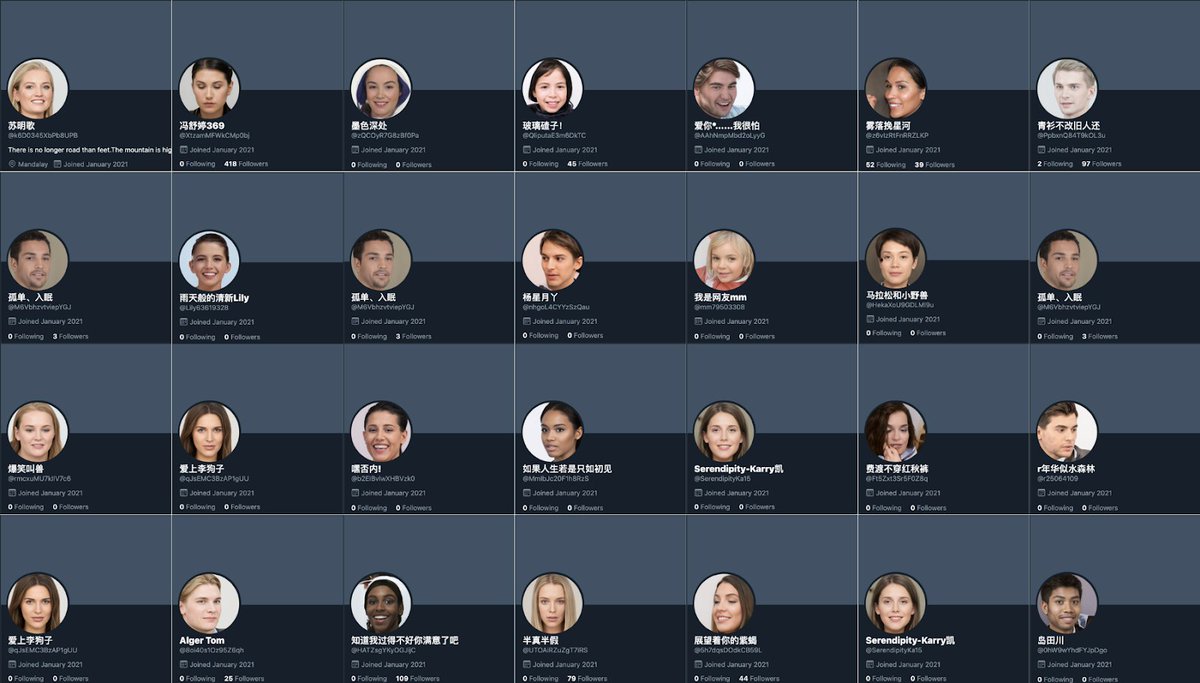

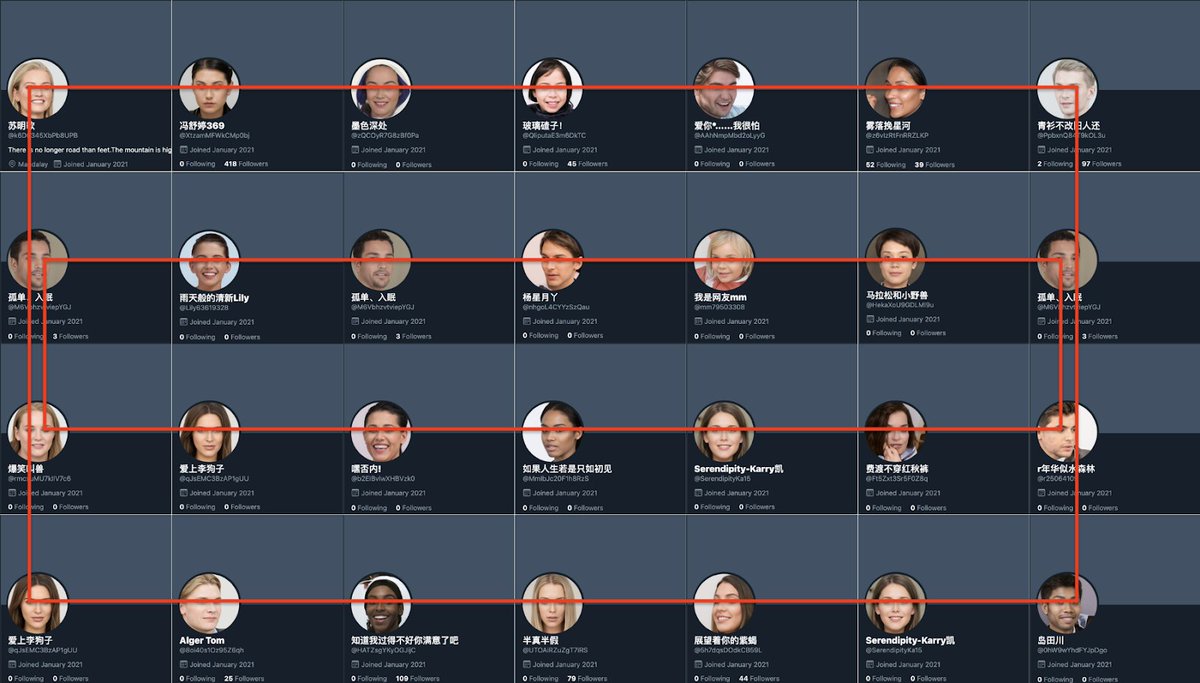

By following this method, we were able to identify specific accounts and their networks. For example, we identified clusters like this one surrounding the account @voQ1d96GDRTjJZD. Note the usernames of the accounts around it.
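Pulling out a cluster around one account can be sketched as a breadth-first search over the interaction graph, keeping every account within a couple of hops of a seed account. The username check is a hypothetical heuristic, not a method from the report: it flags a name followed by eight digits (the shape of @Zoe51610873) or a 15-character mix of upper case, lower case and digits (the shape of @voQ1d96GDRTjJZD). The graph, the radius and both patterns are illustrative assumptions.

```python
import re
from collections import deque

# Hypothetical undirected adjacency: who interacted with whom.
graph = {
    "seed": {"u1", "u2"},
    "u1": {"seed", "u3"},
    "u2": {"seed"},
    "u3": {"u1", "u4"},
    "u4": {"u3"},
    "other": set(),
}


def ego_network(adj, seed, radius=2):
    """Return every account within `radius` hops of `seed`, using breadth-first search."""
    seen = {seed: 0}
    queue = deque([seed])
    while queue:
        node = queue.popleft()
        if seen[node] == radius:
            continue  # do not expand past the radius
        for neighbour in adj.get(node, ()):
            if neighbour not in seen:
                seen[neighbour] = seen[node] + 1
                queue.append(neighbour)
    return set(seen)


# Hypothetical patterns for handles that look auto-generated.
NAME_PLUS_DIGITS = re.compile(r"^[A-Za-z]+\d{8}$")
RANDOM_15 = re.compile(r"^[A-Za-z0-9]{15}$")


def looks_generated(handle):
    """Heuristic only: a match is a lead to check manually, not proof of inauthenticity."""
    if NAME_PLUS_DIGITS.match(handle):
        return True
    return (
        bool(RANDOM_15.match(handle))
        and any(c.isupper() for c in handle)
        and any(c.islower() for c in handle)
        and any(c.isdigit() for c in handle)
    )


print(sorted(ego_network(graph, "seed")))  # → ['seed', 'u1', 'u2', 'u3']
print(looks_generated("voQ1d96GDRTjJZD"))  # → True
```

A radius of one or two hops keeps the cluster readable; larger radii quickly pull in unrelated accounts that merely interacted with the network.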

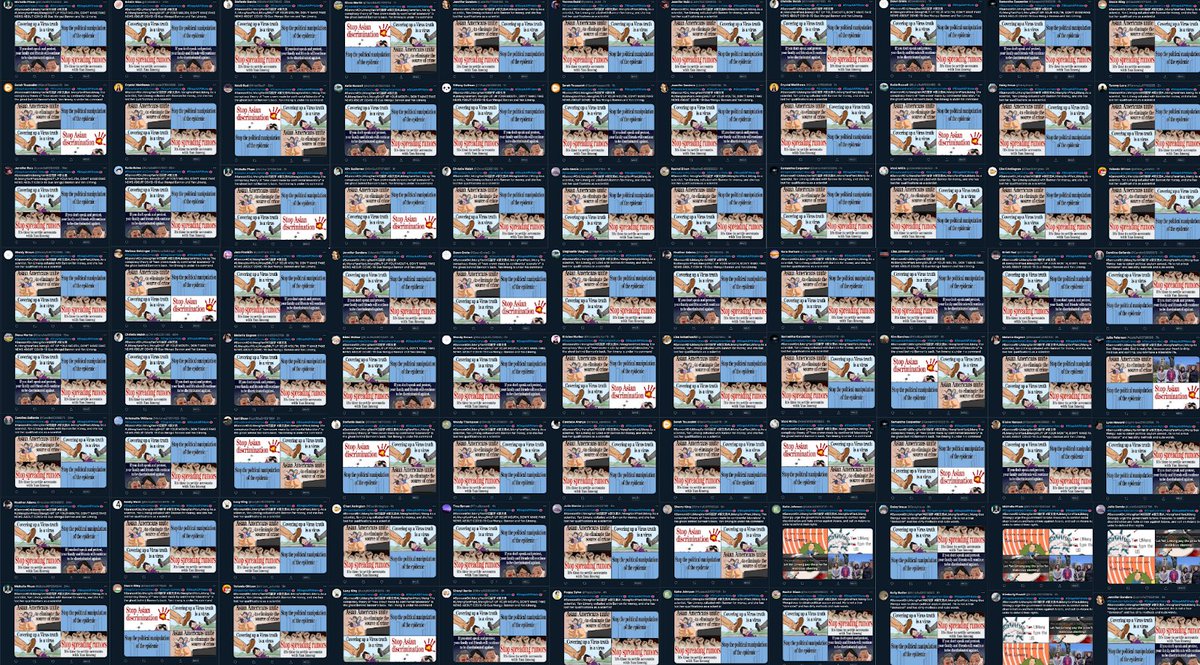

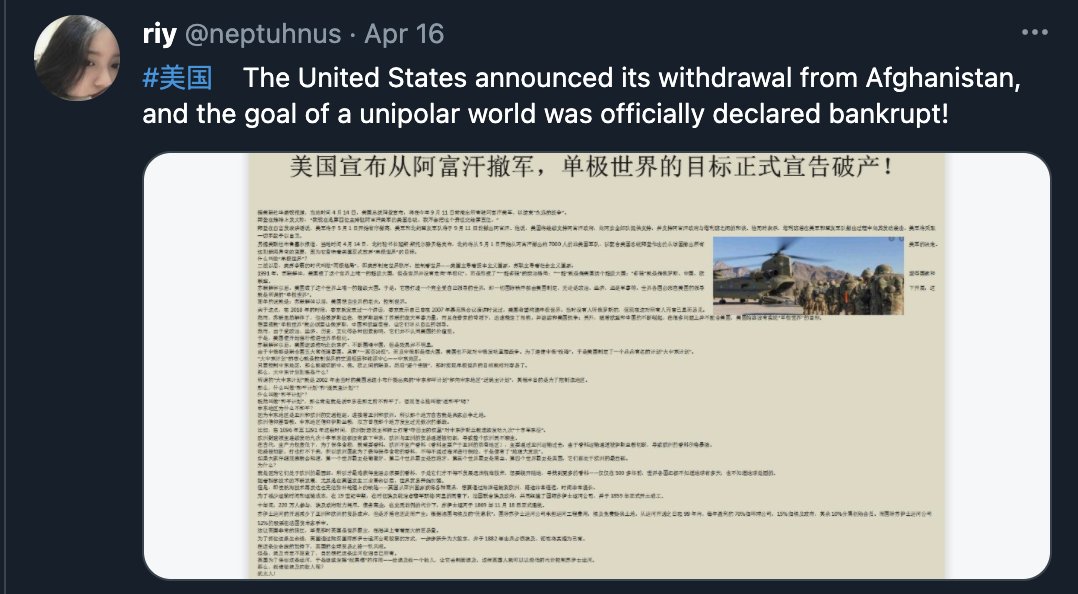

Some of the other core posting accounts had tweets that were not so much retweeted or liked as commented on. For example, a tweet by @Zoe51610873 had 152 comments, which is abnormally high compared with the post's 11 retweets and four likes.
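The comparison above can be made explicit as a ratio of comments to retweets plus likes. This is a minimal sketch: the threshold of 5 comments per retweet-or-like is a hypothetical cut-off chosen for illustration, not a figure from the report.

```python
# Hypothetical threshold: flag posts with more than 5 comments per retweet-or-like.
RATIO_THRESHOLD = 5.0


def comment_ratio(comments, retweets, likes):
    """Comments per retweet-or-like; max(..., 1) avoids dividing by zero."""
    return comments / max(retweets + likes, 1)


def is_comment_heavy(comments, retweets, likes, threshold=RATIO_THRESHOLD):
    """True when a post draws far more comments than retweets and likes."""
    return comment_ratio(comments, retweets, likes) > threshold


# Figures for the @Zoe51610873 tweet described above.
print(round(comment_ratio(152, 11, 4), 2))  # → 10.13
print(is_comment_heavy(152, 11, 4))         # → True
```

On its own a high ratio only marks a post worth reading closely; the comments themselves still need checking for repeated text or accounts from the same cluster.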

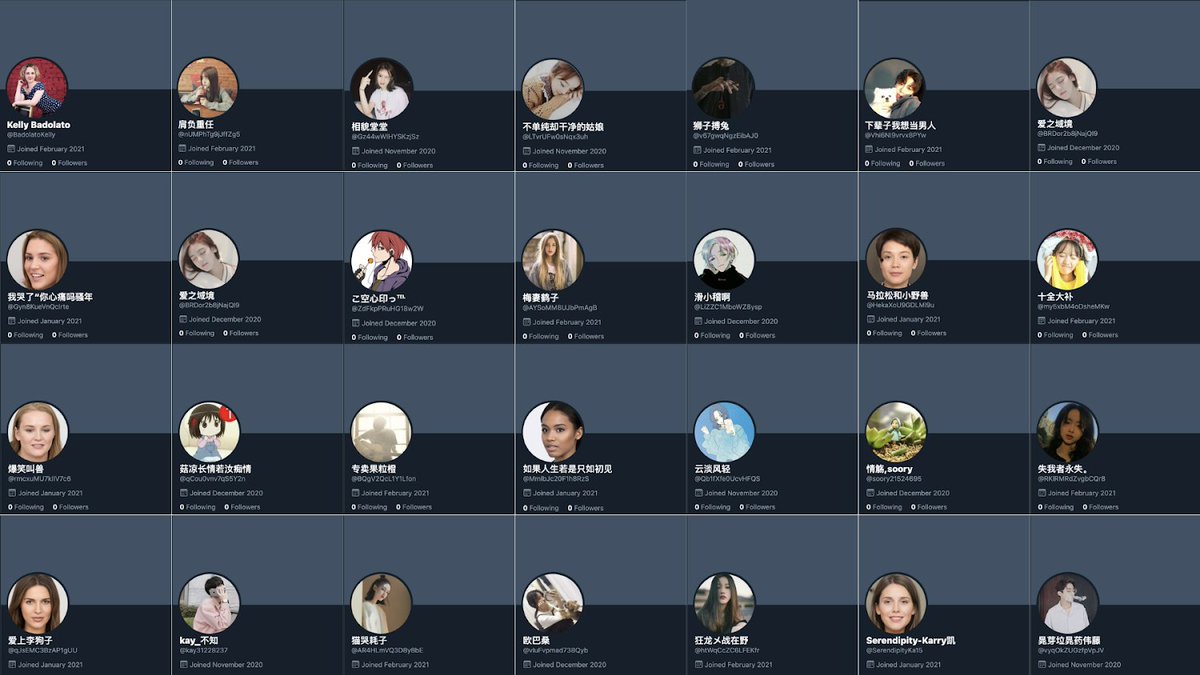

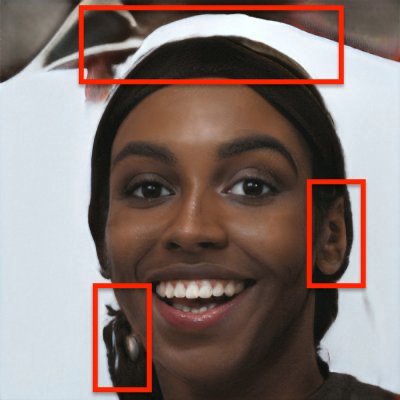

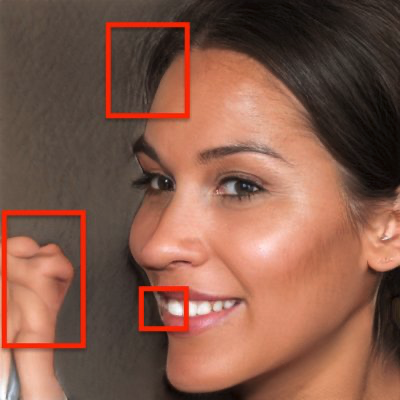

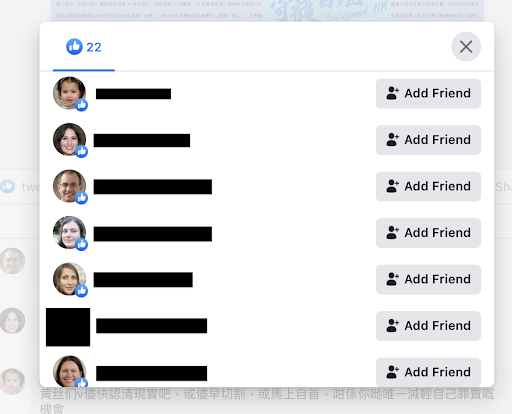

These accounts use computer-generated faces as profile pictures. They are StyleGAN images, like the ones you can see on thispersondoesnotexist.com. In the past, I've found that a simple way to identify StyleGAN faces is to check whether the eyes line up: they sit in the same position in every image.
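One way to approximate the eye check, assuming it means that the eyes sit at the same place in every StyleGAN image, is to normalise each eye's position by the image size and measure how much those positions vary across a set of profile pictures. The eye coordinates below are hypothetical and would in practice come from a face-landmark detector, which is not shown; the 0.01 tolerance is also an assumption.

```python
from statistics import pstdev


def normalised_eyes(eyes, width, height):
    """Convert pixel eye centres (lx, ly, rx, ry) to fractions of the image size."""
    lx, ly, rx, ry = eyes
    return (lx / width, ly / height, rx / width, ry / height)


def eye_position_spread(samples):
    """Largest population std-dev across the four normalised eye coordinates."""
    points = [normalised_eyes(eyes, w, h) for eyes, w, h in samples]
    return max(pstdev(coord) for coord in zip(*points))


def eyes_suspiciously_aligned(samples, tolerance=0.01):
    """True when several images place both eyes at almost the same relative spot."""
    return len(samples) >= 2 and eye_position_spread(samples) <= tolerance


# Hypothetical samples: ((left_x, left_y, right_x, right_y), width, height)
aligned = [
    ((385, 480, 640, 480), 1024, 1024),
    ((770, 960, 1280, 960), 2048, 2048),
    ((386, 481, 639, 479), 1024, 1024),
]
scattered = [
    ((200, 300, 350, 310), 800, 800),
    ((500, 200, 700, 220), 1000, 1000),
    ((100, 400, 260, 390), 600, 900),
]

print(eyes_suspiciously_aligned(aligned))    # → True
print(eyes_suspiciously_aligned(scattered))  # → False
```

Normalising by image size matters because the same generated face may be uploaded at different resolutions; a real photo set rarely keeps the eyes this still.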

It should be noted that pro-China networks are not new and have been reported on and removed in the past. Organisations like @Graphika_NYC & @ASPI_ICPC have done superb reporting on similar pro-China networks. aspi.org.au & graphika.com

The full report is available from @Cen4infoRes (info-res.org), an independent, non-profit social enterprise dedicated to identifying, countering and exposing influence operations. A big thanks to those who helped on this piece.

It's also important to add context to these networks, which @FloraCarmichael has done in this great BBC report on the network.

bbc.co.uk
