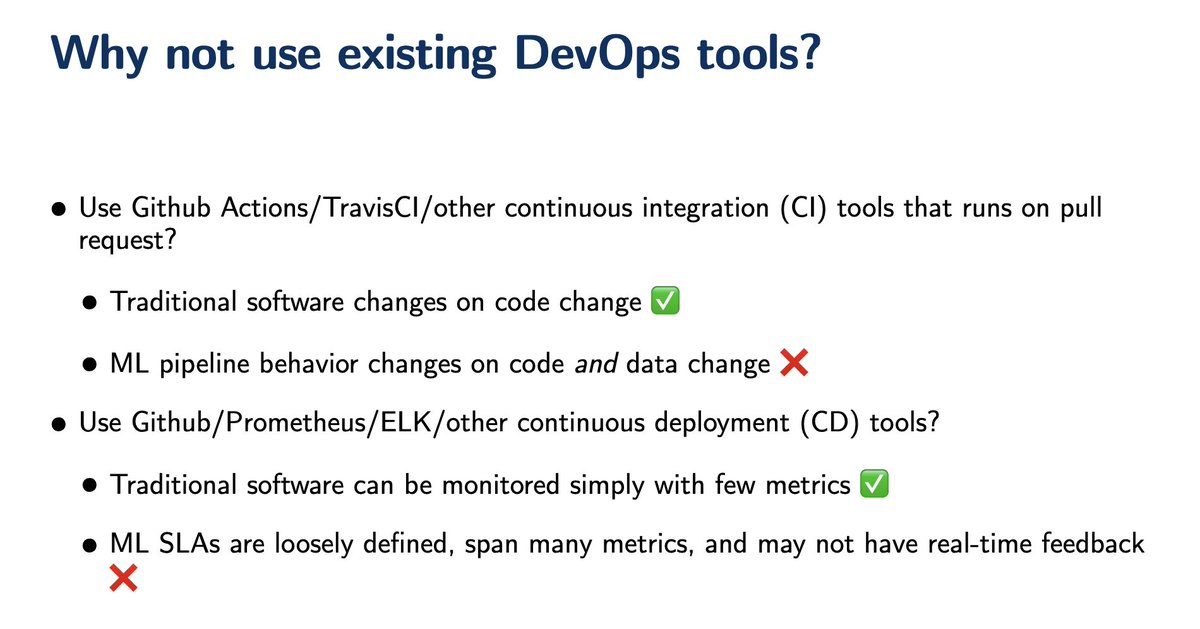

Continuous Integration (CI) & testing for ML pipelines is hard and generally unsolved. I’ve been thinking about this for a while now — why it’s important, what it means, why current solutions are suboptimal, and what we can do about it. (1/10)

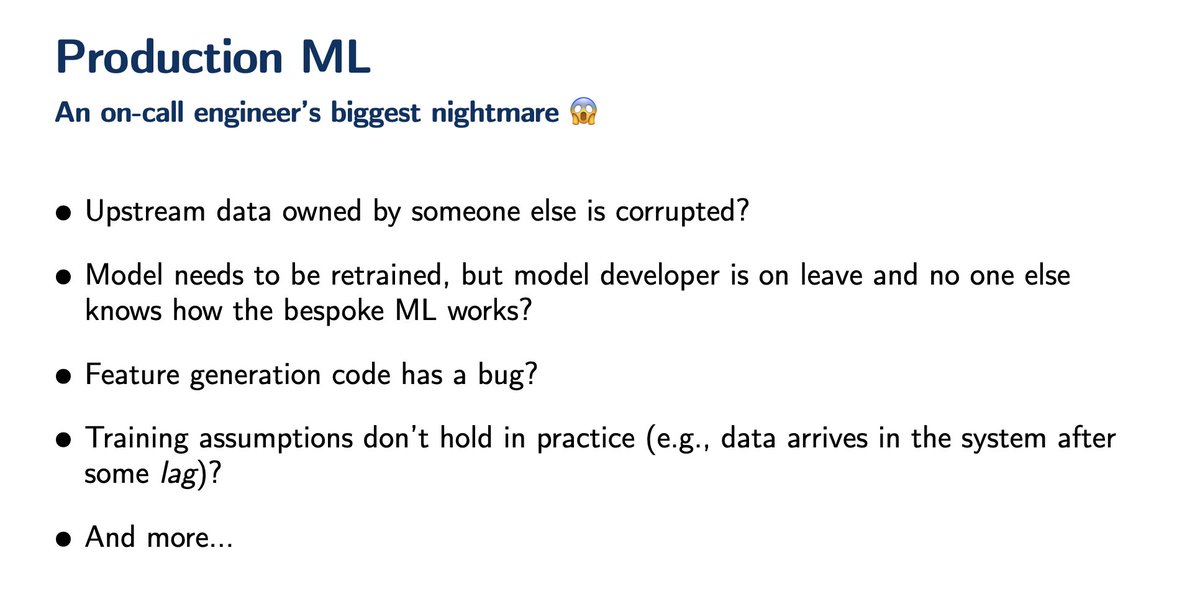

Even with Great Computational Resources, sustaining production ML is hard: the tech/tool stack is complicated, and almost nobody is an expert on the end-to-end pipeline. You need CI, or the ability to continually validate that the pipeline does what you expect it to do. (3/10)

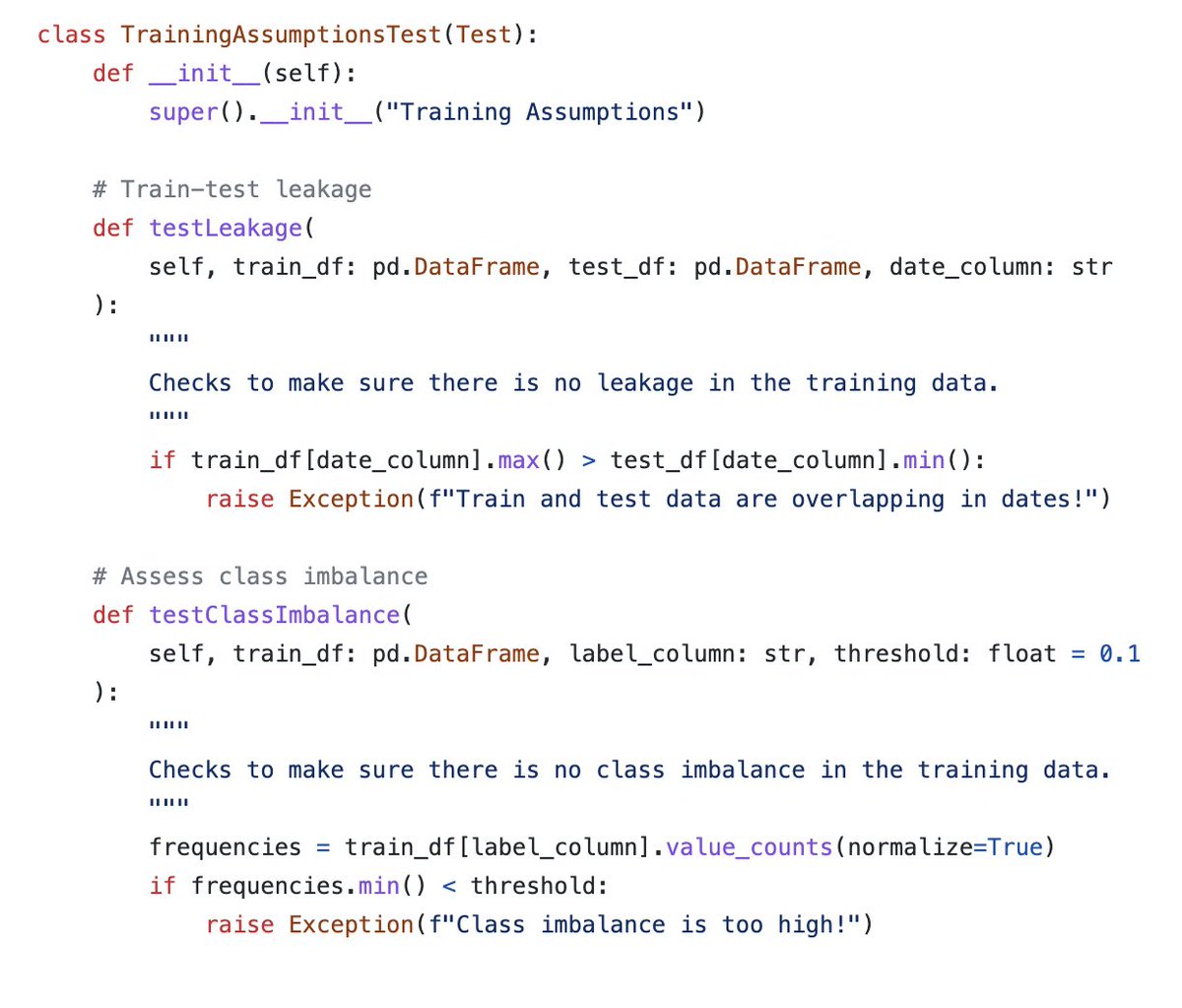

When I started thinking about CI & testing for ML, I investigated the most effective solution (IMO). A best practice is to liberally use assert statements across your pipeline, to check that human-defined invariants hold -- e.g., few nulls, low-variance features over time. (5/10)

Why do assert statements work so well? 1: they force pipeline writers to think critically about pipeline invariants & share this knowledge, 2: they are executed at runtime (when the data is changing), not pull request, 3: they are easy to encode and understand. (6/10)

Why are assert statements suboptimal? They aren’t very modular or reusable across pipeline components, they're decentralized & scattered across application code, and they're blocking (e.g., not executed async). How crazy — this violates principles of software testing! (7/10)

A research challenge we’re thinking about is how to populate a library of reusable tests and ML pipeline components in mltrace. I’d love to read more articles & blog posts on specific reusable tests for different types of ML tasks (e.g., time series, rec sys, physical robots).

Loading suggestions...