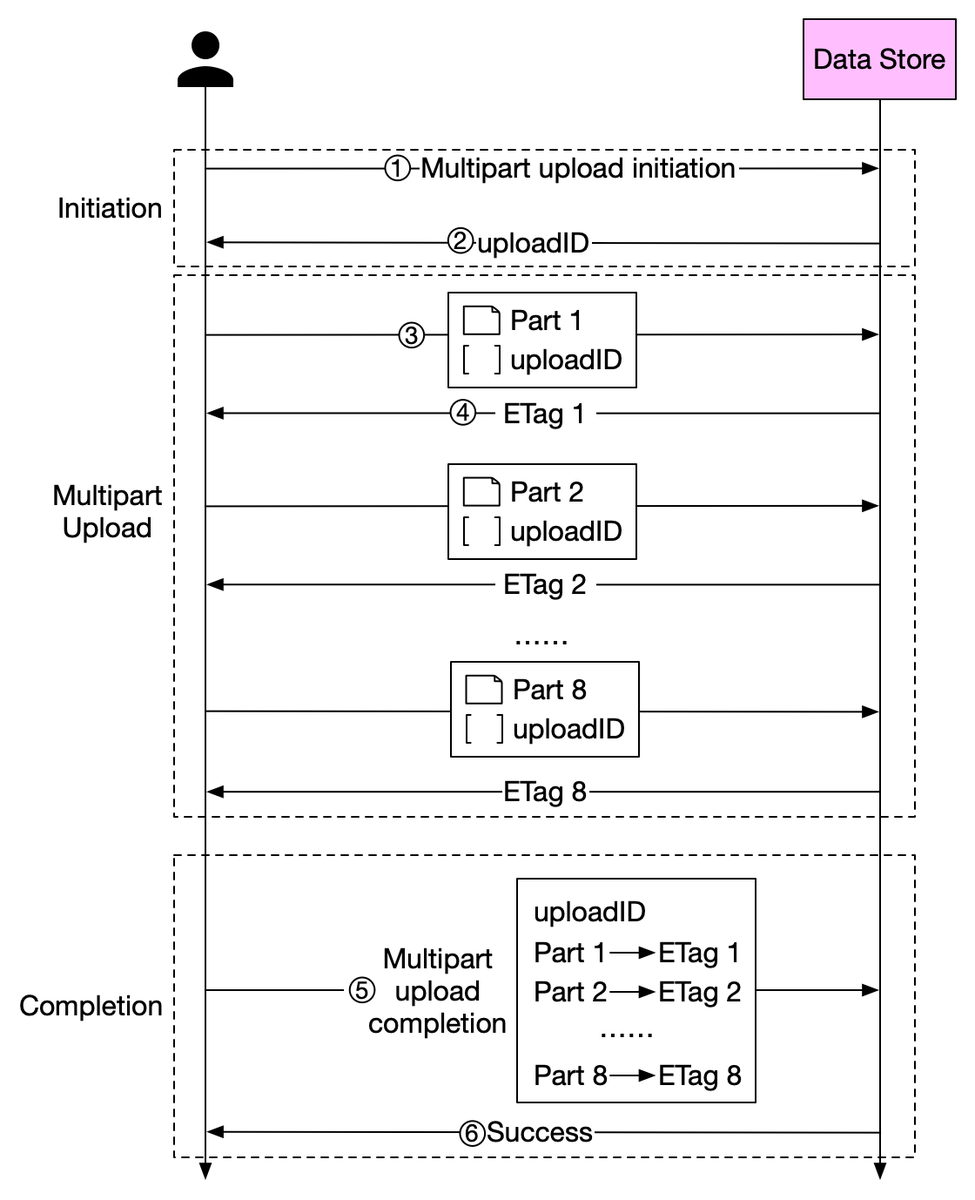

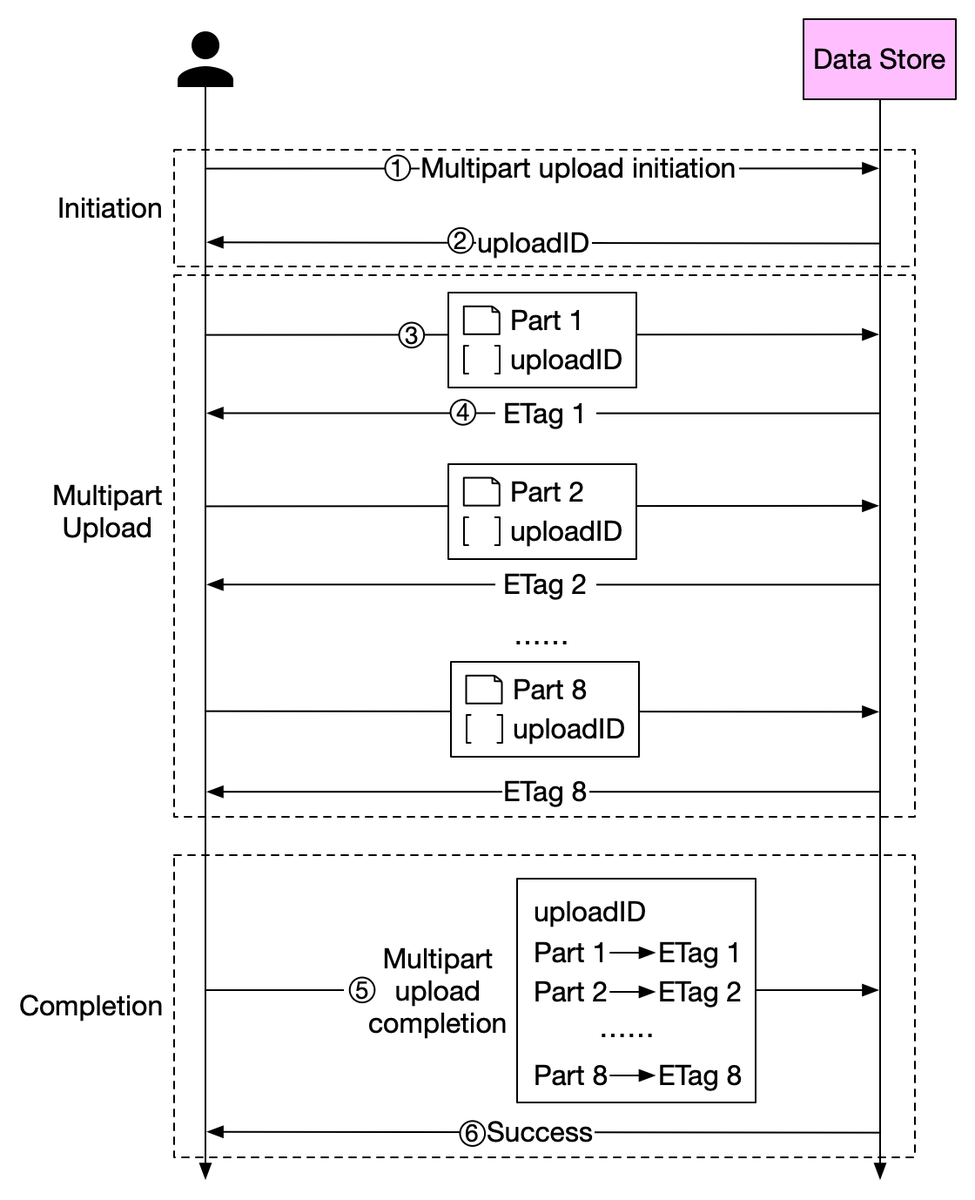

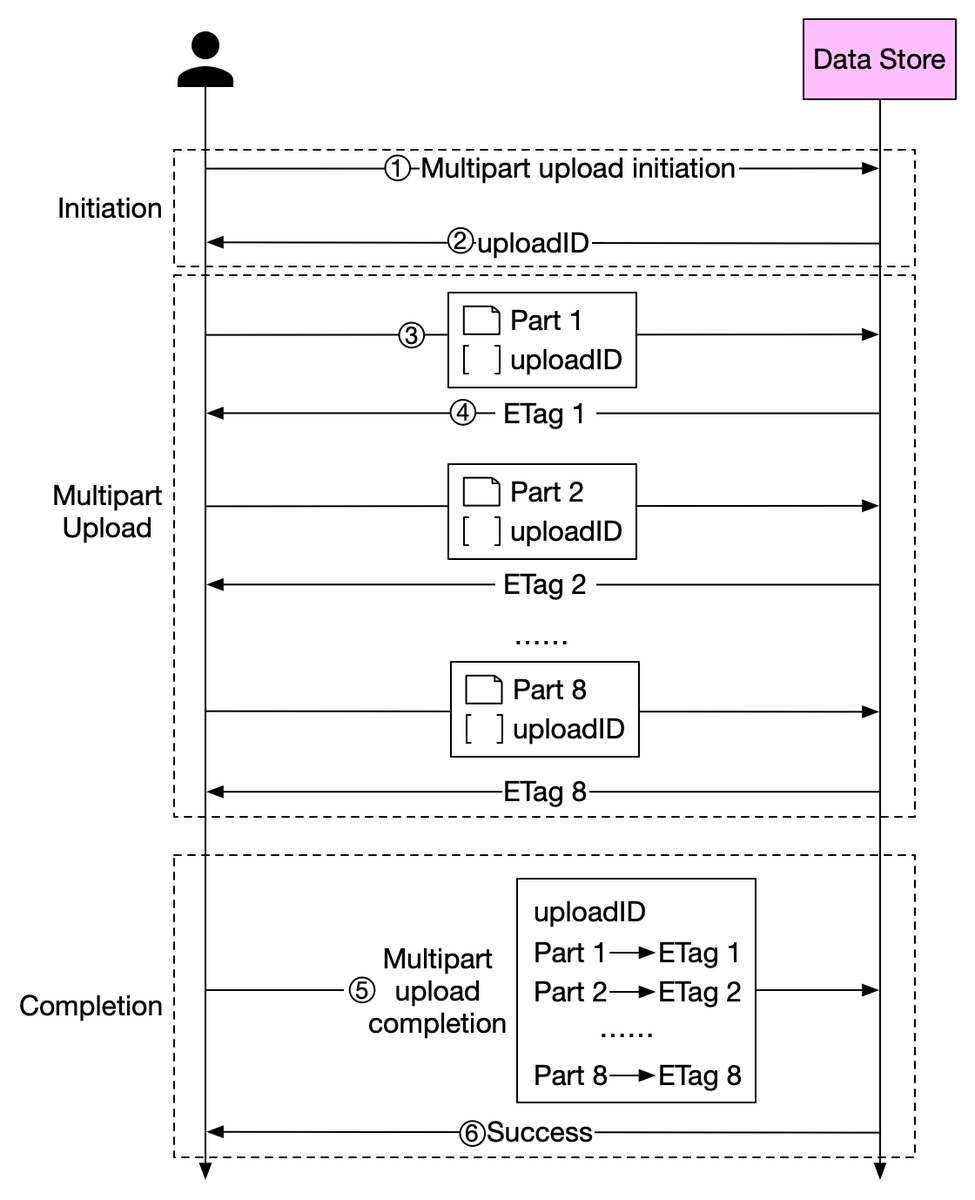

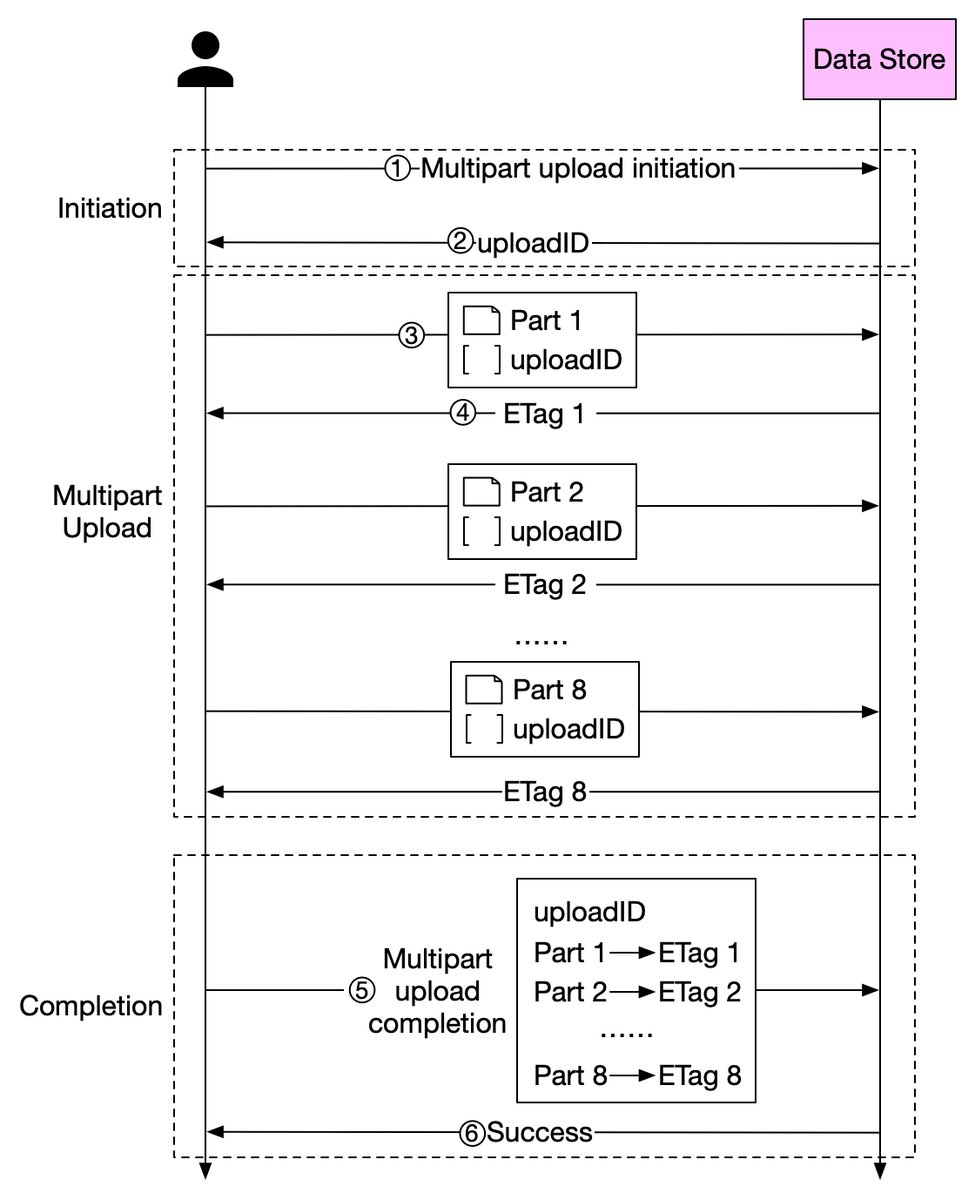

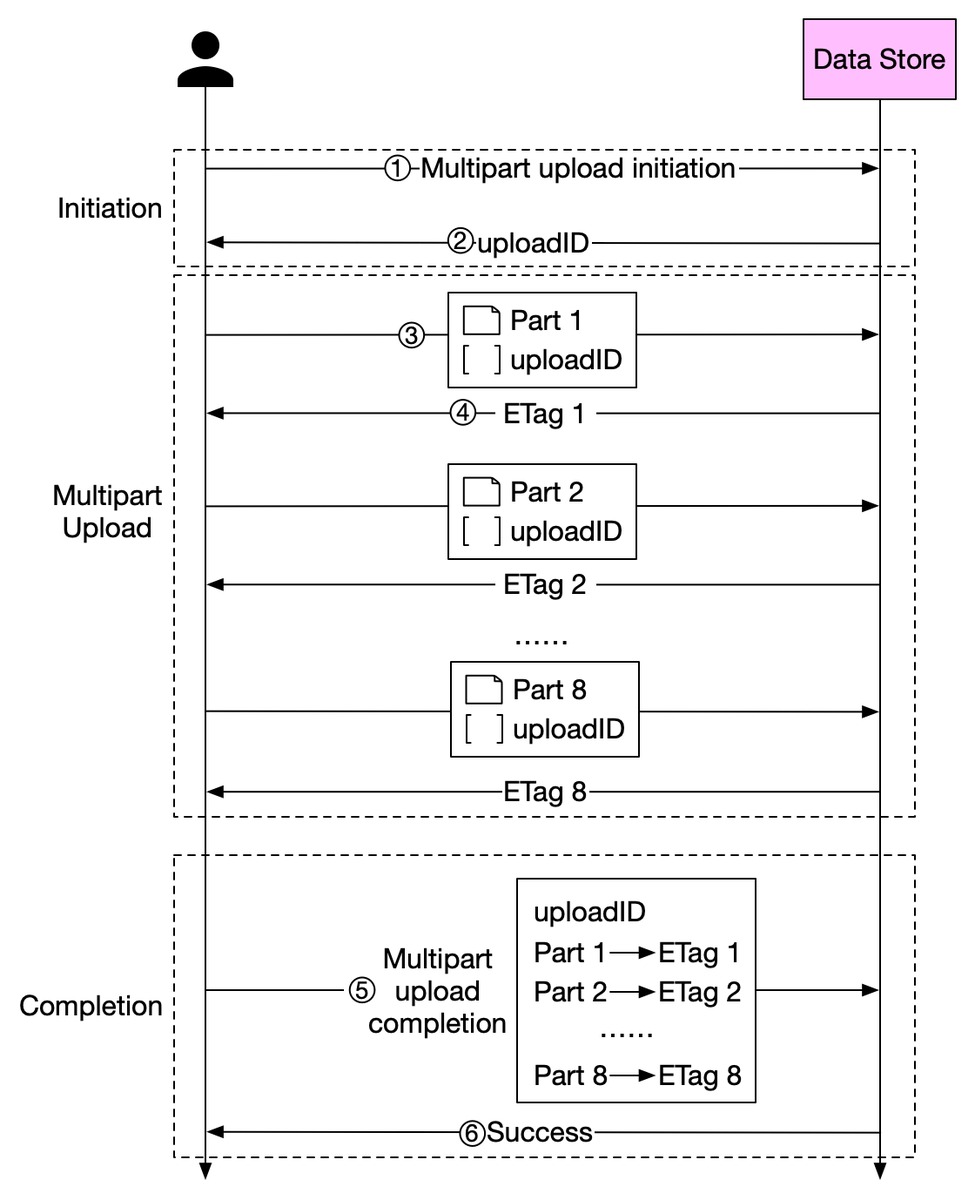

Some files are several gigabytes or larger. Uploading such a large object in a single request is possible, but it can take a long time, and if the network connection fails partway through, the whole upload has to start over. 2/8

A better solution is to slice a large object into smaller parts and upload them independently. After all the parts are uploaded, the object store reassembles the object from the parts. This process is called **multipart upload**. 3/8
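
To make the idea concrete, here is a minimal sketch of the slice, upload, and reassemble flow. Everything in it is an assumption for illustration: `InMemoryObjectStore`, `split_parts`, `upload_with_retry`, and `multipart_upload` are hypothetical names, the store is a toy in-memory stand-in rather than any real service's API, and the SHA-256 checksum per part is just one plausible way to verify parts before reassembly.

```python
import hashlib
import itertools
from typing import Dict, Iterator, Tuple


class InMemoryObjectStore:
    """Toy stand-in for an object store (hypothetical, not a real service API)."""

    def __init__(self) -> None:
        self.pending: Dict[str, Dict[int, bytes]] = {}  # upload_id -> parts
        self.objects: Dict[str, bytes] = {}             # key -> final object
        self._ids = itertools.count(1)

    def initiate(self, key: str) -> str:
        """Start a multipart upload and return its upload ID."""
        upload_id = f"{key}-upload-{next(self._ids)}"
        self.pending[upload_id] = {}
        return upload_id

    def upload_part(self, upload_id: str, part_number: int, data: bytes) -> str:
        """Store one part and return its checksum."""
        self.pending[upload_id][part_number] = data
        return hashlib.sha256(data).hexdigest()

    def complete(self, upload_id: str, key: str, checksums: Dict[int, str]) -> None:
        """Verify every part, then reassemble the object in part-number order."""
        parts = self.pending.pop(upload_id)
        for number, checksum in checksums.items():
            if hashlib.sha256(parts[number]).hexdigest() != checksum:
                raise ValueError(f"part {number} is corrupt")
        self.objects[key] = b"".join(parts[n] for n in sorted(checksums))


def split_parts(data: bytes, part_size: int) -> Iterator[Tuple[int, bytes]]:
    """Yield (part_number, chunk) pairs, numbering parts from 1."""
    for offset in range(0, len(data), part_size):
        yield offset // part_size + 1, data[offset:offset + part_size]


def upload_with_retry(store: InMemoryObjectStore, upload_id: str,
                      number: int, chunk: bytes, attempts: int = 3) -> str:
    """Retry a single failed part instead of restarting the whole upload."""
    for attempt in range(1, attempts + 1):
        try:
            return store.upload_part(upload_id, number, chunk)
        except ConnectionError:
            if attempt == attempts:
                raise
    raise ValueError("attempts must be at least 1")


def multipart_upload(store: InMemoryObjectStore, key: str,
                     data: bytes, part_size: int) -> None:
    upload_id = store.initiate(key)
    checksums: Dict[int, str] = {}
    # Parts are sent in reverse order here only to show that they are
    # independent; the store reassembles them by part number.
    for number, chunk in reversed(list(split_parts(data, part_size))):
        checksums[number] = upload_with_retry(store, upload_id, number, chunk)
    store.complete(upload_id, key, checksums)


if __name__ == "__main__":
    store = InMemoryObjectStore()
    payload = bytes(range(256)) * 100
    multipart_upload(store, "backup.bin", payload, part_size=4096)
    assert store.objects["backup.bin"] == payload
```

The point of the sketch is that a dropped connection costs one part, not the whole file: only the failed part is re-sent, and the parts could just as well be uploaded in parallel.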

Question: what other techniques can be used to optimize large file upload? 8/8
