Compilation thread of various Keras tips ⬇️⬇️⬇️

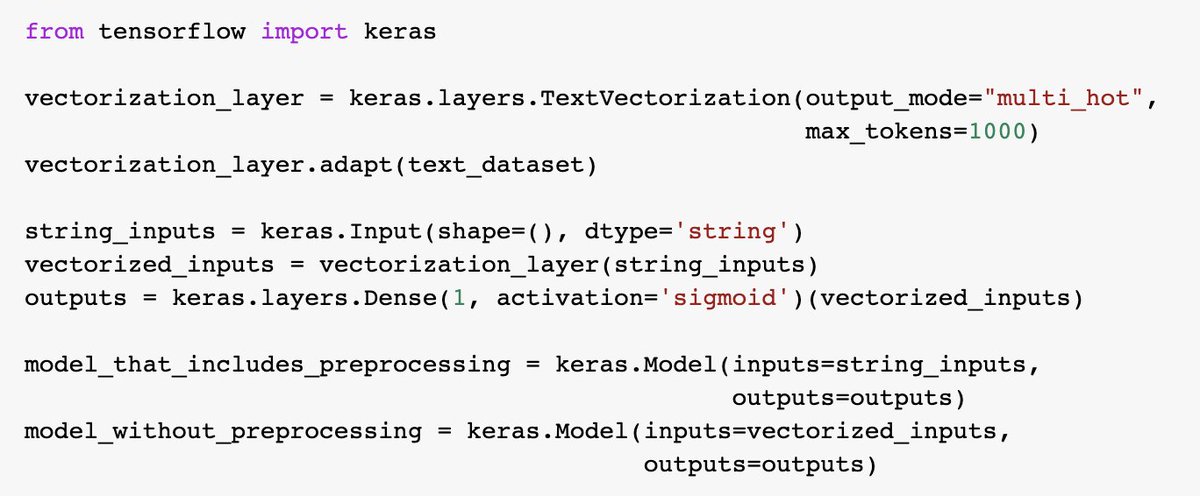

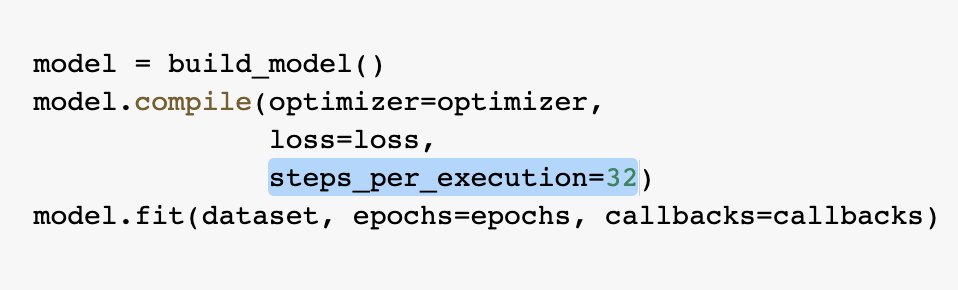

Tip #1: when building models with the Functional API (keras.io), you can use an intermediate output as an entry point to define a new Model.

This is especially useful to create pairs of models where one includes preprocessing (e.g. for inference), and one does not.
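
A minimal sketch of that pattern, under some assumptions: the input shape, the layer sizes, and the names `inference_model` / `training_model` are made up for illustration, and a `Rescaling` layer stands in for whatever preprocessing you actually use.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers

# Raw inputs, e.g. pixel values in [0, 255] (the shape is an illustrative assumption).
raw_inputs = keras.Input(shape=(32, 32, 3), name="raw_images")

# Preprocessing step: scale pixel values to [0, 1].
preprocessed = layers.Rescaling(1.0 / 255)(raw_inputs)

# The "core" of the model, built on top of the preprocessed tensor.
x = layers.Conv2D(8, 3, activation="relu")(preprocessed)
x = layers.GlobalAveragePooling2D()(x)
outputs = layers.Dense(10, activation="softmax")(x)

# Model that includes preprocessing: feed it raw data (e.g. at inference time).
inference_model = keras.Model(raw_inputs, outputs)

# Model that skips preprocessing: the intermediate tensor is its entry point.
training_model = keras.Model(preprocessed, outputs)

# Both models reuse the same layers, so they should agree once the input
# to the second one has been scaled by hand.
raw_batch = np.random.default_rng(0).integers(0, 256, size=(2, 32, 32, 3)).astype("float32")
preds_from_raw = inference_model.predict(raw_batch, verbose=0)
preds_from_scaled = training_model.predict(raw_batch * (1.0 / 255), verbose=0)
```

Because the two models are built from the same layer objects, training one also updates the weights the other uses.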

@amit_amola See tip #8

Tip #9: use TFRecords for efficient data storage and loading. Once you've converted your data to the TFRecords format and stored it in the cloud, it becomes easy to stream it to your model, e.g. via `tf.data.TFRecordDataset`.
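
A rough sketch of the round trip, assuming toy data: the feature names (`features`, `label`), the shapes, and the file name are hypothetical, and the file is written to a local temp directory here rather than to cloud storage.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf

# Toy data standing in for a real dataset (sizes are illustrative assumptions).
rng = np.random.default_rng(0)
xs = rng.random((6, 4)).astype("float32")
ys = rng.integers(0, 2, size=6)


def serialize_example(features, label):
    """Pack one (features, label) pair into a serialized tf.train.Example."""
    example = tf.train.Example(
        features=tf.train.Features(
            feature={
                "features": tf.train.Feature(
                    float_list=tf.train.FloatList(value=features)
                ),
                "label": tf.train.Feature(
                    int64_list=tf.train.Int64List(value=[label])
                ),
            }
        )
    )
    return example.SerializeToString()


# Write the records. A local temp file is used here; the path is hypothetical.
path = os.path.join(tempfile.mkdtemp(), "toy.tfrecord")
with tf.io.TFRecordWriter(path) as writer:
    for x, y in zip(xs, ys):
        writer.write(serialize_example(x.tolist(), int(y)))

# Schema used to decode each record back into tensors.
feature_spec = {
    "features": tf.io.FixedLenFeature([4], tf.float32),
    "label": tf.io.FixedLenFeature([], tf.int64),
}


def parse(record):
    parsed = tf.io.parse_single_example(record, feature_spec)
    return parsed["features"], parsed["label"]


# Stream the file: decode, batch, and prefetch.
dataset = (
    tf.data.TFRecordDataset(path)
    .map(parse, num_parallel_calls=tf.data.AUTOTUNE)
    .batch(2)
    .prefetch(tf.data.AUTOTUNE)
)
batches = list(dataset.as_numpy_iterator())
```

A dataset built this way yields `(features, label)` batches, so it can be passed directly to `model.fit(dataset)`.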
