Do you still use standard ML evaluation metrics to convince your client (or boss) that your ML model works?

Good luck 😛

There are more convincing ways to test ML models in the real world.

Let's see what these are in this mega 🧵

#machinelearning

#freelancing

#datascience

The problem with standard ML evaluation metrics

We, data scientists and ML engineers, develop and test ML models in our local development environment, for example, a Jupyter notebook.

We use standard ML evaluation metrics depending on the kind of problem we are trying to solve:

For example,

• If it is a regression problem we print things like mean squared errors, Huber losses, etc.

• If it is a classification problem we print confusion matrices, accuracies, precision, recall, etc.
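For reference, these are the kinds of numbers we usually print. A minimal sketch in plain Python, with no ML library assumed; the function names and the toy labels are made up for illustration.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def confusion_counts(y_true, y_pred):
    """Count each (true_label, predicted_label) pair."""
    return Counter(zip(y_true, y_pred))


def mean_squared_error(y_true, y_pred):
    """Average squared difference between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Toy classification labels (hypothetical)
labels = ["up", "down", "same", "up"]
predictions = ["up", "down", "up", "up"]
print(accuracy(labels, predictions))
print(confusion_counts(labels, predictions))

# Toy regression targets (hypothetical)
print(mean_squared_error([1.0, 2.0, 3.0], [1.0, 2.0, 5.0]))
```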

The problem is that these numbers mean almost nothing to the non-ML folks around us, including the people who call the shots and decide which pieces of software, our ML models among them, make it into production.

Why don't these metrics convince them?

Two reasons:

1. These metrics are abstract. They are not business metrics.

2. There is no guarantee that, once deployed, your ML model will perform as your standard metrics suggest, because many things can go wrong in production.

Ultimately, to test ML models you need to run them in production and monitor their performance.

However, it is far from optimal to follow a strategy where models are directly moved from a Jupyter notebook to production.

The question is then,

How can we safely walk the path from local standard metrics to production?

There are at least 3 things you can do before jumping straight into production:

• Backtesting your model

• Shadow deploying your model

• A/B testing your model

They represent incremental steps towards a proper evaluation of the model and can help you and the team safely deploy ML models and add incremental value to the business.

Let’s see how these evaluation methods work, with an example.

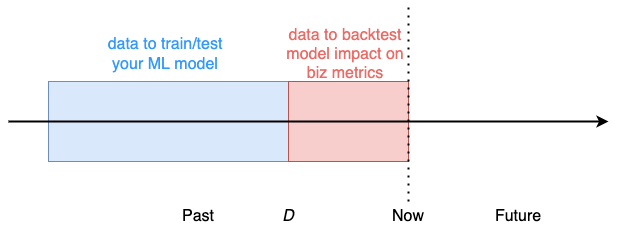

🌟 Method #1: Backtesting your ML model

Backtesting is an inexpensive way to evaluate your ML model that you can implement in your development environment.

Why inexpensive?

Because

• You only use historical data, so you do not need more data than what you already have.

• You do not need to go through a deployment process, which can take time and several iterations to get right.

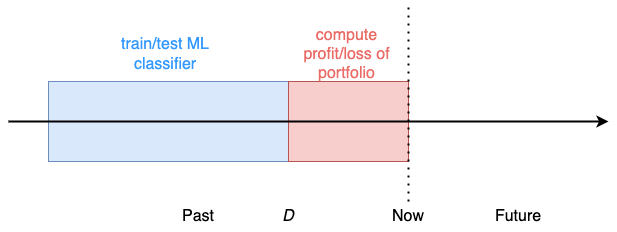

For example, imagine you work in a financial trading firm as an ML developer.

The firm manages a portfolio of investments in stocks, bonds, crypto, and commodities.

Your boss comes one day and says:

"Can we develop an ML-based strategy to improve the portfolio returns?"

To which you answer: "Sure!"

Given the tons of historical price data for these assets, you will train an ML model to predict price changes for each asset, and use these predictions to adjust the portfolio composition every day.

The ML model is a 3-class classifier, where

• The target is `up` if the next day’s price is higher than today’s, `same` if it stays very close, and `down` if it is lower.

• The features are static, like asset type, and behavioral, like historical prices and volatilities.

You develop the model in your local environment and you print standard classification metrics, for example, accuracy.

For the sake of simplicity, let’s assume the 3 classes are perfectly balanced in your test set, meaning 33.333% for each of the classes `up`, `same`, `down`.

And your test accuracy is `34%` !!! 🎉

Predicting financial market movements is hard, and your model accuracy is above the `33%` accuracy you get if you always predict the same class.
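To make that comparison concrete, here is a small sketch of the "always predict the same class" baseline. The test set below is synthetic and perfectly balanced, as assumed in the example, and `majority_class_accuracy` is a name chosen for illustration.

```python
from collections import Counter


def majority_class_accuracy(y_true):
    """Accuracy of a dummy model that always predicts the most frequent class."""
    most_common_count = Counter(y_true).most_common(1)[0][1]
    return most_common_count / len(y_true)


# Synthetic, perfectly balanced test set with the 3 classes from the example
y_test = ["up", "same", "down"] * 100

baseline = majority_class_accuracy(y_test)
model_accuracy = 0.34  # the test accuracy quoted in the example

print(f"baseline={baseline:.3f} model={model_accuracy:.3f} "
      f"lift={model_accuracy - baseline:.3f}")
```

The lift over the baseline is well under one percentage point, which is one reason the raw accuracy alone is a weak argument.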

Things look promising, and you tell your manager to start using the model right away.

Your manager, a non-ML person who has been in this industry for a while, looks at the number and asks:

“Are you sure the model works? Will it make more money than the current strategies?”

This is probably not the answer you expected, but sadly, it is one of the most common ones. When you show such metrics to non-ML people who call the shots in the company, you will often get a NO.

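You need to go one step further and show that your model will generate more profit. This is what a backtest does: you replay a past period, use the model's predictions to adjust the portfolio each day, and compute the profit the portfolio would have made.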
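A backtest can be sketched in a few lines. Everything here is an assumption made for illustration: the trading rule (go long on `up`, short on `down`, stay out on `same`, close the position the next day), the toy prices, and `momentum_model`, which is only a stand-in for the trained classifier.

```python
def backtest(prices, predict, units=1.0):
    """Replay historical prices and return the hypothetical profit.

    prices:  dict mapping asset name -> list of daily closing prices
    predict: function (asset, price_history) -> "up" | "same" | "down"
    """
    profit = 0.0
    for asset, series in prices.items():
        for day in range(1, len(series) - 1):
            # The model only sees prices up to and including `day`
            signal = predict(asset, series[: day + 1])
            change = series[day + 1] - series[day]
            if signal == "up":
                profit += units * change  # long position gains when price rises
            elif signal == "down":
                profit -= units * change  # short position gains when price falls
    return profit


def momentum_model(asset, history):
    """Stand-in for the trained classifier: repeats yesterday's move."""
    if history[-1] > history[-2]:
        return "up"
    if history[-1] < history[-2]:
        return "down"
    return "same"


# Toy price history (hypothetical numbers)
toy_prices = {
    "stock_a": [100, 101, 103, 104, 106],
    "bond_b": [50, 50, 49, 48, 48],
}
print(backtest(toy_prices, momentum_model))
```

The important detail is the slice `series[: day + 1]`: the model must never see prices from the future, otherwise the backtest overstates the profit. A real backtest would also account for transaction costs, which this sketch ignores.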

If your backtest shows negative results, meaning your portfolio would have generated a loss, you go back to square one.

On the contrary, if the profit of the portfolio in the backtest period is positive, you go back to your manager:

You: “The backtest showed a positive result, let’s start using the model”

To which she answers:

“Let’s go step by step. Let’s first deploy it and make sure it actually works in our production environment.”

And this leads to our next evaluation step.

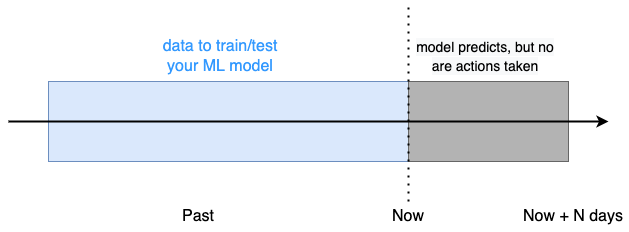

🌟 Method #2: Shadow deployment in production

ML models are very fragile to small differences between the data used to train them and the data sent to the model at inference time.

For example, if you have a feature in your model that:

• had almost no missing values in your training data, but

• is almost always unavailable (and hence missing) at inference time

then your model's performance at inference time will deteriorate and be worse than you expected.
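One cheap way to catch this kind of mismatch is to compare the share of missing values per feature in the training data and in the data seen at inference time. A minimal sketch, assuming each row is a plain dict; the feature names, the toy rows, and the `tolerance` threshold are arbitrary choices for illustration.

```python
def missing_rate(rows, feature):
    """Share of rows where `feature` is absent or None."""
    return sum(row.get(feature) is None for row in rows) / len(rows)


def missing_rate_drift(train_rows, live_rows, features, tolerance=0.10):
    """Return the features whose missing rate in live data exceeds the
    training missing rate by more than `tolerance`."""
    drifted = {}
    for feature in features:
        train_rate = missing_rate(train_rows, feature)
        live_rate = missing_rate(live_rows, feature)
        if live_rate - train_rate > tolerance:
            drifted[feature] = (train_rate, live_rate)
    return drifted


# Toy data: `volatility` is almost always present in training,
# but almost always missing at inference time
train = [{"volatility": 0.2, "asset_type": "stock"}] * 99 + [{"asset_type": "stock"}]
live = [{"asset_type": "stock"}] * 9 + [{"volatility": 0.3, "asset_type": "stock"}]

print(missing_rate_drift(train, live, ["volatility", "asset_type"]))
```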

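This is why you first deploy the model in shadow mode: it runs in production and makes predictions on live data, but nobody acts on them. After N days, you look at the model's predictions and at what the portfolio profit would have been if you had used the model to take action.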
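The idea of a shadow deployment can be sketched as a thin wrapper that logs what the model would have done and later scores those logged decisions against realized prices. The class name, the trading rule (1 unit long on `up`, short on `down`, nothing on `same`), and the toy prices are all assumptions for illustration.

```python
class ShadowLogger:
    """Runs a candidate model on live data without acting on its output."""

    def __init__(self, model):
        self.model = model
        self.records = []

    def predict_and_log(self, asset, history):
        """Called by the production pipeline; no trade is placed on this signal."""
        signal = self.model(asset, history)
        self.records.append({"asset": asset, "signal": signal, "price": history[-1]})
        return signal

    def hypothetical_profit(self, next_prices, units=1.0):
        """Profit the logged signals would have produced.

        next_prices: one realized next-day price per logged record, in order.
        """
        direction = {"up": 1, "same": 0, "down": -1}
        return sum(
            units * direction[record["signal"]] * (next_price - record["price"])
            for record, next_price in zip(self.records, next_prices)
        )


# Stand-in model: "up" if the last price is at least the first one, else "down"
shadow = ShadowLogger(lambda asset, history: "up" if history[-1] >= history[0] else "down")
shadow.predict_and_log("stock_a", [100, 102])
shadow.predict_and_log("bond_b", [50, 49])

# Realized next-day prices (hypothetical), collected once they are known
print(shadow.hypothetical_profit(next_prices=[103, 48]))
```

Because the logged inputs come from the production pipeline, this also exercises the real data path, which a backtest on historical data cannot do.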

If the hypothetical performance is negative (i.e. a loss), you need to go back to your model and try to understand what went wrong:

• was the data sent to the model very different from the training data? Were any features missing?

• was the backtest period a very calm and predictable one, while today’s market conditions are very different?

• is there a bug in the backtest you ran previously?

If the hypothetical profit is positive, you get another sign your model is working. So you go back to your boss on Friday and say:

“The model would have generated profit this week if we had been using it. Let’s start using it, come on”.

To which she replies,

“Didn’t you see this week’s performance of our portfolio? It was incredibly good. Was your model even better or worse?”

You spent the whole week so focused on your live test that you forgot to check the actual performance.

Now, you look at the two numbers:

• the actual portfolio performance of the week

• and the hypothetical performance for your model

and you see that your number is slightly above the actual performance.

This is great news for you! So you rush back to your manager and tell her the good news.

And this is what she responds:

“Let’s run an A/B test next week to make sure this ML model is better than what we have right now”

You are now on the verge of exploding:

“What else do you need to see to believe this ML model is better?”

And she says:

“Actual money” 💰

You call it a week and take a well-deserved 2-day rest.

You will need to take one more step to convince her...

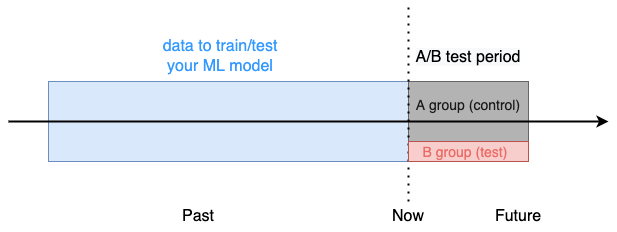

🌟 Method #3: A/B testing your model

So far, all your evaluations have been either

• too abstract, like the `34%` accuracy

• or hypothetical. Both the backtesting and the shadow deployment produced no actual money. You estimated the profit instead.

You need to compare actual dollars versus actual dollars to decide whether you should use your new ML-based strategy instead. This is the ultimate way to test ML models, one that no one can refute.

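And to do so you decide to run an A/B test from Monday to Friday. You split the portfolio into 2 sub-portfolios: one stays under the current strategy (the status quo), and the other is managed by your ML-based strategy.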

Every day you monitor the actual profit of each of the 2 sub-portfolios and on Friday you stop the test.
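The daily monitoring can be as simple as the sketch below. One design choice worth flagging: if the 2 sub-portfolios are not the same size, raw dollars are not directly comparable, so this sketch also reports profit relative to the capital allocated to each arm. The arm names, capital split, and daily profits are made-up numbers for illustration.

```python
def ab_summary(daily_profits, capital):
    """Aggregate an A/B test over its whole duration.

    daily_profits: dict arm -> list of daily profits in dollars
    capital:       dict arm -> dollars allocated to that arm at the start
    """
    summary = {}
    for arm, profits in daily_profits.items():
        total = sum(profits)
        summary[arm] = {"profit": total, "return": total / capital[arm]}
    return summary


# Hypothetical week, Monday to Friday
week = {
    "status_quo": [1200.0, -300.0, 500.0, 800.0, -200.0],
    "ml_strategy": [150.0, 20.0, -40.0, 90.0, 30.0],
}
capital = {"status_quo": 900_000.0, "ml_strategy": 100_000.0}

summary = ab_summary(week, capital)
for arm, stats in summary.items():
    print(f"{arm}: profit=${stats['profit']:.0f} return={stats['return']:.4%}")
```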

When you compare the aggregate profit of your ML-based system vs the status quo, 3 things might happen.

Scenario 1:

The status quo performed much better than your ML system. In this case, you will have a hard time convincing your manager that your strategy should stay alive.

Scenario 2:

Both sub-portfolios performed very similarly, which might lead your manager to extend the test for another week to see any significant differences.
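One way to put a number on "significant" is a permutation test on the daily results of the 2 sub-portfolios. This is a technique swapped in here for illustration, not something the scenario prescribes, and with only 5 days per arm it has very little power, which is exactly why extending the test makes sense. The function name and defaults are assumptions.

```python
import random


def permutation_p_value(a, b, n_permutations=10_000, seed=0):
    """Two-sided permutation test on the difference in means of a and b.

    Returns the share of random relabelings whose absolute difference in
    means is at least as large as the observed one.
    """
    rng = random.Random(seed)
    observed = abs(sum(a) / len(a) - sum(b) / len(b))
    pooled = list(a) + list(b)
    hits = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        left, right = pooled[: len(a)], pooled[len(a):]
        if abs(sum(left) / len(left) - sum(right) / len(right)) >= observed:
            hits += 1
    return hits / n_permutations


# Hypothetical daily returns (in basis points) for one week
status_quo = [13, -3, 6, 9, -2]
ml_strategy = [15, 2, -4, 9, 3]
print(permutation_p_value(status_quo, ml_strategy))
```

A large p-value means the week's difference could easily be noise, so more days of data are needed before drawing a conclusion.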

Scenario 3:

Your ML system outperformed the status quo. In this case, you have everything on your side to convince everyone in the company that your model works better than the status quo, and should be used for at least 10% of the total assets, if not more.

In this case, a prudent approach would be to progressively increase the percentage of assets managed under this ML-based strategy, monitoring performance week by week.

After 3 long weeks of ups and downs, you finally get an evaluation metric that can convince everyone (including you) that your model adds value to the business.

Good job!

Wrapping it up

Next time you find it hard to convince people around you that your ML models work, remember the 3 strategies you can use to test ML models, from least to most convincing:

• Backtesting

• Shadow deployment in production

• A/B testing

The path from ML development to production can be rocky and discouraging, especially in smaller companies and startups that do not have reliable A/B testing systems in place.

It is sometimes tedious to test ML models, but it is worth the hassle.

Believe me, if you use real-world evaluation metrics to test ML models, you will succeed.

If you want to read more about real-world ML and freelancing, follow me on Twitter and subscribe to my newsletter:

datamachines.xyz
