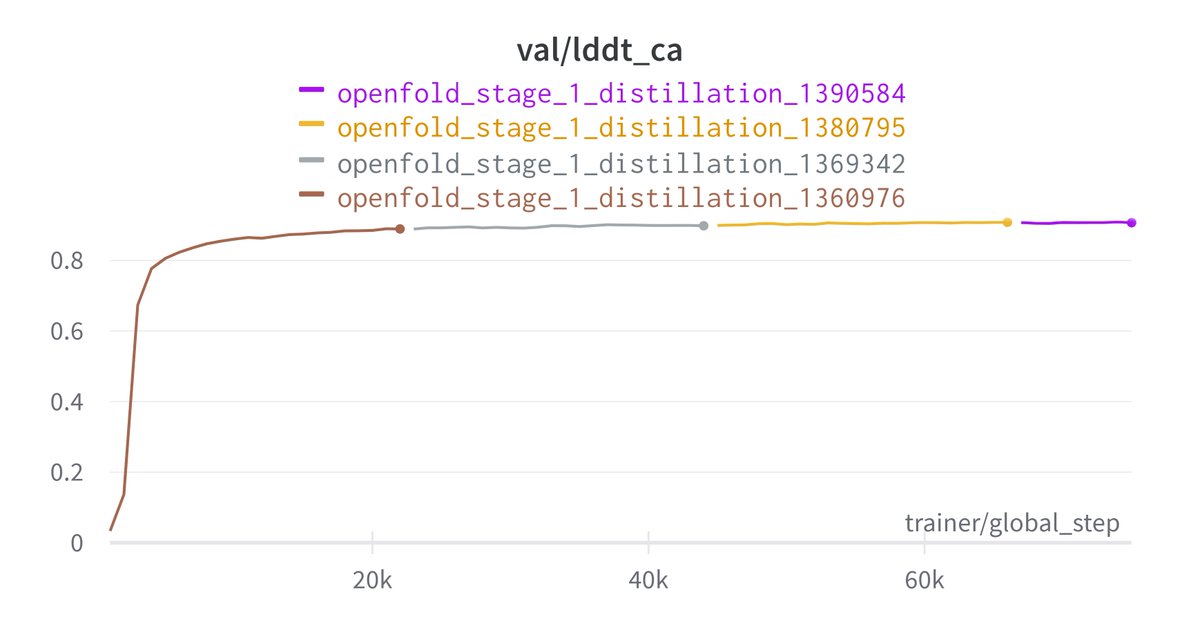

We have successfully trained OpenFold, our trainable PyTorch implementation of AlphaFold2, from scratch. The new OpenFold (OF) slightly outperforms AlphaFold2 (AF2). I believe this is the first publicly available reproduction of AF2. We learned a lot. A🧵1/12

First off: model weights, training code, and a Colab notebook are here: github.com. We are also making available a training set of 400K unique MSAs & predicted structures for self-distillation. These live in the Registry of Open Data on AWS: registry.opendata.aws 2/12

github.com/aqlaboratory/o…

GitHub - aqlaboratory/openfold: Trainable, memory-efficient, and GPU-friendly PyTorch reproduction of AlphaFold 2


registry.opendata.aws/openfold

OpenFold Training Data - Registry of Open Data on AWS


Our PyTorch implementation has some advantages over the publicly available JAX implementation from DeepMind, beyond the obvious one of being trainable. 5/12

1st is speed: OF inference is up to 2x faster on short proteins, even when excluding JAX compilation time. On longer proteins the advantage lessens, until AF2 begins to run out of memory (OOM; see 2nd point). Inference speed is key when coupled with fast MSA schemes like MMseqs2 6/12

2nd is memory: we use less thanks to optimizations and custom CUDA kernels, enabling inference on much longer sequences. In general we can handle up to ~4,600 residues on a 40GB A100, and we believe we can optimize further. 7/12
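To give a rough sense of why sequence length is the limiting factor, here is a back-of-the-envelope sketch in Python. It is not OpenFold code and does not model OpenFold's actual memory use. It assumes a single dense activation of shape L x L x C with C = 128 channels (the pair-representation width described for AlphaFold2) stored in 32-bit floats; the function name `pair_activation_gib` is hypothetical.

```python
def pair_activation_gib(num_residues: int, channels: int = 128,
                        bytes_per_value: int = 4) -> float:
    """Size in GiB of one dense L x L x C tensor.

    Assumptions (illustrative only): 128 channels and float32 storage.
    Real models hold many such tensors plus attention intermediates,
    so actual peak memory is considerably higher than this figure.
    """
    total_bytes = num_residues ** 2 * channels * bytes_per_value
    return total_bytes / 2 ** 30


if __name__ == "__main__":
    # Memory grows quadratically: doubling the length quadruples the size.
    for length in (500, 1000, 2000, 4600):
        size = pair_activation_gib(length)
        print(f"{length:>5} residues: {size:6.2f} GiB per pair-shaped tensor")
```

Under these assumptions a single pair-shaped tensor at 4,600 residues already takes roughly a quarter of a 40GB card, which suggests why chunking and memory-efficient kernels matter for long sequences.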

Preprint coming soon, with more details about what we learned during training and lots of ablation studies. 8/12

This was a big effort within the lab and with many external collaborators. Internally, credit goes to the OF team led by @gahdritz (w/ @SachinKadyan99, Luna Xia, Will Gerecke) and co-advised by @NazimBouatta and me. 9/12

Externally, our collaborators at @nyuniversity (@dabkiel1), @ArzedaCo (Andrew Ban), @cyrusbiotech (@lucas_nivon), @nvidiahealth (@ItsRor, Abe Stern, Venkatesh Mysore, Marta Stepniewska-Dziubinska and Arkadiusz Nowaczynski), ... 10/12

… @OutpaceBio (@BrianWeitzner) and @PrescientDesign (@amw_stanford, @RichBonneauNYU) were pivotal in getting this off the ground and making it a reality. Thank you all! 11/12

This is far from the end of our OpenFold efforts; in fact it is only the beginning. Stay tuned for an exciting announcement soon! 12/12

Some folks I mindlessly forgot to acknowledge: @milot_mirdita, @thesteinegger, and @sokrypton have been incredibly helpful with working out MSA/MMseqs2 issues and providing early feedback on OpenFold.
