One of my fave chapters of "Practical Deep Learning for Coders", co-written with @GuggerSylvain, is chapter 8. I've just made the whole thing available as an executable notebook on Kaggle!

It covers a lot of critical @PyTorch & deep learning principles 🧵

kaggle.com

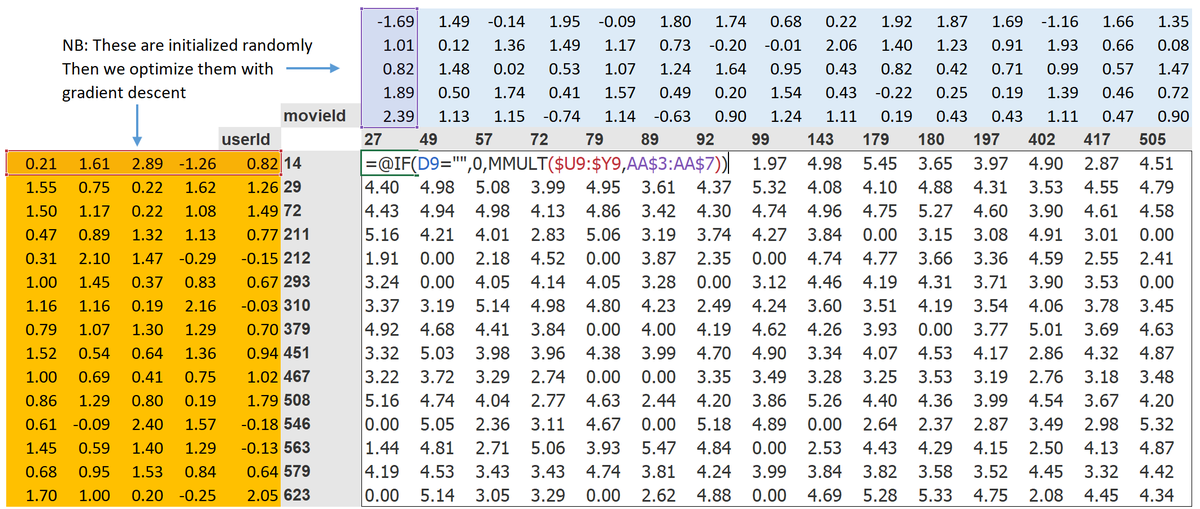

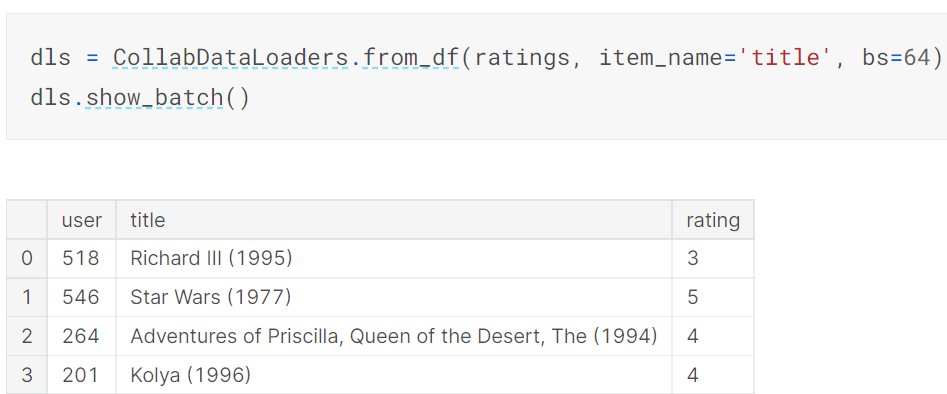

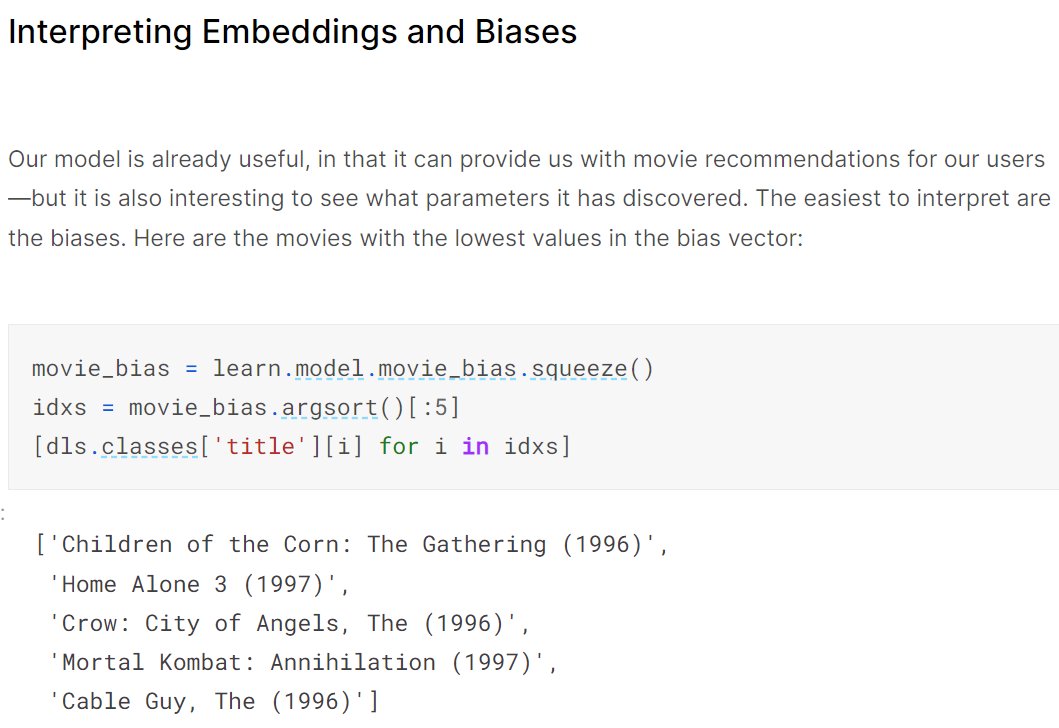

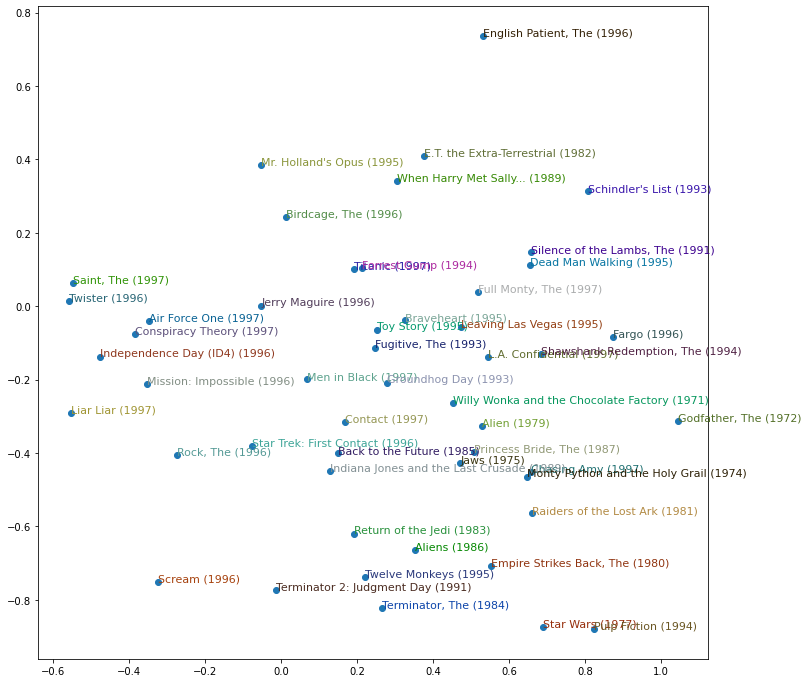

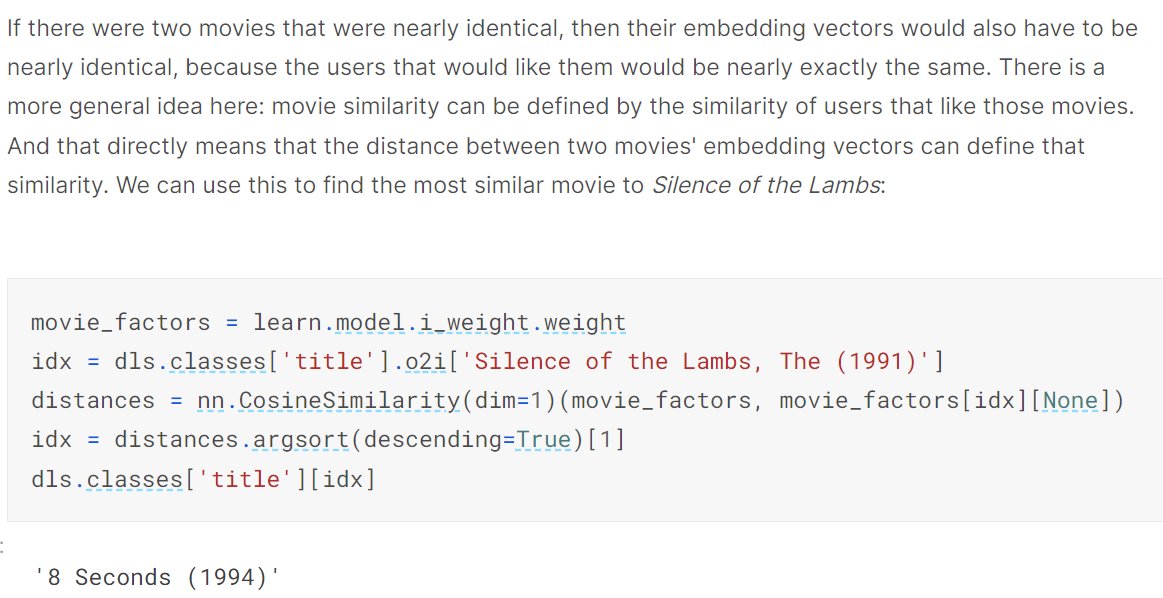

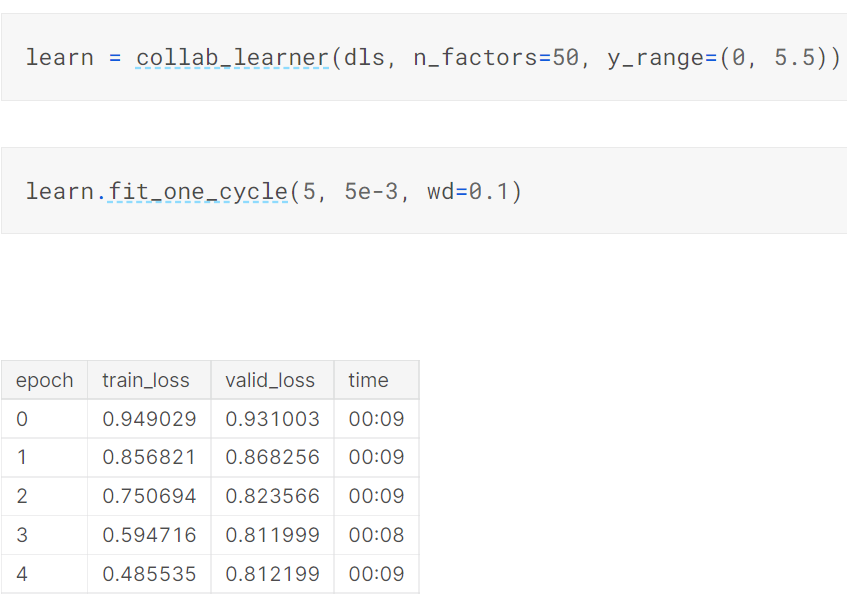

We can calculate those latent factors using stochastic gradient descent. First, we construct the data loaders we need using the appropriate @fastdotai application:

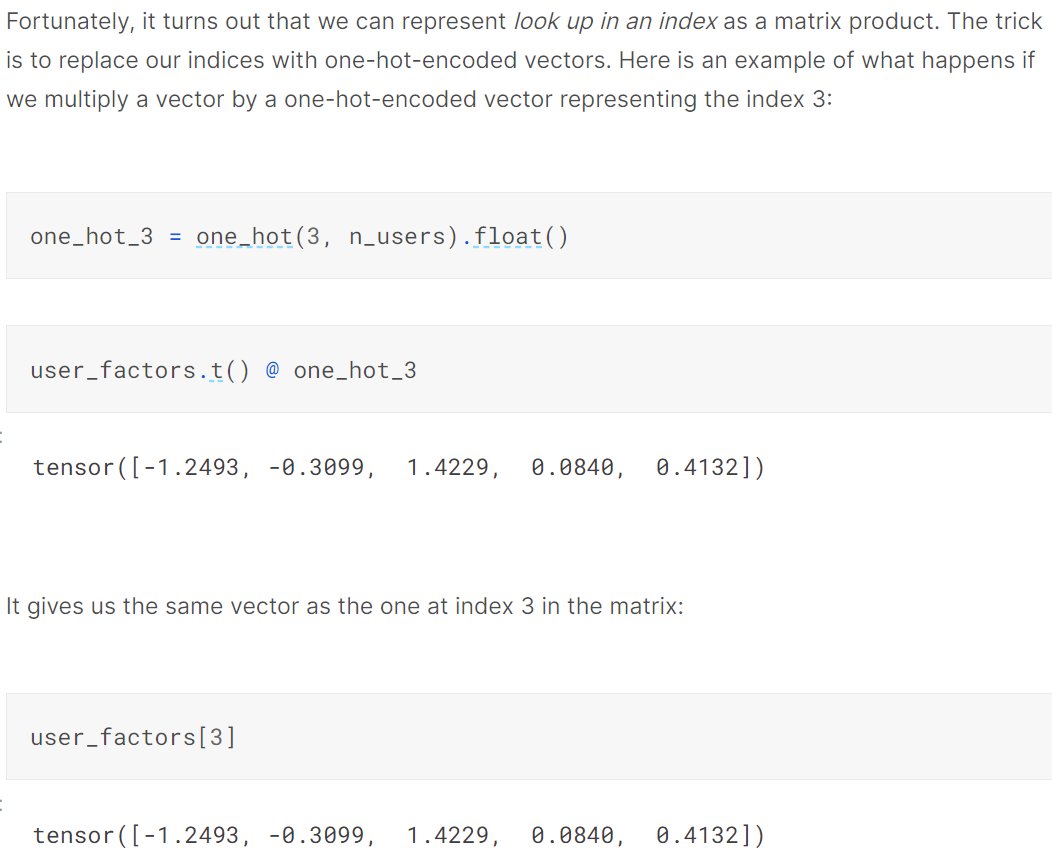
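
To make the "learn the latent factors with SGD" idea concrete, here is a minimal sketch in plain PyTorch. It is not the notebook's code: the tiny ratings table, the sizes, the learning rate and the helper names (`predict`, `mse`) are all made up for illustration, and it uses hand-rolled mini-batches rather than fastai data loaders.

```python
import torch

torch.manual_seed(0)

# Hypothetical toy data: which user rated which movie, and the rating given.
users = torch.tensor([0, 0, 1, 1, 2, 2])
movies = torch.tensor([0, 1, 0, 2, 1, 2])
targets = torch.tensor([5.0, 1.0, 4.0, 2.0, 5.0, 4.0])

n_users, n_movies, n_factors = 3, 3, 2  # illustrative sizes

# Randomly initialised latent factors; these are the parameters SGD will learn.
user_factors = (torch.randn(n_users, n_factors) * 0.1).requires_grad_()
movie_factors = (torch.randn(n_movies, n_factors) * 0.1).requires_grad_()

def predict(u, m):
    # Predicted rating = dot product of the user's and the movie's factors.
    return (user_factors[u] * movie_factors[m]).sum(dim=1)

def mse(idx):
    # Mean squared error over the ratings selected by idx.
    return ((predict(users[idx], movies[idx]) - targets[idx]) ** 2).mean()

all_idx = torch.arange(len(targets))
initial_loss = mse(all_idx).item()

lr, batch_size = 0.02, 2
for epoch in range(500):
    perm = torch.randperm(len(targets))  # reshuffle the ratings every epoch
    for start in range(0, len(targets), batch_size):
        idx = perm[start:start + batch_size]
        loss = mse(idx)
        loss.backward()
        with torch.no_grad():
            for p in (user_factors, movie_factors):
                p -= lr * p.grad  # plain SGD step
                p.grad.zero_()

final_loss = mse(all_idx).item()
print(f"loss before: {initial_loss:.3f}, after: {final_loss:.3f}")
```

In practice the data loaders handle the shuffling and batching that the inner loop does by hand here.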

This trick of doing an array index lookup, which is identical to multiplying by a 1-hot encoded vector, is known as an "Embedding".

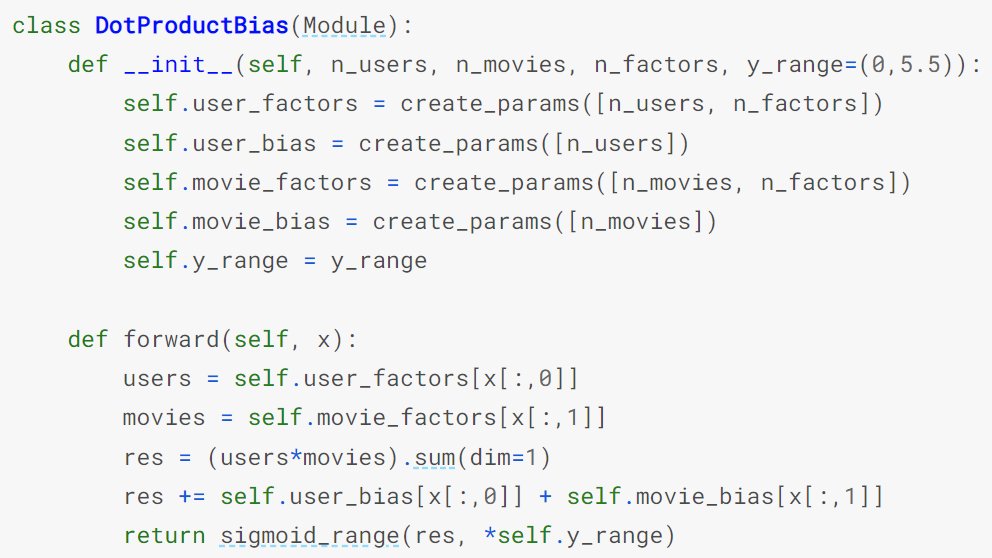
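
A quick way to convince yourself of that equivalence is to compute the result both ways and compare. The sketch below uses a random factor matrix and arbitrary indices chosen only for illustration:

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)

n_users, n_factors = 5, 3  # illustrative sizes
user_factors = torch.randn(n_users, n_factors)
idx = torch.tensor([3, 0, 3])  # arbitrary user indices

# Route 1: one-hot encode the indices, then matrix-multiply.
one_hot = F.one_hot(idx, num_classes=n_users).float()
via_matmul = one_hot @ user_factors

# Route 2: plain array index lookup.
via_index = user_factors[idx]

# Route 3: an nn.Embedding layer wrapping the same weights.
emb = torch.nn.Embedding.from_pretrained(user_factors)
via_embedding = emb(idx)

same = torch.allclose(via_matmul, via_index) and torch.allclose(via_index, via_embedding)
print(same)
```

The lookup gives the same rows as the matrix product, but it never has to build the one-hot matrix, which is why it is so much cheaper when there are many users or movies.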

We can create a complete collaborative filtering model in @PyTorch from scratch using this trick:
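
Here is a minimal sketch of what such a model can look like in plain PyTorch. The class name `DotProduct`, the argument names and the sizes are illustrative choices, and the notebook's own version may differ in its details. It assumes each input row holds a user index followed by a movie index.

```python
import torch
from torch import nn

class DotProduct(nn.Module):
    """Predict a rating as the dot product of user and movie embeddings."""

    def __init__(self, n_users, n_movies, n_factors):
        super().__init__()
        self.user_factors = nn.Embedding(n_users, n_factors)
        self.movie_factors = nn.Embedding(n_movies, n_factors)

    def forward(self, x):
        # x has shape (batch, 2): column 0 is the user index, column 1 the movie index.
        users = self.user_factors(x[:, 0])
        movies = self.movie_factors(x[:, 1])
        return (users * movies).sum(dim=1)

torch.manual_seed(0)
model = DotProduct(n_users=4, n_movies=6, n_factors=5)  # illustrative sizes
batch = torch.tensor([[0, 2], [3, 5], [1, 1]])          # made-up (user, movie) pairs
preds = model(batch)
print(preds.shape)
```

Because the embeddings are ordinary `nn.Module` parameters, any standard optimizer and a mean-squared-error loss can train this model.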

There's lots more covered in the full chapter, so please do check it out -- and be sure to try executing it yourself so you can do your own experiments:

kaggle.com

If you'd like to order a copy of the book yourself, here it is:

amazon.com

Chapter 1 of the book is also available on @kaggle.

(Oh and BTW, if you like my notebooks, please upvote them on Kaggle, because it helps others find them, and motivates me to do more because I know they're appreciated!)

kaggle.com
