Are you a data scientist using CSV files to store your data?

What if I told you there is a better way?

Can you imagine a

-> lighter 🦋

-> faster 🏎️

-> cheaper 💸

file format to save your datasets?

Read this thread so you don't need to imagine anymore 👇🏾

Do not get me wrong. I love CSVs.

You can open them with any text editor, inspect them and share them with others.

They have become the standard file format for datasets in the AI/ML community.

However, they have a little problem...

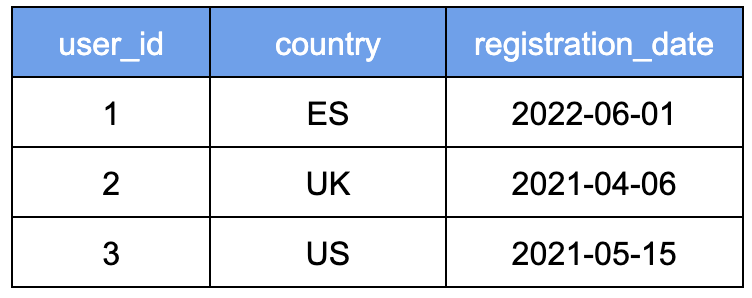

CSV files are stored as a list of rows (aka row-oriented), which causes 2 problems:

- they are slow to query --> even a SQL query that needs a single column has to read every row in full.

- they are difficult to store efficiently --> CSV files take a lot of disk space.
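
To make "row-oriented" concrete, here is a toy sketch in plain Python. The records and field names are made up, and real file formats are far more involved; the only point is which values sit next to each other.

```python
# Toy illustration (not the actual on-disk layout of CSV or Parquet):
# the same three records stored row by row and column by column.

# Row-oriented: each record keeps all of its fields together, like a CSV line.
rows = [
    ("2023-01-01", "books", 12.5),
    ("2023-01-02", "games", 40.0),
    ("2023-01-03", "books", 7.5),
]

# Column-oriented: each field keeps all of its values together.
columns = {
    "date": ["2023-01-01", "2023-01-02", "2023-01-03"],
    "category": ["books", "games", "books"],
    "price": [12.5, 40.0, 7.5],
}

# Summing prices in the row layout means walking every full record...
total_from_rows = sum(price for _date, _category, price in rows)

# ...while the column layout only touches the one list it needs.
total_from_columns = sum(columns["price"])

print(total_from_rows, total_from_columns)  # 60.0 60.0
```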

Is there an alternative to CSVs?

Yes!

Welcome Parquet 🤗

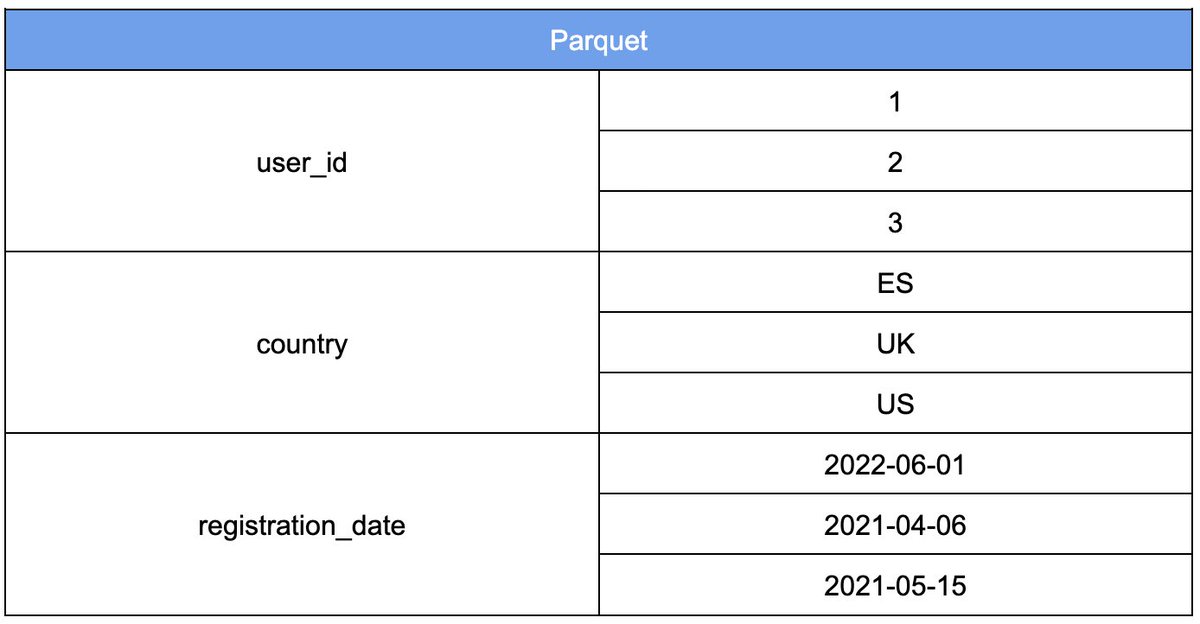

As an alternative to the CSV row-oriented format, we have a column-oriented format: Parquet.

Apache Parquet is an open-source file format for storing data, released under the Apache License 2.0.

Data engineers are used to Parquet. But, sadly, data scientists are still lagging behind.

Why is column storage better than row storage?

2 technical reasons and 1 business reason.

Tech reason #1: Parquet files are much smaller than CSV

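Parquet compresses data column by column, using encodings that suit each column's data type, e.g. integer, string, date. Values in the same column tend to be similar, so they compress far better than a mix of fields in a row.

How much you save depends on your data, but a 1TB CSV file can become a Parquet file of around 100GB (10% of the original size).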

Tech reason #2: Parquet files are much faster to query

Columnar data can be scanned and extracted much faster.

For example, an SQL query that selects and aggregates a subset of columns does not need to scan the other columns.

This reduces I/O and results in faster queries.

Business reason #3: Parquet files are cheaper.

Cloud providers charge you by data size: storage services like AWS S3 or Google Cloud Storage bill for the bytes you store, and query engines like Amazon Athena or Google BigQuery bill for the bytes each query scans.

Parquet files are lighter and faster to scan, which means you can store and query the same data at a fraction of the cost.

And now the cherry on top of the cake 🍰...

Working with Parquet files in Pandas is as easy as working with CSVs

🥳

I hope you've found this thread helpful.

If you wanna get more real-world ML content, follow me at @paulabartabajo_

And Retweet the first tweet below 👇🏽

If you wanna get more real-world ML and freelancing content, subscribe to my newsletter by clicking on this link.

👉🏾datamachines.xyz
