For more detailed insight, check out my article where I:

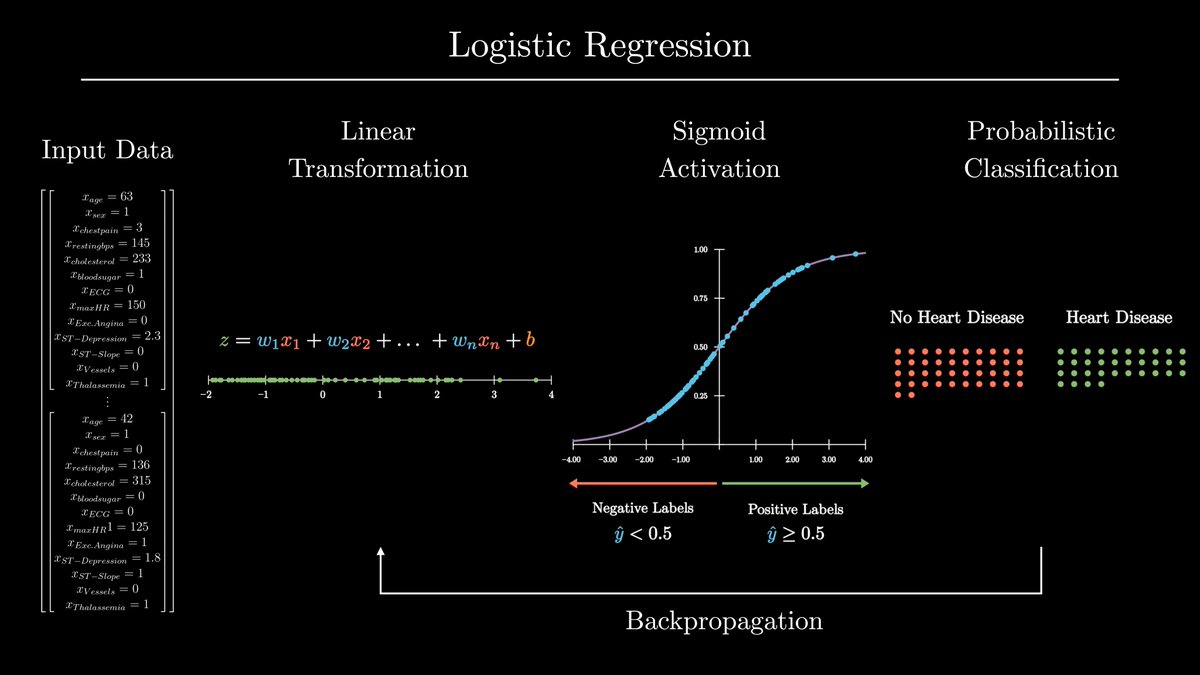

1. Build a logistic regression model from scratch with NumPy

2. Train the model to predict heart disease with the UCI Heart Disease Dataset

3. Build a TensorFlow logit model

tinyurl.com

Thanks for reading!

