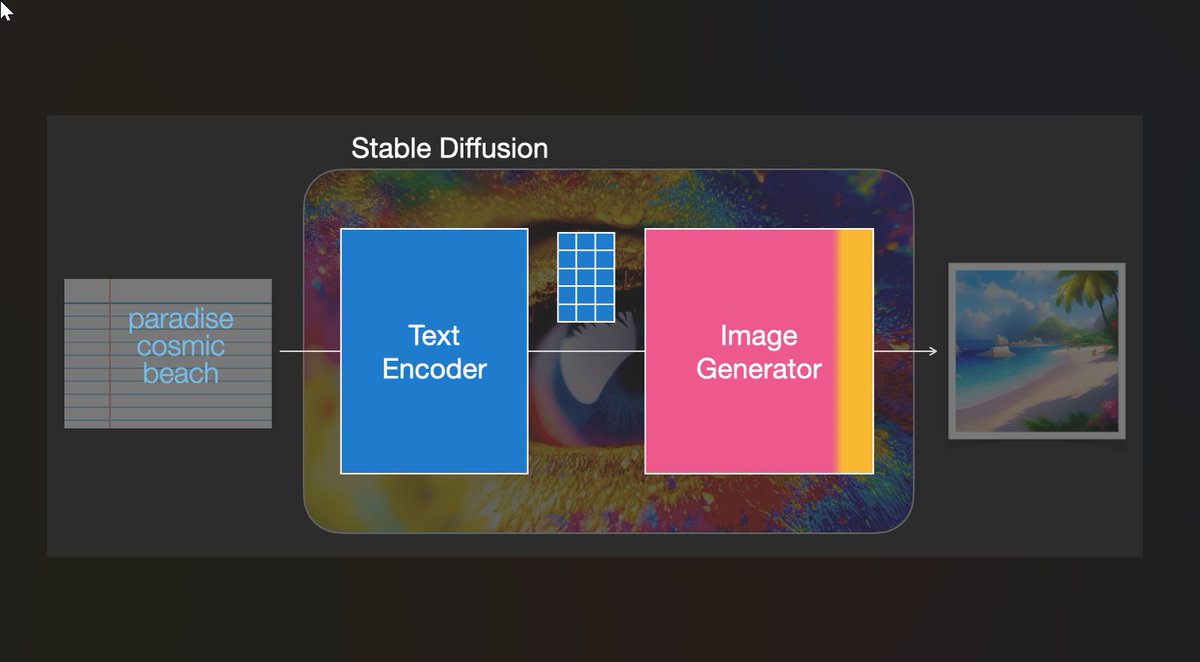

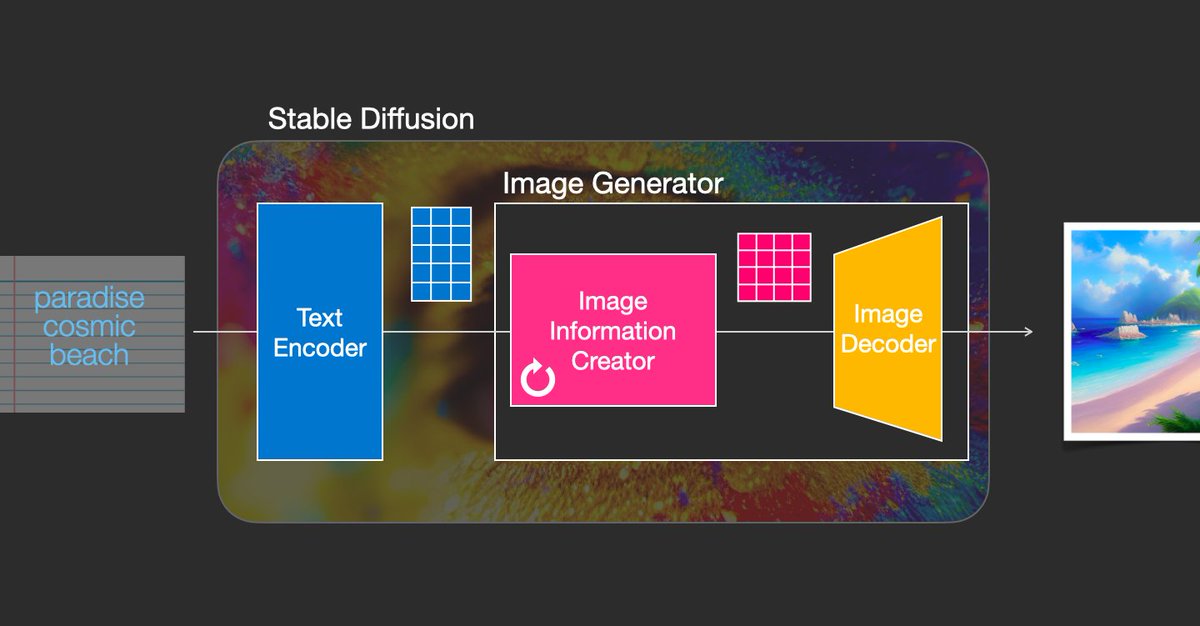

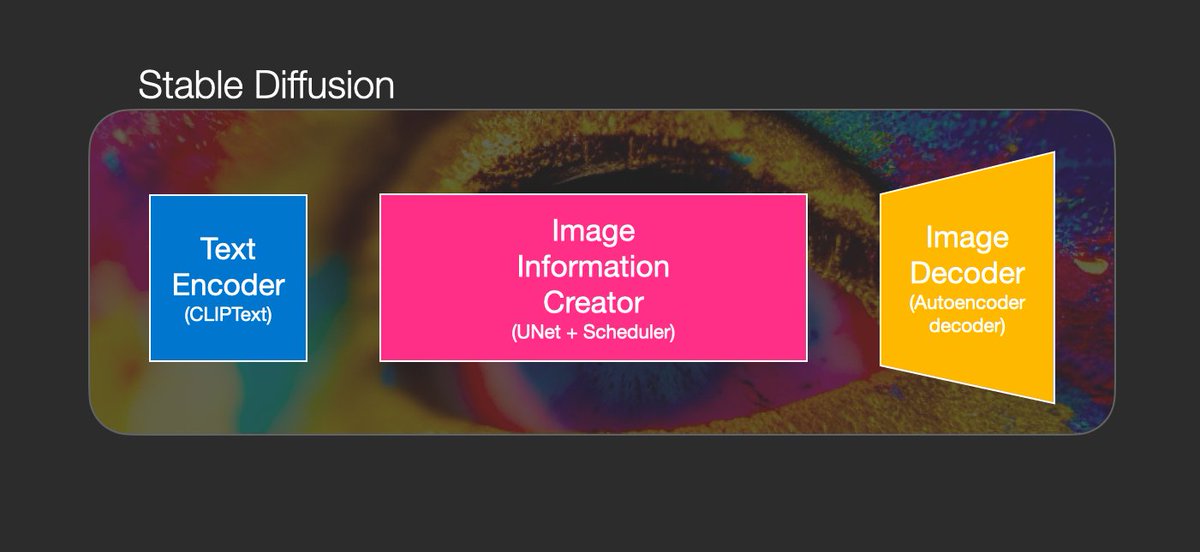

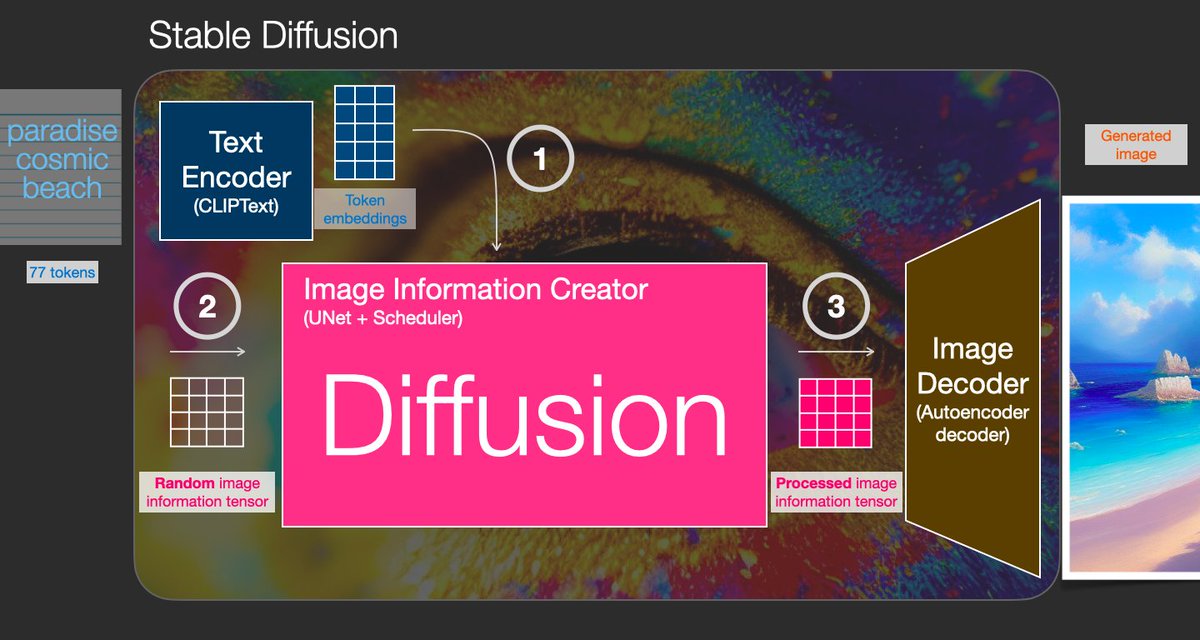

Image generation is the most recent mind-blowing AI capability.

#StableDiffusion is a clear milestone in this development because it made a high-performance model available to the masses.

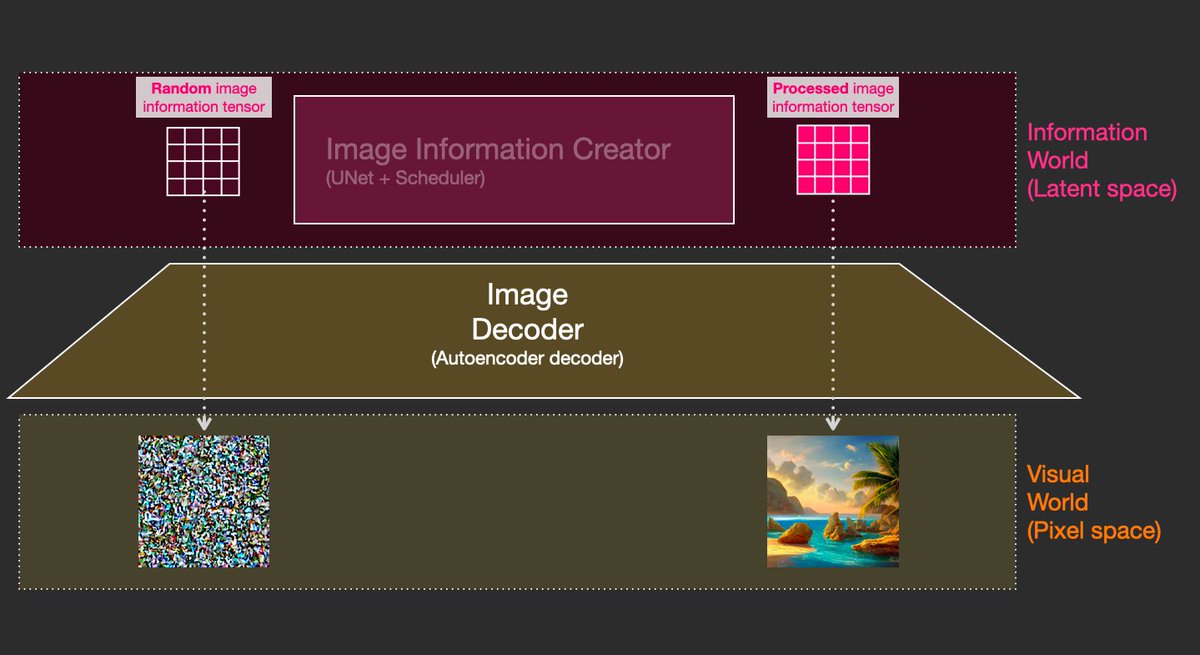

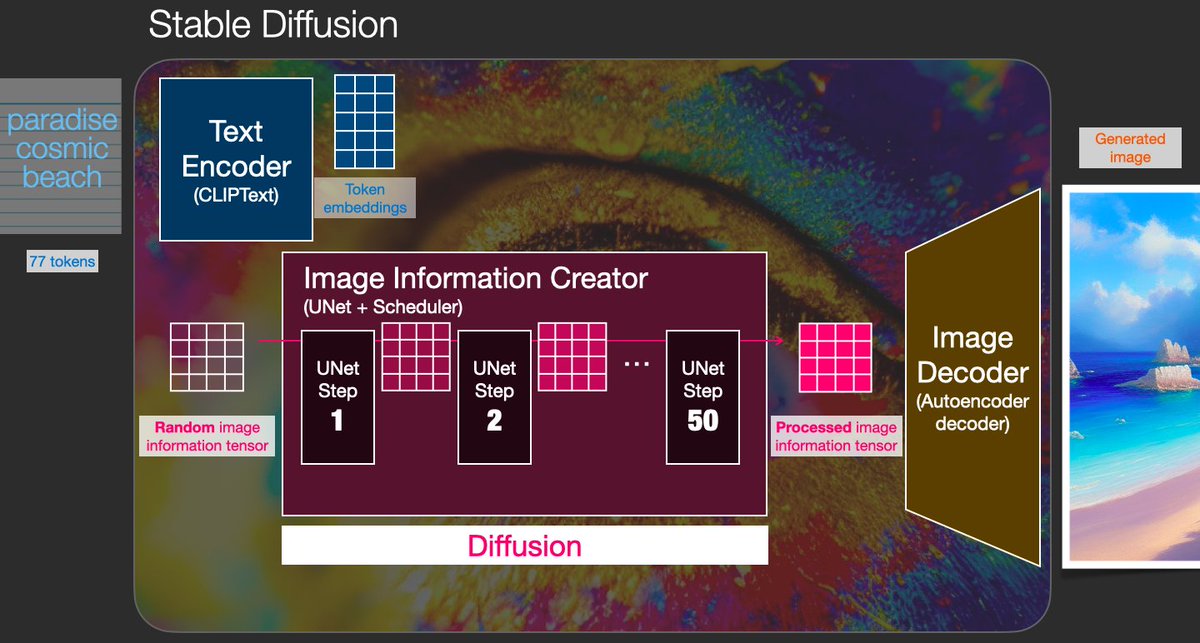

This is how it works.

1/n

