Stateful Active Facilitator: Coordination and Environmental Heterogeneity in Cooperative Multi-Agent Reinforcement Learning

@DianboLiu, @b0ussifo, @Cmeo97, @anirudhg9119, Tianmin Shu, Michael Mozer, Nicolas Heess & Yoshua Bengio

@Mila_Quebec

arxiv.org

(1/N)

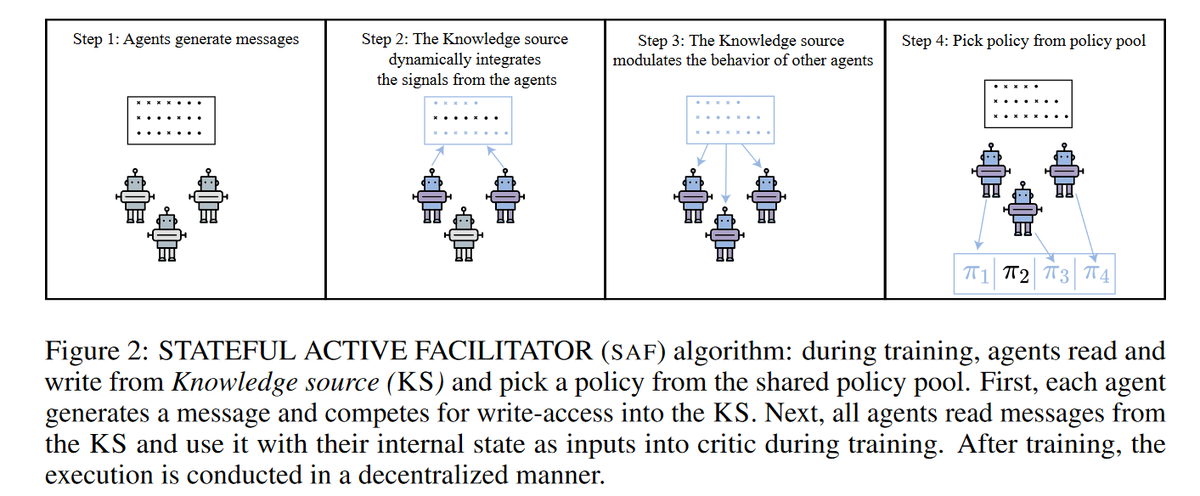

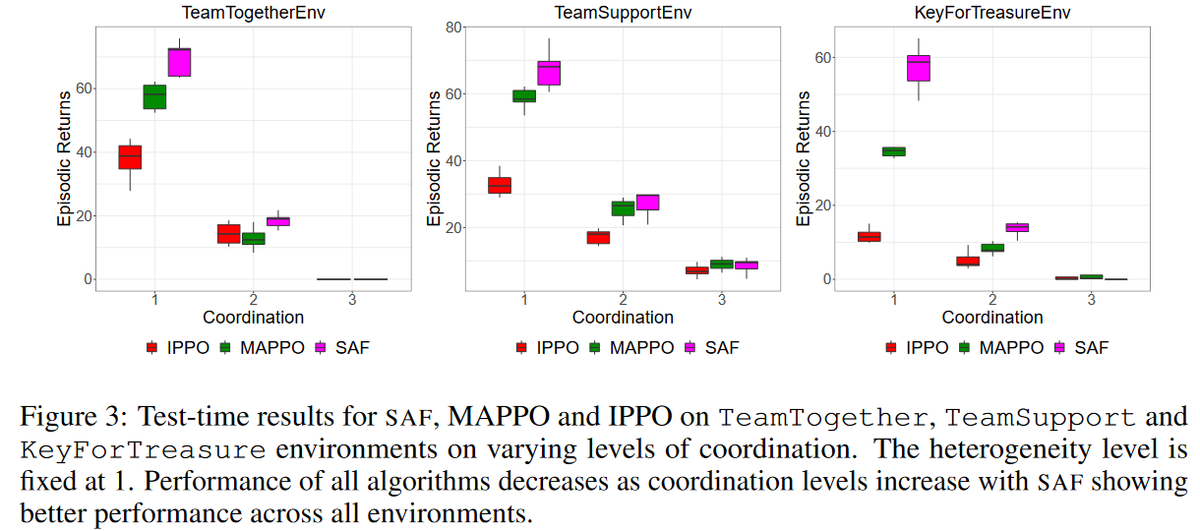

Reinforcement Learning in cooperative multi-agent settings requires individual agents to learn to work together to achieve a common goal. To attain optimal behavior, these agents need to learn to "coordinate" efficiently with one another.

(2/N)

However, multi-agent RL (MARL) faces a unique challenge: the environment dynamics each agent experiences keep changing as the other agents update their policy parameters. This makes it difficult for the agents to learn efficiently coordinated behavior.

(3/N)

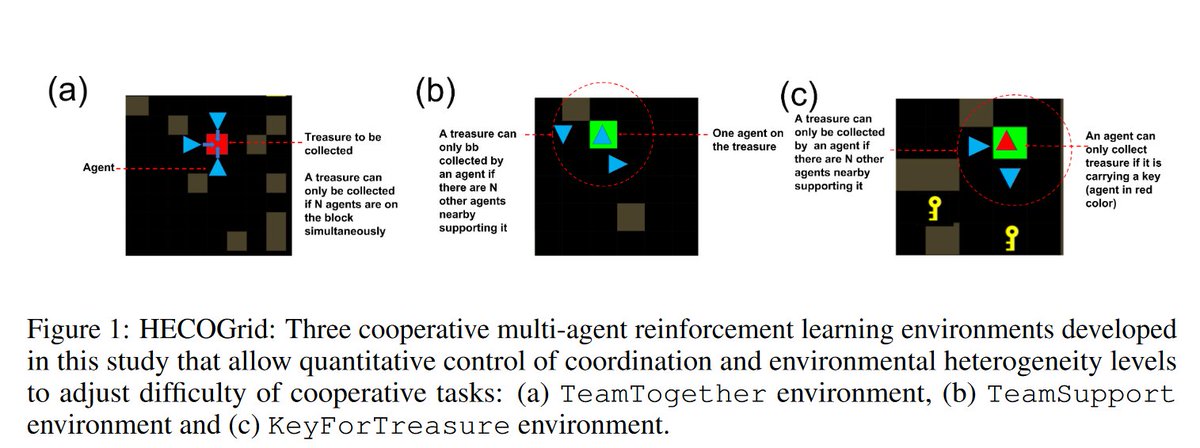

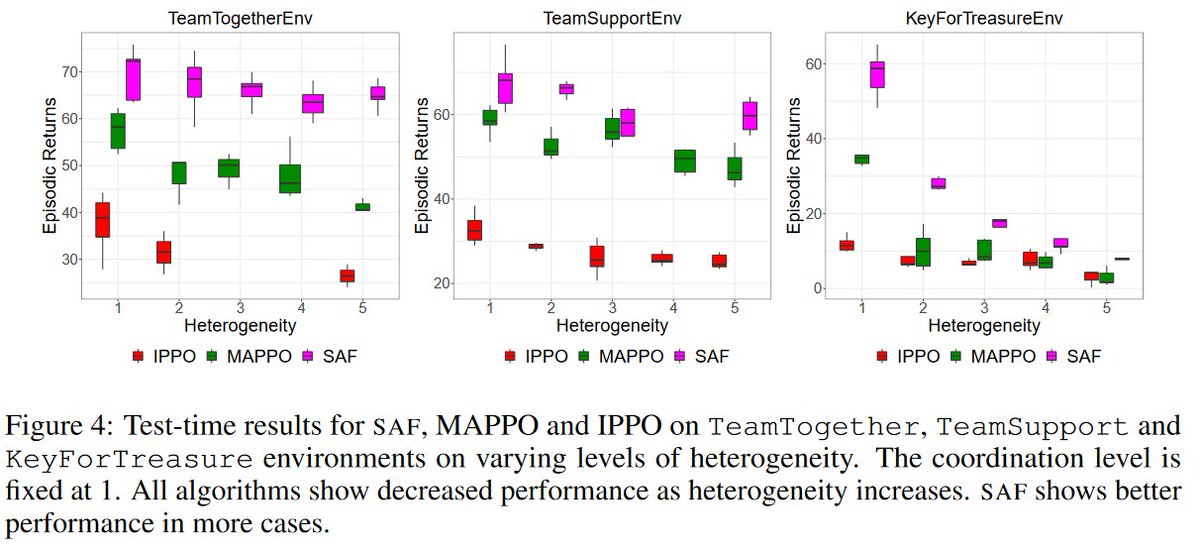

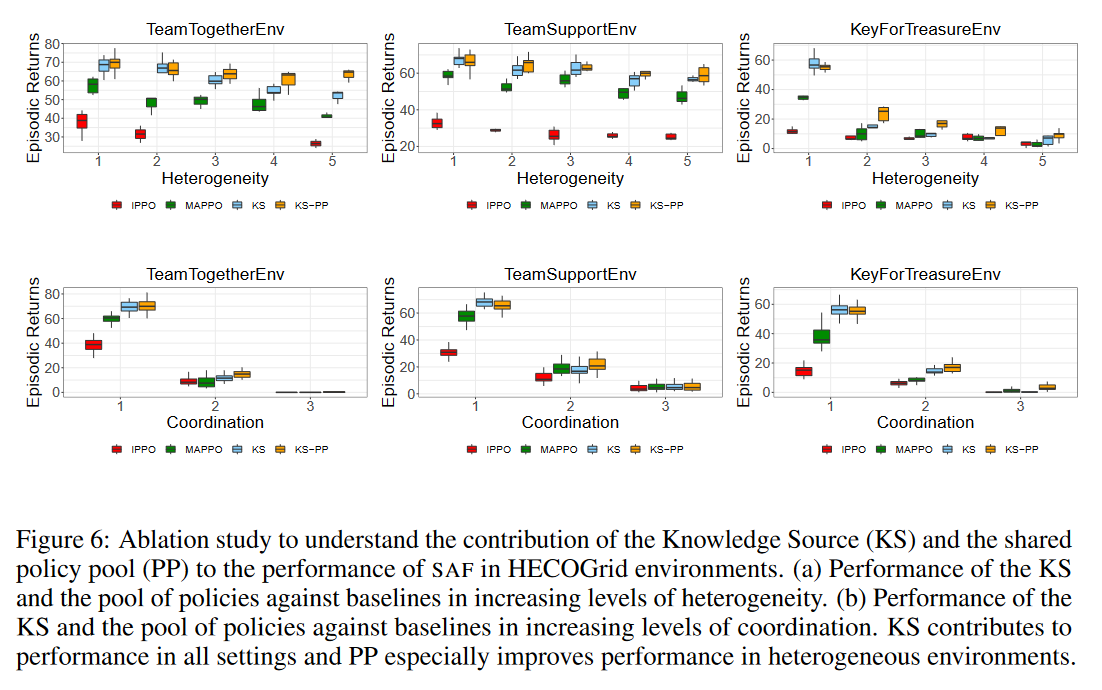

Further, to be robust, MARL in the real world also needs to tackle "spatial" heterogeneity within the environment, i.e., changing structure, distribution of obstacles, transition dynamics, etc. A given MARL environment can be considered as having a certain level of

(4/N)

"heterogeneity" and requiring a certain level of "coordination" among the agents in order to solve it. However, no suite of Reinforcement Learning environments exists that facilitates an empirical study of the effect of these parameters on MARL approaches and

(5/N)

Code used for conducting the experiments is available at:

github.com

We'll be releasing HECOGrid separately soon!
