First, a disclaimer.

It is very hard to do benchmarking in a fair way.

I am comparing how *I would* do things in pure Python/PyTorch vs what Merlin Dataloader does for me.

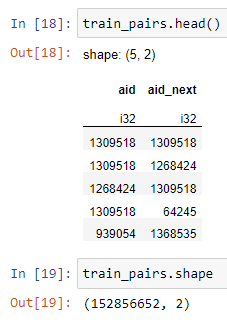

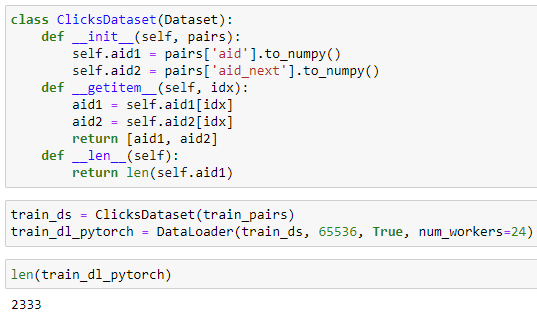

Here is the setup:

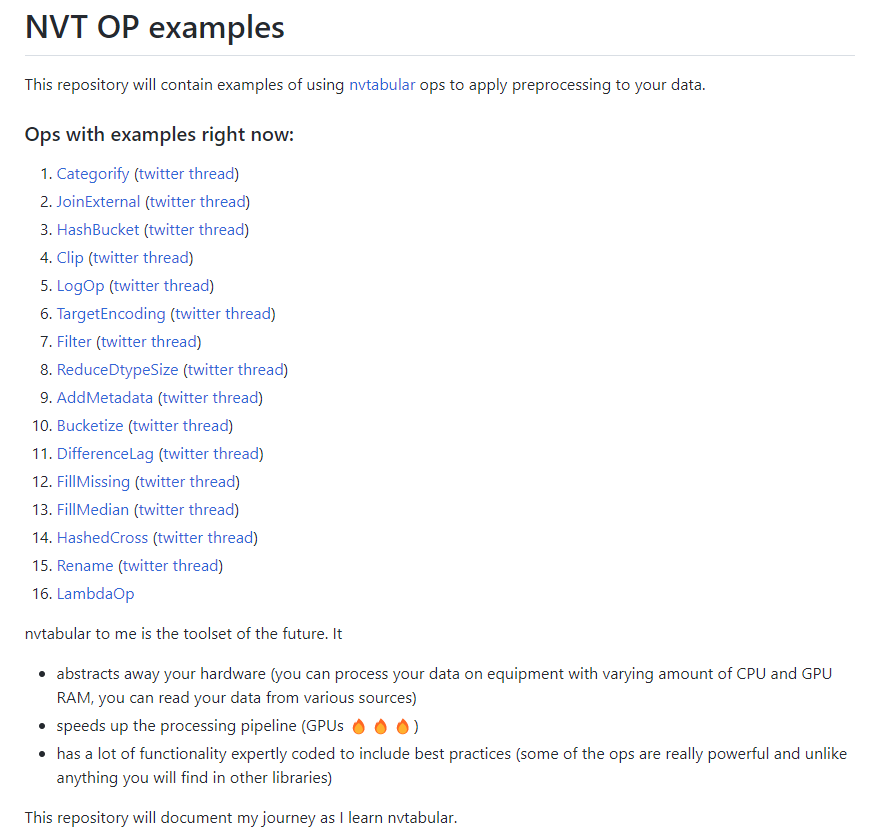
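The setup itself lives in the screenshot, so here is only a rough sketch of the kind of timing harness such a comparison needs. It is not the code from the image. `time_epoch` and `toy_batches` are hypothetical names made up for illustration, and the only assumption is that the loader under test is a plain Python iterable of batches.

```python
import time
from typing import Iterable, Iterator, List


def time_epoch(batches: Iterable, warmup: int = 2) -> dict:
    """Iterate once over `batches` and report how long the timed part took.

    The first `warmup` batches are consumed before the clock starts, so
    one-off startup costs (worker start, file opening) are not counted.
    """
    it = iter(batches)
    for _ in range(warmup):
        next(it, None)  # discard warmup batches; stops quietly if exhausted

    count = 0
    start = time.perf_counter()
    for _ in it:
        count += 1
    elapsed = time.perf_counter() - start

    return {
        "batches": count,
        "seconds": elapsed,
        "batches_per_second": count / elapsed if elapsed > 0 else float("inf"),
    }


def toy_batches(num_rows: int, batch_size: int) -> Iterator[List[int]]:
    """Hypothetical stand-in for a real dataloader: yields lists of row ids."""
    rows = list(range(num_rows))
    for start in range(0, num_rows, batch_size):
        yield rows[start:start + batch_size]


if __name__ == "__main__":
    stats = time_epoch(toy_batches(10_000, 256), warmup=2)
    print(f"{stats['batches']} batches in {stats['seconds']:.4f}s")
```

Running the same function over each loader, with the same batch size and the same data, keeps the comparison a bit more even. For loaders that hand back GPU tensors you would also want to synchronize the device before reading the clock, which this sketch does not do.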

So yeah, this is a new library by my team. 🙂

You can find it here: github.com

It supports TF, PyTorch and has some support for JAX.

One reason it is so fast is that it builds on Dask-based Merlin Datasets.

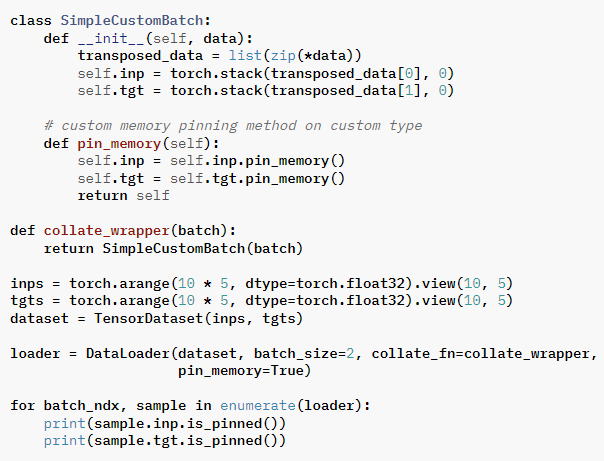

And I guess a couple of other things in Merlin Dataloader also help make it all (including memory transfer) super fast.

But I would be lying if I said I understood everything that is going on 🙂
