Everyprompt.com @everyprompt

IMO this is the most polished and complete offering of the three below.

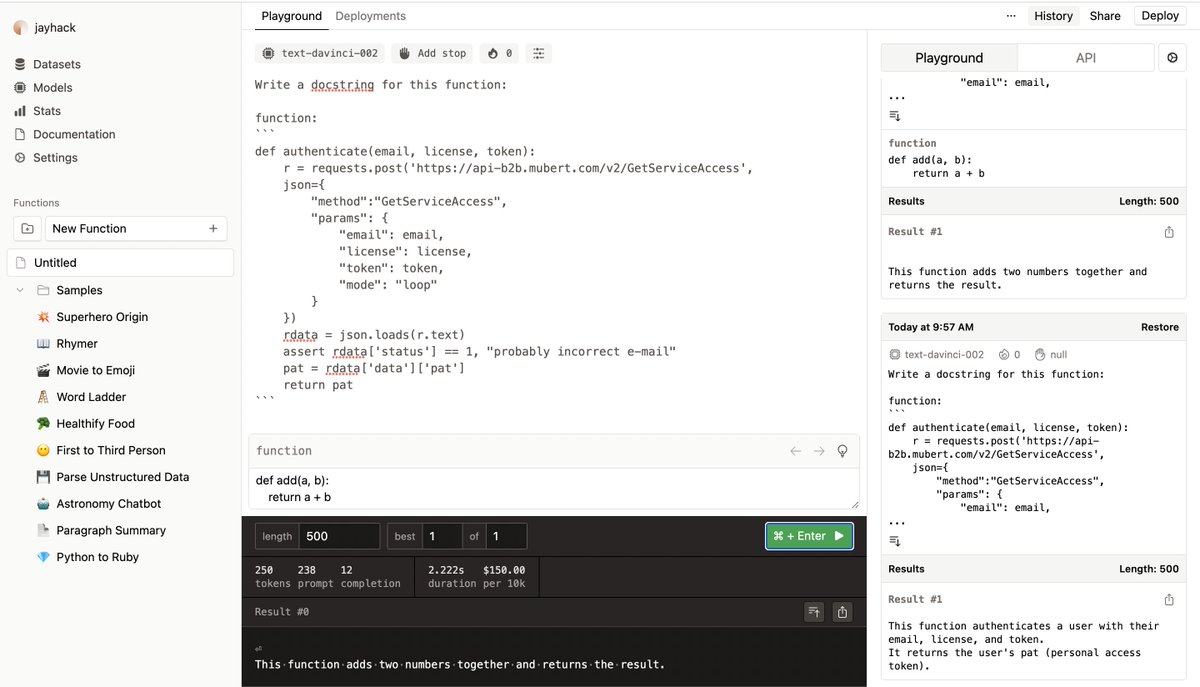

Includes a prompt engineering interface, ability to inject variables into prompts, and nascent logging.

A few observations 👇

1/

A word on dev with LLMs - every developer goes through these stages:

- develop a prompt (usually in OpenAI playground)

- iteratively refine it

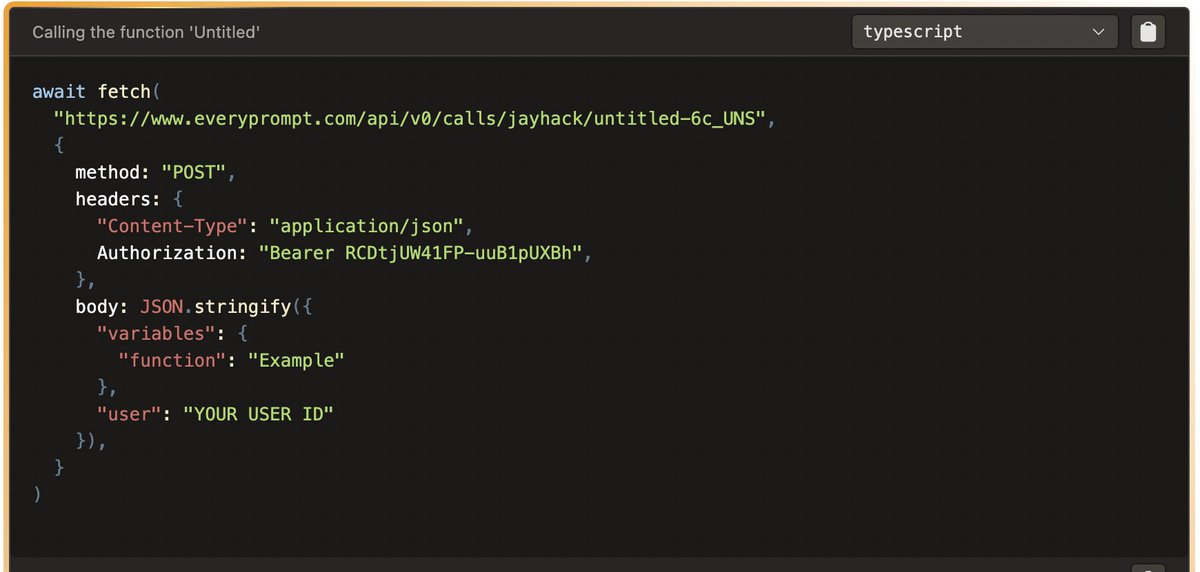

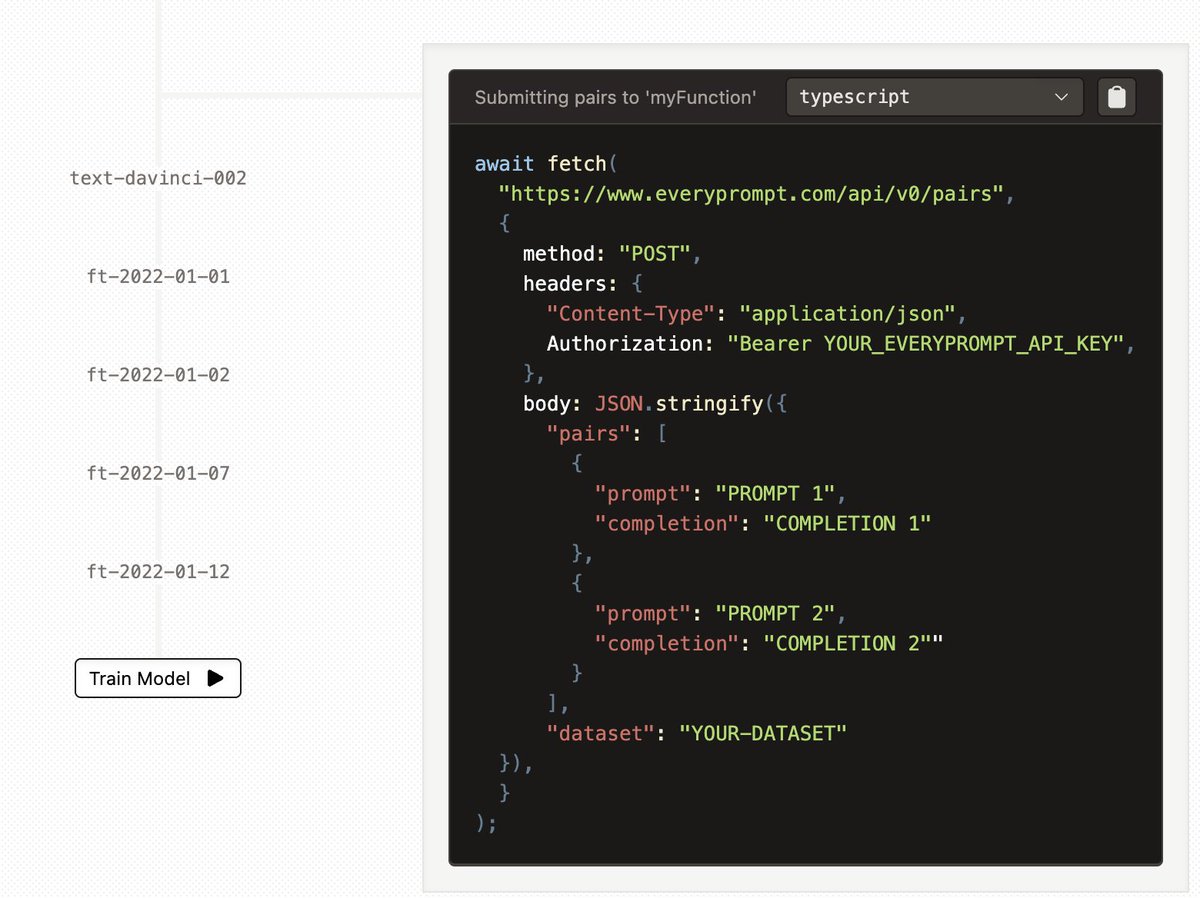

- deploy it via API

- integrate API into your application

- monitor ongoing usage

Everyprompt enables this flow.

2/
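To make the stages above concrete, here is a minimal Python sketch of the same flow: a refined prompt gets wrapped as a callable "endpoint", and every call is recorded for monitoring. This is not Everyprompt's API. `make_endpoint`, `fake_complete`, and `usage_log` are hypothetical names, and `fake_complete` is a stand-in for whatever real model call you would use.

```python
import time
from typing import Callable, Dict, List


def make_endpoint(
    template: str,
    complete: Callable[[str], str],
    log: List[Dict[str, object]],
) -> Callable[..., str]:
    """Wrap a refined prompt as a callable that records each invocation."""

    def endpoint(**variables: str) -> str:
        # Fill the prompt template with the caller's variables.
        prompt = template.format(**variables)
        start = time.perf_counter()
        output = complete(prompt)
        # "Monitor ongoing usage": keep a record of every call.
        log.append(
            {
                "prompt": prompt,
                "output": output,
                "seconds": time.perf_counter() - start,
            }
        )
        return output

    return endpoint


def fake_complete(prompt: str) -> str:
    # Hypothetical stand-in for a real LLM completion call.
    return f"[completion for: {prompt}]"


usage_log: List[Dict[str, object]] = []
summarize = make_endpoint(
    "Summarize in one sentence: {text}", fake_complete, usage_log
)
result = summarize(text="LLM tooling is evolving quickly.")
```

Swapping `fake_complete` for a real completion call is the "deploy" step; the application only ever sees `summarize(...)`.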

In summary, this is a better developer experience than working in the OpenAI playground.

The only functionality that it adds on top of the raw OpenAI API, however, is the ability to inject variables into prompts.

7/
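For anyone unsure what "injecting variables into prompts" means in practice, a rough sketch using only Python's standard library is below. The template text, the variable names, and `render_prompt` are all made up for illustration and say nothing about how Everyprompt implements this.

```python
from string import Template

# Hypothetical prompt template; $tone and $product are illustrative variables.
PROMPT = Template("Write a $tone product description for $product.")


def render_prompt(template: Template, **variables: str) -> str:
    # substitute() raises KeyError when a variable is missing, so an
    # incomplete request fails before any model call is made.
    return template.substitute(**variables)


rendered = render_prompt(PROMPT, tone="playful", product="a standing desk")
print(rendered)
```

The rendered string is what would be sent to the model; the template itself stays fixed while you iterate on the inputs.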

A few feature requests for @everyprompt :

- See every invocation of your API via logs

- Ability to authenticate users

- Ability to charge users for each API invocation

Would also love to see prompt chaining like @dust4ai and @LangChainAI !

8/
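By prompt chaining I mean feeding the output of one prompt into the next. A generic sketch of the idea follows. It does not use the Dust or LangChain APIs; `run_chain` and `fake_complete` are hypothetical, and `fake_complete` just upper-cases its input so the data flow is easy to follow.

```python
from typing import Callable, List


def run_chain(
    templates: List[str],
    complete: Callable[[str], str],
    initial: str,
) -> str:
    """Run prompts in order, passing each output into the next template."""
    value = initial
    for template in templates:
        # Each template has a single {input} slot for the previous output.
        value = complete(template.format(input=value))
    return value


def fake_complete(prompt: str) -> str:
    # Hypothetical stand-in for a real LLM completion call.
    return prompt.upper()


final = run_chain(
    ["Extract the key claim: {input}", "Rewrite for a tweet: {input}"],
    fake_complete,
    "Everyprompt adds variable injection on top of the raw API.",
)
```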

Overall, I recommend @everyprompt if you are spinning up a web application that uses GPT-3 on the backend and want something that just works.

Enjoy!
