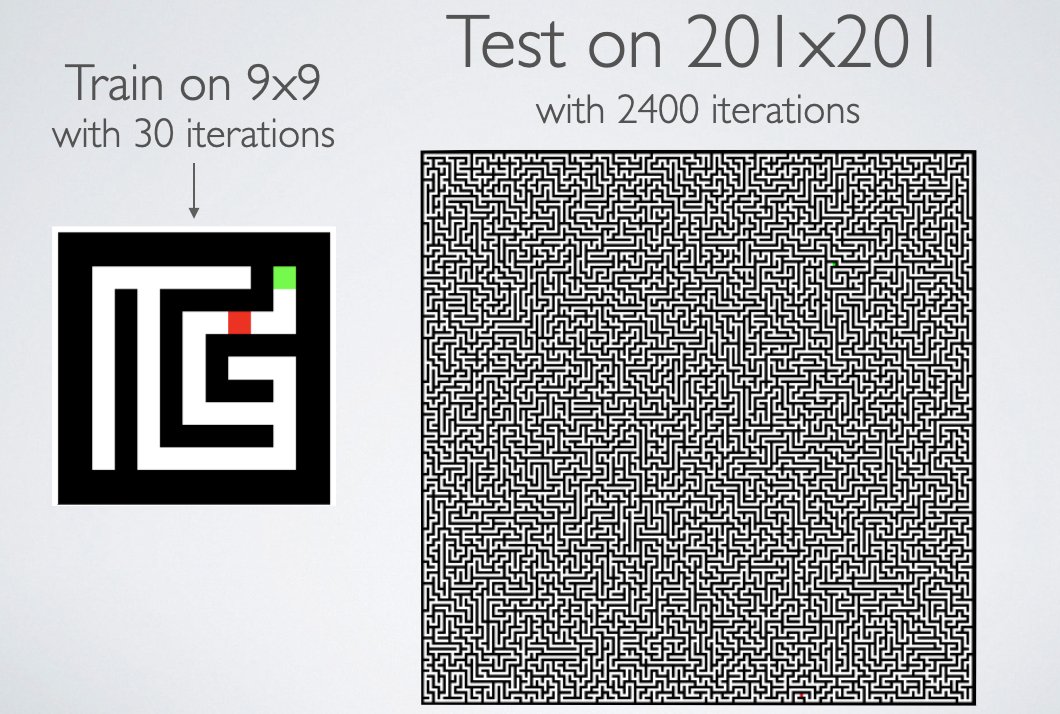

If we test the same network on a larger maze it totally fails. The network memorized *what* maze solutions look like, but it didn’t learn *how* to solve mazes.

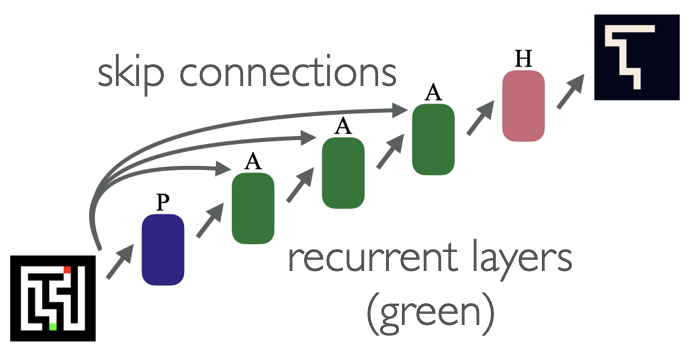

We can make the model synthesize a scalable maze-solving algorithm just by changing its architecture...

Now, a model trained *only* on 9x9 mazes for 30 recurrent iterations can generalize to this 801x801 maze at test time, provided we run the recurrent block for 25,000 iterations. That's over 100K conv ops.
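To get a feel for why more iterations are needed on bigger mazes, here is a toy sketch. It is not the trained network: it is a hand-written local update rule (classic dead-end filling, swapped in purely for illustration) applied over and over, the way a weight-tied recurrent block would be. The names `step`, `run`, `parse`, and `corridor`, and the toy corridor maze itself, are all made up for this example.

```python
def step(open_cells, protected):
    """One synchronous local update: close every open cell that has
    at most one open neighbour (a dead-end tip), except start/end."""
    def degree(cell):
        r, c = cell
        neighbours = ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
        return sum(n in open_cells for n in neighbours)

    return {cell for cell in open_cells
            if cell in protected or degree(cell) >= 2}


def run(open_cells, protected, iterations):
    """Apply the same update rule `iterations` times (shared 'weights')."""
    for _ in range(iterations):
        open_cells = step(open_cells, protected)
    return open_cells


def parse(rows):
    """Turn a text maze into (open cells, protected start/end cells)."""
    open_cells, protected = set(), set()
    for r, row in enumerate(rows):
        for c, ch in enumerate(row):
            if ch != "#":
                open_cells.add((r, c))
            if ch in "SE":
                protected.add((r, c))
    return open_cells, protected


def corridor(dead_end_length):
    """Hypothetical toy maze: a 3-cell solution S.E followed by a dead end."""
    width = dead_end_length + 5
    return ["#" * width,
            "#S.E" + "." * dead_end_length + "#",
            "#" * width]


# The solution is always 3 cells (S, one corridor cell, E).
small_ok = run(*parse(corridor(4)), 4)      # short dead end, few iterations
large_short = run(*parse(corridor(40)), 4)  # long dead end, same budget
large_ok = run(*parse(corridor(40)), 40)    # long dead end, bigger budget
print(len(small_ok), len(large_short), len(large_ok))
```

In this toy, the update rule never changes between the small and the large maze; only the number of times it is applied does. With the small-maze budget, the large maze still has unfilled dead-end cells, and they disappear once the iteration count grows with the maze.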

Strangely, the network has also learned an error-correcting code. If we corrupt the net's memory when it's halfway done, it will always recover. If we change the start/end point after the maze is solved, it draws the new solution in one shot with no wrong turns (shown below).

Networks can also learn algorithms for chess and prefix sum computation. For all these problems we use the same network and training loop, but different training data.

arxiv.org

See you all on thurs afternoon at @NeurIPSConf!
