In addition to the conflation of public policies against abuse/spam w/ secret policies against political viewpoints & topics, the other bit of conflation I'm seeing right now is amplification/suppression of tweets vs. of accounts. This is an example of the second type:

Twitter's internal data suggests that righty tweets perform better than lefty tweets -- they seem to get some benefit from the algo. That's a different issue than targeted deboosting of prominent right-wing accounts.

I haven't seen anyone claiming that all right-wing accounts on this site have been deboosted. The claim is that prominent accounts with certain viewpoints on hot-button issues were deboosted, & those often fell on the right side of the spectrum. That can be true alongside...

... righty tweets from whoever tend to do better than lefty tweets from whoever. Both of those things can be true at once, so Martin's link refutes nothing.

Anyway, there are more examples of this "tweets vs. accounts" confusion/conflation going on right now. I'm seeing it in responses to different @elonmusk tweets, and it's a whole other front in the fight over the release of these files.

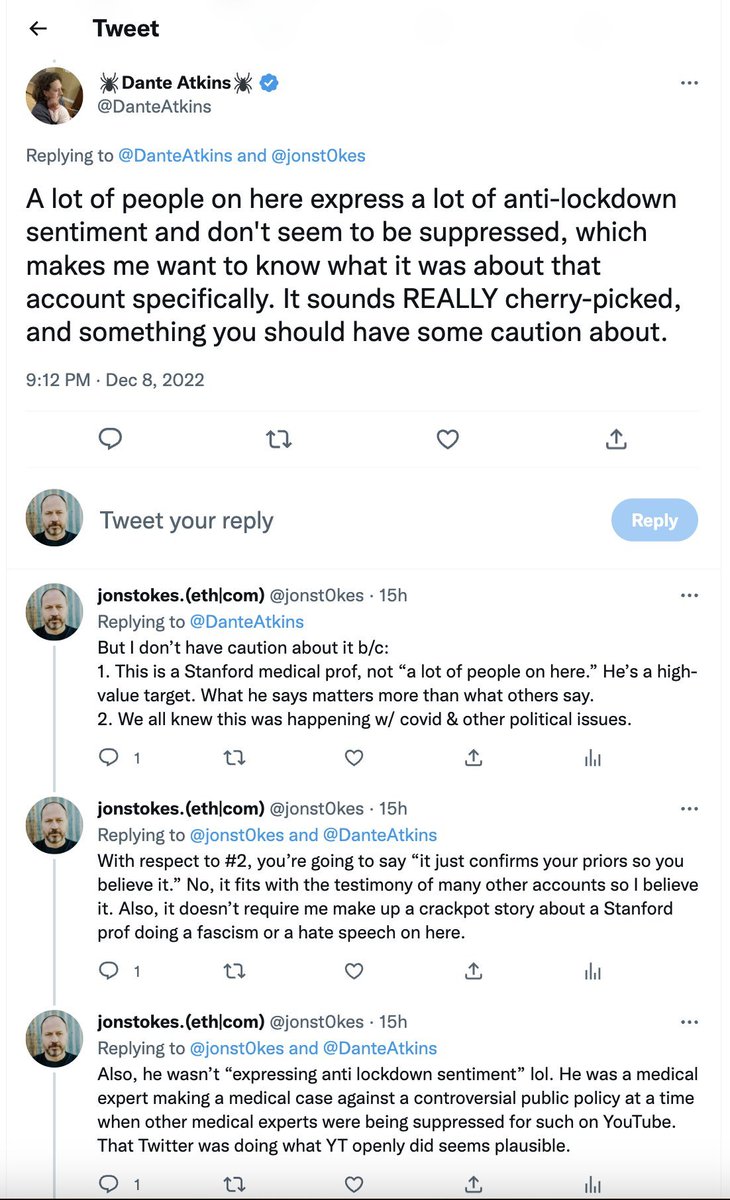

The tactic of responding to the claim that Twitter deboosted specific prominent accounts (based on viewpoint) by pointing out that tweets with some similar/adjacent viewpoint are widespread on here is one I encountered yesterday via @DanteAtkins:

Me: Twitter suppressed this large, important account because of the viewpoint it was promoting.

You: Nonsense. Millions of tweets were flying around from randos pushing a version of that viewpoint.

Do people not see how the second claim is not really responsive to the first?

It actually scans to me that targeted deboosting of prominent right-wing accounts based on viewpoint was a semi-rational attempt by the Twitter team to "remedy" the algo's love of red meat, if they don't know what's causing that love & can't patch it.

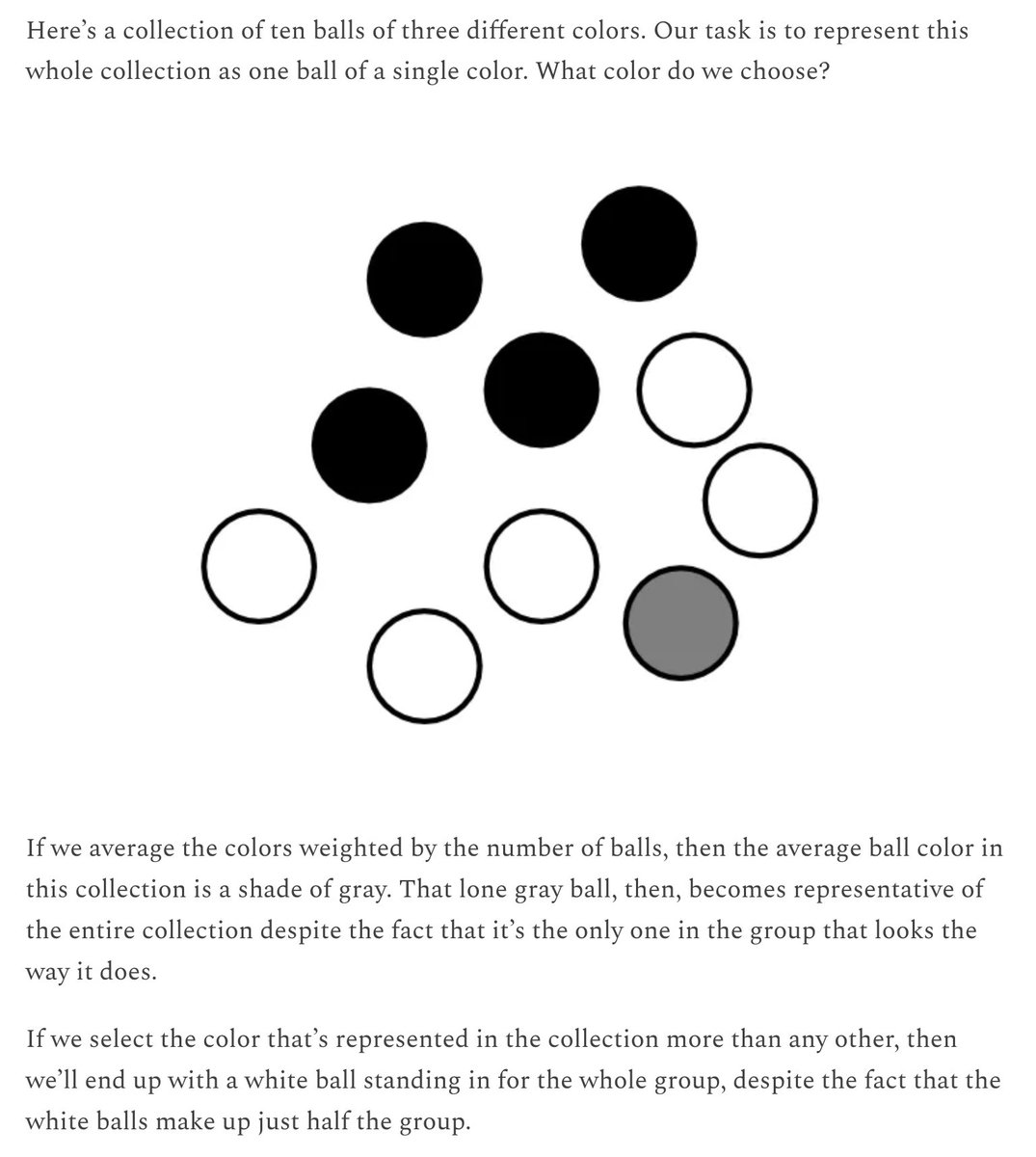

They would see themselves as trying to bring "balance" to the platform by counteracting the algo's right-wing proclivities. That is in fact the very logic behind this tweet, so I can imagine the platform team using it to justify viewpoint-based suppression.

These algorithmic bias conflicts are very hard to adjudicate because different groups are working from fundamentally different understandings of what is fair. Normally this isn't catastrophic in our society b/c fairness conceptions can be tribal & localized by geography.

But when they're tribal & all the different groups with conflicting fairness conceptions are on the same network & subject to exactly one conception (=whoever writes the algo), it's winner-takes-all & the stakes are way too high.

Oh, link to the post where the above screencap is from, where I dig into the issue of algorithmic bias and fundamentally conflicting notions of what is fair: jonstokes.com
