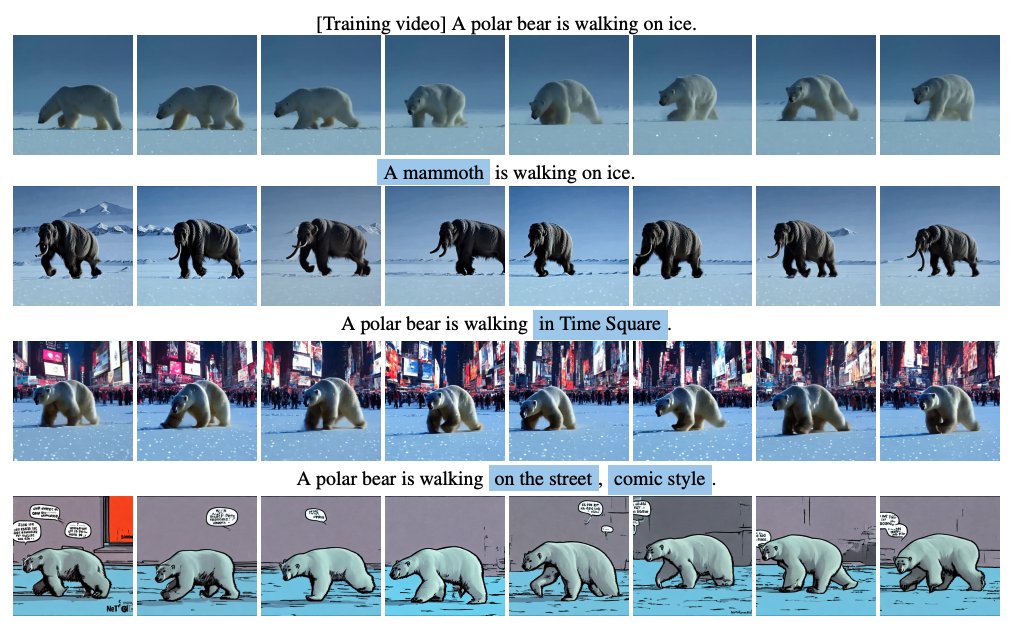

Overall, this is a great paper demonstrating the feasibility of one-shot video generation, with impressive results.

I also like that we are already focusing on computational efficiency for text-to-video (T2V) generation.

Paper: arxiv.org

Website: tuneavideo.github.io
