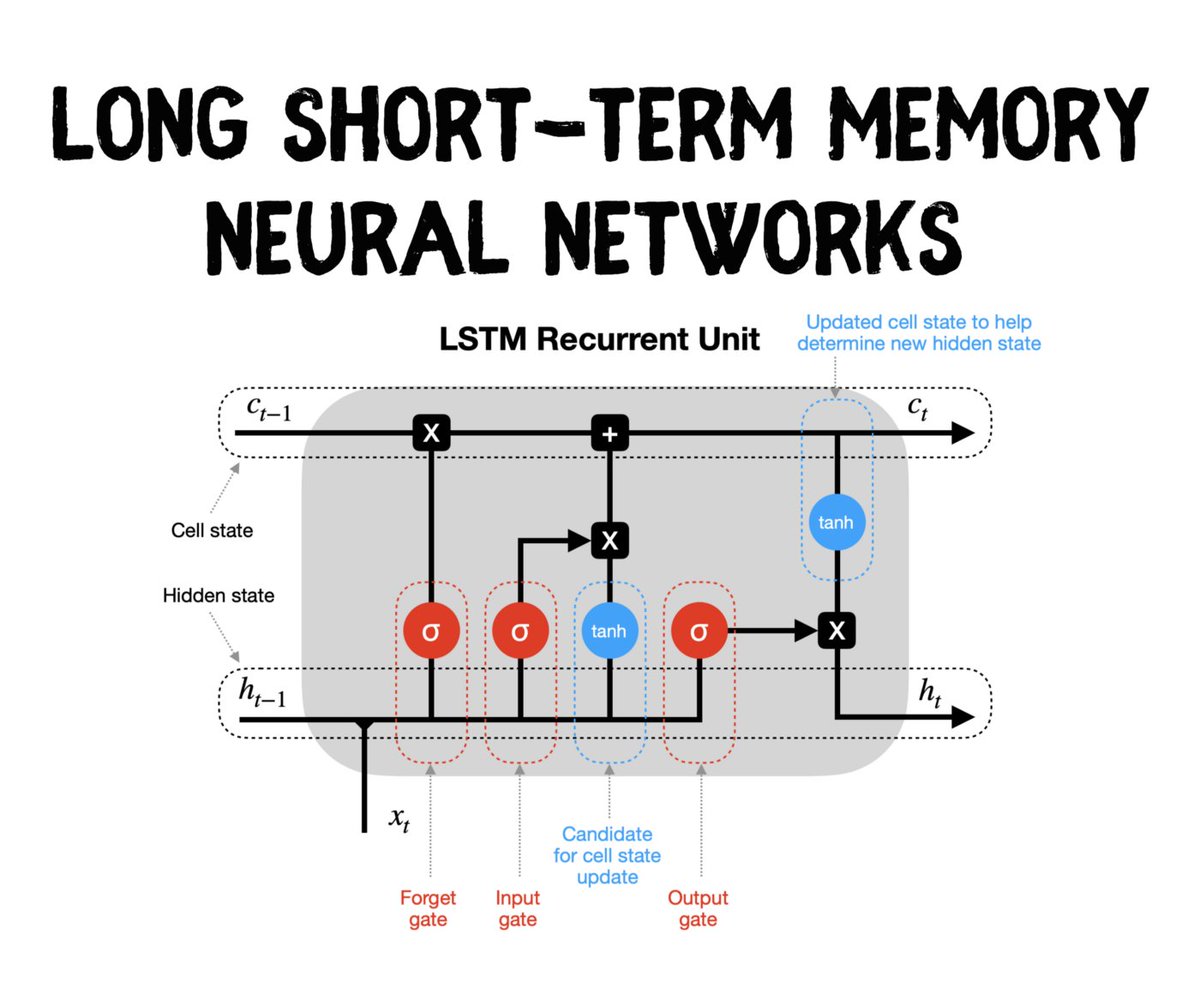

LSTM (long short-term memory) networks are recurrent neural networks built from one or more layers of "memory cells" that can store information across many time steps. Each cell is regulated by "gates" that control the flow of information into and out of it.

The input gate controls the flow of information into the memory cell, while the output gate controls the flow of information out of the cell. The forget gate controls the amount of information that is retained in the cell over time.
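To make the three gates concrete, here is a minimal NumPy sketch of a single time step of one common LSTM formulation. The function name `lstm_step`, the stacked weight layout `W`, and the toy sizes are assumptions chosen for illustration; real libraries organize their weights differently.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x, h_prev, c_prev, W, b):
    """One time step of an LSTM cell (illustrative sketch).

    x:      input vector, shape (input_size,)
    h_prev: previous hidden state, shape (hidden,)
    c_prev: previous cell state, shape (hidden,)
    W:      stacked gate weights, shape (4 * hidden, input_size + hidden)
    b:      stacked gate biases, shape (4 * hidden,)
    """
    hidden = h_prev.shape[0]
    z = W @ np.concatenate([x, h_prev]) + b

    i = sigmoid(z[0 * hidden:1 * hidden])  # input gate: how much new information enters
    f = sigmoid(z[1 * hidden:2 * hidden])  # forget gate: how much old cell state is kept
    o = sigmoid(z[2 * hidden:3 * hidden])  # output gate: how much of the cell is exposed
    g = np.tanh(z[3 * hidden:4 * hidden])  # candidate values to write into the cell

    c = f * c_prev + i * g                 # updated memory cell
    h = o * np.tanh(c)                     # output (hidden state) for this step
    return h, c

# Run the cell over a short random sequence (toy sizes, random weights).
rng = np.random.default_rng(0)
input_size, hidden_size = 3, 4
W = rng.normal(scale=0.1, size=(4 * hidden_size, input_size + hidden_size))
b = np.zeros(4 * hidden_size)
h = np.zeros(hidden_size)
c = np.zeros(hidden_size)
for x in rng.normal(size=(5, input_size)):
    h, c = lstm_step(x, h, c, W, b)
```

When the forget gate is close to 1 and the input gate is close to 0, the cell state passes through a step almost unchanged, which is how the cell can hold information for a long time.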

LSTM networks are particularly useful for tasks that require the model to remember long-term dependencies. For example, in natural language processing, the meaning of a word can depend on the context in which it was used several sentences earlier.

LSTM networks have been highly successful in a wide range of tasks, including language translation, language modeling, and speech recognition. They have also been applied in other areas, such as financial forecasting, including stock price prediction.

LSTM networks are more computationally expensive than simpler recurrent networks, because each cell computes three gates and a candidate update at every time step.

Even so, they have become a popular choice thanks to their ability to capture long-term dependencies and their strong performance on a wide range of tasks.
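One rough way to see the extra computational cost is to compare parameter counts with a plain recurrent layer of the same size. The sketch below assumes a single layer with one bias vector per gate (some libraries use two), and the helper names `rnn_params` and `lstm_params` are hypothetical.

```python
def rnn_params(input_size: int, hidden_size: int) -> int:
    """Weights and biases of a plain recurrent layer (one weight block, one bias)."""
    return hidden_size * (input_size + hidden_size) + hidden_size

def lstm_params(input_size: int, hidden_size: int) -> int:
    """An LSTM layer has four such blocks: input, forget, and output gates, plus the candidate."""
    return 4 * rnn_params(input_size, hidden_size)

print(rnn_params(128, 256))   # 98560
print(lstm_params(128, 256))  # 394240
```

Under these assumptions an LSTM layer carries about four times the parameters, and roughly four times the matrix-multiply work per time step, of a plain recurrent layer with the same input and hidden sizes.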
