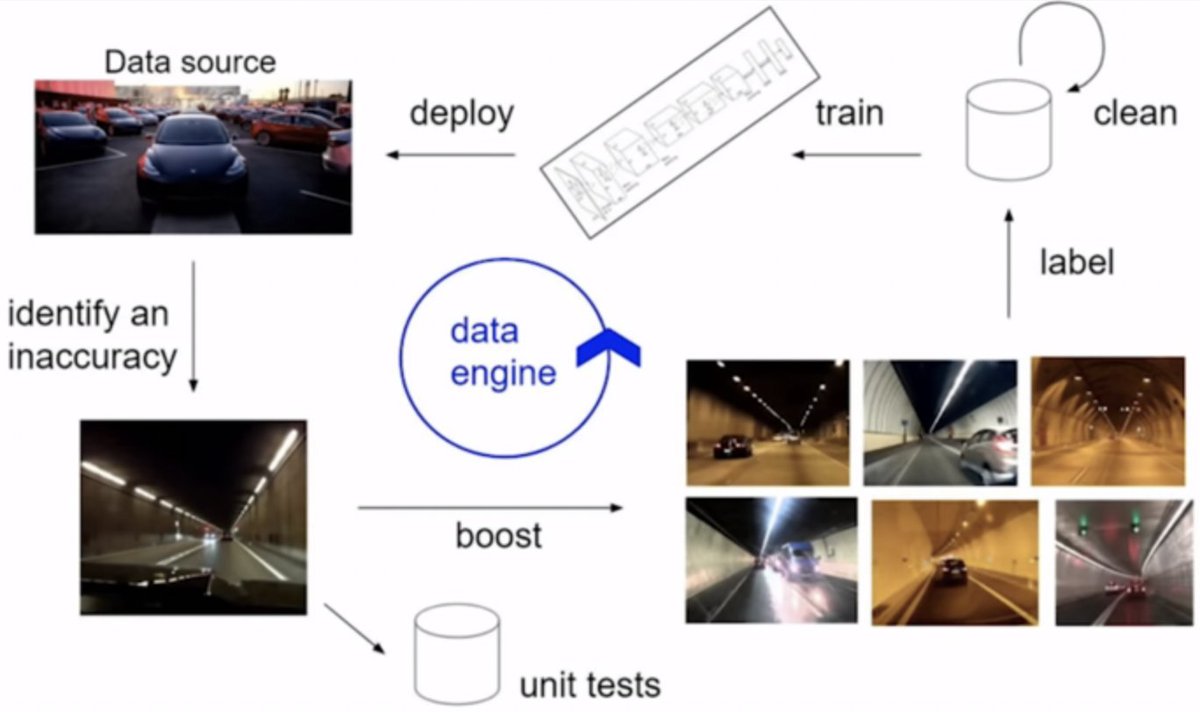

The defensibility comes from the idea that models which amass more experience (i.e., are deployed earlier or more broadly) will improve faster over time, compounding their returns.

This assumes, however, that deployment in the wild is the best way to gather the "fuel" for your data engine.

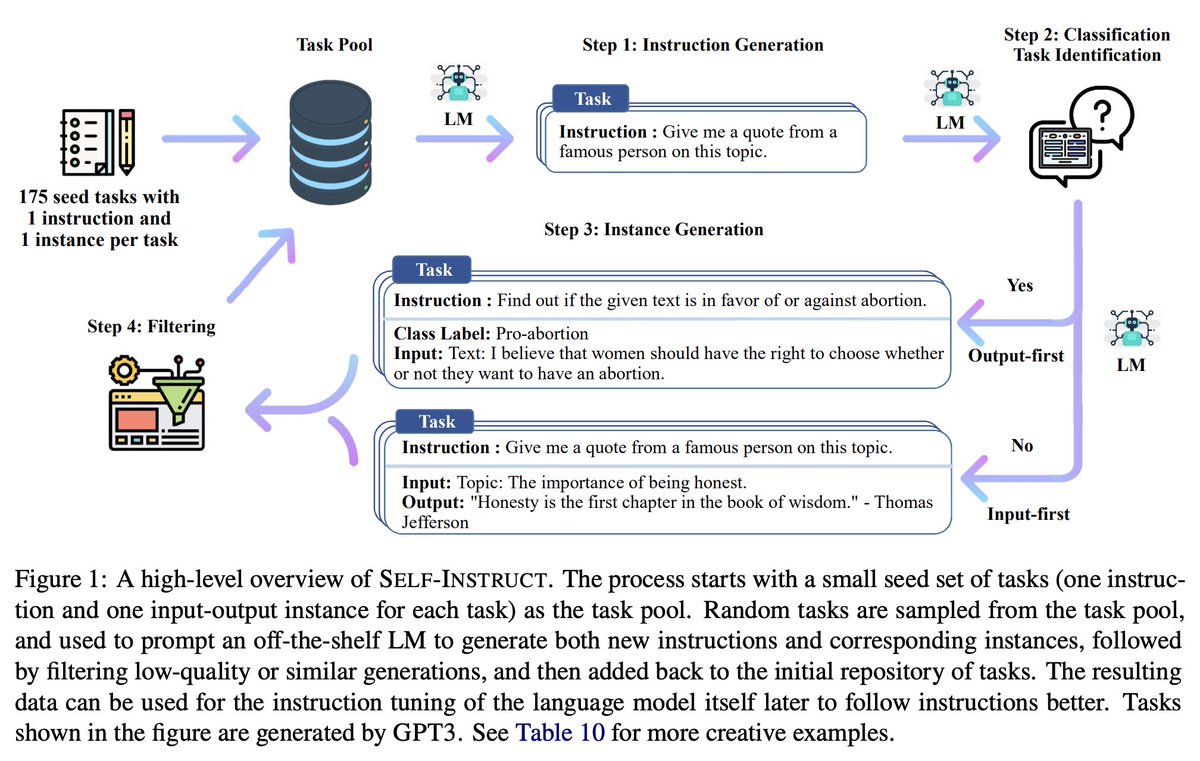

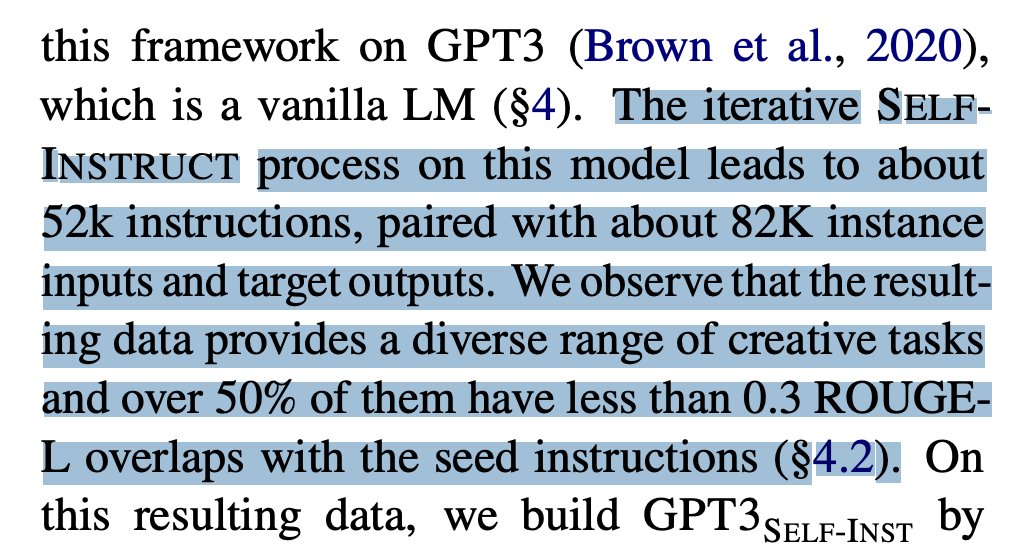

IMO this is a very promising approach for startups and resource-poor outfits trying to achieve high performance.

The barrier to entry is low: literally just see whether you can generate synthetic tasks/samples in your domain for instruction tuning, then fine-tune and evaluate.
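As a rough illustration of the "generate synthetic tasks" step, here is a minimal Python sketch in the spirit of Self-Instruct-style generation: propose candidate tasks, drop near-duplicates of anything already kept, and hold some out for evaluation. Everything here is an assumption for illustration: `fake_generator` is a hypothetical placeholder for whatever model you would actually prompt, token-set Jaccard is a simplified swap for the ROUGE-L overlap filter used in Self-Instruct, and the 0.7 threshold and the example domain terms are arbitrary. The fine-tuning step itself is out of scope.

```python
import json
import random


def jaccard(a: str, b: str) -> float:
    """Token-set Jaccard similarity (a cheap stand-in for ROUGE-L filtering)."""
    ta, tb = set(a.lower().split()), set(b.lower().split())
    if not ta and not tb:
        return 1.0
    return len(ta & tb) / len(ta | tb)


def fake_generator(rng: random.Random) -> dict:
    """HYPOTHETICAL placeholder for an LLM call that proposes a new task.

    It just recombines made-up domain verbs and objects; swap in a real model.
    """
    verbs = ["Summarize", "Classify", "Extract the key fields from", "Explain"]
    objects = ["this invoice.", "this support ticket.",
               "this contract clause.", "this lab report."]
    instruction = f"{rng.choice(verbs)} {rng.choice(objects)}"
    return {"instruction": instruction, "response": "<model answer goes here>"}


def build_synthetic_set(seed_tasks, target_size, max_sim=0.7, seed=0,
                        max_attempts=1000):
    """Collect up to target_size candidates that are not near-duplicates."""
    rng = random.Random(seed)
    # Seed tasks count as "already seen" so we don't regenerate them.
    kept_instructions = list(seed_tasks)
    accepted = []
    attempts = 0
    while len(accepted) < target_size and attempts < max_attempts:
        attempts += 1
        cand = fake_generator(rng)
        # Reject candidates too similar to any seed or previously kept task.
        if all(jaccard(cand["instruction"], k) < max_sim
               for k in kept_instructions):
            kept_instructions.append(cand["instruction"])
            accepted.append(cand)
    return accepted


def split_train_eval(samples, eval_frac=0.2, seed=0):
    """Shuffle and hold out a fraction of samples for evaluation."""
    rng = random.Random(seed)
    shuffled = samples[:]
    rng.shuffle(shuffled)
    n_eval = max(1, int(len(shuffled) * eval_frac))
    return shuffled[n_eval:], shuffled[:n_eval]


def to_jsonl(samples) -> str:
    """Serialize samples one JSON object per line, a common fine-tuning input format."""
    return "\n".join(json.dumps(s) for s in samples)


if __name__ == "__main__":
    data = build_synthetic_set(["Summarize this invoice."], target_size=8)
    train, held_out = split_train_eval(data, eval_frac=0.25)
    print(to_jsonl(train))
```

Holding out part of the synthetic set only tells you whether the model fits the generated distribution; you would still want a separate, real-world eval in your domain to check that the synthetic tasks actually transfer.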