Current LLMs have a finite context length: the amount of input they can "consider" simultaneously.

This is a challenge if you want to run inference over:

- An entire codebase

- All of your Notion/Drive/Dropbox

- An entire social media site

Etc.

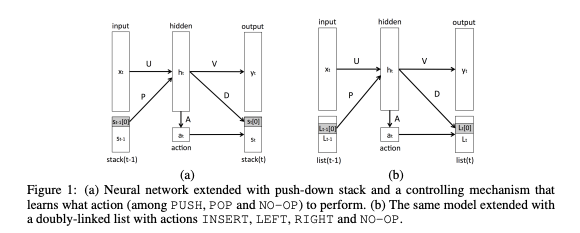

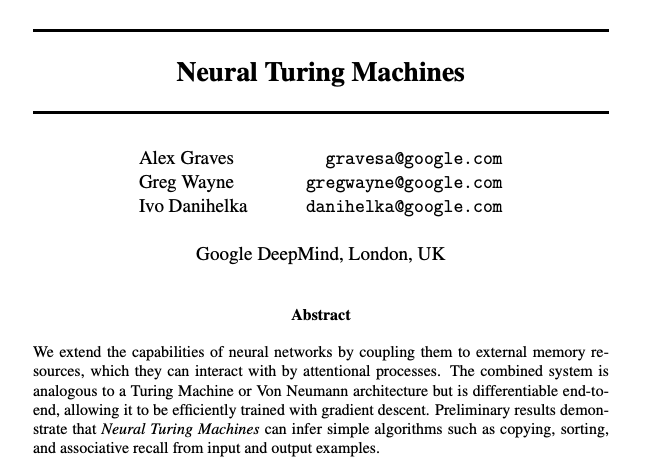

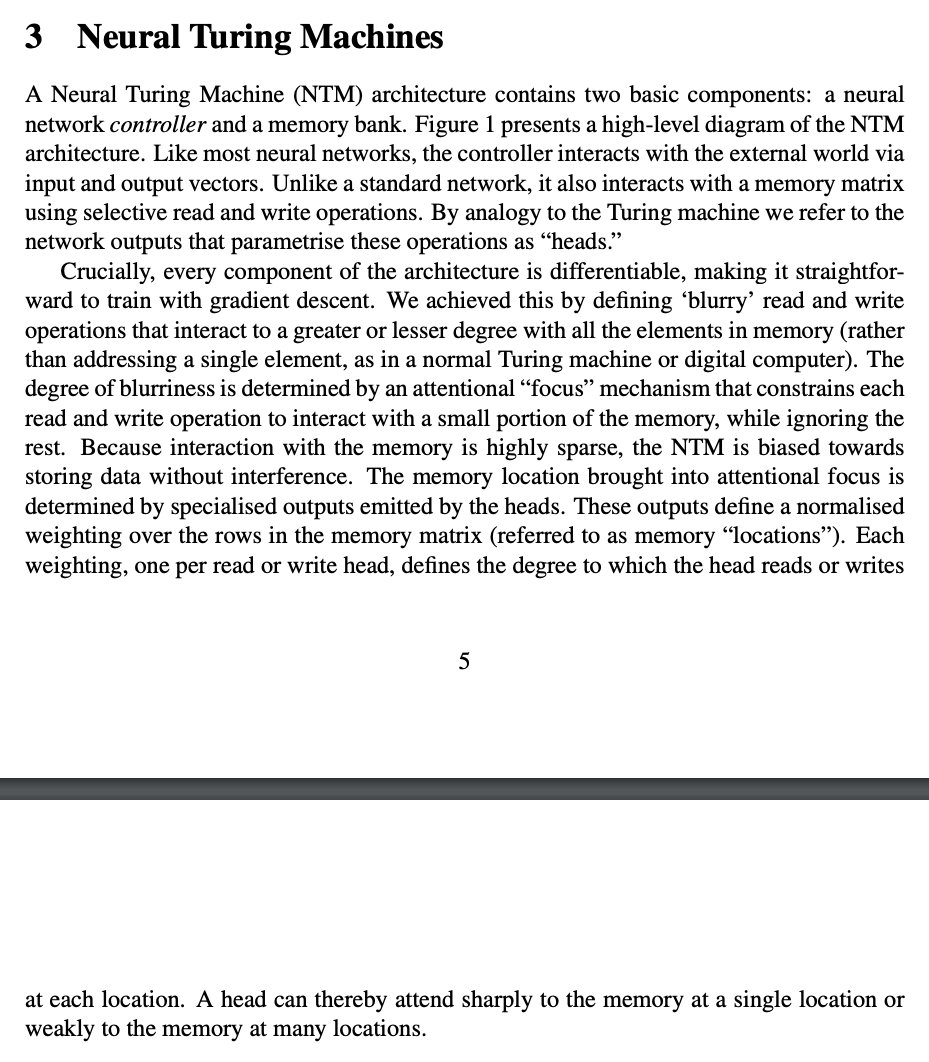

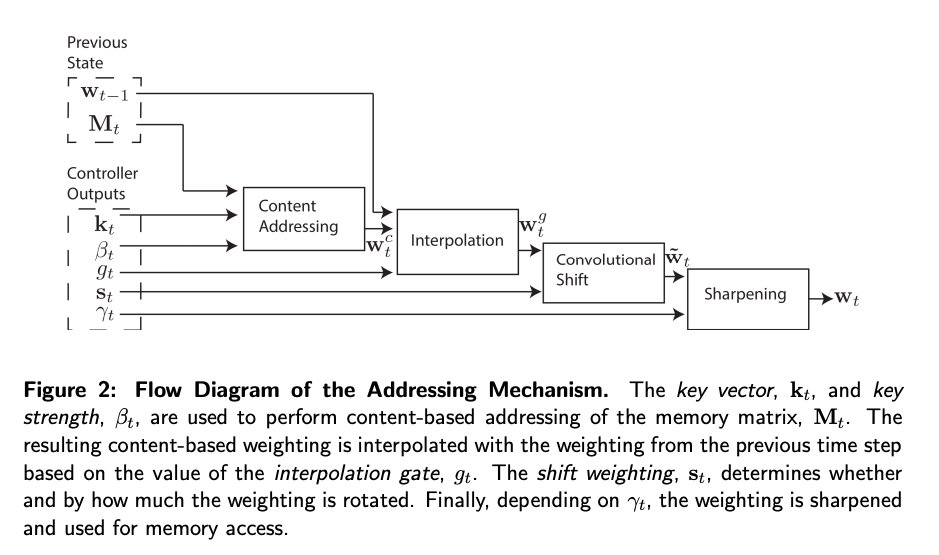

The idea in these (and other) approaches is to strap some sort of external data structure onto a sequence model, which then learns to store info in it and retrieve info from it.

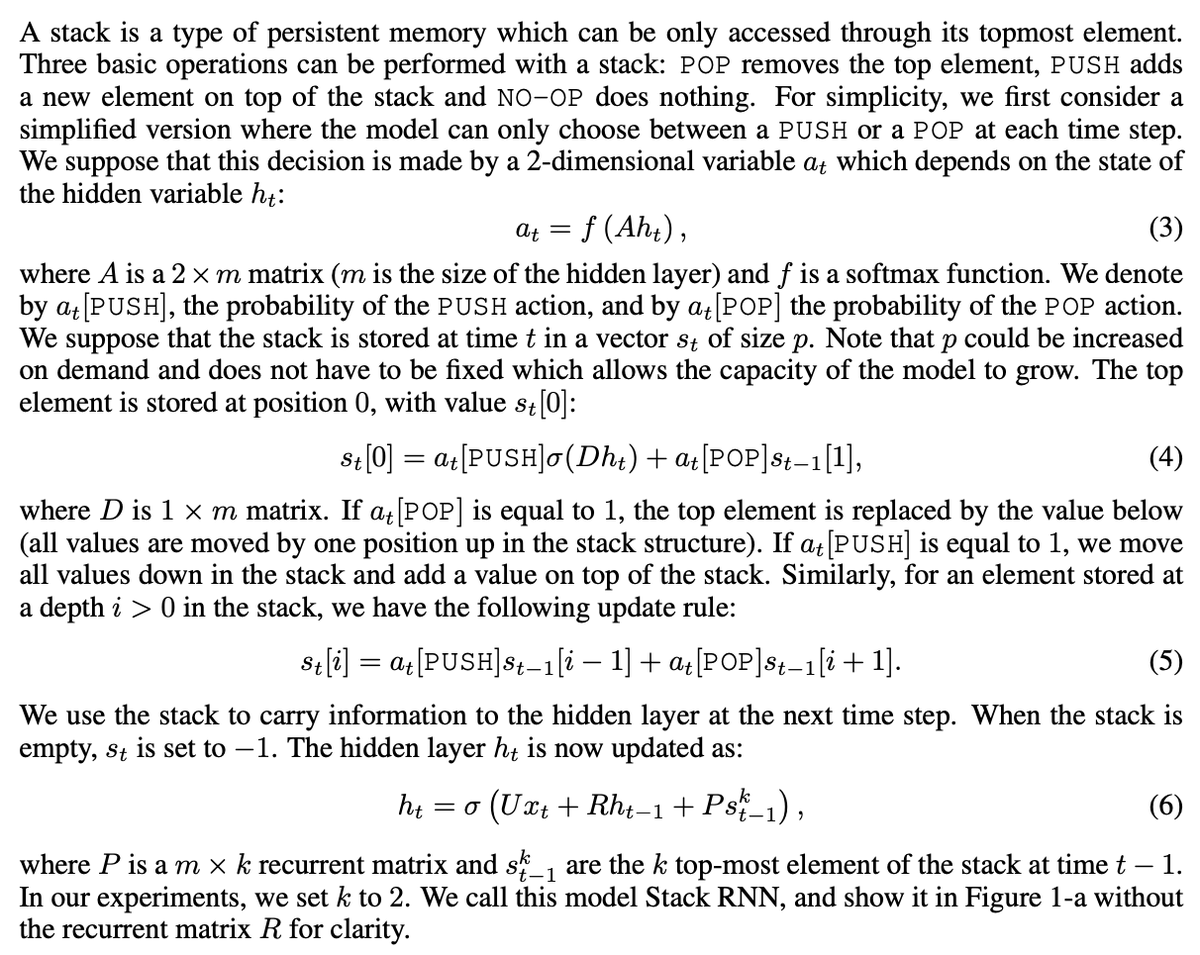

For instance, you can teach a regular RNN to output actions it wants to perform on a stack, like push/pop
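To make the push/pop idea concrete, here is a minimal sketch of one "soft" stack update, assuming the RNN emits three action probabilities (push, pop, no-op) plus a vector to push. The function name `soft_stack_step`, the fixed stack depth, and the three-action layout are my assumptions for illustration, not necessarily what the approaches pictured above use. Blending the three outcomes by their probabilities is what keeps the update differentiable.

```python
import numpy as np

def soft_stack_step(stack, action_probs, push_value):
    """One differentiable stack update (hypothetical helper).

    stack:        (depth, width) array, row 0 is the top of the stack
    action_probs: (p_push, p_pop, p_noop), assumed to sum to 1
    push_value:   (width,) vector the model wants to push
    """
    p_push, p_pop, p_noop = action_probs
    # Push: the new value becomes the top, everything else shifts down one slot
    # (the bottom row falls off because the depth is fixed).
    pushed = np.vstack([push_value[None, :], stack[:-1]])
    # Pop: everything shifts up one slot, and a zero row fills the bottom.
    popped = np.vstack([stack[1:], np.zeros((1, stack.shape[1]))])
    # The new stack is a probability-weighted blend of the three outcomes.
    return p_push * pushed + p_pop * popped + p_noop * stack

# With one-hot action probabilities this behaves like an ordinary stack.
stack = np.zeros((3, 2))
stack = soft_stack_step(stack, (1.0, 0.0, 0.0), np.array([1.0, 2.0]))  # push [1, 2]
stack = soft_stack_step(stack, (1.0, 0.0, 0.0), np.array([3.0, 4.0]))  # push [3, 4]
after_pop = soft_stack_step(stack, (0.0, 1.0, 0.0), np.zeros(2))       # pop
```

In a real model the action probabilities would come from a softmax over the RNN's outputs, and the top of the stack would be fed back in as part of the next input.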

A trivial version of this is that at every timestep an RNN gets to output a vector that gets summed into a fixed buffer, then it gets to read that buffer as part of input at the subsequent timestep
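The trivial version described above can be sketched in a few lines of NumPy. This is a forward pass only, with random untrained weights. The names (`run_buffer_rnn`, `W_write`) and all the sizes are made up for illustration. At each timestep the RNN reads the buffer alongside its input, then sums a write vector into it.

```python
import numpy as np

def run_buffer_rnn(inputs, hidden_size=8, buffer_size=4, seed=0):
    """Vanilla RNN with a summed external buffer (illustrative sketch, untrained)."""
    rng = np.random.default_rng(seed)
    input_size = inputs.shape[1]
    # Random weights stand in for parameters that would be learned by backprop.
    W_in = rng.normal(scale=0.1, size=(hidden_size, input_size + buffer_size))
    W_h = rng.normal(scale=0.1, size=(hidden_size, hidden_size))
    W_write = rng.normal(scale=0.1, size=(buffer_size, hidden_size))

    h = np.zeros(hidden_size)
    buffer = np.zeros(buffer_size)
    history = []
    for x in inputs:
        # Read: the current buffer is concatenated onto this timestep's input.
        step_input = np.concatenate([x, buffer])
        h = np.tanh(W_in @ step_input + W_h @ h)
        # Write: the RNN outputs a vector that gets summed into the buffer.
        buffer = buffer + W_write @ h
        history.append(buffer.copy())
    return h, np.stack(history)

final_hidden, buffer_history = run_buffer_rnn(np.ones((5, 3)))
```

Because the buffer only ever accumulates sums, nothing can be selectively overwritten or erased, which is one reason this version is so limited.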

You can do much better though.

By modern standards the performance of this isn't remarkable but it definitely *feels* like something that would kick ass.

No reason this wouldn't generalize to networks with transformers as the building blocks as well, e.g. GPT, T5, OPT etc.

In conclusion:

Two cool techniques were demonstrated a while ago for strapping some sort of data structure onto a sequence model (way smaller than LLMs) and training the whole thing with backpropagation

No reason this couldn't happen with LLMs too

And when it does, many problems relating to context overflow will be suddenly tractable

🙏
