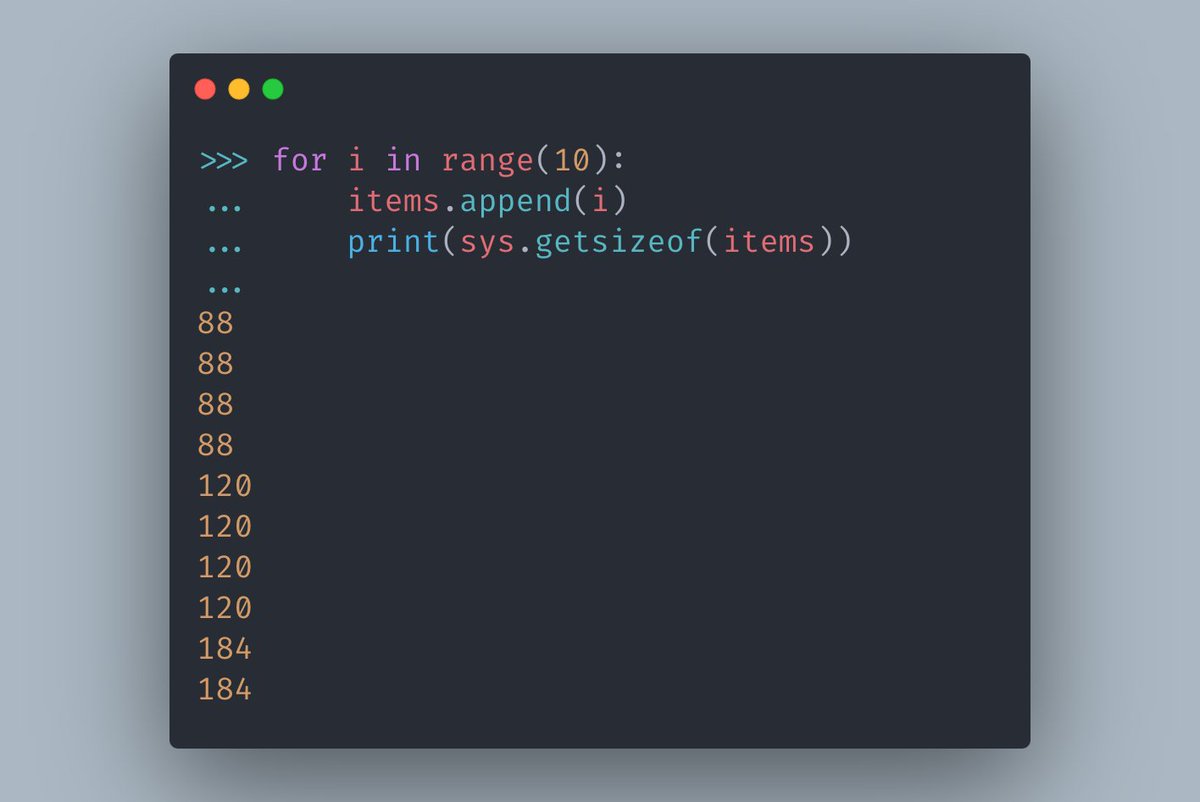

The list obviously doesn't start out with room for 10000 items; that would be too much memory to reserve for an empty list.

But by the end, the list holds 10000 items, so the memory reserved for it must be growing in the background.

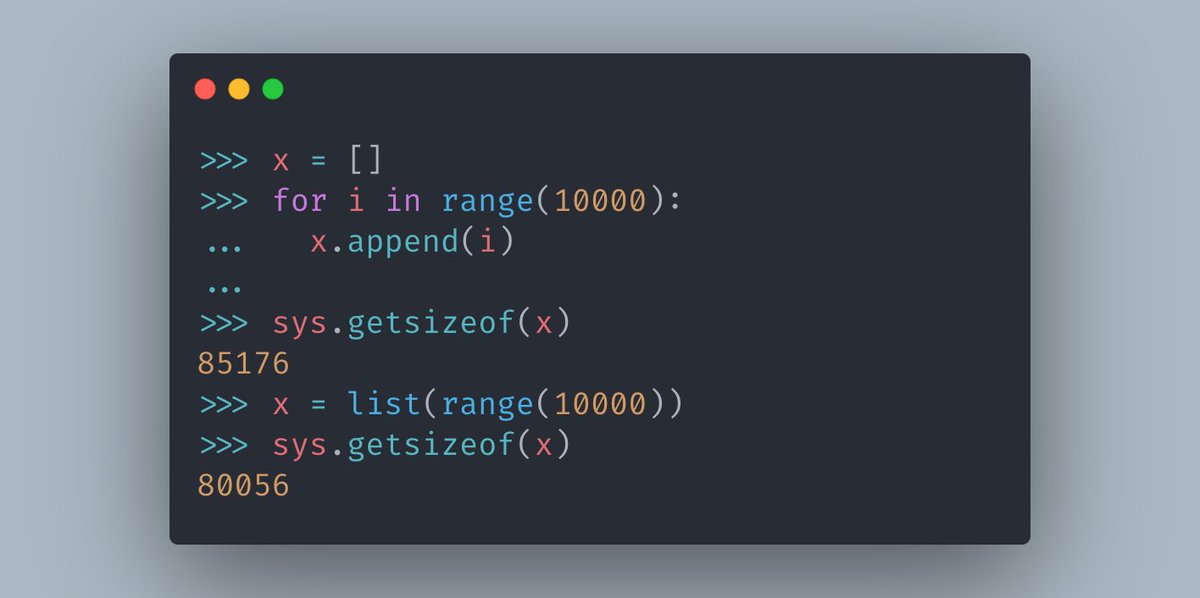

✨In fact, we can verify it with `sys.getsizeof()`:
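A minimal sketch of that check, assuming a CPython build (exact byte counts vary by version and platform): append 10000 items one at a time and count how often the size reported by `sys.getsizeof()` jumps. The names `items`, `sizes` and `resizes` are just illustrative.

```python
import sys

# Record the size the list object reports after every single append.
items = []
sizes = [sys.getsizeof(items)]
for i in range(10000):
    items.append(i)
    sizes.append(sys.getsizeof(items))

# The reported size only changes when the list's internal storage is resized,
# so counting the changes counts the resizes.
resizes = sum(1 for before, after in zip(sizes, sizes[1:]) if after != before)
print(f"empty: {sizes[0]} bytes, final: {sizes[-1]} bytes, resizes: {resizes}")
```

The size changes far less often than once per append, because CPython reserves a few spare slots each time it has to resize.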

What exactly happens when the size of the list changes?

The block of memory allocated for the list can't just magically grow, so how does this actually work?

In reality,

✨The list's contents (its array of pointers to the items) are copied to a bigger location.

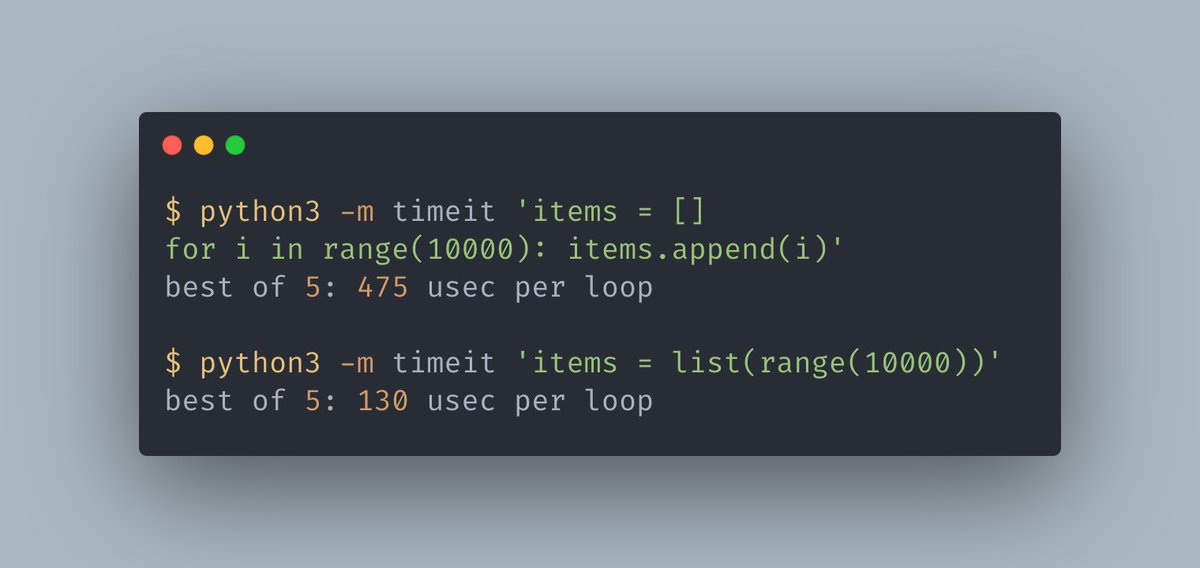

CPython over-allocates a bit on each resize so this doesn't happen on every `append`, but copying the list over and over still wastes CPU time.
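To picture the copying, here's a toy model in plain Python. `ToyDynamicArray` is a hypothetical class, not CPython's real implementation: it simulates a fixed-size memory block with a pre-sized Python list, doubles its capacity whenever it runs out (CPython grows by a smaller factor and uses `realloc`, which can sometimes extend the block in place), and counts how many times it had to move to a new block.

```python
class ToyDynamicArray:
    """Hypothetical toy model of a growable array (not CPython's actual code)."""

    def __init__(self):
        self._capacity = 0
        self._size = 0
        self._slots = []   # stands in for a fixed-size block of memory
        self.copies = 0    # how many times we moved to a bigger block

    def append(self, value):
        if self._size == self._capacity:
            # Out of room: reserve a bigger block and copy every slot over.
            new_capacity = max(4, self._capacity * 2)
            new_slots = [None] * new_capacity
            for i in range(self._size):
                new_slots[i] = self._slots[i]
            self._slots = new_slots
            self._capacity = new_capacity
            self.copies += 1
        self._slots[self._size] = value
        self._size += 1

    def __len__(self):
        return self._size


arr = ToyDynamicArray()
for i in range(10000):
    arr.append(i)
print(len(arr), arr.copies)  # 10000 13
```

Growing by a multiple of the current size keeps the number of moves small (13 here for 10000 appends), but each move still touches every item stored so far.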

Indeed! An empty list already takes up 56 bytes, which goes to the list object's own metadata.

But a list created from a known size adds 8 bytes for every item in it.

✨And that makes sense: a list stores one pointer per item, and every pointer on a 64-bit machine is 8 bytes. No space wasted!
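The same arithmetic can be checked directly. This sketch assumes CPython, where `sys.getsizeof()` on a list counts the object header plus one pointer slot per allocated item; `[None] * n` is used here as one way to build a list from a known size, and `struct.calcsize("P")` reports the pointer size of the running build.

```python
import struct
import sys

POINTER_SIZE = struct.calcsize("P")  # size of a C pointer on this build (8 on 64-bit)
empty = sys.getsizeof([])            # header/metadata only, no item slots

for n in (1, 10, 100, 10000):
    exact = [None] * n  # built from a known size, so exactly n slots are allocated
    per_item = (sys.getsizeof(exact) - empty) / n
    print(n, per_item, "bytes per item; pointer size:", POINTER_SIZE)
```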

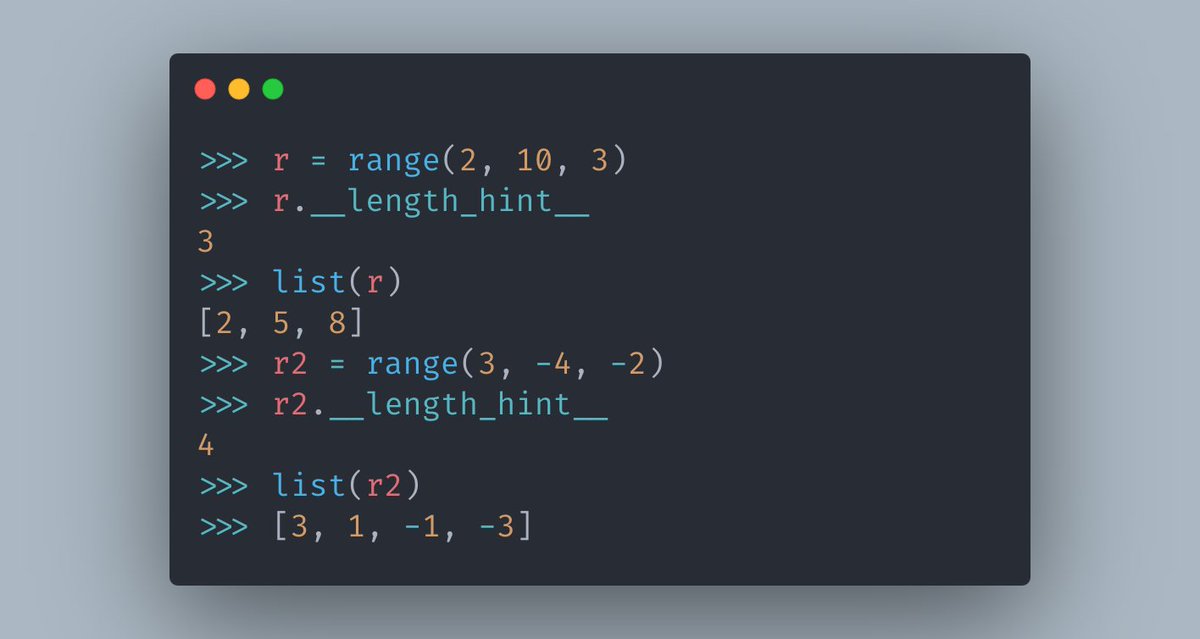

If an iterable defines a `__length_hint__` method, `list(iterable)` can use it to preallocate roughly that many slots from the beginning, so little or no time is wasted reallocating memory as the list is built up.
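To make this concrete, here's a minimal sketch with a hypothetical `Countdown` iterator that defines `__length_hint__`. `operator.length_hint()`, also introduced by PEP 424, is the helper that tries `len()` first and then falls back to `__length_hint__`. The hint is only an estimate: `list()` still works if it's wrong, it may just need to resize along the way.

```python
import operator


class Countdown:
    """Hypothetical iterator that knows how many items it has left."""

    def __init__(self, start):
        self.remaining = start

    def __iter__(self):
        return self

    def __next__(self):
        if self.remaining <= 0:
            raise StopIteration
        self.remaining -= 1
        return self.remaining

    def __length_hint__(self):
        # A hint, not a promise: how many items are still expected.
        return self.remaining


it = Countdown(5)
print(operator.length_hint(it))  # 5
print(list(it))                  # [4, 3, 2, 1, 0]
```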

🐍What other objects do you think define this dunder?

For more information, check out PEP 424:

peps.python.org
