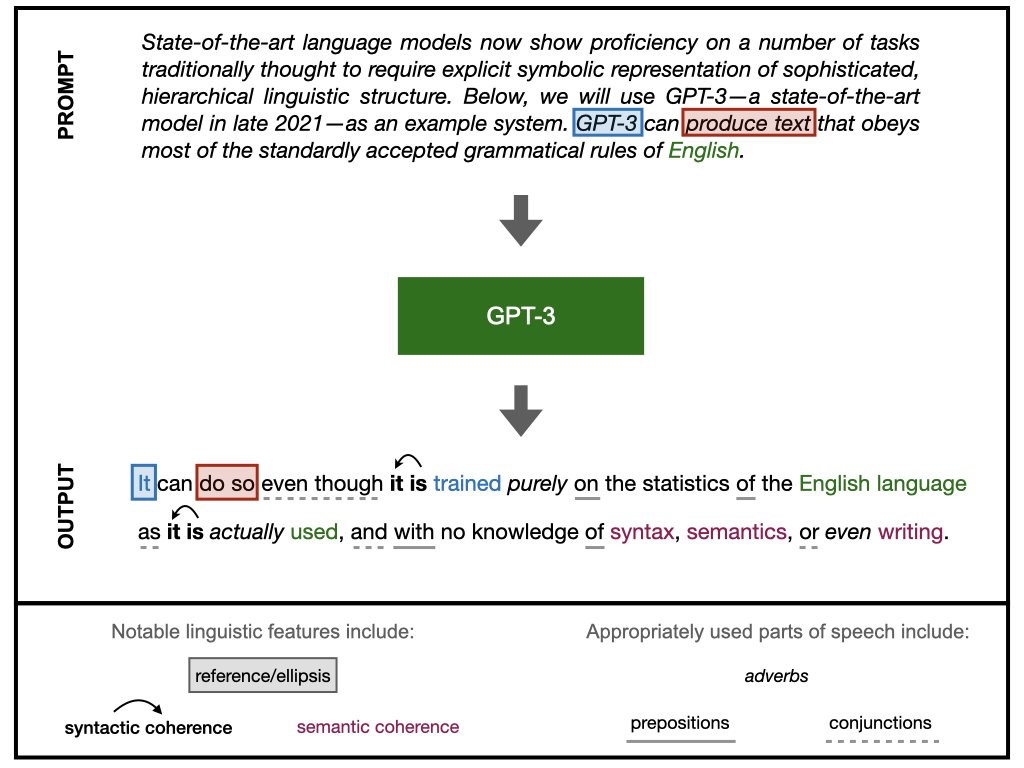

Thus, we argue that the word-in-context prediction objective is not enough to master human thought (even though it’s surprisingly effective for learning much about language!). 6/

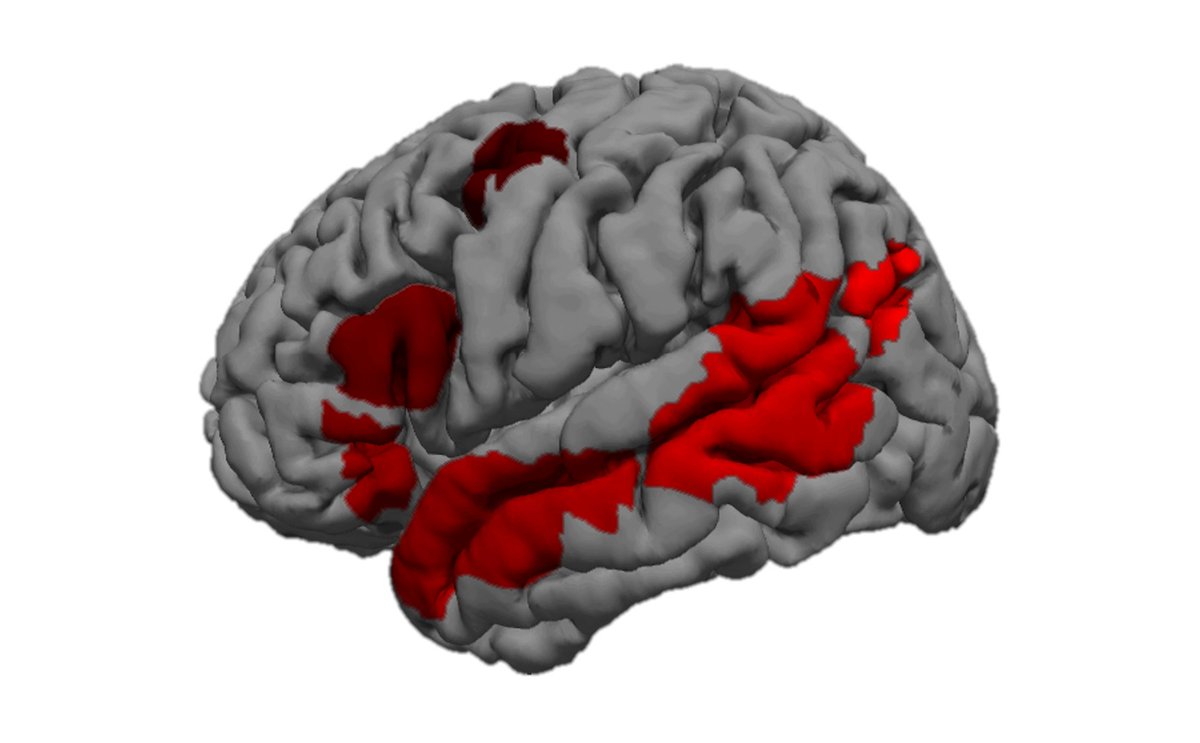

Like human brains, models that strive to master both formal & functional language competence will benefit from modular components - either built-in explicitly or emerging through a careful combo of data+training objectives+architecture. 7/

ChatGPT, with its combination of next-word prediction and RLHF objectives, might be a step in that direction (although it still can’t think imo). 8/

The formal/functional distinction is important for clarifying much of the current discourse around LLMs. Too often, people mistake coherent text generation for thought or even sentience. We call this a “good at language = good at thought” fallacy. 9/

theconversation.com

Similarly, criticisms directed at LLMs center on their inability to think (or do math, or maintain a coherent worldview) and sometimes overlook their impressive advances in language learning. We call this a “bad at thought = bad at language” fallacy. 10/

It’s been fun working with a brilliant team of coauthors @kmahowald @ev_fedorenko @IbanDlank @Nancy_Kanwisher & Josh Tenenbaum. 11/

We’ve done a lot of work refining our views and revising our arguments every time a new big model came out. In the end, we still think a cogsci perspective is valuable - and hope you do too :) 12/12

P.S. Although we have >20 pages of refs, we're likely missing stuff. If you think we don’t cover important work, comment below! We also under-cover some topics (grounding, memory, etc) - if you think something doesn’t square with the formal/functional distinction, let us know.

*should be Kassner & Schütze, so sorry
