We're releasing our @scale_AI hackathon 1st place project - "GPT is all you need for backend" with @evanon0ping @theappletucker

But let me first explain how it works:

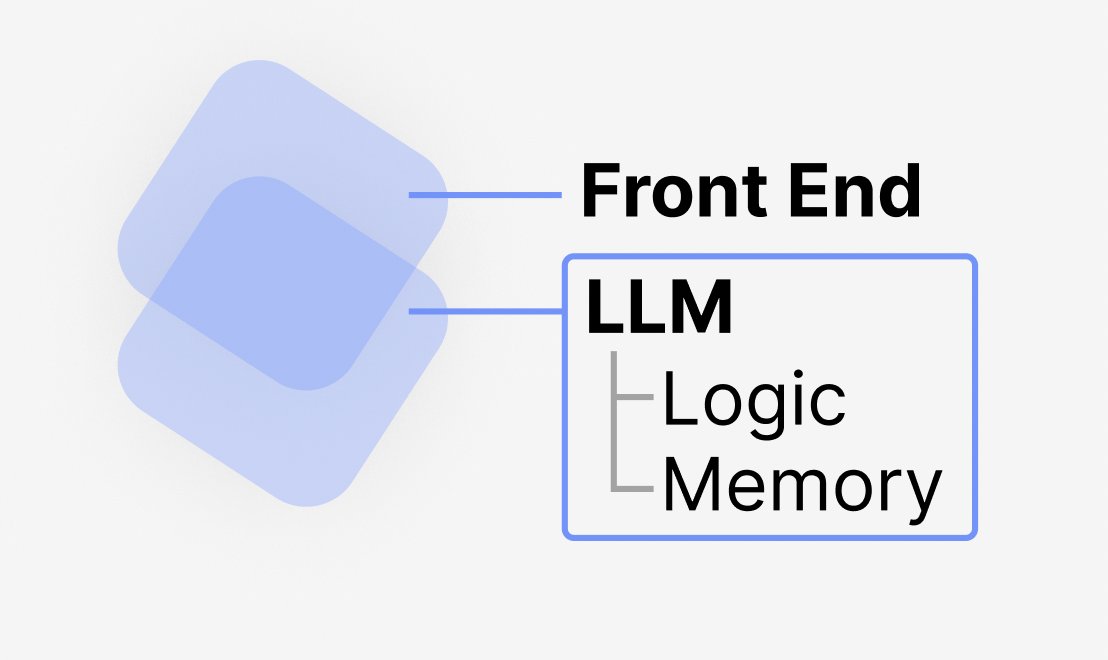

Our vision for a future tech stack is to completely replace the backend with an LLM that can both run logic and store memory. We demonstrated this with a Todo app.

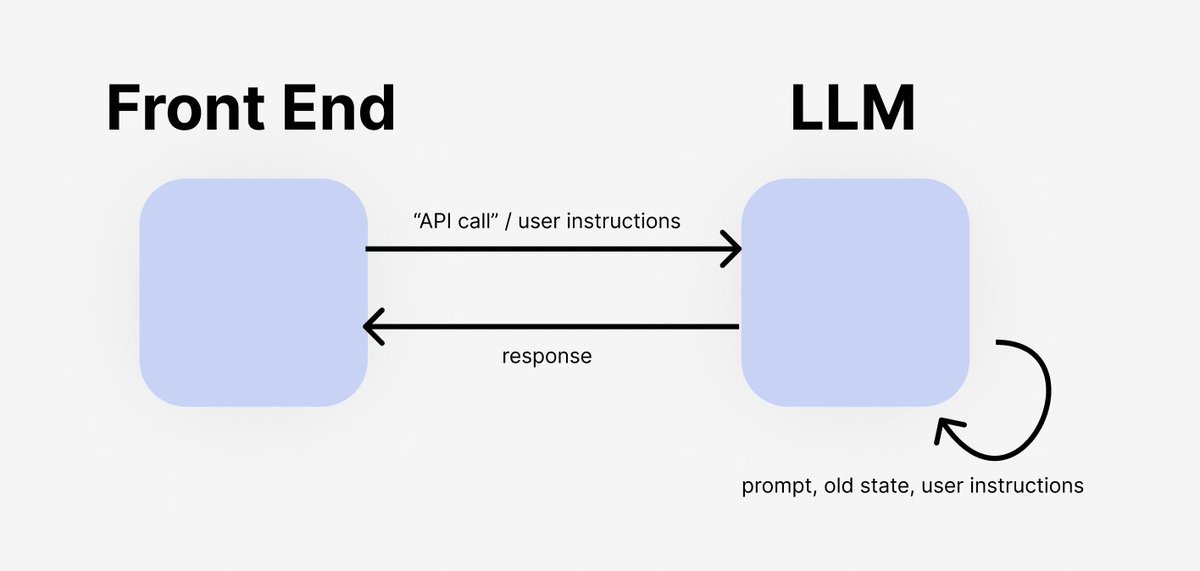

We first instruct the LLM on the purpose of the backend (e.g. "This is a todo list app") and provide it with an initial JSON blob for the database state (e.g. {todo_items: [{title: "eat breakfast", completed: true}, {title: "go to school", completed: false}]}).
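As a rough illustration of that setup step, here is a minimal sketch of how the initial prompt might be assembled. The function name `build_backend_prompt` and the exact prompt wording are assumptions made for this example, not the project's actual code.

```python
import json


def build_backend_prompt(purpose: str, initial_state: dict) -> str:
    """Combine the app's purpose and its starting database state into one prompt.

    Hypothetical helper: the wording below is an assumption for illustration.
    """
    return (
        f"{purpose}\n"
        "You act as the backend for this app and hold its database.\n"
        "Current database state (JSON):\n"
        f"{json.dumps(initial_state, indent=2)}\n"
    )


# The initial state mirrors the JSON blob from the thread.
initial_state = {
    "todo_items": [
        {"title": "eat breakfast", "completed": True},
        {"title": "go to school", "completed": False},
    ]
}

prompt = build_backend_prompt("This is a todo list app.", initial_state)
print(prompt)
```

The prompt would then be sent to whichever LLM is used; that call is left out here because the thread does not say which API was involved.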

Crazy thing is, the data is flexibly "stored". You can tell the LLM to create sublists, convert to key-value pairs, manage multiple linked todo lists, etc.

Because we simply fed the LLM an idea of the data at the beginning, the data schema is entirely malleable on the fly!

Even more, the backend logic is also entirely flexible. You can "call" the API with add_five_household_chores() or deleteAllTodosDealingWithFood() or sort_todos_by_estimated_time().

And it will do exactly that, even though you never wrote any of those functions!
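One way to picture such a "call" is sketched below, under stated assumptions: the LLM is passed in as a plain function from prompt text to reply text, and it is asked to answer with a JSON object holding the new state and a response. The names `call_backend` and `fake_llm`, and the reply format, are hypothetical and not taken from the project.

```python
import json
from typing import Any, Callable


def call_backend(llm: Callable[[str], str], state: dict, api_call: str) -> tuple[dict, Any]:
    """Ask the LLM to 'execute' an arbitrary API call against the current state.

    Assumed reply format: {"state": <new database state>, "response": <API response>}.
    """
    prompt = (
        "This is a todo list app. You are its backend.\n"
        f"Database state (JSON): {json.dumps(state)}\n"
        f"API call: {api_call}\n"
        'Reply with JSON only: {"state": <new database state>, "response": <API response>}'
    )
    reply = json.loads(llm(prompt))
    return reply["state"], reply["response"]


def fake_llm(prompt: str) -> str:
    # Stand-in so the sketch runs offline: ignores the prompt and returns a canned reply.
    return json.dumps({"state": {"todo_items": []}, "response": "deleted 1 item"})


state = {"todo_items": [{"title": "eat breakfast", "completed": True}]}
state, response = call_backend(fake_llm, state, "deleteAllTodosDealingWithFood()")
print(state, response)
```

Note that no handler for deleteAllTodosDealingWithFood() exists anywhere in this code; the function name is just text in the prompt, and the new state is whatever the model returns. A real model could return malformed JSON, so a production version would need validation around `json.loads`.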

A ToDo app is just one example of what's possible. We thought of LLMagnus Chess App, flash cards with LLM backends, etc. The possibilities are limitless.

The future of backends might be no server, no DB, and only LLM??!

github.com
