I've been studying machine learning for half a decade, and here is the most popular function we use every day:

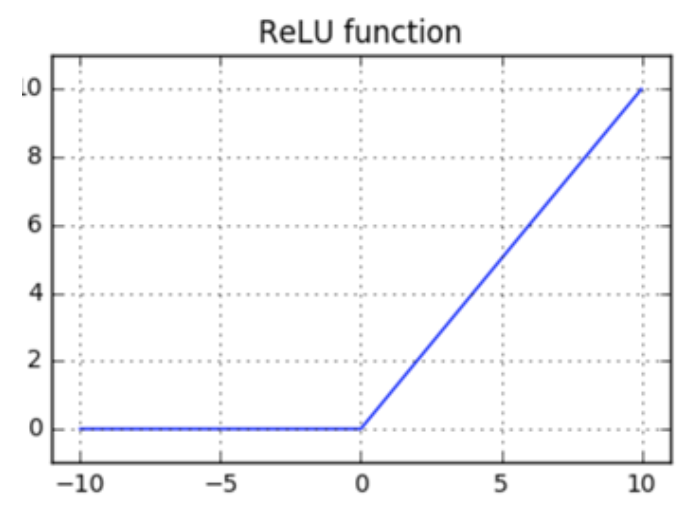

f(x) = max(0, x)

Yet many people don't understand a fundamental characteristic of this function.

Here is what you need to know:

The name of this function is "Rectified Linear Unit" or ReLU.

Most people think ReLU is both continuous and differentiable.

They are wrong.

I know because I've asked this question several times in @0xbnomial and interviews.

Many make the same mistake.

Let's start by defining ReLU:

f(x) = max(0, x)

In English: if x <= 0, the function will return 0. Otherwise, the function will return x.
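
As a minimal sketch in plain Python (the name `relu` is just an illustrative choice), the definition translates almost word for word:

```python
def relu(x: float) -> float:
    """Return max(0, x): 0 when x <= 0, and x otherwise."""
    return max(0.0, x)


# Try a few inputs on both sides of zero.
for x in (-2.0, -0.5, 0.0, 0.5, 2.0):
    print(x, relu(x))
```

Negative inputs and zero all map to 0, while positive inputs pass through unchanged.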

A necessary condition for a function to be differentiable: it must be continuous.

ReLU is continuous. That's good, but not enough.
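
The only place continuity could fail is x = 0, where the two pieces of the function meet. A quick check in standard limit notation:

$$
\lim_{x \to 0^-} f(x) = \lim_{x \to 0^-} 0 = 0, \qquad
\lim_{x \to 0^+} f(x) = \lim_{x \to 0^+} x = 0, \qquad
f(0) = \max(0, 0) = 0
$$

All three values agree, so there is no jump at x = 0.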

Its derivative must also exist at every point.

Here is where things get interesting.

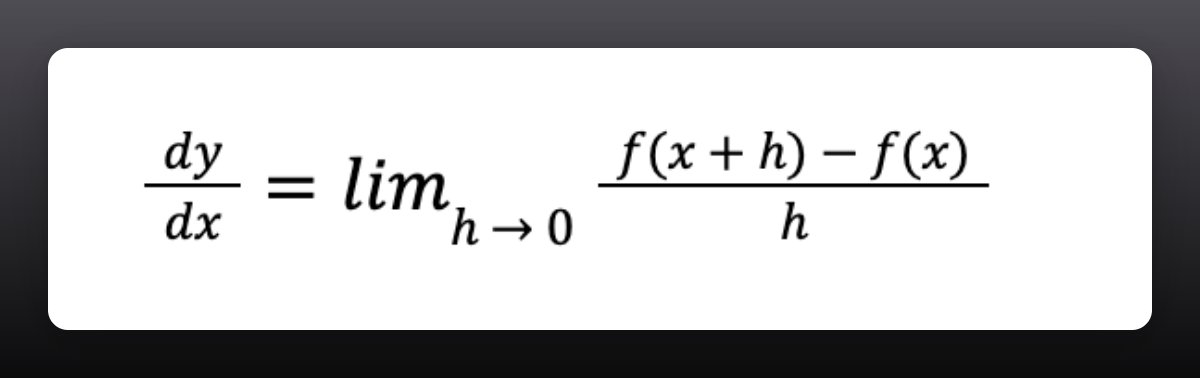

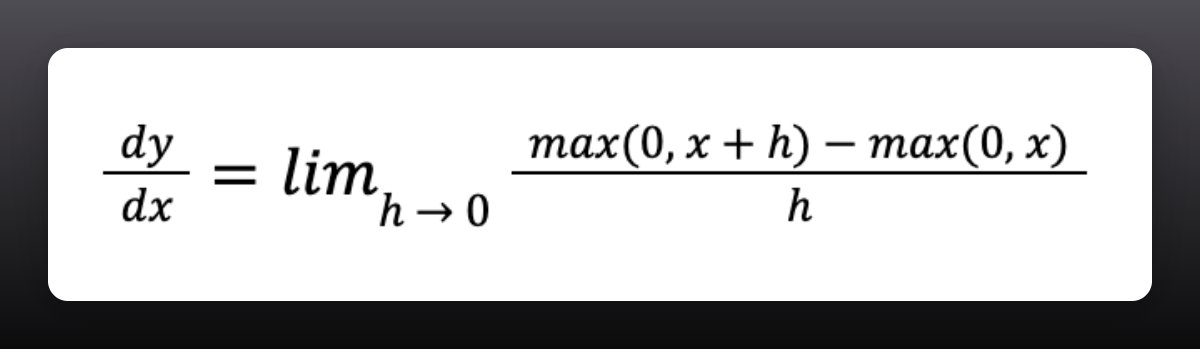

For ReLU to be differentiable, its derivative must exist at x = 0 (our problematic point).

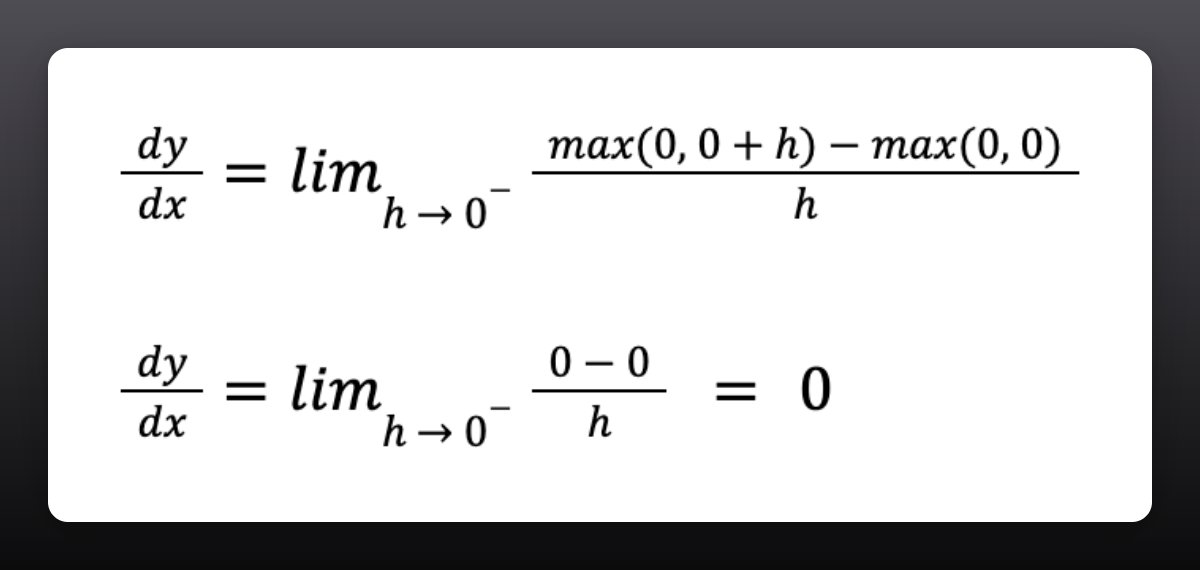

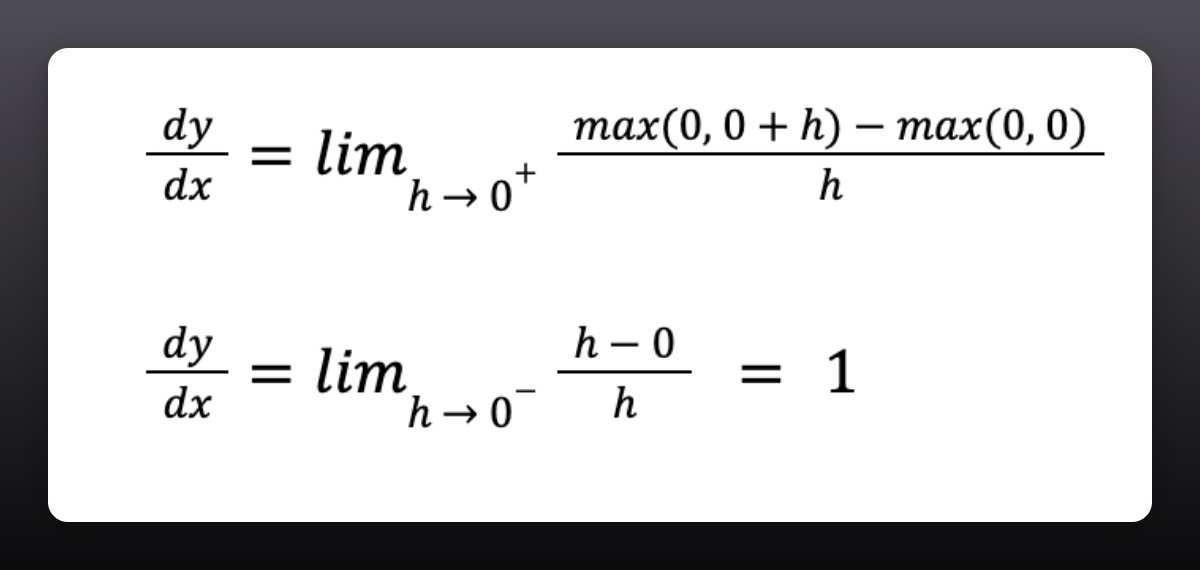

To see whether the derivative exists, we need to check that the left-hand and right-hand limits of the difference quotient exist and are equal at x = 0.

That shouldn't be hard to do.

This is what we have:

1. The left-hand limit is 0.

2. The right-hand limit is 1.
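
Both values follow from the difference quotient at x = 0, written here in standard notation with f(x) = max(0, x):

$$
\lim_{h \to 0^-} \frac{f(0 + h) - f(0)}{h} = \lim_{h \to 0^-} \frac{0 - 0}{h} = 0
$$

$$
\lim_{h \to 0^+} \frac{f(0 + h) - f(0)}{h} = \lim_{h \to 0^+} \frac{h - 0}{h} = 1
$$

For negative h the function returns 0, and for positive h it returns h itself, which is why the two slopes differ.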

For the function's derivative to exist at x = 0, both the left-hand and right-hand limits should be the same.

This is not the case. The derivative of ReLU doesn't exist at x = 0.
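
A rough numerical check can make the mismatch visible. This is only a sketch: the helper names `left_slope` and `right_slope` are made up for illustration, and finite step sizes only approximate the limits.

```python
def relu(x: float) -> float:
    return max(0.0, x)


def left_slope(f, x: float, h: float) -> float:
    """Slope of f between x - h and x (approaching x from the left)."""
    return (f(x) - f(x - h)) / h


def right_slope(f, x: float, h: float) -> float:
    """Slope of f between x and x + h (approaching x from the right)."""
    return (f(x + h) - f(x)) / h


# Shrink the step size and watch both slopes at x = 0.
for h in (1e-1, 1e-3, 1e-6):
    print(h, left_slope(relu, 0.0, h), right_slope(relu, 0.0, h))
```

However small the step gets, the slope from the left stays at 0 and the slope from the right stays at 1, so they never meet.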

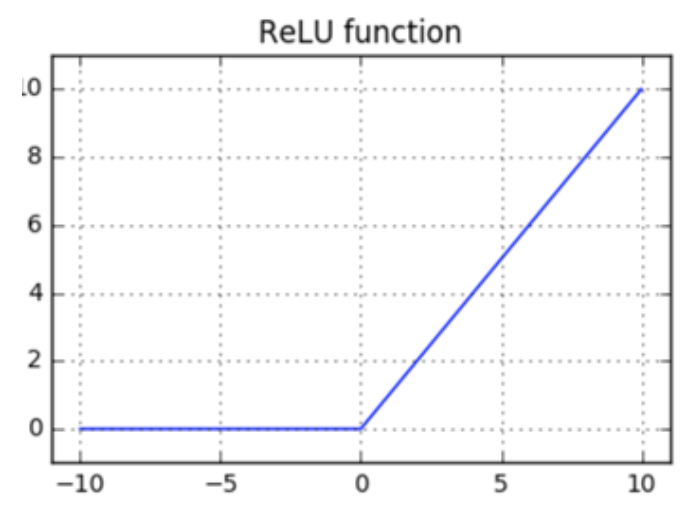

We now have the complete answer:

• ReLU is continuous

• ReLU is not differentiable at x = 0

But here is the central point of confusion:

How come ReLU is not differentiable, yet we can still use it as an activation function with Gradient Descent?

In practice, we don't care that the derivative of ReLU is undefined at x = 0. When the input is exactly 0, we set the derivative to 0 (or another fixed value, such as 1) and move on with our lives.

A nice hack.

This is the reason we can still use ReLU together with Gradient Descent.
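
Here is a minimal sketch of that convention in plain Python. The function `relu_grad`, its `grad_at_zero` parameter, and the toy loss are all hypothetical names chosen for illustration, not how any particular library implements it.

```python
def relu(x: float) -> float:
    return max(0.0, x)


def relu_grad(x: float, grad_at_zero: float = 0.0) -> float:
    """Derivative of ReLU, with a chosen value at x = 0.

    The true derivative is undefined at 0, so grad_at_zero
    (an assumption of this sketch) simply picks a number.
    """
    if x > 0:
        return 1.0
    if x < 0:
        return 0.0
    return grad_at_zero


# One gradient descent step on the toy loss L(w) = relu(w * x).
# By the chain rule, dL/dw = relu_grad(w * x) * x.
w, x, learning_rate = 0.0, 2.0, 0.1
w = w - learning_rate * relu_grad(w * x) * x
print(w)
```

Even though the input to ReLU is exactly 0 here, the update is well defined because we agreed on a value for the derivative ahead of time.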
