#01_ ControlNet Depth & Pose Workflow Quick Tutorial #stablediffusion #AIイラスト #pose2image #depth2image

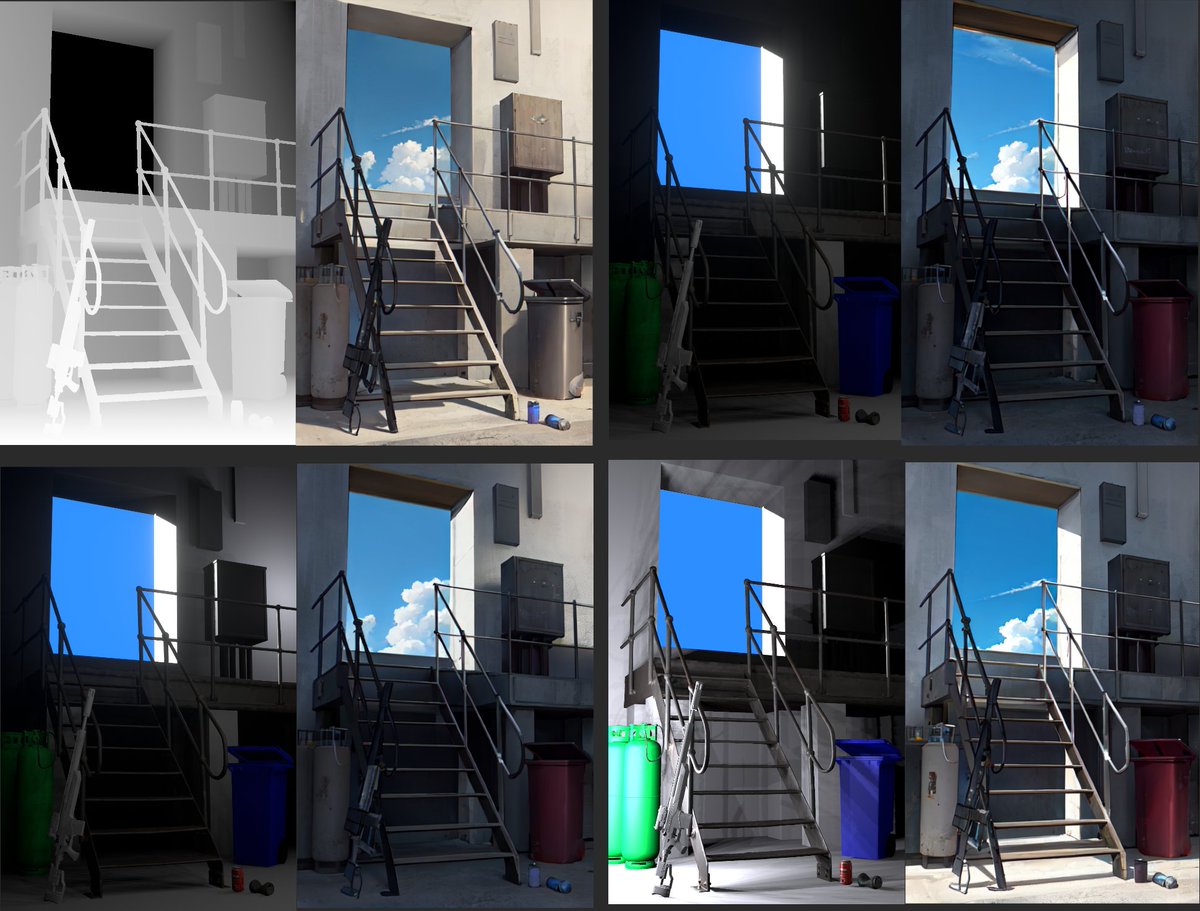

#02_ First, we need to render the background and the character's OpenPose bones separately. The background render is used for Depth2image and the bones for Pose2image. I used Blender. youtube.com

#07_ The ABG Remover script makes it easy to remove the background. The generated mask needs a bit of tweaking to make it cleaner. github.com
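For the mask cleanup, here is a minimal sketch of one way to do it by hand with Pillow. This is not what the ABG Remover script does internally. The `clean_mask` helper and its `threshold`, `shrink`, and `feather` parameters are hypothetical names for illustration, and the demo mask is synthetic.

```python
from PIL import Image, ImageFilter


def clean_mask(mask, threshold=128, shrink=3, feather=1.0):
    """Binarize a grayscale mask, trim its edge slightly, then soften it.

    Hypothetical helper for illustration; the defaults are guesses to tune by eye.
    """
    mask = mask.convert("L")
    # Hard threshold: drop faint, semi-transparent noise.
    binary = mask.point(lambda v: 255 if v >= threshold else 0)
    # MinFilter erodes white areas: removes isolated specks and trims the halo.
    eroded = binary.filter(ImageFilter.MinFilter(shrink))
    # A small blur feathers the edge so the later merge has no hard seam.
    return eroded.filter(ImageFilter.GaussianBlur(feather))


# Synthetic demo: a white square (the "character") plus one stray bright pixel.
demo = Image.new("L", (32, 32), 0)
demo.paste(255, (8, 8, 24, 24))
demo.putpixel((2, 2), 200)

cleaned = clean_mask(demo)
```

With a real mask, you would load it with `Image.open(...)` and adjust `shrink` and `feather` until the outline looks clean.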

#08_ Finally, merge the background and the characters, then upscale the result to a higher resolution. I used the Ultimate SD Upscale extension. github.com
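The merge step can be sketched roughly as follows, assuming Pillow and a mask where white marks the character. `merge_layers` is a hypothetical helper and the images here are synthetic stand-ins. The plain Lanczos resize at the end only produces a quick preview; it is not a substitute for Ultimate SD Upscale, which runs inside the web UI.

```python
from PIL import Image


def merge_layers(background, character, mask):
    """Place the character over the background wherever the mask is white.

    Hypothetical helper for illustration; all layers are resized to the
    background's size before compositing.
    """
    background = background.convert("RGB")
    character = character.convert("RGB").resize(background.size)
    mask = mask.convert("L").resize(background.size)
    # Image.composite takes the first image where the mask is 255
    # and the second image where the mask is 0.
    return Image.composite(character, background, mask)


# Synthetic stand-ins: blue background, red character, square mask.
bg = Image.new("RGB", (64, 64), (0, 0, 255))
ch = Image.new("RGB", (64, 64), (255, 0, 0))
m = Image.new("L", (64, 64), 0)
m.paste(255, (16, 16, 48, 48))

merged = merge_layers(bg, ch, m)

# Quick 2x preview with a plain resize (no diffusion involved).
preview = merged.resize((merged.width * 2, merged.height * 2), Image.LANCZOS)
```

The merged image is what you would then feed to the upscaler.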

#11_ A Blender model that looks like OpenPose bones can be downloaded for free here. toyxyz.gumroad.com
