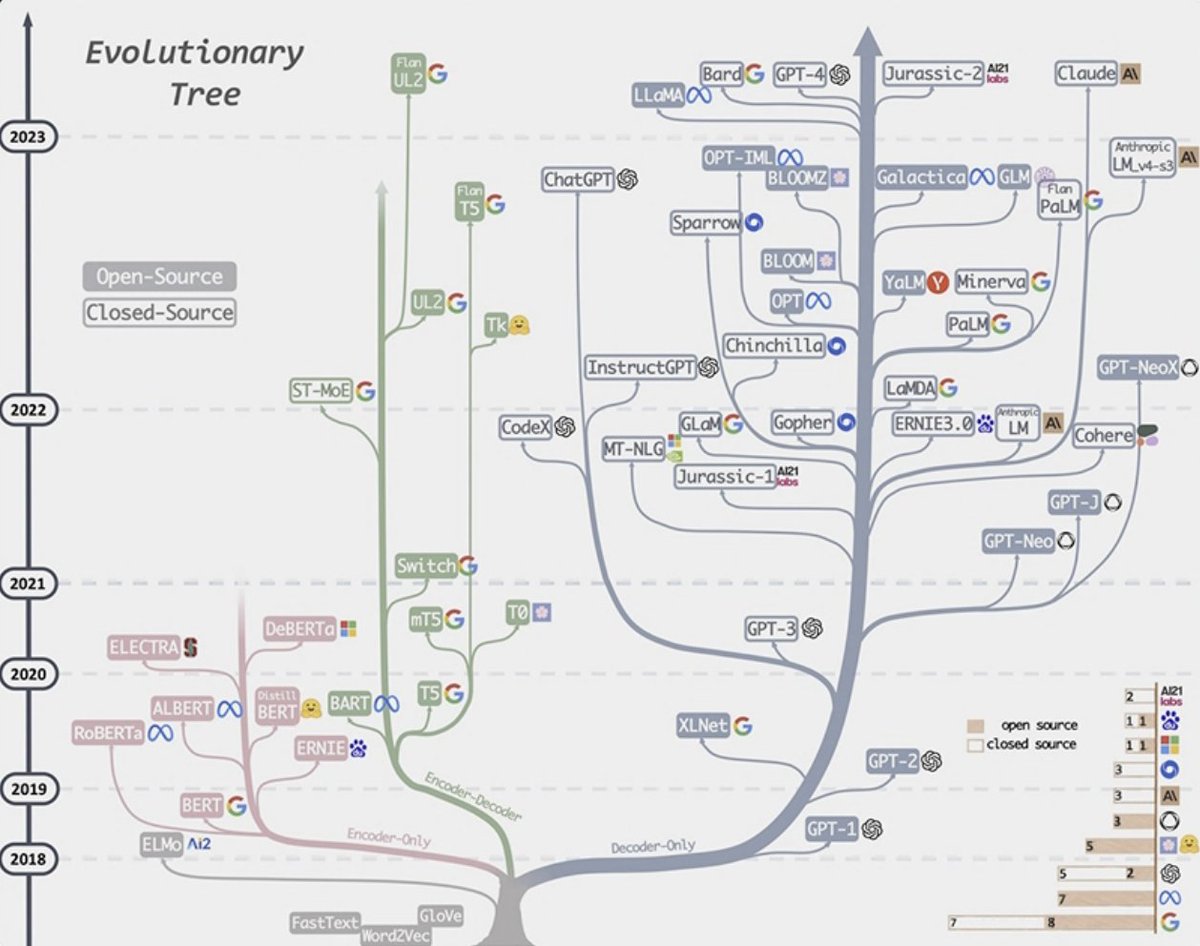

Andrej repeats this several times: the best open source model to learn from right now is probably LLaMA from @MetaAI (since OAI didn't release anything about GPT-4)

GPT-2 - released + weights

GPT-3 - base model available via API (davinci)

GPT-4 - base model not available via API

8/

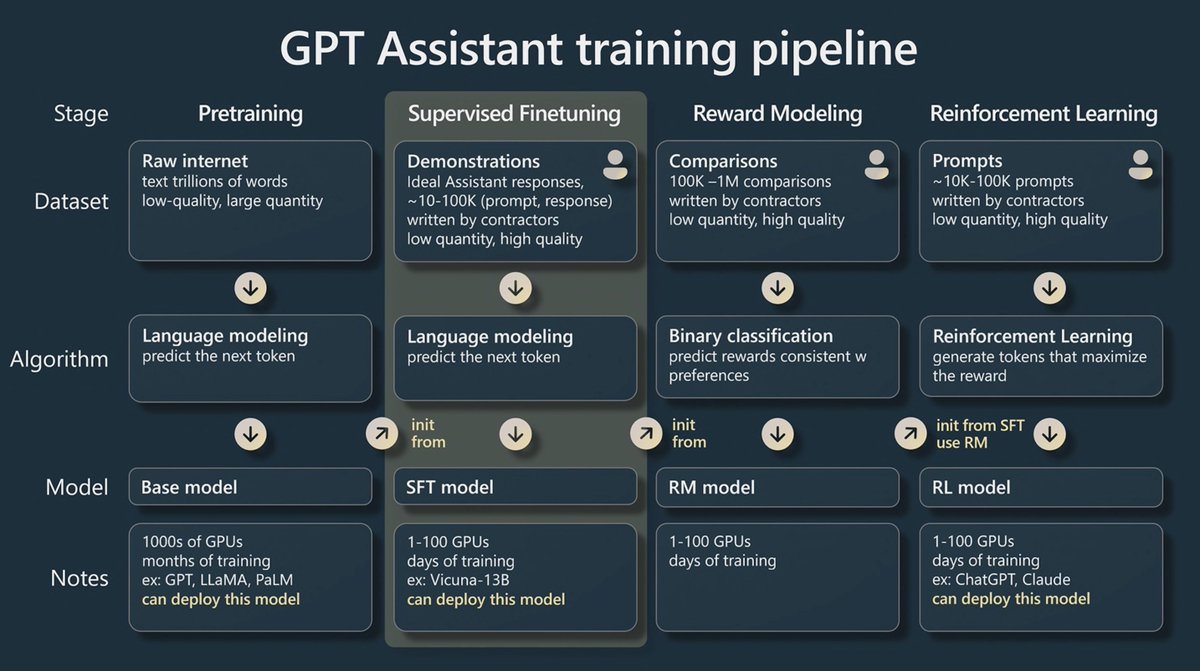

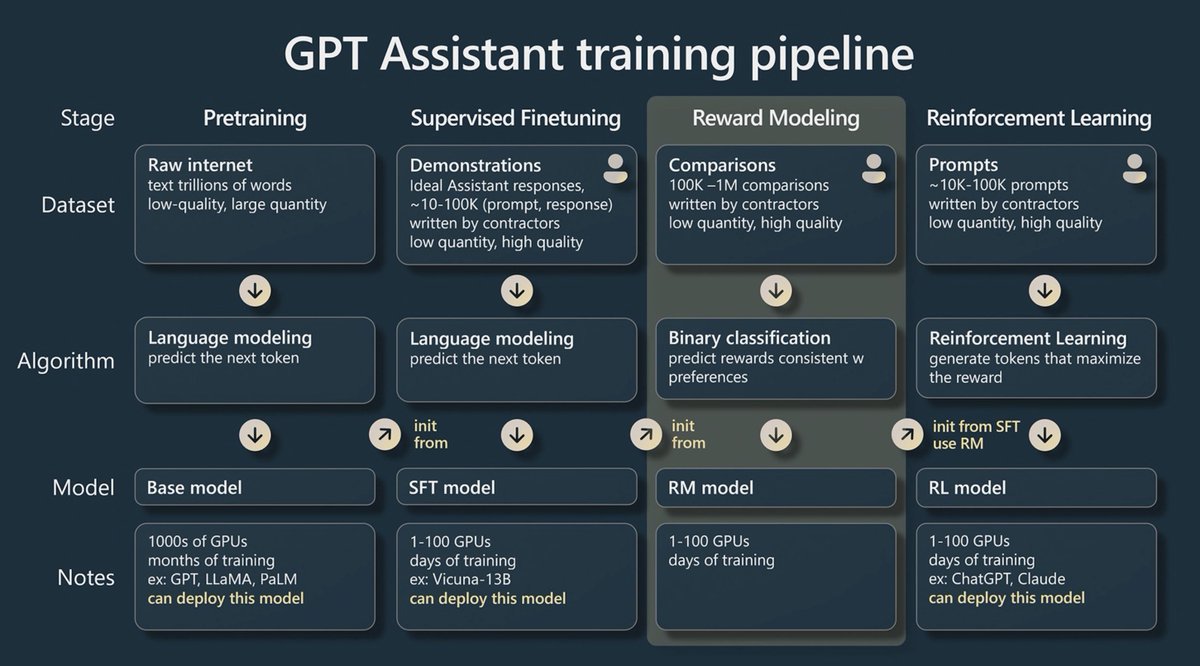

This is done by weighting the better-voted responses. For example, when you hit 👍 or 👎 in ChatGPT, or choose to regenerate a response, those signals are great for RLHF.

12/

Andrej is going into the potential reasons why RLHF models "feel" better to us, at least in terms of being a good assistant.

Here again if anyone's still reading, I'll refer you to the video 😅

13/

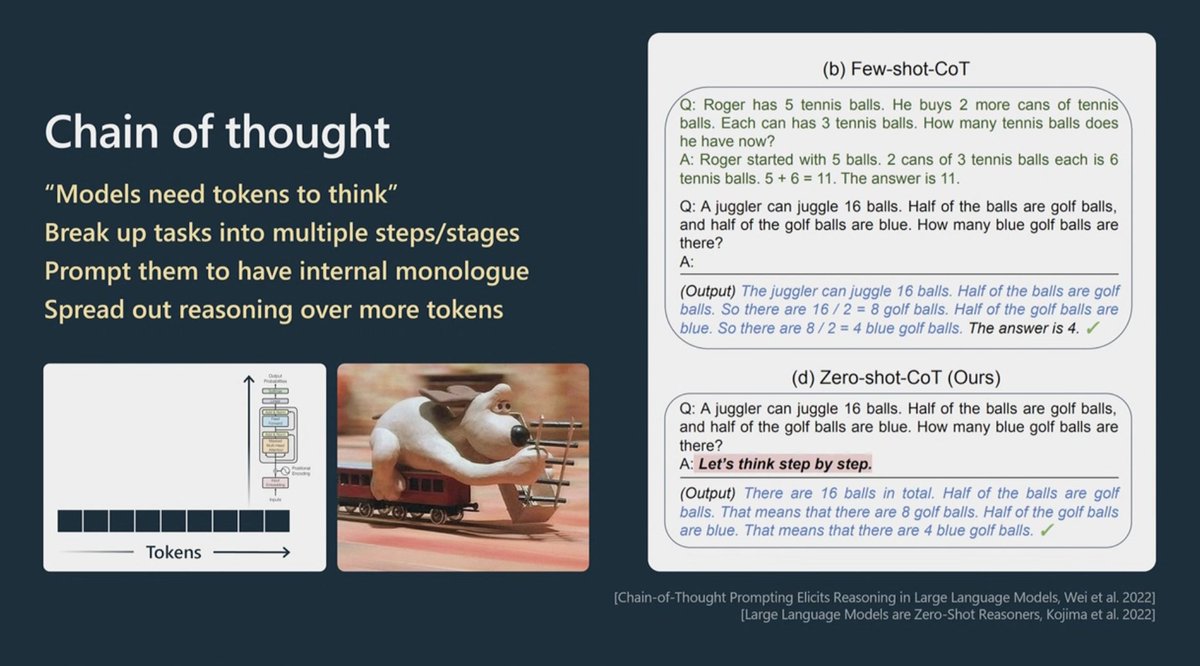

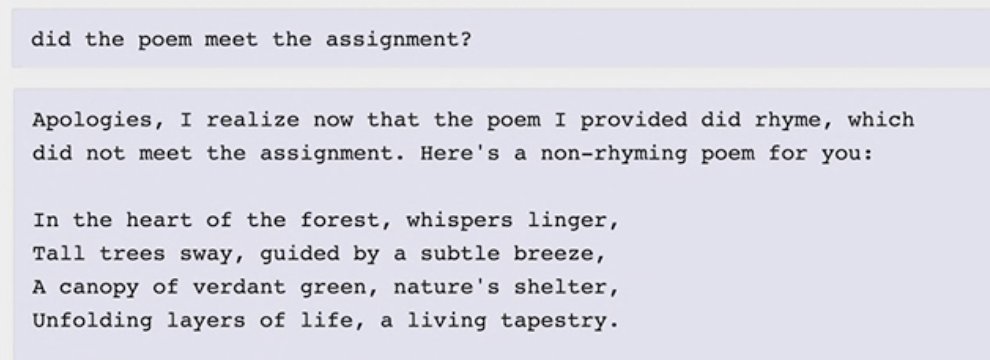

Now Andrej is going into Self Reflection as a method.

Models can get "stuck" because they have no way to cancel tokens they have already sampled.

Imagine saying the wrong word, stopping yourself mid-sentence with "let me rephrase", and restarting the sentence.

20/
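A rough sketch of what self reflection can look like from the outside, assuming a hypothetical `ask` function that sends a prompt to a model and returns text. The prompts, the YES/NO check and the `fake_model` stand-in are all made up for illustration, not taken from the talk.

```python
from typing import Callable


def reflect_and_retry(ask: Callable[[str], str], task: str, max_rounds: int = 2) -> str:
    """Draft an answer, ask the model to check it, and redo it if the check fails."""
    answer = ask(task)
    for _ in range(max_rounds):
        # The model cannot un-sample tokens, so we give it a second pass to judge them.
        verdict = ask(
            f"Task: {task}\nAnswer: {answer}\n"
            "Did the answer meet the task? Reply YES or NO."
        )
        if verdict.strip().upper().startswith("YES"):
            break
        answer = ask(
            f"Task: {task}\nPrevious attempt (rejected): {answer}\n"
            "Write a better answer."
        )
    return answer


# Hypothetical stand-in for a real model call so the sketch runs offline.
def fake_model(prompt: str) -> str:
    if "Reply YES or NO" in prompt:
        return "NO" if "Answer: roses are red" in prompt else "YES"
    if "Previous attempt" in prompt:
        return "a poem that does not rhyme"
    return "roses are red"


print(reflect_and_retry(fake_model, "Write a poem that does not rhyme."))
```

Swapping `fake_model` for a real API call is the only change needed to try this against an actual model.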

Andrej also calls out #AutoGPT (by @SigGravitas) as a project that got overhyped but is still very interesting to observe and get inspiration from

I'll plug my Twitter list of "Agent" builders that includes many of those folks

24/

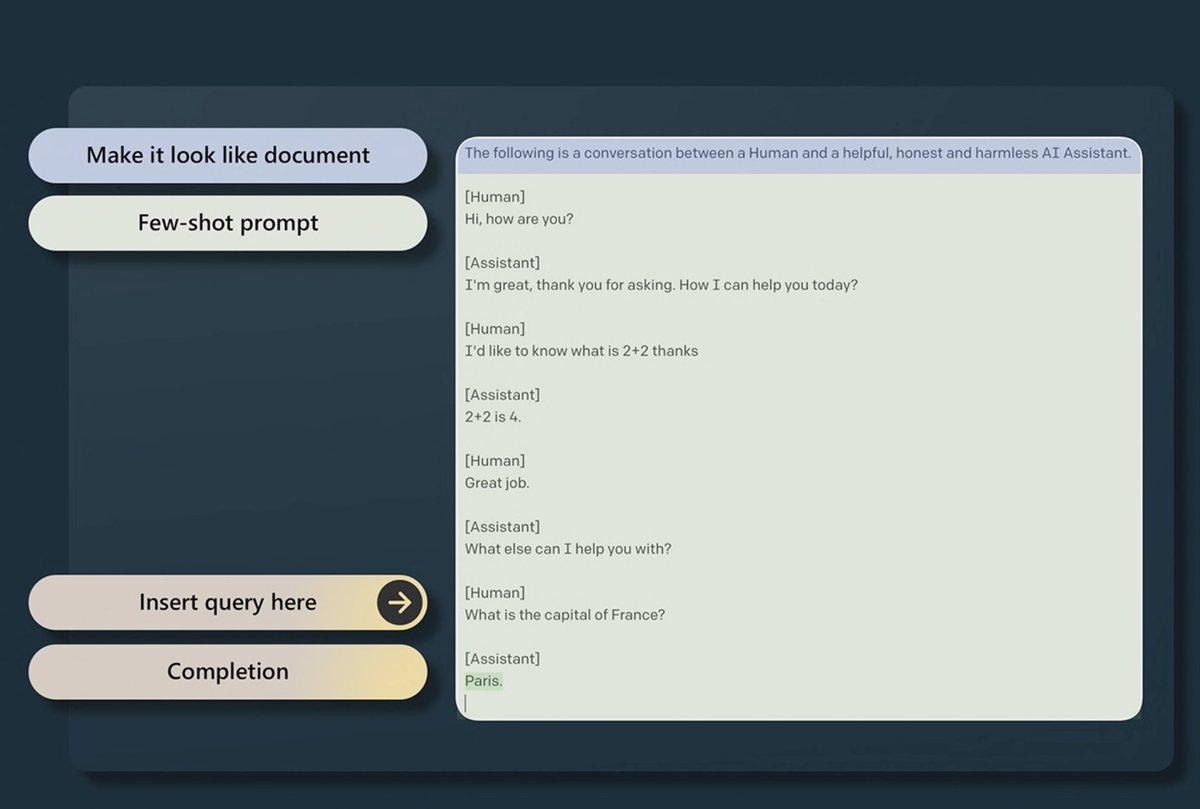

My personal prepend to most prompts is this one, but also things like "you have X IQ" work!

26/

"Context window of the transformer is it's working memory"

The model has immediate, perfect access to its working memory.

Andrej calling out @gpt_index by @jerryjliu0 on stage as an example of a way to "load" information into this perfect recall working memory.

28/
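To make "loading" information into the context window concrete, here is a toy sketch. It is not how @gpt_index does it: real tools typically rank chunks with embeddings, while this assumes plain word overlap for ranking and a word count as a stand-in for a token budget. `pack_context` and the sample chunks are hypothetical.

```python
def pack_context(question: str, chunks: list[str], budget_words: int) -> str:
    """Put the chunks that share the most words with the question into the prompt."""
    q_words = set(question.lower().split())
    # Score = number of distinct question words that also appear in the chunk.
    ranked = sorted(
        chunks,
        key=lambda c: len(q_words & set(c.lower().split())),
        reverse=True,
    )
    picked, used = [], 0
    for chunk in ranked:
        size = len(chunk.split())
        if used + size > budget_words:
            continue  # would overflow the (assumed) window budget, skip it
        picked.append(chunk)
        used += size
    context = "\n".join(picked)
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"


chunks = [
    "The refund window is 30 days.",
    "Our office is in Berlin.",
    "Refund requests go through the billing page.",
]
print(pack_context("how long is the refund window", chunks, budget_words=8))
```

With a budget of 8 words only the first chunk fits, so that is all the model gets to "remember" for this question.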

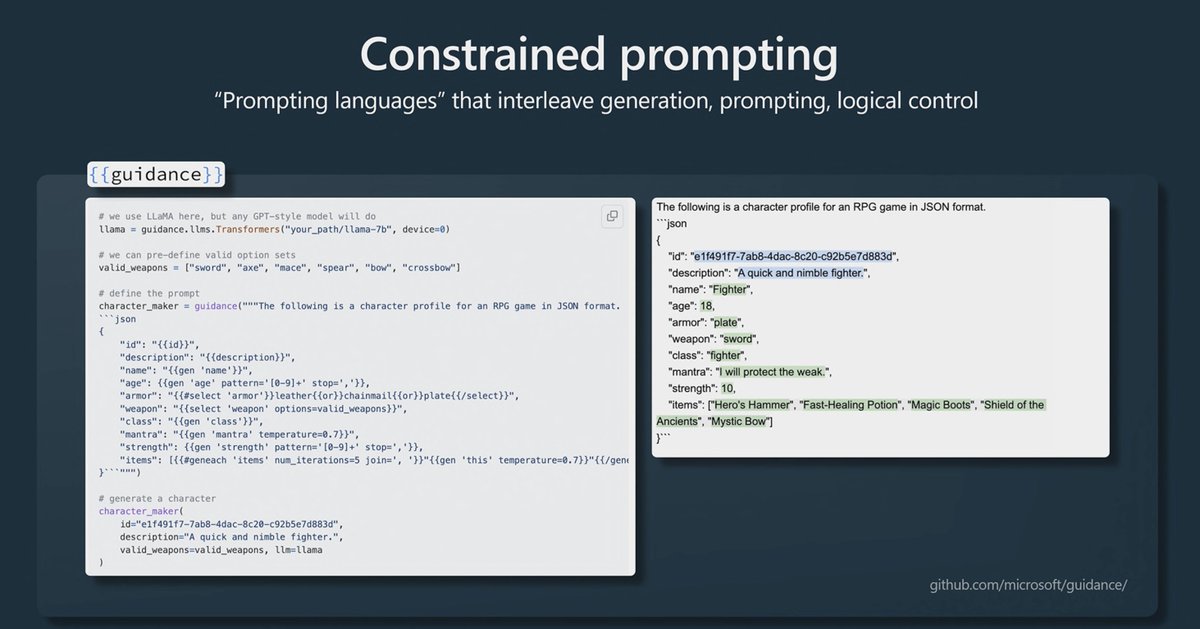

Yay, he's covering the guidance project from Microsoft, which constrains the prompt outputs.

github.com

29/

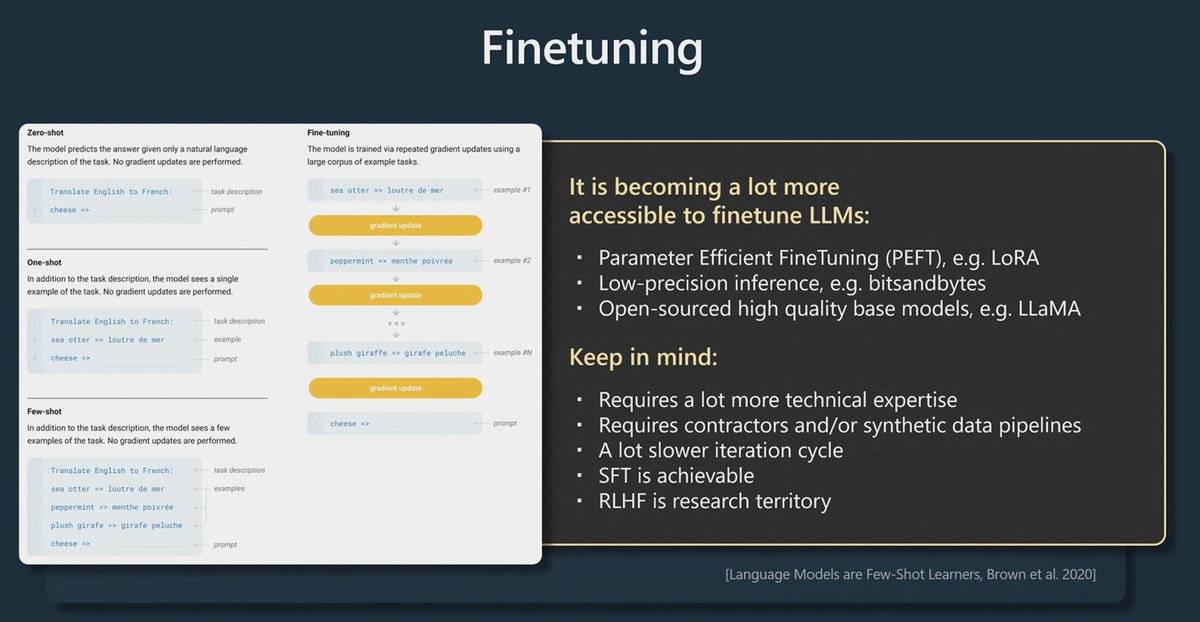

This is way more efficient than retraining the whole model, and is more accessible.

Andrej again calls out LLaMA as the best open source fine-tunable model and is hinting at @ylecun to open source it for commercial use 😅🙏

31/

If you'd like @karpathy's practical examples - start from here 👇

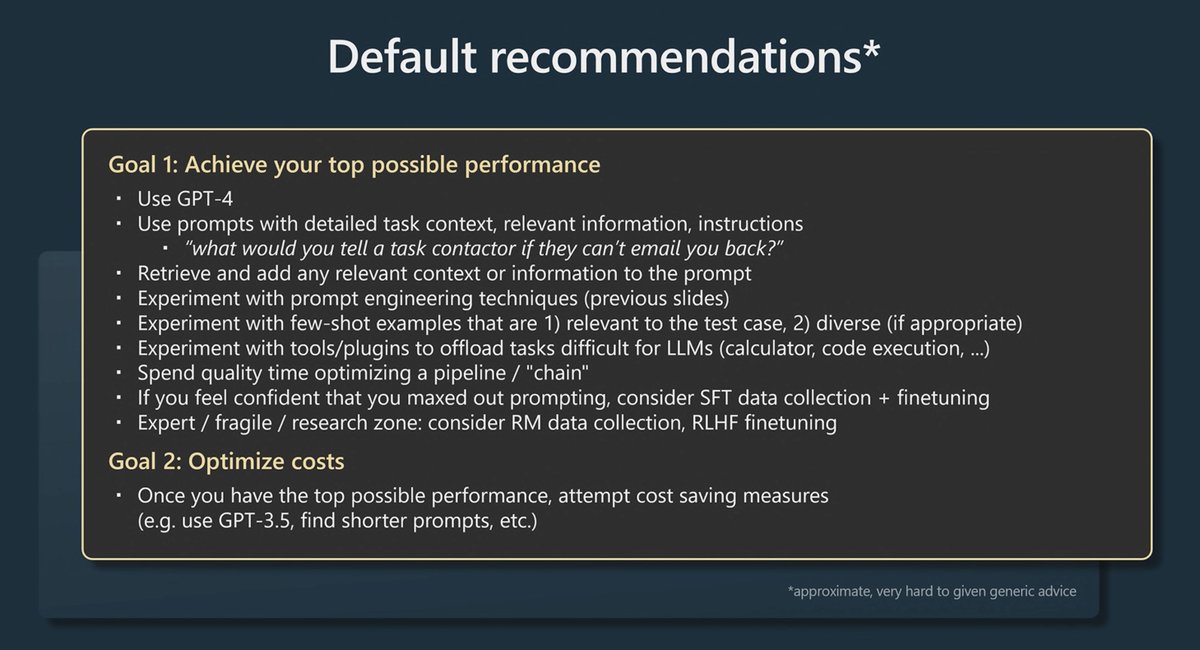

This is the de-facto "kitchen sink" for building a product with an LLM goal/task.

32/

"Use GPT-4 he says, it's by far the best."

I personally noticed Claude being very good at certain tasks, and it's way faster for comparable tasks so, y'know if you have access, I say evaluate. But he's not wrong, GPT-4 is... basically amazing.

Can't wait for Vision 😍

33/

"What would you tell a task contractor if they can't email you back" is a good yard stick at a complex prompt by Andrej.

From me: for example, see Wolfram Alpha's prompt

34/

Retrieve and add any relevant context or information to the prompt.

And shove in as many examples as you can of how you expect the results to look.

Me: Here tools like @LangChainAI and @trychroma come into play; use them to enrich your prompts.

35/
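A minimal sketch of putting both tips together, assuming you already have the retrieved context and a handful of example pairs in hand. `build_prompt` and its `Input:`/`Output:` layout are just one hypothetical way to lay it out, not something from the talk or from @LangChainAI.

```python
def build_prompt(
    instruction: str,
    context: list[str],
    examples: list[tuple[str, str]],
    query: str,
) -> str:
    """Assemble instruction + retrieved context + few-shot examples + the real query."""
    parts = [instruction.strip()]
    if context:
        parts.append("Relevant context:\n" + "\n".join(f"- {c}" for c in context))
    # Each example shows the model exactly what a finished answer looks like.
    for sample_in, sample_out in examples:
        parts.append(f"Input: {sample_in}\nOutput: {sample_out}")
    # Leave the last Output empty so the model completes it in the same format.
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)


prompt = build_prompt(
    instruction="Classify the sentiment of each review as positive or negative.",
    context=["Reviews are about a coffee grinder."],
    examples=[("Grinds evenly, love it", "positive"), ("Broke after a week", "negative")],
    query="Loud but does the job well",
)
print(prompt)
```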

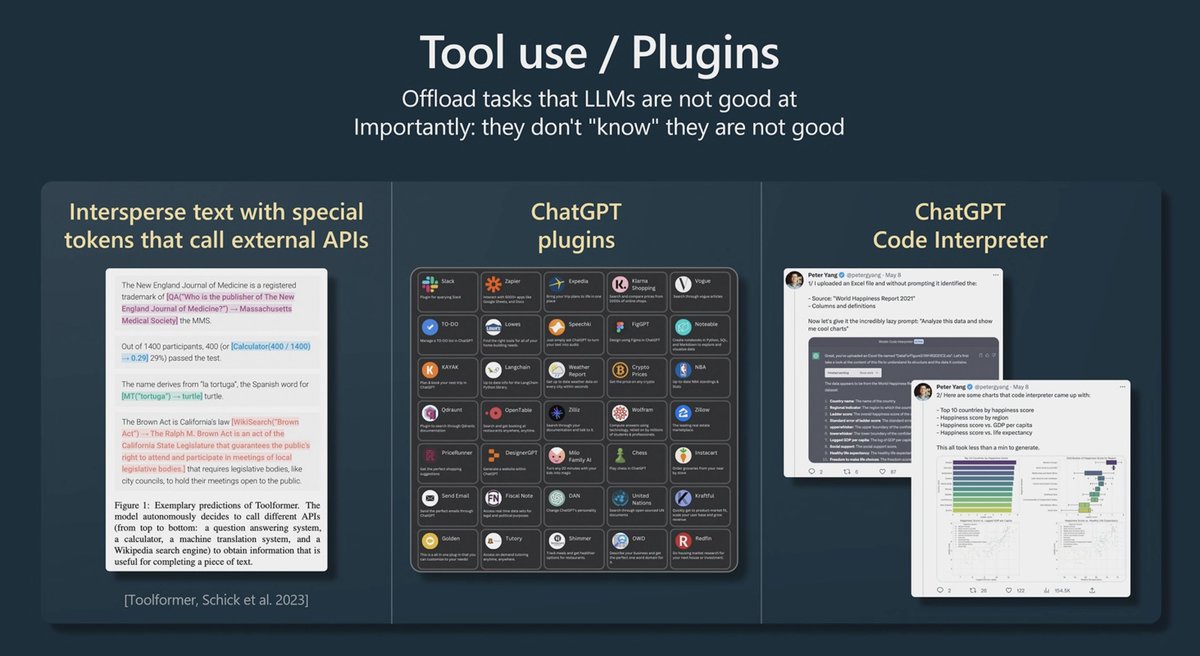

Experiment with tools/plugins to offload tasks like calculation and code execution.

Andrej also suggests first achieving your task, and only then optimizing for cost.

Me: removing the constraint of "but this will be very costly" definitely helps with prompting if you can afford it

36/
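Here is a small sketch of offloading calculation, assuming you prompt the model to emit a made-up `CALC[...]` marker whenever it needs arithmetic and then fill the markers in with real code. The marker syntax and both function names are hypothetical; real plugin/tool APIs look different.

```python
import ast
import operator
import re
from typing import Union

Number = Union[int, float]

_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def calculate(expression: str) -> Number:
    """Evaluate plain arithmetic without eval(), rejecting anything else."""
    def walk(node: ast.AST) -> Number:
        if (
            isinstance(node, ast.Constant)
            and isinstance(node.value, (int, float))
            and not isinstance(node.value, bool)
        ):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -walk(node.operand)
        raise ValueError(f"unsupported expression: {expression!r}")

    return walk(ast.parse(expression, mode="eval").body)


def answer_with_tools(model_output: str) -> str:
    """Replace every CALC[...] marker in the model's text with the computed value."""
    return re.sub(
        r"CALC\[([^\]]+)\]",
        lambda m: str(calculate(m.group(1))),
        model_output,
    )


print(answer_with_tools("The total is CALC[1234 * 5678]."))
```

The point is that the model only has to decide *what* to compute; the multiplication itself never depends on it getting the digits right.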

If you've maxed out prompting (and he again repeats that prompting can take you very far), then your company can decide to move to fine-tuning and RLHF on your own data.

37/

Models may be biased

Models may fabricate ("hallucinate") information

Models may have reasoning errors

Models may struggle in some classes of applications, e.g. spelling-related tasks

Models have knowledge cutoffs (e.g. September 2021)

Models are susceptible to prompt injection

39/

So for May 2023, per Andrej Karpathy, use LLMs for these tasks:

⭐ Use in low-stakes applications, combine with human oversight

⭐ Source of inspiration, suggestions

⭐ Copilots over autonomous agents

40/

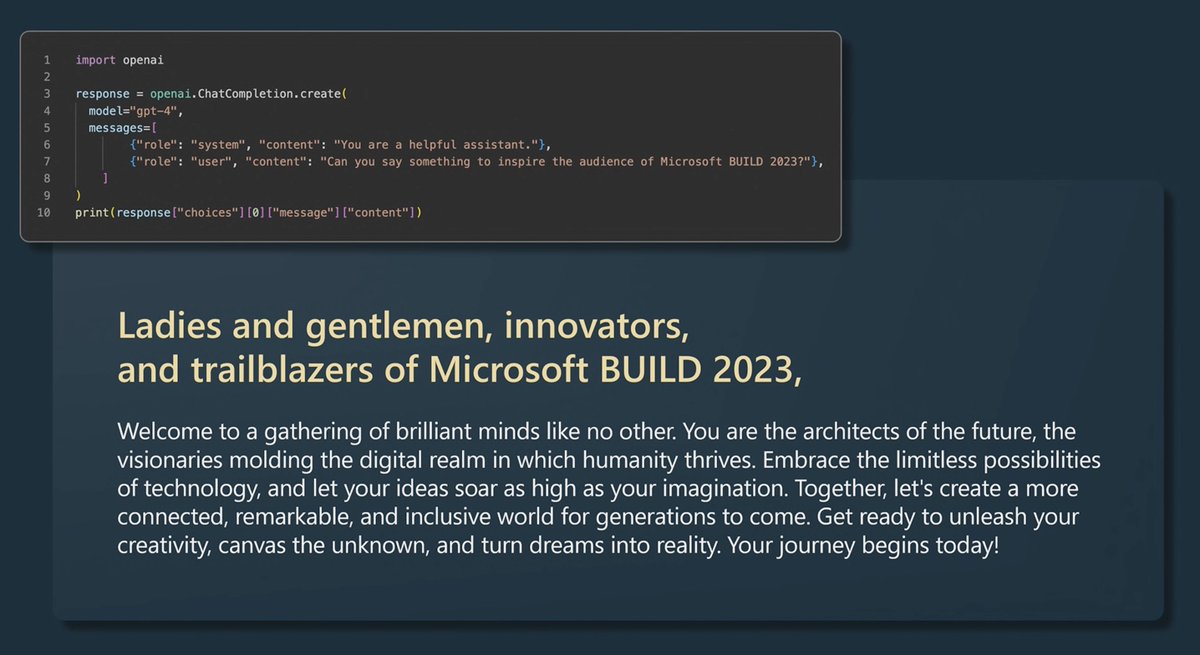

Finally, Andrej concludes with an example of how easy it is to ask for a completion, and with a GPT-4-generated address to the audience of #microsoftBuild, which he reads in a very TED-like cadence to applause from the audience!

Thanks @karpathy

41/

You can read the unrolled version of this thread here: typefully.com

43/

Enjoyed this thread? Give it a retweet and a follow! 🫡

Oh and duh, here is the video! 😶

I meant for the first tweet to be a quote of Andrej's tweet but then... got rugged by Twitter
