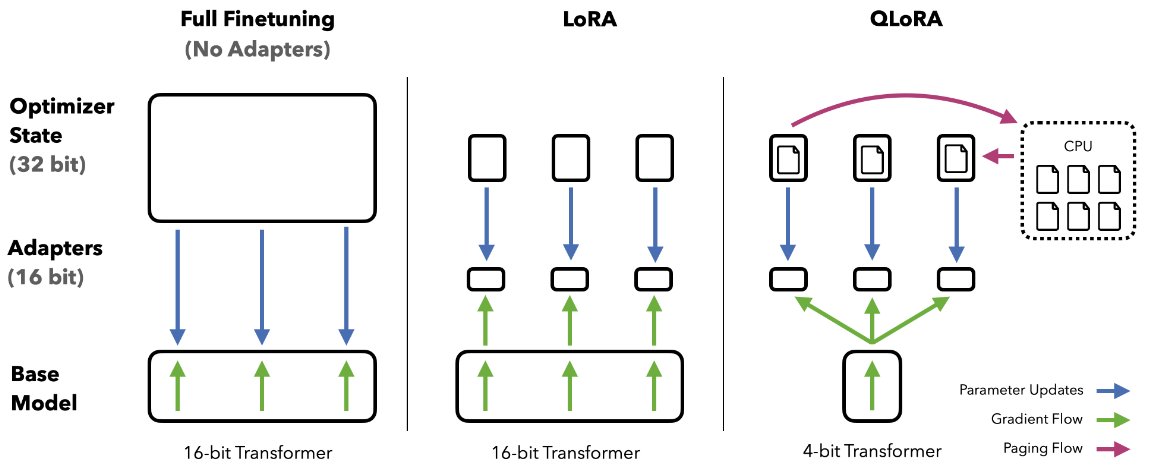

4-bit QLoRA is here to level the playing field for LLM exploration. You can now fine-tune a state-of-the-art 65B chatbot on one GPU in 24h.
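Why 4 bits matter for the single-GPU claim: here is a rough arithmetic sketch (our own back-of-the-envelope, not from the paper) of how much storage the weights of a 65B-parameter model need at different precisions. It assumes exactly 65e9 parameters and counts weights only. The helper name `weight_gigabytes` is hypothetical.

```python
# Back-of-the-envelope storage for the *weights* of a 65B-parameter model.
# Assumptions: exactly 65e9 parameters; ignores quantization constants,
# LoRA adapter weights, activations, gradients and optimizer state.

NUM_PARAMS = 65_000_000_000  # "65B" taken literally (assumption)


def weight_gigabytes(num_params: int, bits_per_param: float) -> float:
    """Return weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


for label, bits in [("fp32", 32), ("fp16/bf16", 16), ("4-bit", 4)]:
    print(f"{label:>10}: {weight_gigabytes(NUM_PARAMS, bits):6.1f} GB")
```

Going from 16-bit to 4-bit cuts weight storage by 4x (130 GB down to 32.5 GB here), which is what brings a 65B model within reach of a single GPU. Actual finetuning memory is higher once adapters, activations and optimizer state are added.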

Paper: arxiv.org

Code and Demo: github.com

arxiv.org/abs/2305.14314

QLoRA: Efficient Finetuning of Quantized LLMs

We present QLoRA, an efficient finetuning approach that reduces memory usage enough to finetune a 65...

github.com/artidoro/qlora

The paper (arxiv.org) contains many insights and considerations about instruction tuning and chatbot evaluation, and points to areas for future work.

In particular:

- instruction tuning datasets are not necessarily helpful for chatbot performance

- quality of data rather than quantity is important for chatbots

- multitask QA benchmarks like MMLU are not always correlated with chatbot performance

- both human and automated evaluations are challenging when comparing strong systems

- using large eval datasets with many prompts, like the OpenAssistant (OA) benchmark, is important for evaluation

- there are many possible improvements to our setup, including RLHF explorations

Guanaco, our QLoRA-finetuned chatbot, is *far from perfect*. But because it is remarkably easy to train with QLoRA while still achieving strong chatbot performance, it makes an ideal starting point for future research.

Thank you to @Tim_Dettmers, @universeinanegg, @LukeZettlemoyer, and the many people who made this project possible! In particular, @younesbelkada and the @huggingface team: it's been amazing working with you.