Gradients are really important in Machine Learning.

We use gradient information in deep learning and many other algorithms to train models and optimize them.

To understand gradients, we first need to understand derivatives.

I know it sounds horrible, but stay with me!

2/10

What is a gradient?

A gradient is the derivative of a function with more than one input variable: a vector that holds one partial derivative per input.

So a gradient is basically the same idea as a derivative, just in more dimensions.

In ML, a model is typically defined by a whole set of parameters, so we work with gradients rather than single derivatives.

6/10
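
To make "one partial derivative per input" concrete, here is a minimal sketch that approximates a gradient numerically. The function `f`, the helper `numerical_gradient`, and the step size `h` are made up for illustration; real ML libraries compute gradients with automatic differentiation, not like this.

```python
def numerical_gradient(f, point, h=1e-6):
    """Approximate the gradient of f at `point` using central differences."""
    grad = []
    for i in range(len(point)):
        forward = list(point)
        backward = list(point)
        forward[i] += h   # nudge only the i-th input up
        backward[i] -= h  # and down
        grad.append((f(forward) - f(backward)) / (2 * h))
    return grad


def f(p):
    # Hypothetical example function with two inputs: f(x, y) = x^2 + 3y
    x, y = p
    return x ** 2 + 3 * y


# The exact gradient is (2x, 3), so at (2, 1) we expect roughly [4.0, 3.0]
grad = numerical_gradient(f, [2.0, 1.0])
print(grad)
```

Each entry of `grad` answers the same question a derivative does, "how fast does the output change if I nudge this one input?", once per input.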

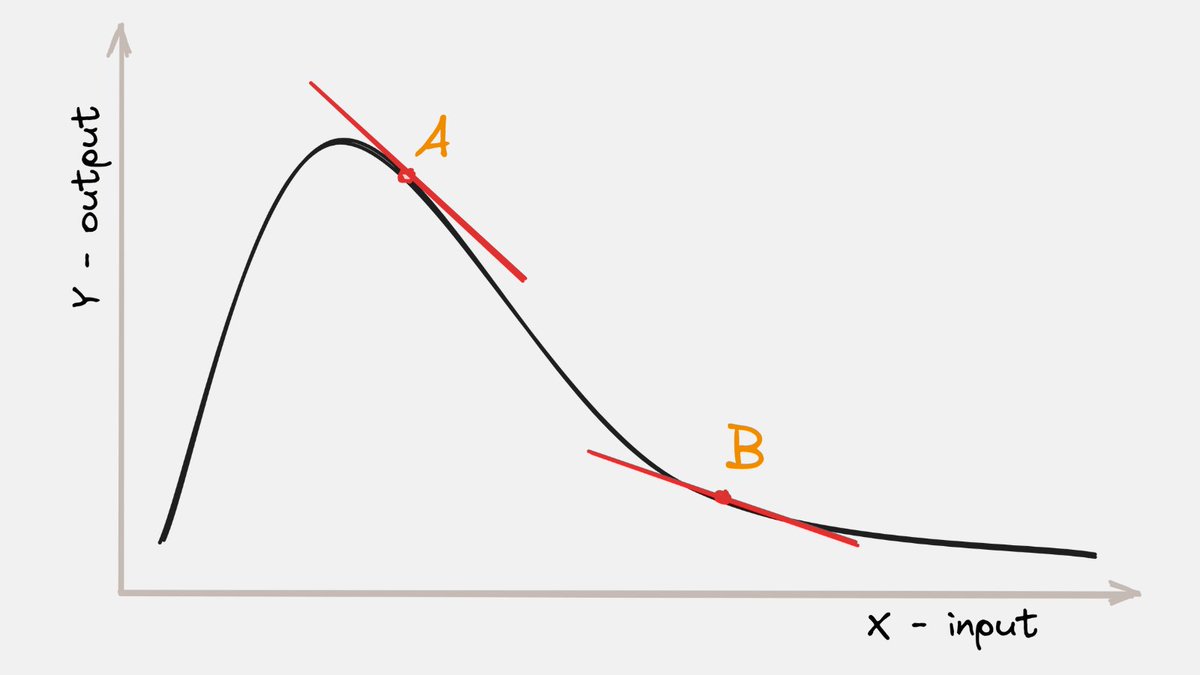

Why is knowing the derivative/gradient useful?

Because we can optimize.

The derivative tells us how to change the input to increase or decrease the output, so we can move closer to a minimum or maximum of the function.

Go back to the A-B example.

7/10
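
Here is a rough sketch of that idea in code: plain gradient descent on a toy one-input function. The function, the starting point, the learning rate, and the number of steps are all assumptions picked for illustration, not something from the thread.

```python
def f(x):
    # Toy function with its minimum at x = 3
    return (x - 3) ** 2


def df(x):
    # Its derivative: positive when x is right of the minimum, negative when left
    return 2 * (x - 3)


x = 0.0              # assumed starting guess
learning_rate = 0.1  # assumed step size

for _ in range(100):
    # Step against the derivative to make the output smaller
    x -= learning_rate * df(x)

print(x)  # ends up very close to 3
```

Flip the sign of the update (`x += ...`) and the same loop climbs toward larger outputs instead. With more inputs, `df` becomes a gradient and every input gets its own update.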

That's it for today.

I hope you've found this thread helpful.

Like/Retweet the first tweet below for support and follow @levikul09 for more Data Science threads.

Thanks 😉

10/10
