I just added @nomic_ai's new GPT4All Embeddings to @LangChainAI. Here's a new doc on running local / private retrieval QA (e.g., on your laptop) w/ GPT4All embeddings + @trychroma + a GPT4All LLM. Easy setup, great work from @nomic_ai ...

python.langchain.com

Impressively easy UX from @nomic_ai. Embeddings "just worked" (auto-downloads locally) ...

python.langchain.com

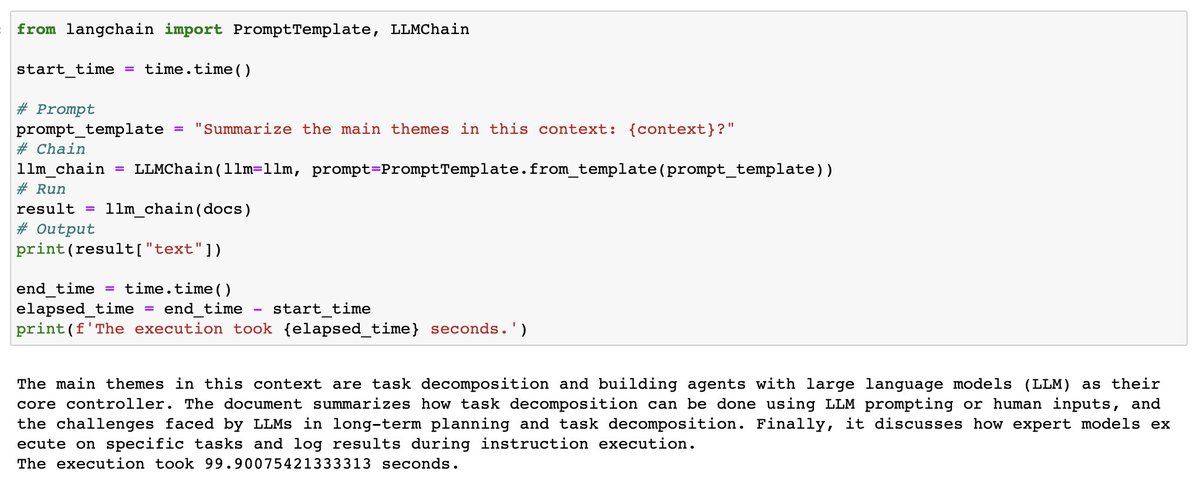

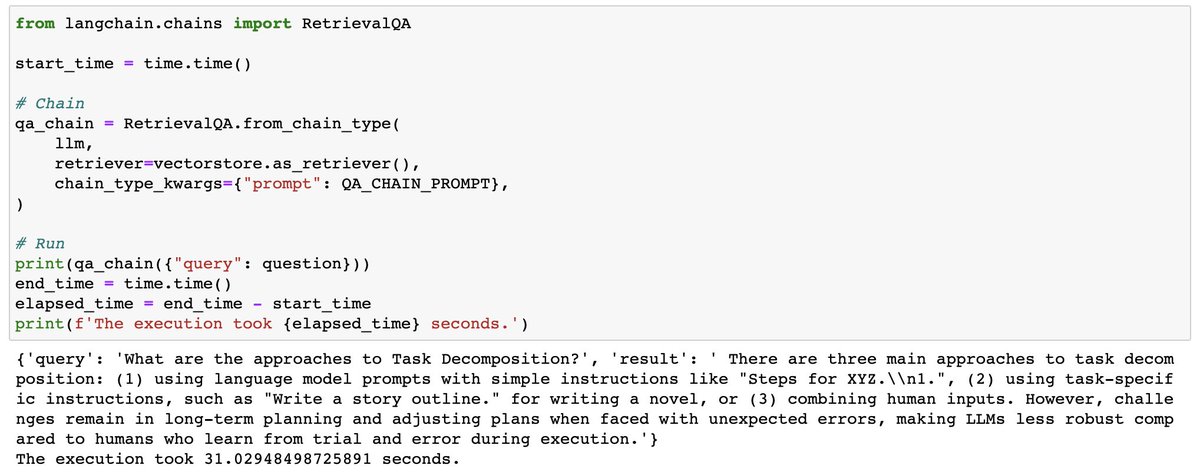

... the example in the docs shows retrieval QA on @lilianweng's great blog post on agents. Load the blog text from the website, split it, and create a local @trychroma vector DB w/ local GPT4All embeddings. Ask any question, get back relevant splits ...

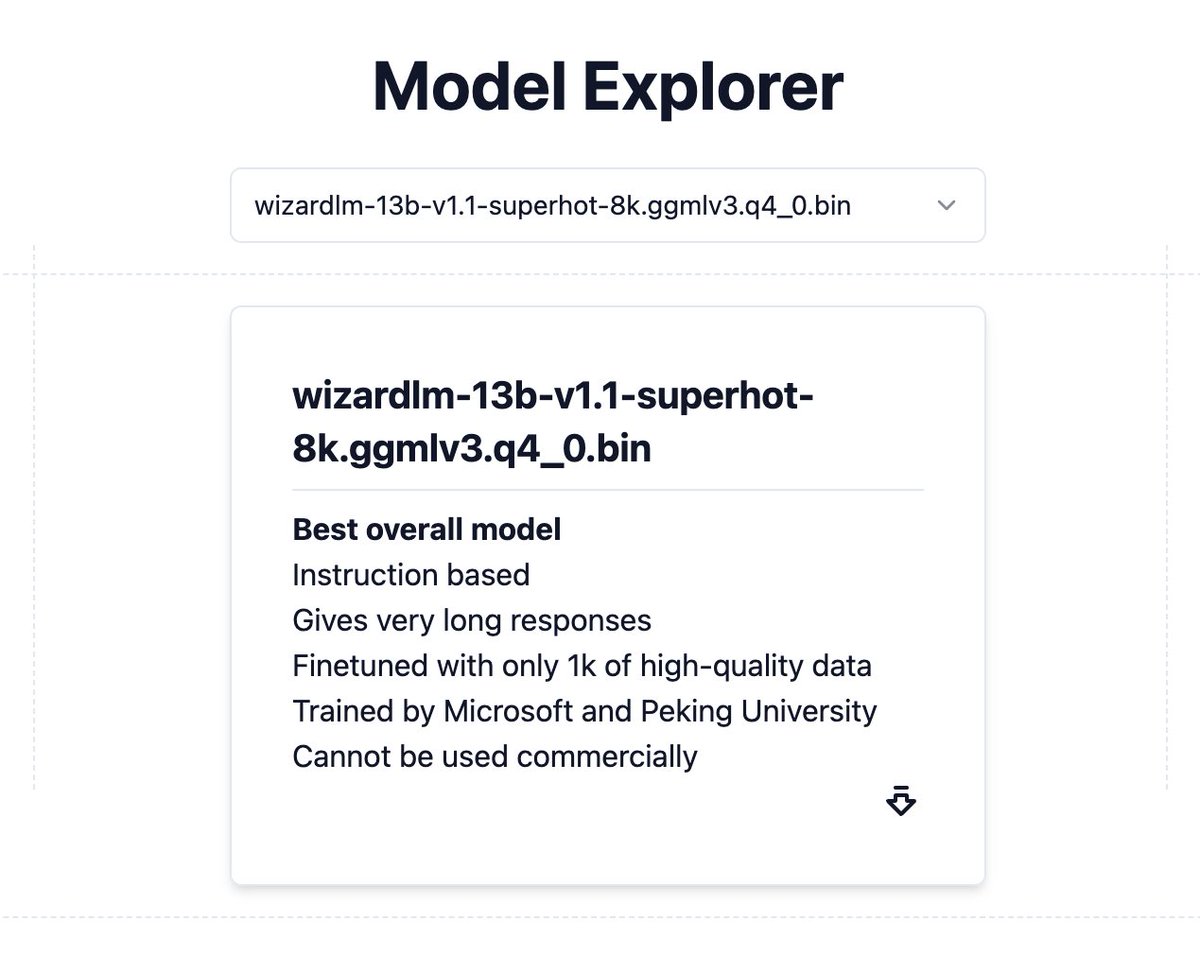
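
A rough sketch of the load / split / embed / query steps described above, for anyone who wants to try it outside the notebook. Assumptions, none of them from the thread itself: the `langchain_community` / `langchain_text_splitters` import paths (newer package layout; older releases exposed the same classes under `langchain.`), the blog URL, the chunk size, and the helper names `build_local_index` and `relevant_splits`, which are made up for illustration.

```python
# Sketch only. Assumes langchain-community, langchain-text-splitters,
# chromadb, gpt4all and beautifulsoup4 are installed.

# Assumed URL of the agents blog post; the thread does not spell it out.
BLOG_URL = "https://lilianweng.github.io/posts/2023-06-23-agent/"


def build_local_index(url=BLOG_URL, chunk_size=500, chunk_overlap=0, embedding=None):
    """Load a web page, split it, and index the splits in a local Chroma DB."""
    # Heavy dependencies are imported lazily, so merely defining this
    # function does not require them.
    from langchain_community.document_loaders import WebBaseLoader
    from langchain_community.vectorstores import Chroma
    from langchain_text_splitters import RecursiveCharacterTextSplitter

    if embedding is None:
        from langchain_community.embeddings import GPT4AllEmbeddings

        # Downloads the embedding model locally on first use. Some releases
        # may expect an explicit model_name argument here.
        embedding = GPT4AllEmbeddings()

    docs = WebBaseLoader(url).load()
    splitter = RecursiveCharacterTextSplitter(
        chunk_size=chunk_size, chunk_overlap=chunk_overlap
    )
    splits = splitter.split_documents(docs)
    return Chroma.from_documents(documents=splits, embedding=embedding)


def relevant_splits(vectorstore, question, k=4):
    """Return the k splits most similar to the question."""
    return vectorstore.similarity_search(question, k=k)
```

Usage would look something like `db = build_local_index()` followed by `relevant_splits(db, "What are the approaches to task decomposition?")`; the question is just an example. Everything runs locally once the page and the embedding model have been fetched.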

... w/ the local DB done, download an LLM from @nomic_ai. Their Model Explorer is very helpful for browsing different models. Download one, then supply the local model path in the notebook to create a local LLM ...

gpt4all.io
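
A minimal sketch of that last step: point LangChain's `GPT4All` wrapper at the downloaded model file, then answer a question from the retrieved splits. This is a hand-rolled "stuff the splits into one prompt" version, not necessarily the exact chain used in the doc. The model path, the prompt wording, and the helper names (`load_local_llm`, `build_prompt`, `answer_question`) are hypothetical, and the `langchain_community` import path is an assumption about the installed version.

```python
# Sketch only. Assumes langchain-community and gpt4all are installed and
# that a model file has already been downloaded.


def load_local_llm(model_path):
    """Create a local LLM from a downloaded GPT4All model file."""
    # Lazy import so the rest of the module works without the dependency.
    from langchain_community.llms import GPT4All

    # model_path is whatever file you downloaded,
    # e.g. "/path/to/your/model-file" (hypothetical placeholder).
    return GPT4All(model=model_path)


def build_prompt(context, question):
    """Combine retrieved text and the question into one prompt (made-up wording)."""
    return (
        "Use the following context to answer the question. "
        "If the answer is not in the context, say you don't know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


def answer_question(llm, vectorstore, question, k=4):
    """Retrieve the k most similar splits and ask the local LLM about them."""
    docs = vectorstore.similarity_search(question, k=k)
    context = "\n\n".join(doc.page_content for doc in docs)
    return llm.invoke(build_prompt(context, question))
```

With a vector store from the earlier step, `answer_question(load_local_llm(path), db, question)` keeps both retrieval and generation on the laptop. Bigger models give better answers but run slower on CPU, so the choice of model is a trade-off.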
