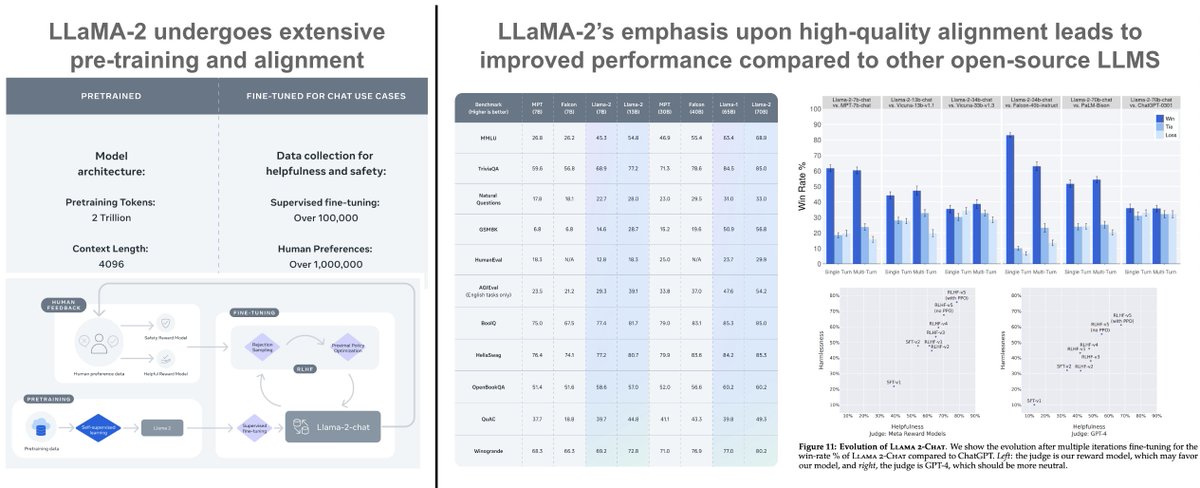

I'm sure a lot of people have already read the LLaMA-2 publication, but here it is in case anyone needs the link. It goes without saying, but it's an awesome read that's full of really interesting and useful details about properly aligning LLMs.

arxiv.org