✅ Principal Component Analysis (PCA) is an important technique in ML - Explained in simple terms.

A quick thread 👇🏻🧵

#MachineLearning #Coding #100DaysofCode #deeplearning #DataScience

PC : Research Gate

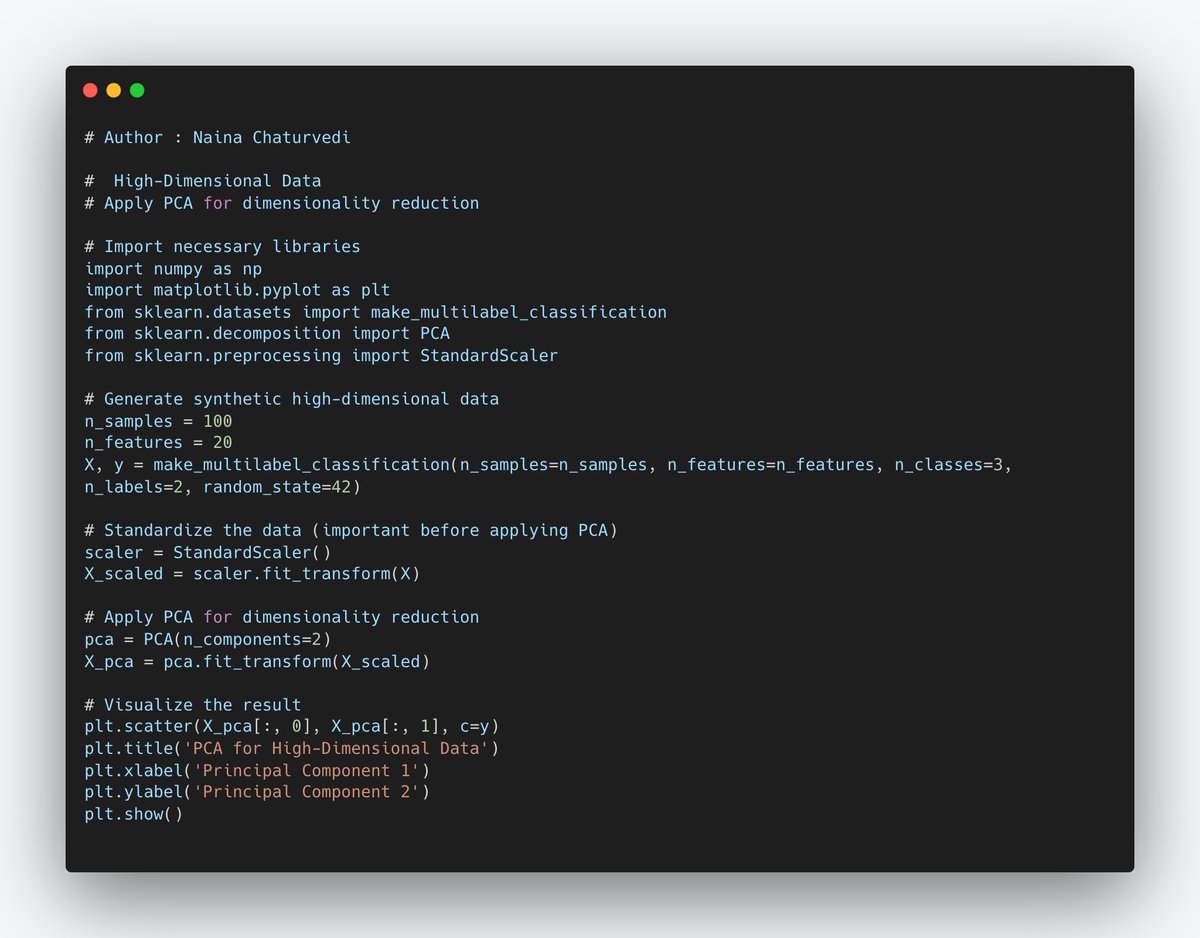

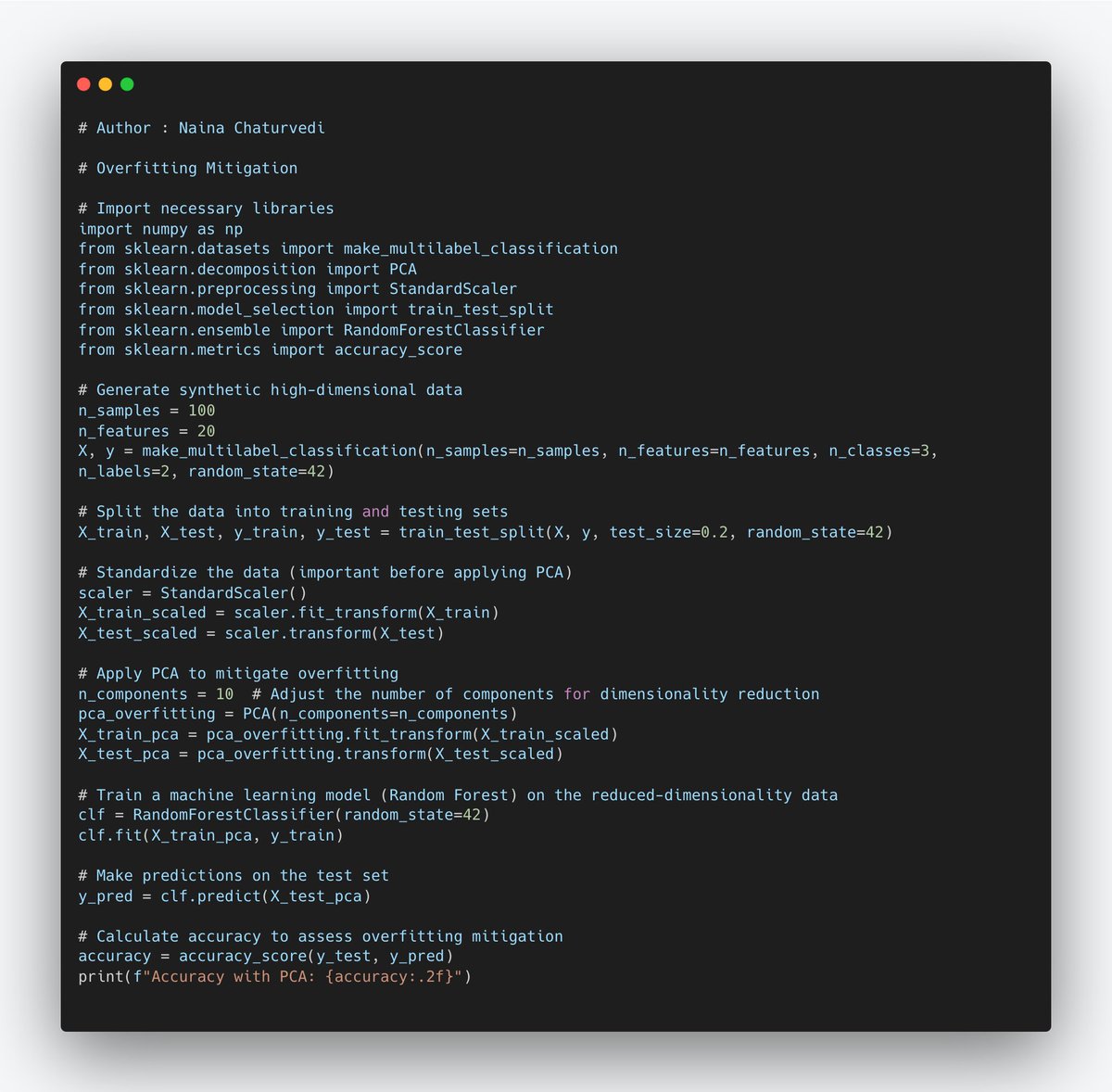

9/ PCA is a statistical method used for reducing the dimensionality of data while retaining its essential information. It achieves this by identifying the most significant patterns (principal components) in the data and representing it in a lower-dimensional space.

11/ By selecting a subset of these principal components, you can represent the data with reduced dimensions.
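Here is a minimal sketch of that idea, assuming NumPy and random toy data. The helper name `pca_project` and the choice of `k = 2` are illustrative and not from the thread. It centers the data, finds the principal directions with an SVD, and keeps only the first `k` of them.

```python
import numpy as np

def pca_project(X, k):
    """Project the rows of X onto the top-k principal components (hypothetical helper)."""
    # PCA works on mean-centered data
    X_centered = X - X.mean(axis=0)
    # Rows of Vt are the principal directions, ordered by decreasing singular value
    _, _, Vt = np.linalg.svd(X_centered, full_matrices=False)
    components = Vt[:k]
    # Coordinates of each sample in the reduced k-dimensional space
    return X_centered @ components.T, components

# Toy data (assumption): 100 samples with 5 features
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 5))

Z, components = pca_project(X, k=2)
print(Z.shape)  # (100, 2)
```

Each row of `Z` is the original sample described by 2 numbers instead of 5.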

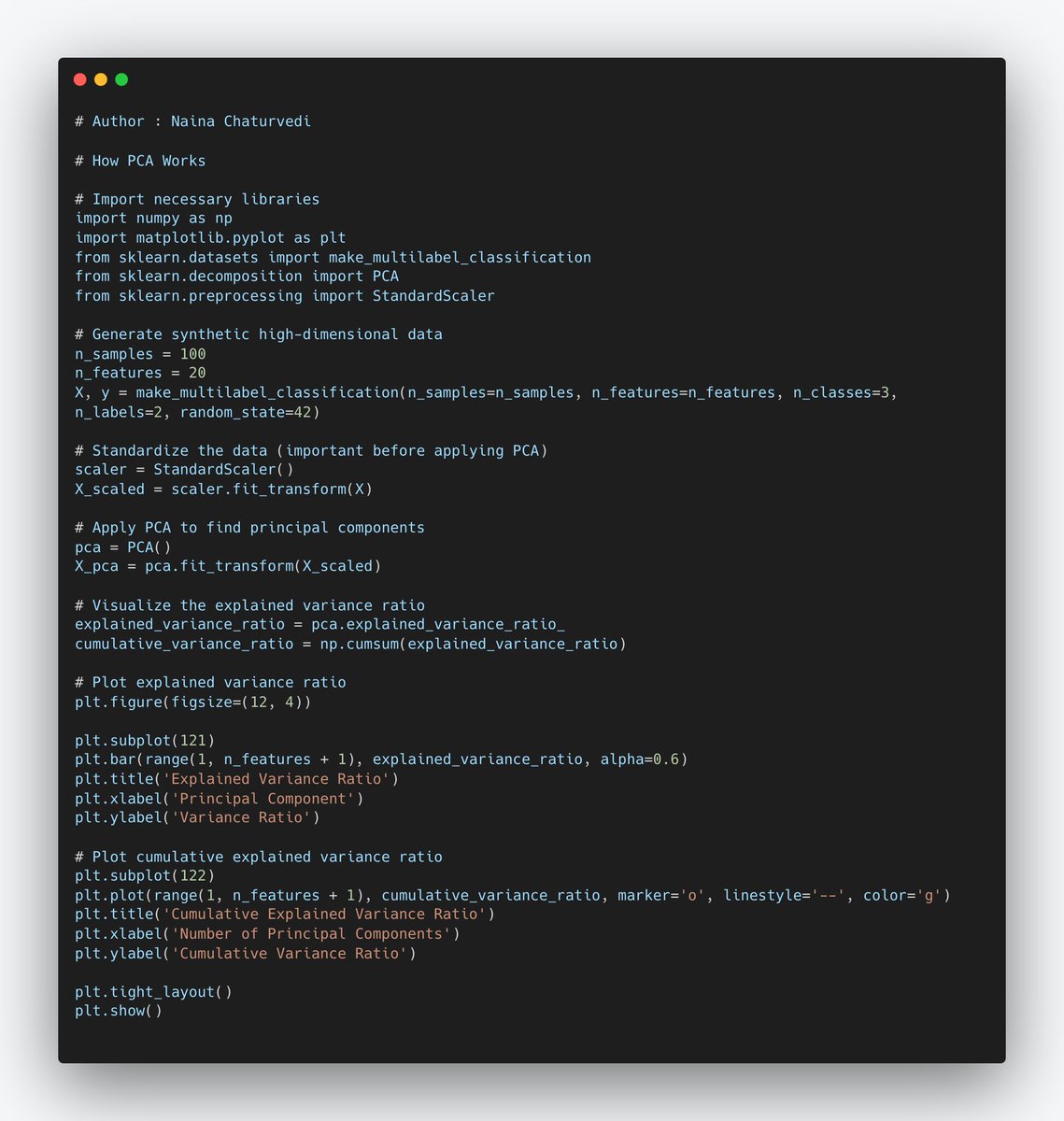

14/ The eigenvector of the covariance matrix with the highest eigenvalue is the first principal component, the eigenvector with the second-highest eigenvalue is the second principal component, and so on. Each eigenvalue indicates how much of the data's variance its principal component explains.
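A rough sketch of this ordering, assuming NumPy and made-up toy data where each feature has a different spread. It eigendecomposes the covariance matrix, sorts the eigenpairs from largest to smallest eigenvalue, and turns the eigenvalues into explained-variance fractions.

```python
import numpy as np

# Toy data (assumption): 200 samples, 3 features with very different spreads
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3)) * np.array([3.0, 1.0, 0.2])

# Covariance matrix of the centered data (features are columns)
X_centered = X - X.mean(axis=0)
cov = np.cov(X_centered, rowvar=False)

# eigh is meant for symmetric matrices and returns eigenvalues in ascending order
eigenvalues, eigenvectors = np.linalg.eigh(cov)

# Reorder so the largest eigenvalue (first principal component) comes first
order = np.argsort(eigenvalues)[::-1]
eigenvalues = eigenvalues[order]
eigenvectors = eigenvectors[:, order]

# Fraction of total variance explained by each principal component
explained_ratio = eigenvalues / eigenvalues.sum()
first_pc = eigenvectors[:, 0]

print(explained_ratio)
```

The columns of `eigenvectors` are the principal components, and `explained_ratio` sums to 1.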

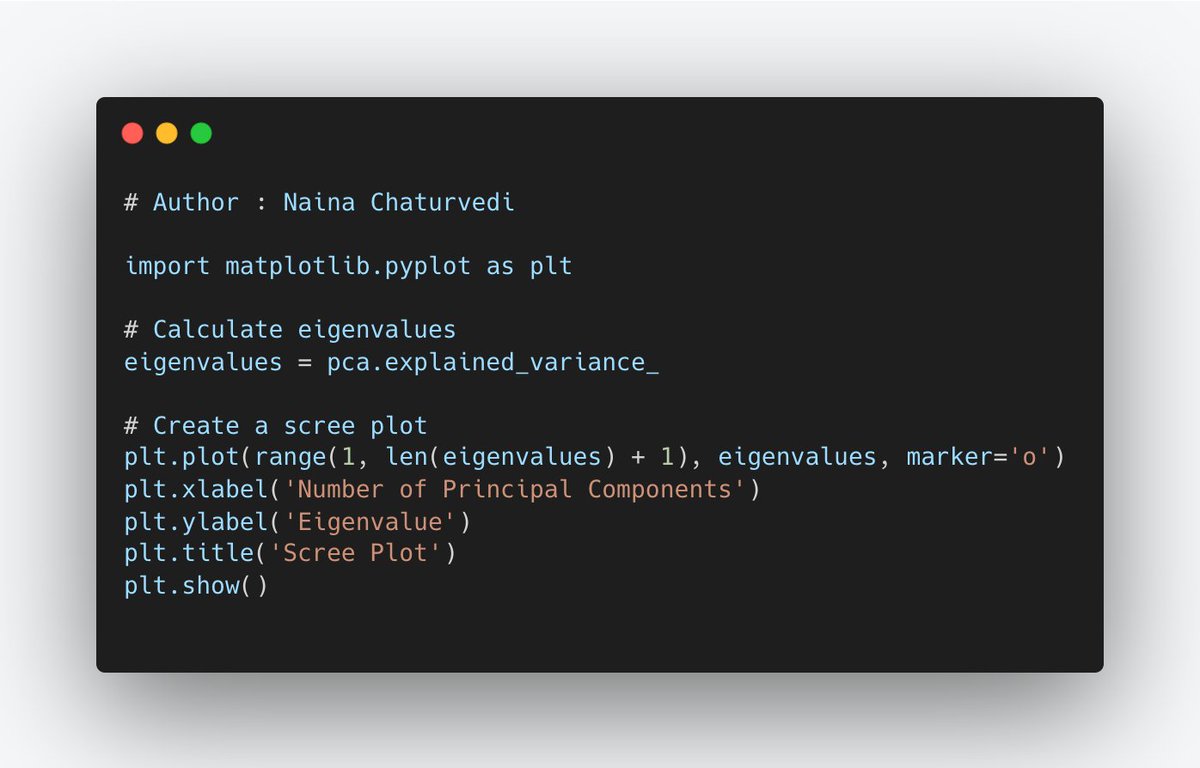

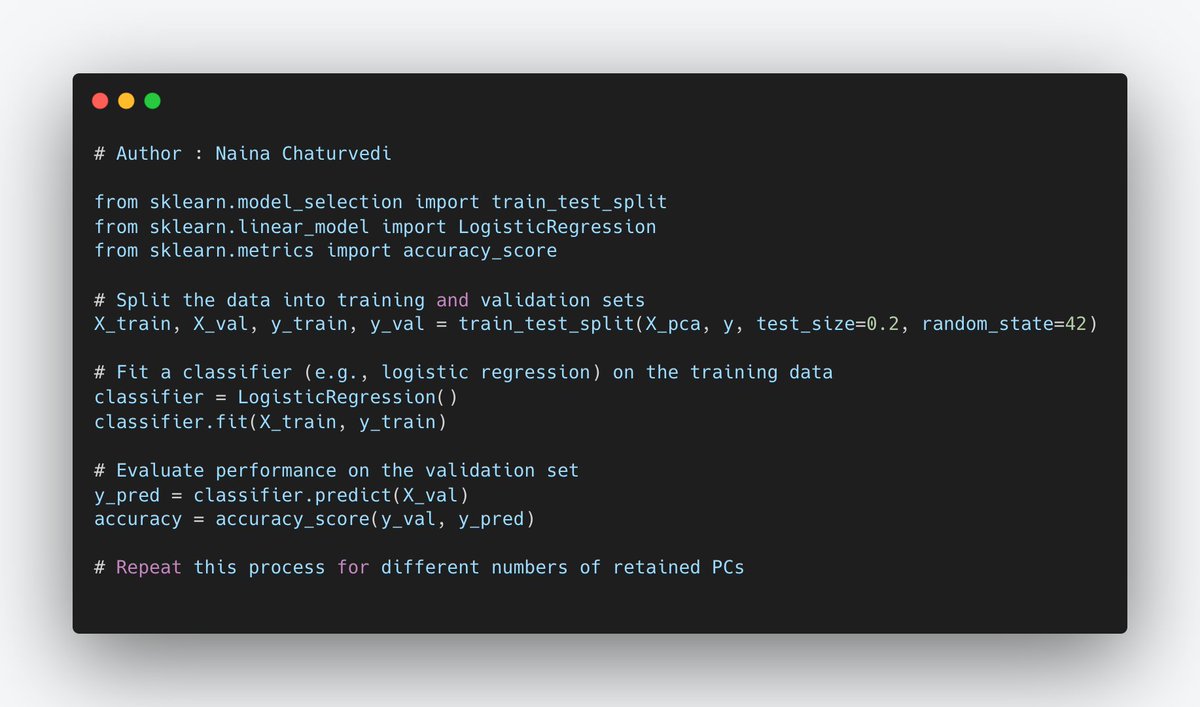

20/ Choosing the Right Number of Principal Components:

Choosing the optimal number of principal components (PCs) to retain in PCA is a crucial decision, as it balances dimensionality reduction with information retention.
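One common heuristic is to keep the smallest number of PCs whose cumulative explained variance passes a threshold. The sketch below assumes NumPy; the function name `choose_n_components` and the default threshold of 0.95 are illustrative assumptions, not a rule from the thread.

```python
import numpy as np

def choose_n_components(eigenvalues, threshold=0.95):
    """Smallest number of PCs whose cumulative explained variance reaches threshold (hypothetical helper)."""
    # Sort eigenvalues from largest to smallest and convert to variance fractions
    values = np.sort(np.asarray(eigenvalues, dtype=float))[::-1]
    ratios = values / values.sum()
    cumulative = np.cumsum(ratios)
    # Index of the first cumulative value >= threshold, converted to a count
    k = int(np.searchsorted(cumulative, threshold)) + 1
    # Guard against floating-point round-off when threshold is close to 1.0
    return min(k, len(values))

# Made-up eigenvalues: variance fractions are 0.4, 0.3, 0.2, 0.1
example = [4.0, 3.0, 2.0, 1.0]
print(choose_n_components(example, threshold=0.8))  # 3
```

A lower threshold keeps fewer components and compresses more, while a higher one retains more of the original variance.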

24/ Subscribe and Read more - naina0405.substack.com

Read - How to Efficiently Build Scalable Machine Learning Pipelines open.substack.com

Github -

github.com
