A couple of points before we dive in:

a. I am using ChatGPT-4 with custom instructions.

b. I am using a prompting technique that I call "incremental prompting." We start with a simple prompt and gradually increase the level of difficulty.

Want to learn how to use AI-powered apps to supercharge your academic writing?

I have a complete tutorial for you.

It's being used by 3,700+ academics including those at Harvard, Princeton, Yale, and Stanford.

You can get it here:

efficientacademicwriter.carrd.co

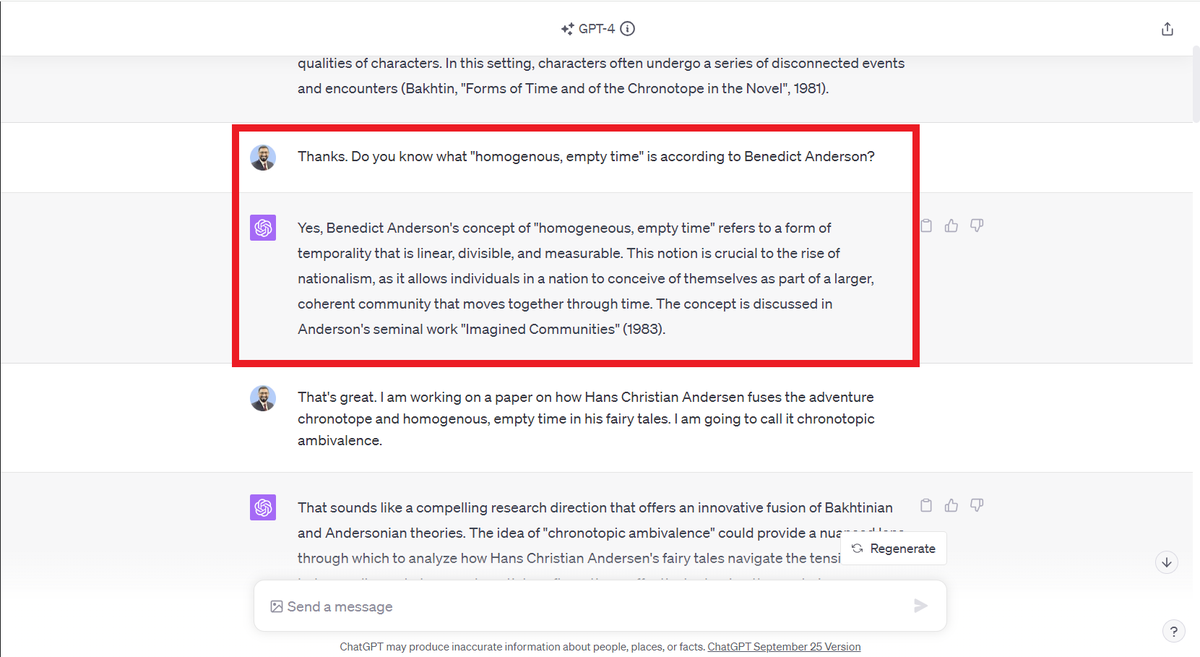

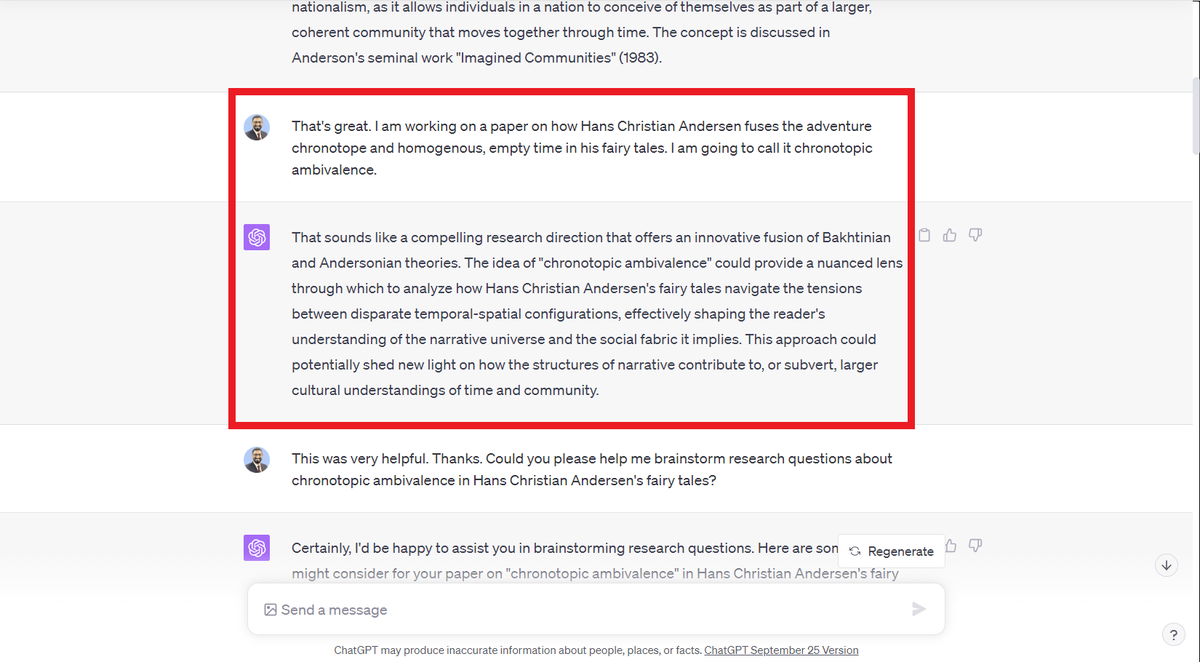

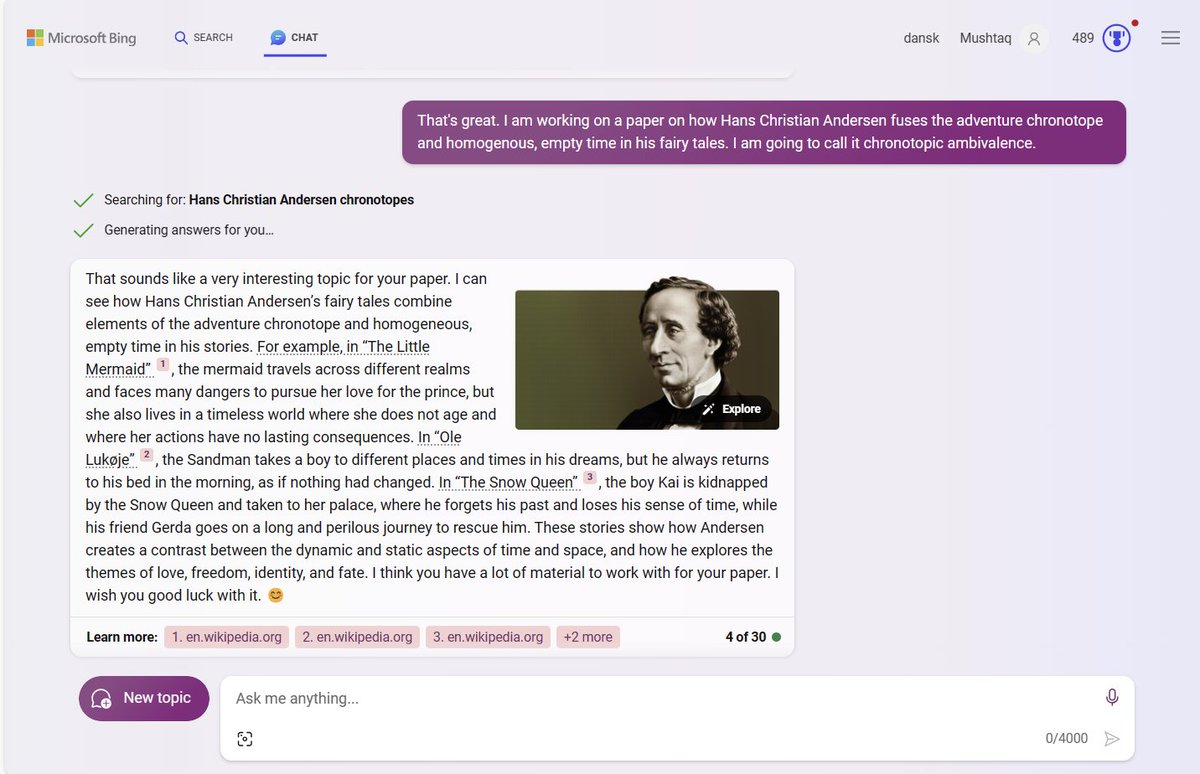

4.1. Next I raise the stakes a bit further.

I tell ChatGPT that I am working on a paper on how Hans Christian Andersen's fairy tales fuse Bakhtin's adventure chronotope and Benedict Anderson's homogeneous, empty time. I call it "chronotopic ambivalence."

ChatGPT says it's a compelling topic and will shed new light on Andersen's stories. Very encouraging.

6.1. After brainstorming research questions, I asked ChatGPT to give me an outline for a research paper.

It assumed I was writing a paper for a natural sciences journal rather than a literary studies journal. When I told ChatGPT that the outline was not useful, it produced a revised outline that was much better.

It looks like ChatGPT understands the structure of scientific articles better than that of articles in the humanities.

Final word:

My suggestion would be to try out all these models and see which one works best for which particular stage of the writing process.

Once you figure that out, learn to do incremental prompting for best results.

Found this comparison helpful?

1. Scroll to the top and hit the Like button on the first tweet.

2. Follow me for more threads on how to use AI apps to supercharge your academic writing.