1/30 The @solanaconf Breakpoint megathread, read along for a recap and news on @solana 1) tech, 2) economics, 3) finance, 4) payments, 5) gaming, and 6) physical infrastructure networks. Also if you recently started looking at $SOL and want to know what's going on👇

2/30 @aeyakovenko opens by proposing a Solana alignment thesis, and it's very simple: does a new software release reduce latency and increase throughput? That's it. Not much space for alignment dissident police here to get creative.

Solana intends to build a giant global state machine synchronized at the speed of light. Solana is decentralized to allow anyone to have access to the global state and build composable applications that make use of it. The protocol is designed to scale with hardware as efficiently as possible.

3/30 @amiravalliani puts emphasis on network health metrics such as the high number of validators (~2000) and RPC nodes (~900), high Nakamoto coefficient, good node hosting and geographical distribution, low carbon footprint.
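For readers new to the metric, here is a minimal sketch of how a Nakamoto coefficient can be computed. It assumes the common proof-of-stake definition (the smallest number of validators whose combined stake exceeds one third of the total); the function name and the stake numbers are made up for illustration and are not real Solana data.

```python
def nakamoto_coefficient(stakes, threshold=1 / 3):
    """Smallest number of validators whose combined stake exceeds
    `threshold` of the total stake (largest validators counted first)."""
    total = sum(stakes)
    running = 0
    for count, stake in enumerate(sorted(stakes, reverse=True), start=1):
        running += stake
        if running > threshold * total:
            return count
    return len(stakes)


# Hypothetical stake distribution (illustrative numbers only).
example_stakes = [30, 20, 15, 10, 10, 5, 5, 5]
print(nakamoto_coefficient(example_stakes))
```

The higher the result, the more independent operators would have to collude (or fail together) to halt the network.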

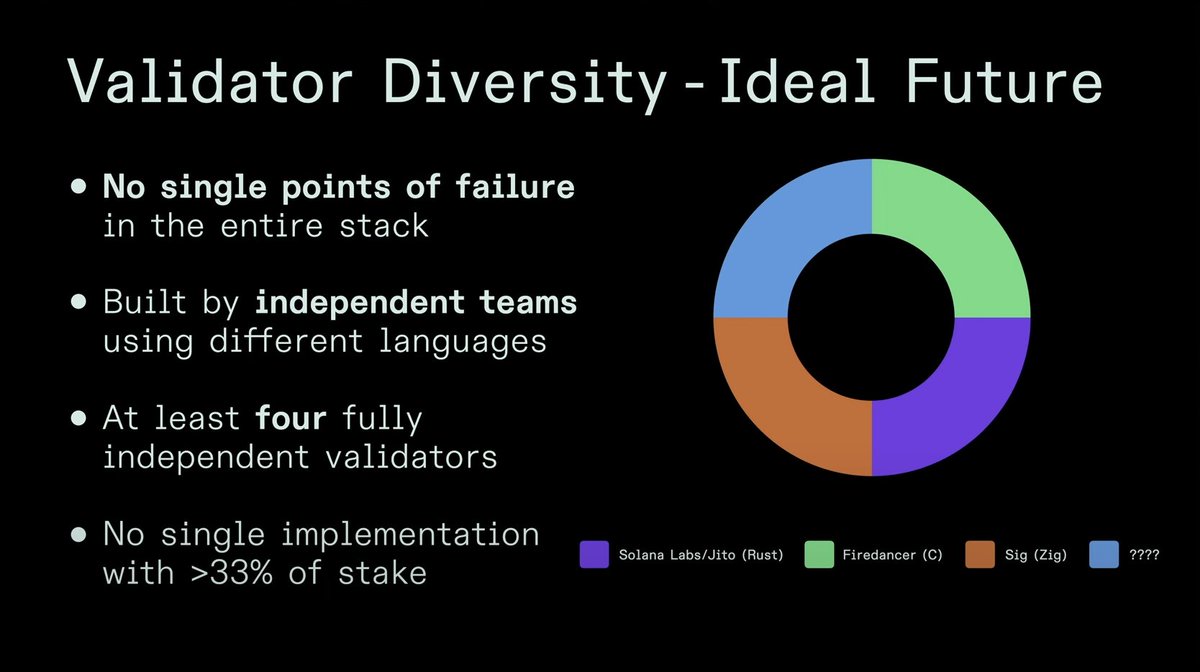

4/30 A high number of validators and good stake distribution help with decentralization, but Solana is still relying on a single client implementation: the @jito_labs client (currently running on ~30% of the stake) shares most of its codebase with the @solanalabs client.

@DanPaul000 presents an ideal future in which Solana can count on 4 independent client implementations, wherein none of them accrues more than 33% of the stake. That's a future without single points of failure.

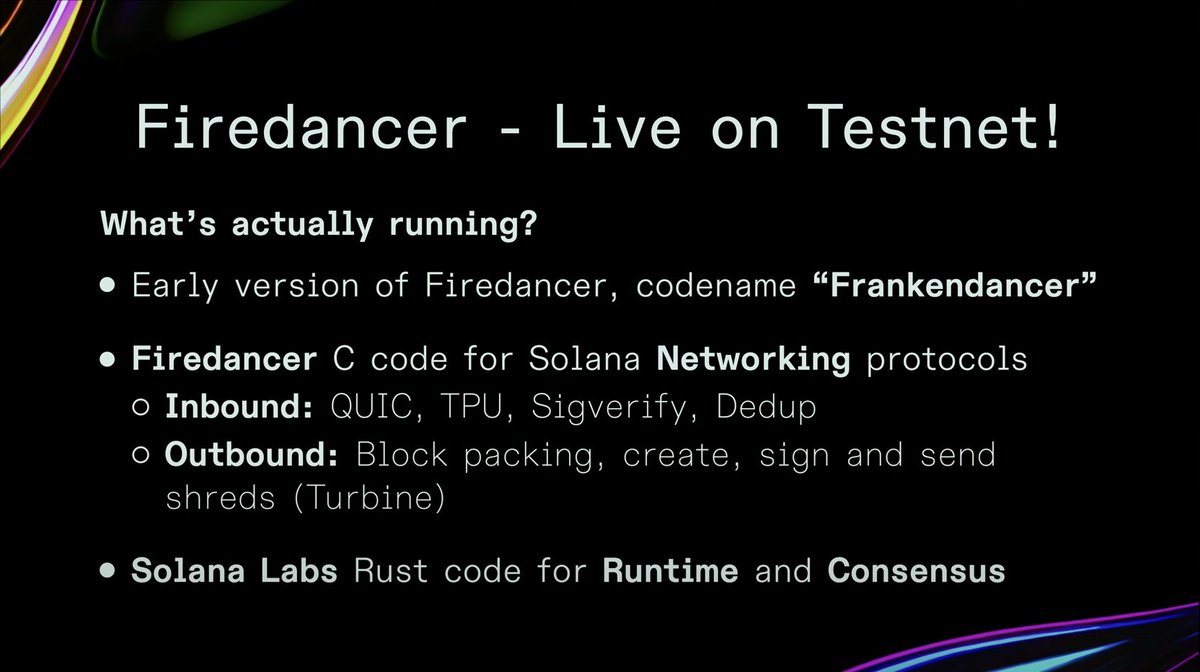

The good news is that we are getting closer to that future, as @jump_firedancer is live on testnet! It's working on commodity hardware, staked, voting and producing blocks together with Solana Labs and Jito Labs validators.

To be precise, what is live on testnet today is a hybrid, early version nicknamed "Frankendancer", as it uses its own code for Networking functions, but it relies on Solana Labs code for Runtime and Consensus.

Firedancer is the first new client built from the ground up in C to avoid any dependence on the Solana Labs client, with the ultimate goal of achieving maximum performance, limited only by hardware scaling.

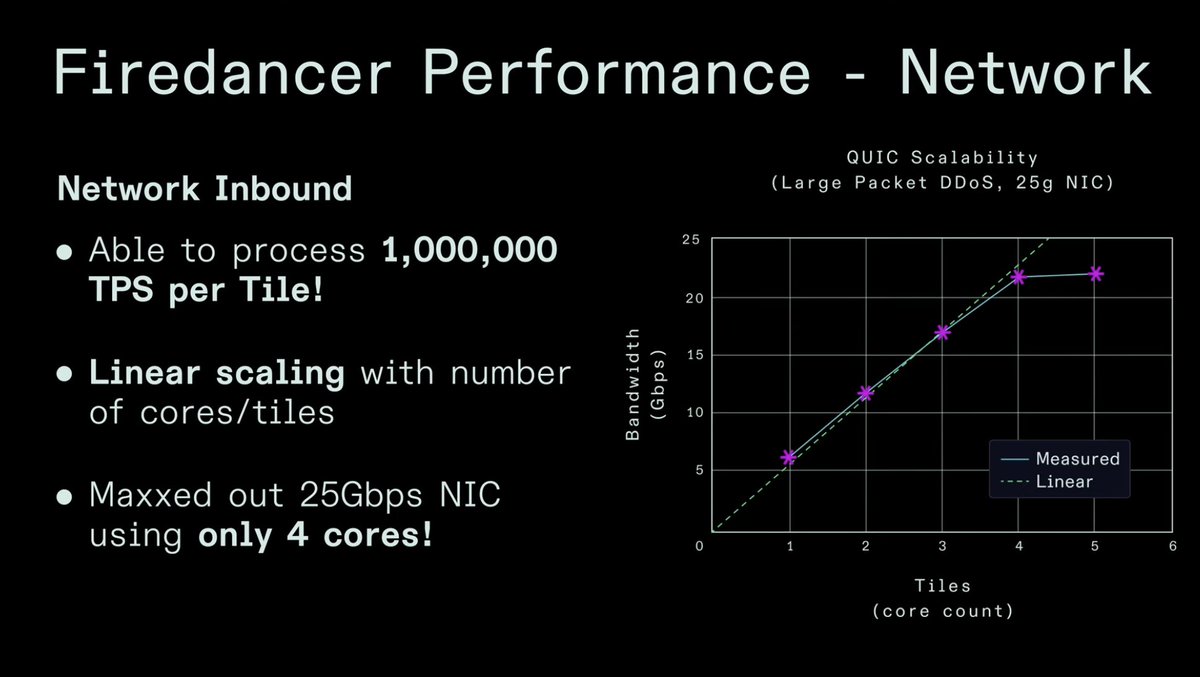

Early tests are very promising and can show off the results achieved by the Firedancer team on Networking functions (e.g. receiving transactions, verifying signatures, data propagation). For instance, Firedancer's QUIC can process 1 MTPS per core, reaching the physical limits of a 25 Gbps NIC with only 4 cores!

The next steps include getting Frankendancer on Mainnet sometime in the first half of 2024 and shipping a fully independent Firedancer by the end of 2024. An independent end-to-end Firedancer would give the most benefits in terms of performance and client diversity.

5/30 The @jump_firedancer team gave us insights on how they achieved such high performance in the functions already implemented and live on testnet, with focused technical sessions on faster execution of ED25519 sigverify and Reed-Solomon encoding / decoding.

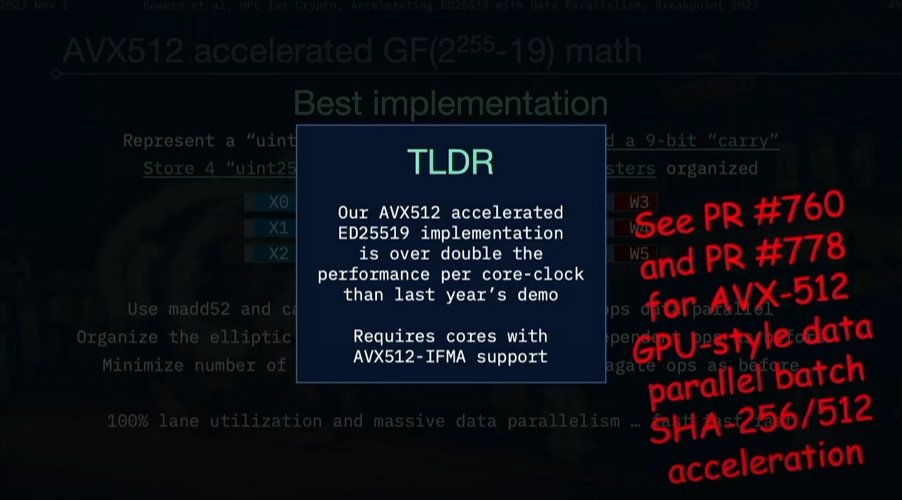

Accelerating ED25519 is critical. If you really go to the core of what a validator needs to do, you will find signature verification. There is no way around that. ED25519 is not cheap and was not designed for parallel execution. Kevin Bowers explained how Firedancer achieves 2x faster execution using cores with AVX512-IFMA support. It was also interesting to learn from Kaveh Aasaraai how ED25519 can be significantly accelerated with custom FPGAs. These people are hardcore laser focused on getting the most out of the hardware.

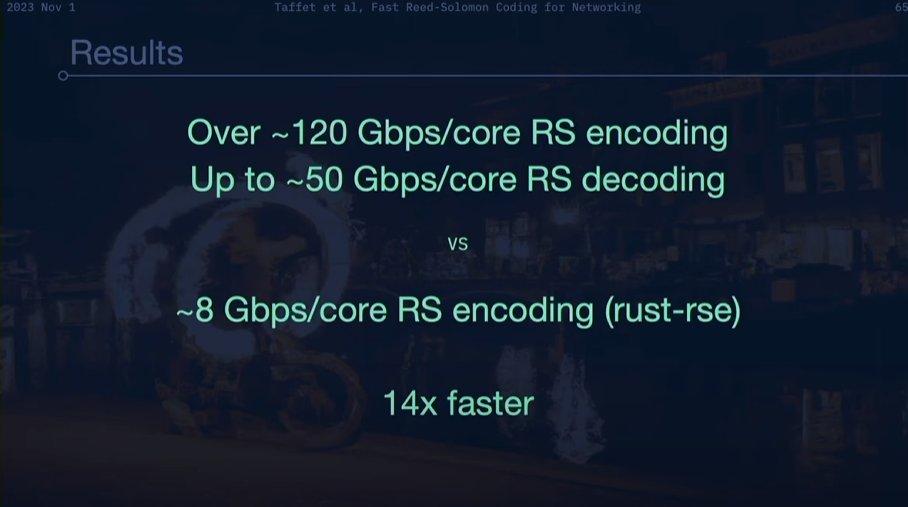

Philip Taffet presented a blazing fast library for encoding / decoding the Reed Solomon (RS) codes needed for block propagation. This is critical for Solana: fast data propagation is achieved by distributing block fragments (shreds) to a subset of nodes in a tree configuration. This is faster than traditional gossip protocols but it's vulnerable to packet loss unless you use erasure codes for reconstructing data.

The new library achieves over 120 Gbps/core for encoding and 50 Gbps/core for decoding, while the standard rust library used by the Labs validator is limited to 8 Gbps/core for encoding.
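To make the erasure-coding idea concrete, here is a minimal sketch using a single XOR parity shred. This is a deliberately simplified stand-in, not Reed-Solomon and not Firedancer's library: it can repair only one lost shred, whereas RS codes tolerate several. The function names and the shred count are assumptions for illustration.

```python
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(block: bytes, k: int) -> list:
    """Split `block` into k equal data shreds (zero-padded) plus one parity shred."""
    size = -(-len(block) // k)  # ceiling division
    padded = block.ljust(size * k, b"\0")
    shreds = [padded[i * size:(i + 1) * size] for i in range(k)]
    parity = reduce(xor_bytes, shreds)
    return shreds + [parity]


def recover(shreds: list) -> list:
    """Rebuild at most one missing shred (marked None) by XOR-ing the others."""
    missing = [i for i, s in enumerate(shreds) if s is None]
    if len(missing) > 1:
        raise ValueError("a single parity shred can repair only one loss")
    repaired = list(shreds)
    if missing:
        present = [s for s in shreds if s is not None]
        repaired[missing[0]] = reduce(xor_bytes, present)
    return repaired


sent = encode(b"hello solana shreds", k=4)
received = list(sent)
received[2] = None  # simulate one dropped packet
assert recover(received) == sent
```

The point is the same as in the talk: a receiver does not need every packet, only enough of them to reconstruct the block.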

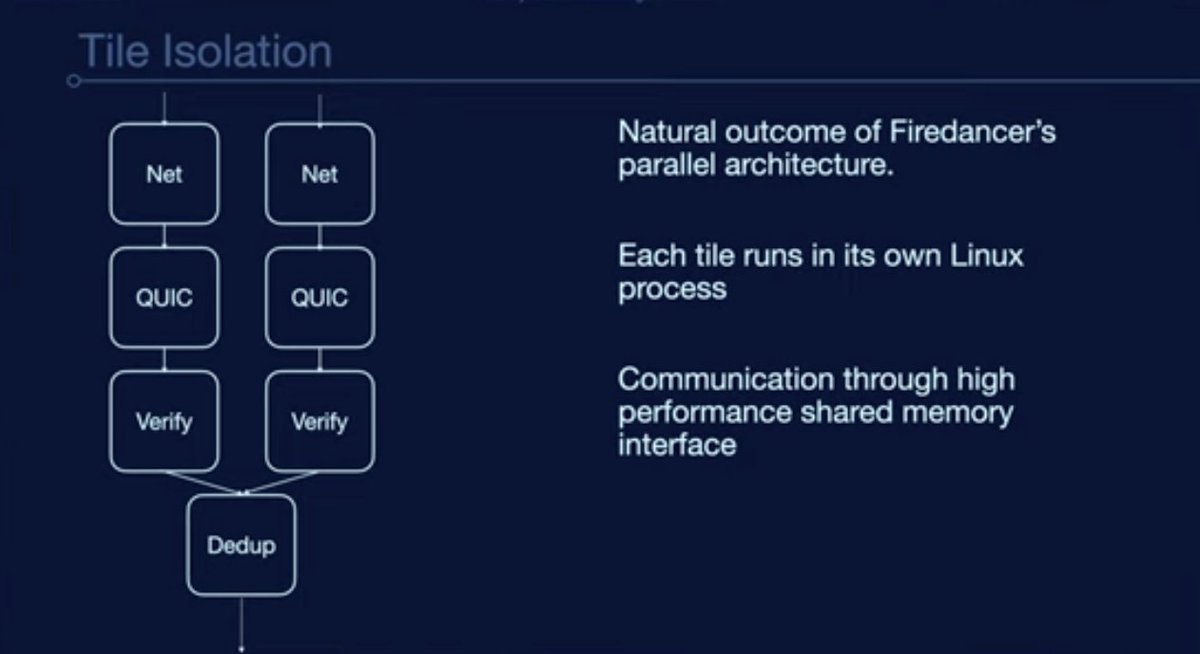

Last but not least, Felix Willhelm presented Firedancer's focus on security, including continuous fuzzing (automatic testing via continuous supply of untrusted data) + code reviews (it turns out they cover an almost completely independent set of bugs). Firedancer achieves security via defense in depth: vulnerabilities are mitigated as much as possible. If exploits still arise, tile isolation and OS sandboxing prevent the system from being fully compromised.

Interestingly, tile isolation is good not only for performance (allowing you to optimize single functions), but also for limiting the damage in case of exploits and for focusing on the functions that are presumably of greatest interest to an attacker (verification).

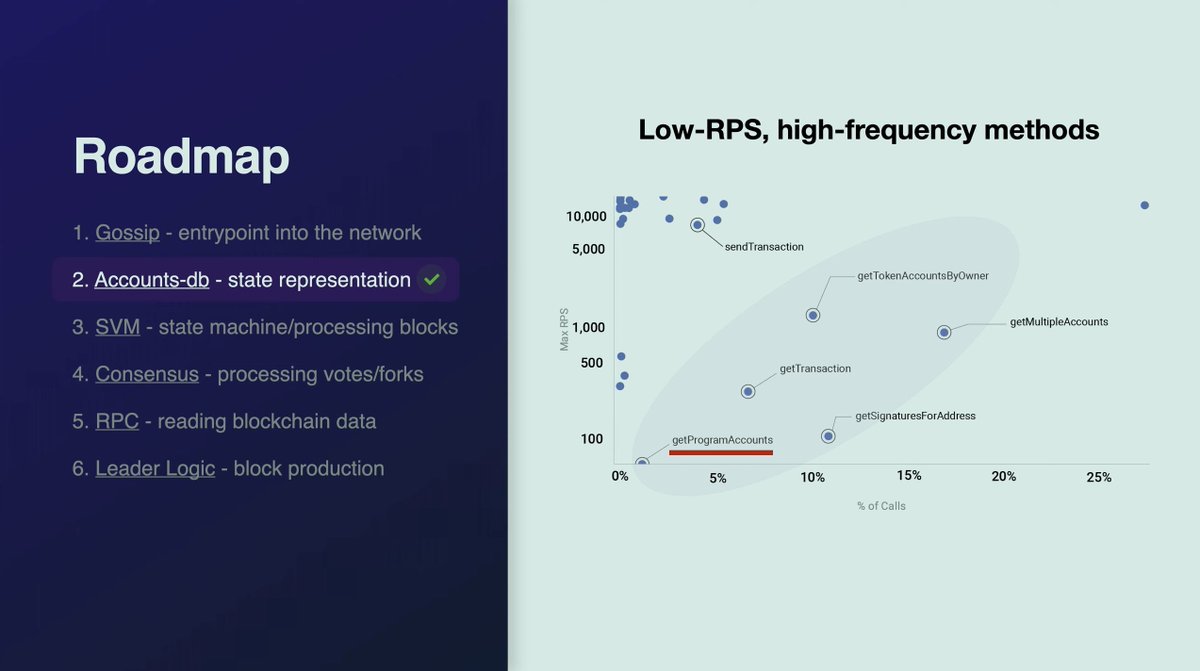

6/30 2 independent validators is good, 3 independent validators is better! @1ultd and @0xNineTeen from @syndica_io presented their progress on building Sig, a new read-optimized Solana validator.

Syndica is an RPC node provider. RPC nodes see a lot of data, and Syndica noticed a problem: 96% of all calls are read calls, and some methods are particularly expensive in the current client implementation. This can cause slot lag and ultimately a user experience not as smooth as it could be with 400 ms block times.

Sig is a new validator client built to optimize reads-per-second (RPS). Sig is written in Zig, a general purpose programming language for maintaining robust and reusable software. Zig has good readability, hopefully inviting more developers to contribute to the client implementation.

Sig is at the second stage of its implementation roadmap, with focus on low RPS and/or high-frequency methods.

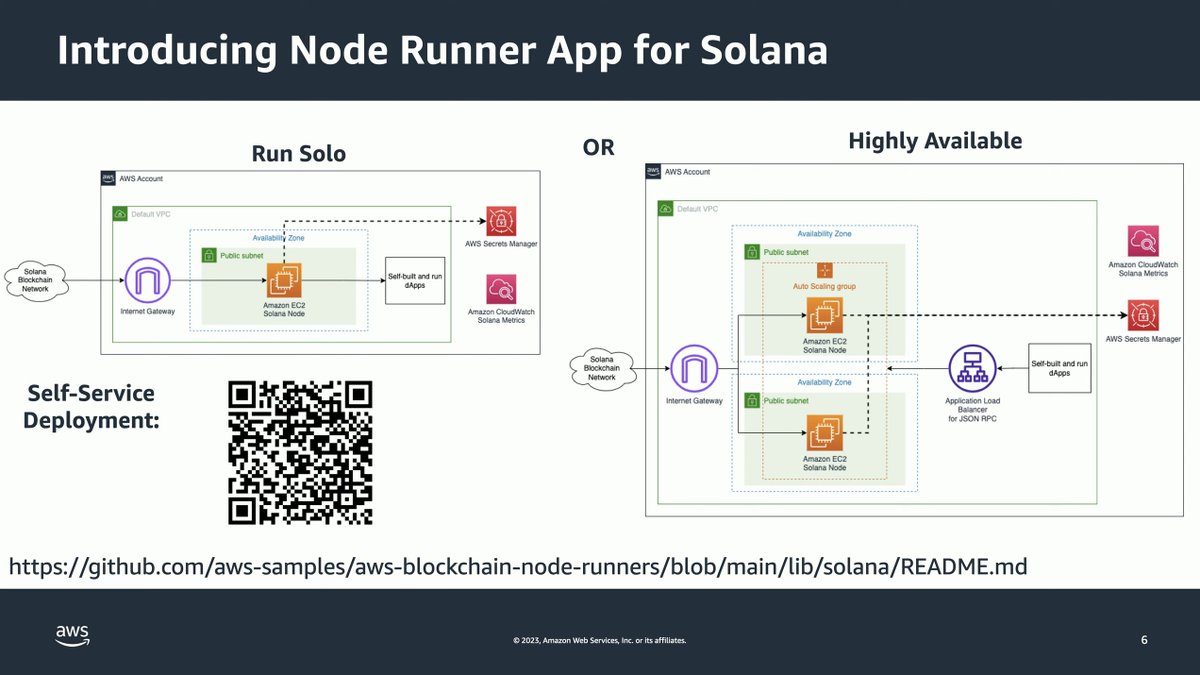

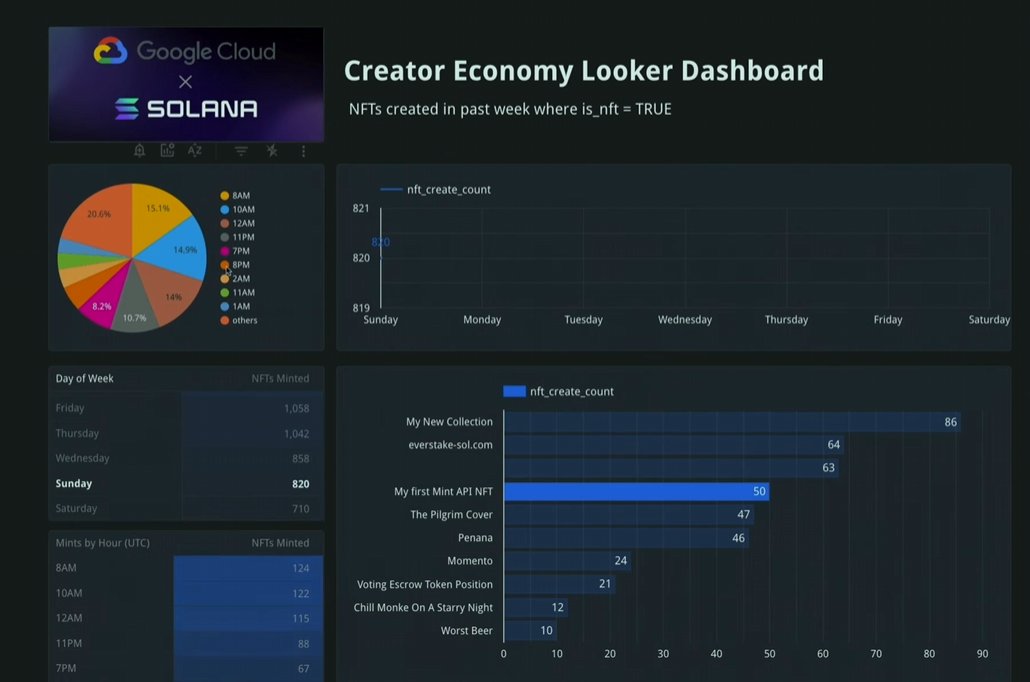

8/30 @googlecloud has been running a Solana validator for a long time. @jmtromans announced that Solana data is now indexed in Google Cloud BigQuery and integrated with Duet for AI powered queries and Looker for generating dashboards.

In addition, the Blockchain Node Engine will allow deploying Solana non-voting RPC nodes with one click. Solana is the first non-EVM chain to be supported by Blockchain Node Engine.

9/30 @harsh4786 and @anoushk77 presented their progress on @tinydancerio, the first light client for Solana, massively reducing the cost for users to verify the chain. The roadmap comprises three phases: simple payment verification (SPV), data availability sampling and block reconstruction, SVM fraud proving system.

The recent approval of SIMD-64: Transaction Receipts was a huge step towards the completion of phase 1 enabling SPV on Solana.

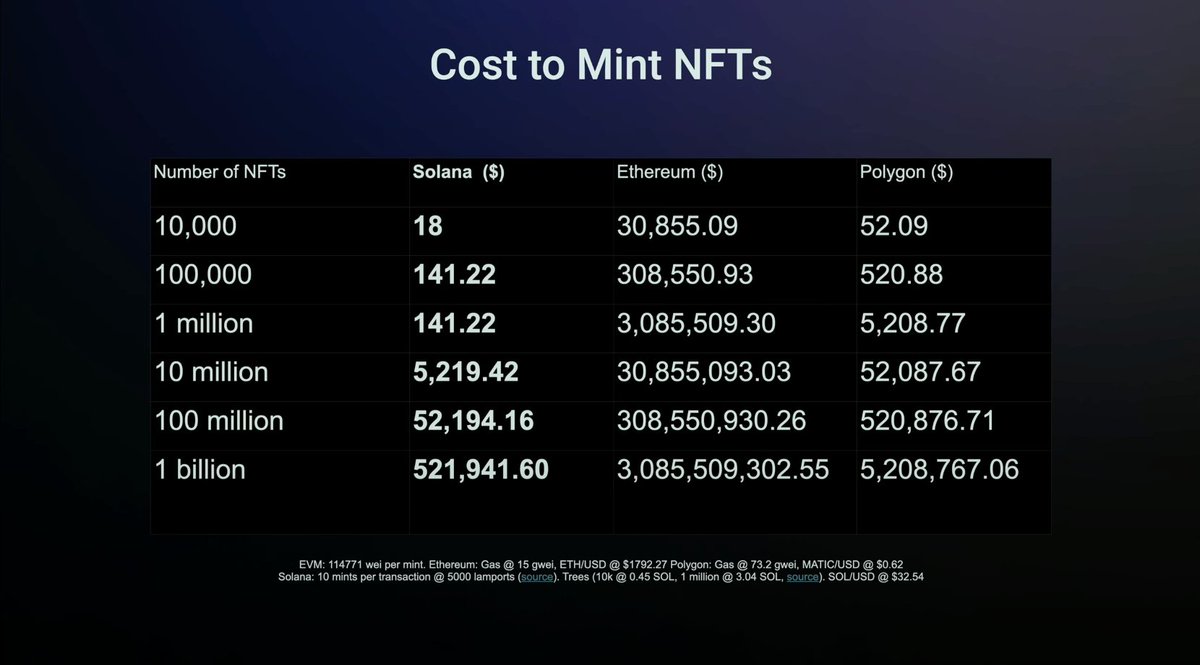

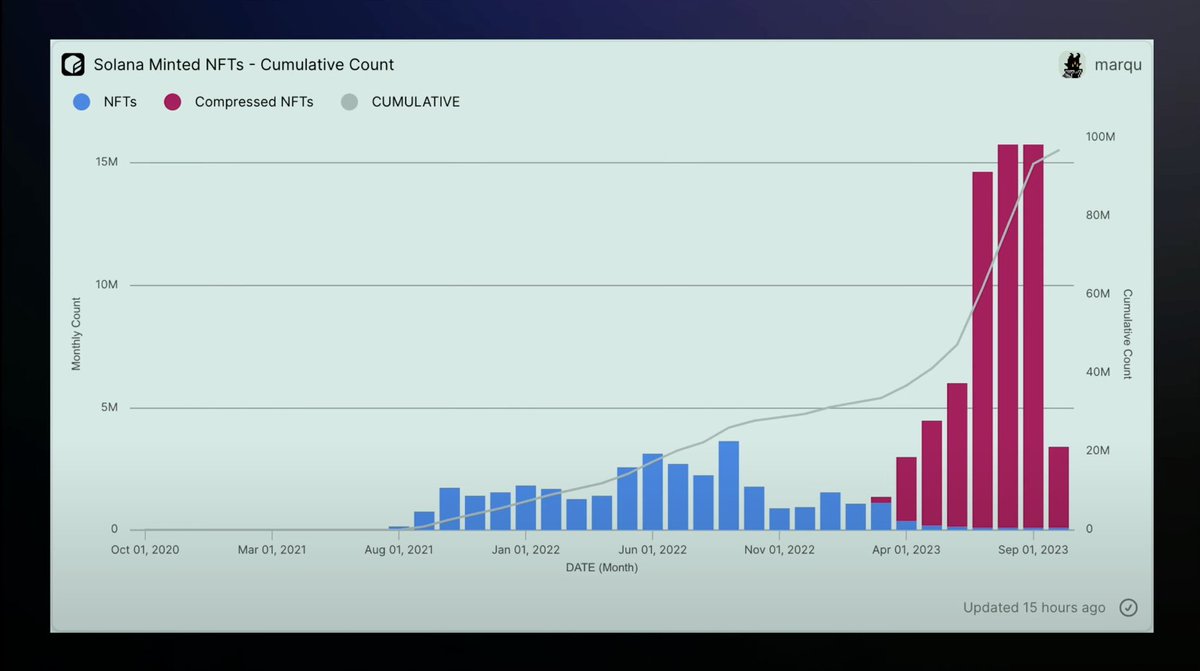

10/30 State compression on Solana works by merklizing data and storing it off-chain. Only the merkle root is required to be stored on-chain, massively reducing cost. Data can be modified through transactions on-chain, and in general data can be reconstructed by replaying the ledger.

This naturally found a use case in minting huge amounts of NFTs (see @drip_haus drops, @saydialect sticker packs).

@jnwng notes how state compression goes beyond the NFT applications we are seeing today; in its more general definition, state compression is "the ability to verifiably secure data in the Solana ledger for use in programs". Potentially this can bring everything on chain and enable private state, rollups, gaming, social media, etc.
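The "only the root lives on-chain" idea can be sketched with a generic Merkle tree. This is a minimal illustration using SHA-256 and a plain binary tree; it is an assumption for teaching purposes and not the actual layout or hash function used by Solana's compression programs. All function names and leaf values are hypothetical.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_levels(leaves):
    """Return every tree level, from hashed leaves up to the single root."""
    if not leaves:
        raise ValueError("need at least one leaf")
    level = [h(leaf) for leaf in leaves]
    levels = []
    while True:
        if len(level) > 1 and len(level) % 2:
            level = level + [level[-1]]  # duplicate last node on odd levels
        levels.append(level)
        if len(level) == 1:
            return levels
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]


def proof_for(levels, index):
    """Sibling hashes needed to recompute the root from leaf `index`."""
    proof = []
    for level in levels[:-1]:
        sibling = index ^ 1
        proof.append((level[sibling], sibling < index))  # (hash, sibling_is_left)
        index //= 2
    return proof


def verify(leaf, proof, root):
    """Recompute the path to the root using only the leaf and its proof."""
    node = h(leaf)
    for sibling, sibling_is_left in proof:
        node = h(sibling + node) if sibling_is_left else h(node + sibling)
    return node == root


leaves = [b"nft-0", b"nft-1", b"nft-2"]  # off-chain data (made-up values)
levels = build_levels(leaves)
root = levels[-1][0]                     # the only value that must be stored on-chain
assert verify(b"nft-1", proof_for(levels, 1), root)
```

Anyone holding the off-chain data and a proof can show it matches the 32-byte root, which is why storage cost barely grows with the number of items.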

11/30 The future of web3 is mobile. @Soonganoid explained how @solanamobile is pushing towards this vision, presenting Wallet-Standard on iOS, a Safari extension wallet enabling self custody on iOS today.

The wallet is open source and lets you interact with dApps directly on Safari - no need for an in-app browser.

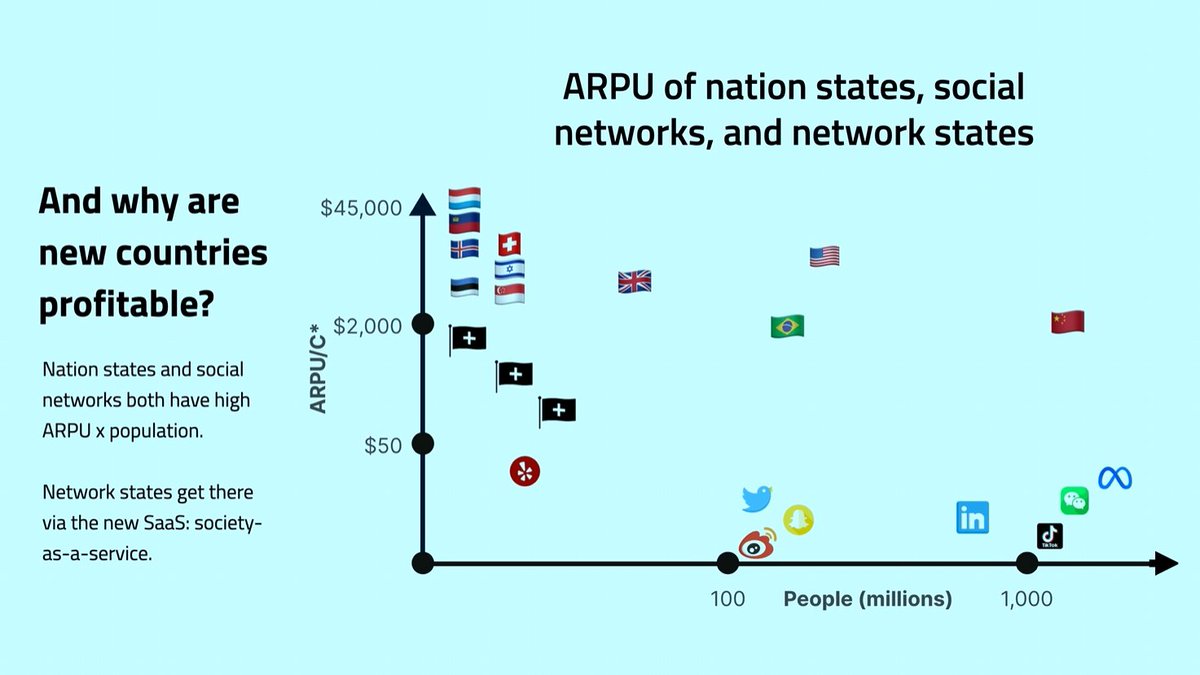

12/30 Started as a concept, @thenetworkstate is turning from theory to practice. Can we go beyond starting new currencies to starting new countries? @balajis made the case for how starting network states today is not only possible, but also preferable (for better opportunities) and profitable (via subscription fees: society as a service).

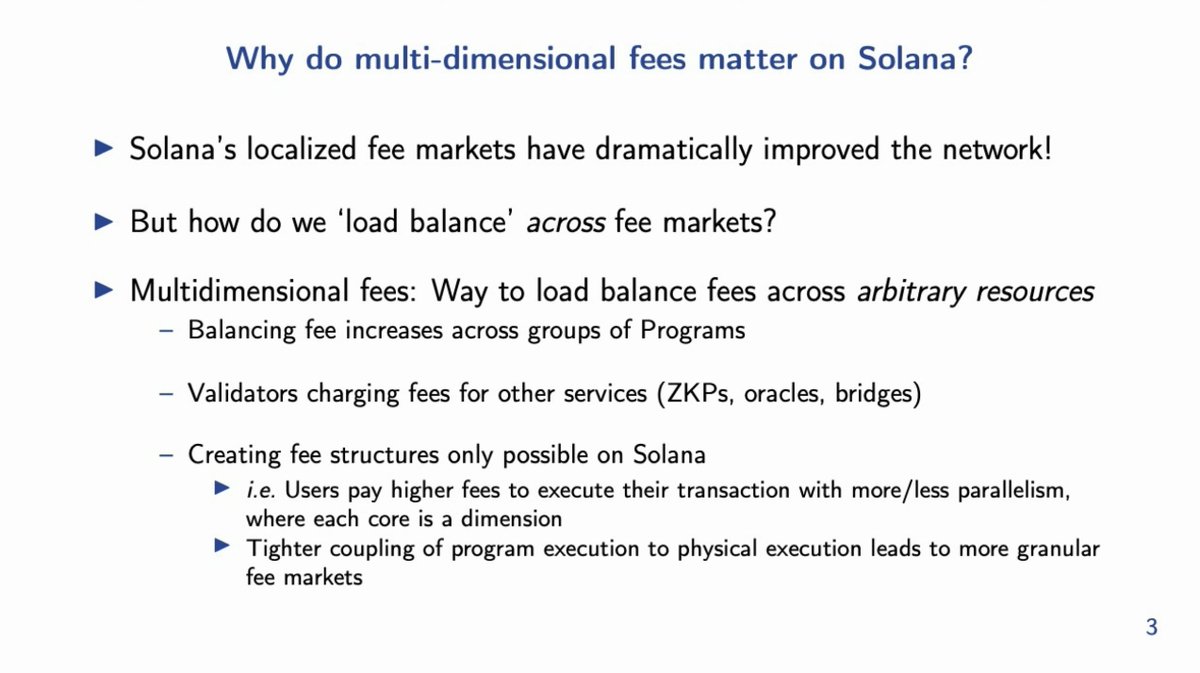

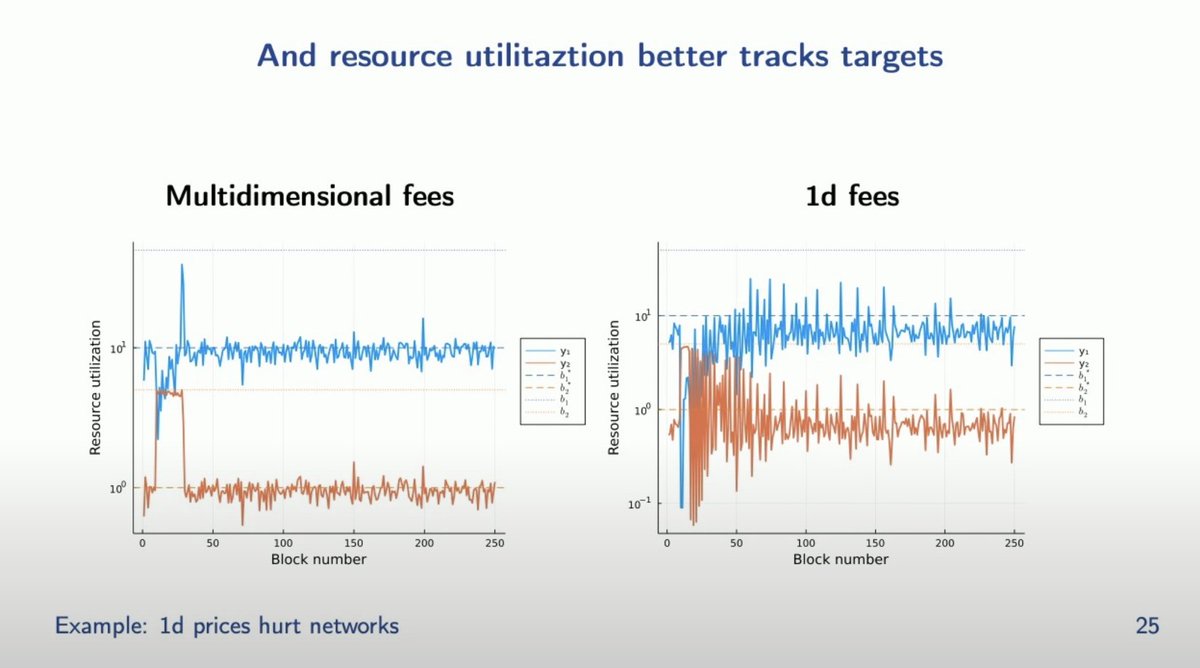

13/30 It is clear that fee markets with fixed relative prices (same price for access to orthogonal resources, e.g. execution and compute) are inefficient and a multi-dimensional fee market is desirable. Here, @tarunchitra presents his work on "Designing Multidimensional Blockchain Fee Markets", co-authored with @theo_diamandis, @GuilleAngeris and @alexhevans, on a framework to optimally set multi-dimensional base fees.

This is particularly interesting for Solana, not only because it is already making good use of parallel fee markets to isolate programs in high demand, but also:

- validators may charge fees for other services beyond solana programs

- further work on how cores could be clustered and allocated to different resources

- synergy with future network upgrades like multiple proposers

The referenced paper proposes a method to update prices in a multi-dimensional fee market, showing more efficient resource utilization and ultimately a higher transaction throughput.
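To give a feel for what "updating prices per resource" means, here is a minimal sketch of an EIP-1559-style exponential update applied independently to each resource. It is a simplified illustration and not the paper's exact update rule; the resource names, targets and learning rate are all assumptions.

```python
import math


def update_prices(prices, usage, targets, learning_rate=0.125):
    """Raise the price of each resource used above its target, lower it below."""
    return {
        resource: prices[resource]
        * math.exp(learning_rate * (usage[resource] - targets[resource]) / targets[resource])
        for resource in prices
    }


# Hypothetical resources and numbers, for illustration only.
prices = {"compute": 1.0, "bandwidth": 1.0, "storage": 1.0}
targets = {"compute": 100.0, "bandwidth": 100.0, "storage": 100.0}
usage = {"compute": 180.0, "bandwidth": 40.0, "storage": 100.0}

print(update_prices(prices, usage, targets))
```

With a single shared price, the congested resource (compute here) would make the underused one (bandwidth) more expensive too; separate prices let each resource clear on its own.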

14/30 @urosnoetic presented the problem of finding the right balance between token burn and mint economics for decentralized physical infrastructure networks (DePIN). Most models set the burn as a function of the price of the service paid by the consumers. This price is set in fiat and paid by the consumers with the protocol token, which is subsequently burned. Producers (aka contributors, workers) are rewarded with newly minted protocol tokens.

This is tough to balance, as if the contributors are not incentivized enough they will eventually leave the network, but if instead they receive too many tokens eventually the token price will collapse. The proposed solution is based on setting not only the service cost, but also the producer incentive in fiat.
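A quick back-of-the-envelope sketch of that idea, under my own reading of the proposal: both the service revenue and the producer incentive are fixed in fiat, and the token amounts follow from the current token price. The function name and all numbers are hypothetical.

```python
def epoch_token_flows(service_revenue_fiat, producer_incentive_fiat, token_price_fiat):
    """Return (tokens burned, tokens minted, net supply change) for one epoch."""
    if token_price_fiat <= 0:
        raise ValueError("token price must be positive")
    burned = service_revenue_fiat / token_price_fiat    # consumers pay in tokens, which are burned
    minted = producer_incentive_fiat / token_price_fiat  # producers receive newly minted tokens
    return burned, minted, minted - burned


# Made-up example: $1000 of services sold, $800 of incentives, token at $2.
print(epoch_token_flows(1000.0, 800.0, 2.0))  # (500.0, 400.0, -100.0)
```

Because both sides are denominated in fiat, the sign of the net supply change depends only on revenue versus incentives, not on the token price itself.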

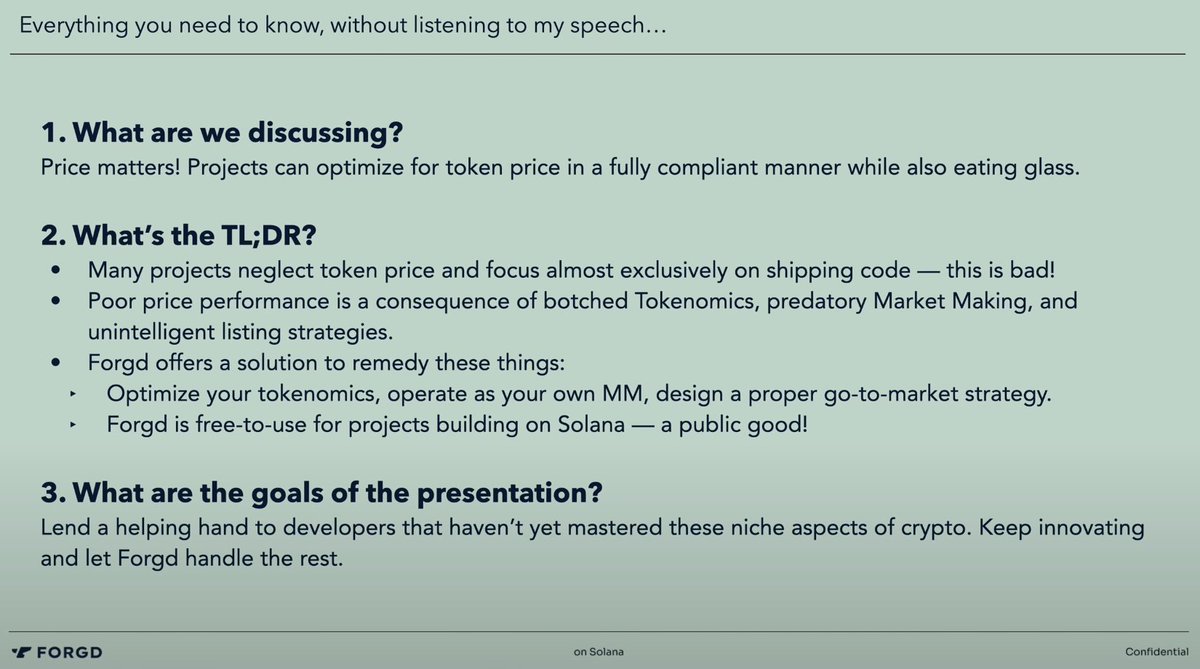

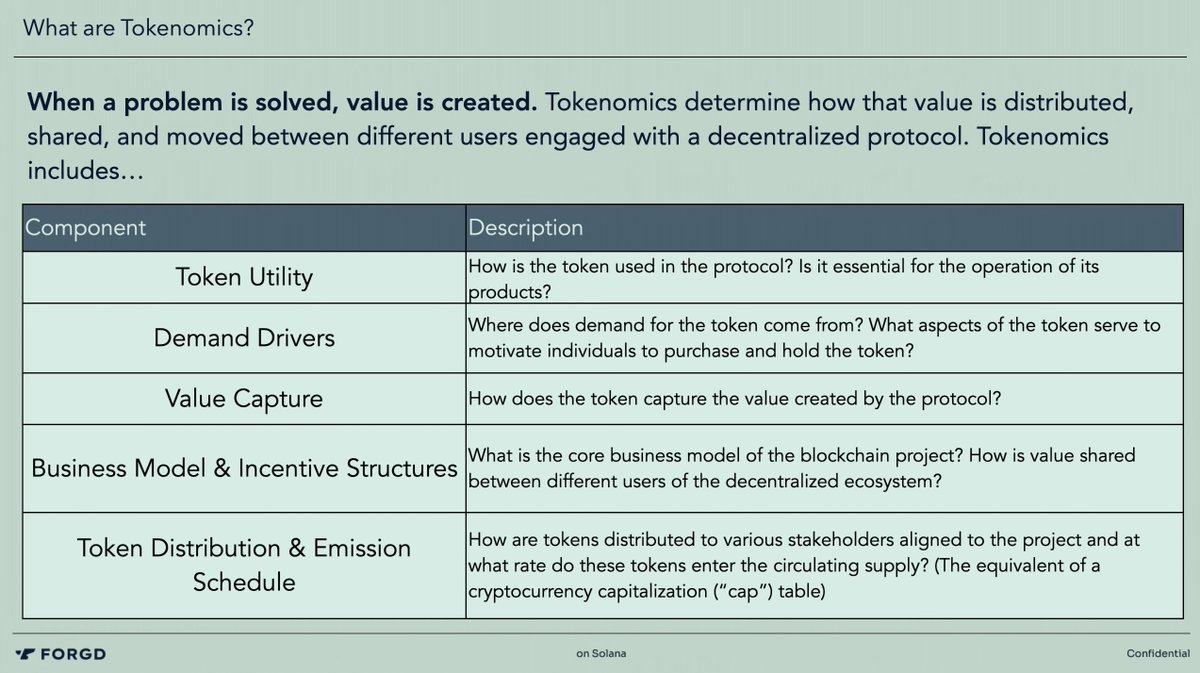

15/30 Forgd is a public good that lets Solana developers focus on the tech while Forgd supports them on token price performance. The founder @MolidorShane explains how 1) price performance is extremely important for network growth, 2) poor price performance is often related to the same recurring issues:

- botched tokenomics design (e.g. low float high FDV, lack of value capture mechanisms)

- bad relationships with market makers (e.g. giving them too much influence, not monitoring)

- bad listing strategies (creating imbalances at launch in terms of supply and demand)

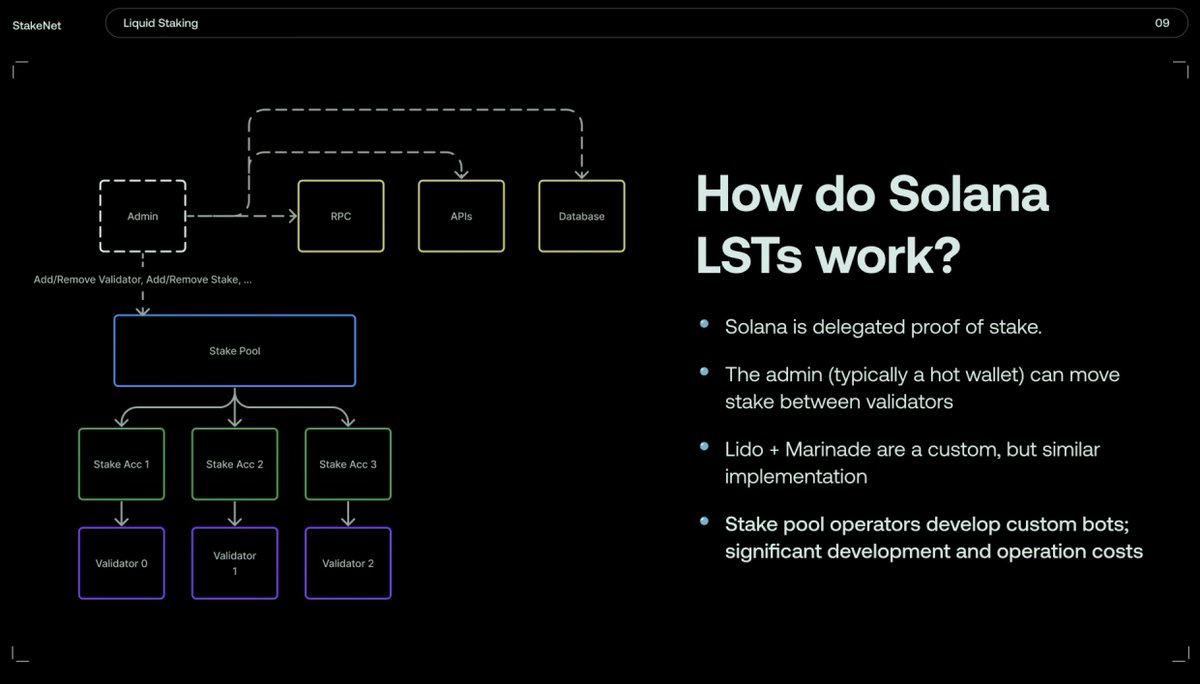

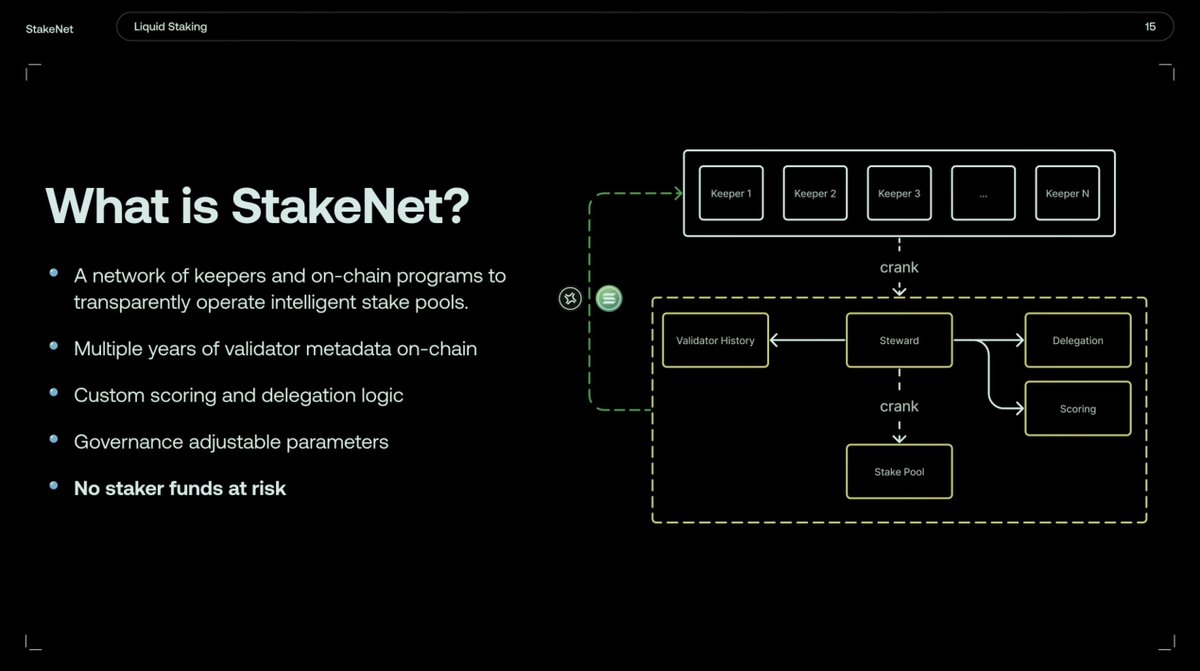

16/30 @buffalu__ and @segfaultdoctor presented @jito_labs StakeNet, a new protocol to make $SOL liquid staking more decentralized.

Solana LSTs in their current form have users depositing $SOL in the stake pool, and an admin distributing stake delegations across different validators. This is centralized as the admin is typically a hot wallet and a single point of failure / attack vector, and its operation is less transparent than a decentralized solution.

With Jito StakeNet, LSTs on Solana can truly become DeFi. Jito StakeNet is a self-sustaining and decentralized protocol for operating stake pools. Stake pools are intelligent as the stake is allocated via custom scoring and delegation logic with governance-adjustable parameters. All the code will be open-source and verified. Everything is running on chain and maintained by a network of keepers, with the ultimate goal of making @jito_sol timeless.

17/30 Started as an oracle network on Solana, @PythNetwork is now running on its own SVM-based app-chain and providing price feeds to multiple chains. Data provided by publishers is pulled on-demand by the apps with very low latency.

@mdomcahill presented the progress made by Pyth in recent months, showing how it dominates on total value secured (TVS) on many chains, in particular on high-throughput, low-latency chains. The impressive growth made by Pyth validates their economy, in which publishers are incentivized to push directly on-chain.

In addition, we got the announcement of the Pyth retrospective airdrop for community members and users of decentralized applications that use Pyth Network data.

Governance topics will include:

- size of update fees

- reward distribution mechanism for publishers

- approving other software updates to on-chain programs across blockchains

- how products are listed on Pyth (e.g. type of assets and assets to be added)

- how publishers will be permissioned to provide data for price feeds

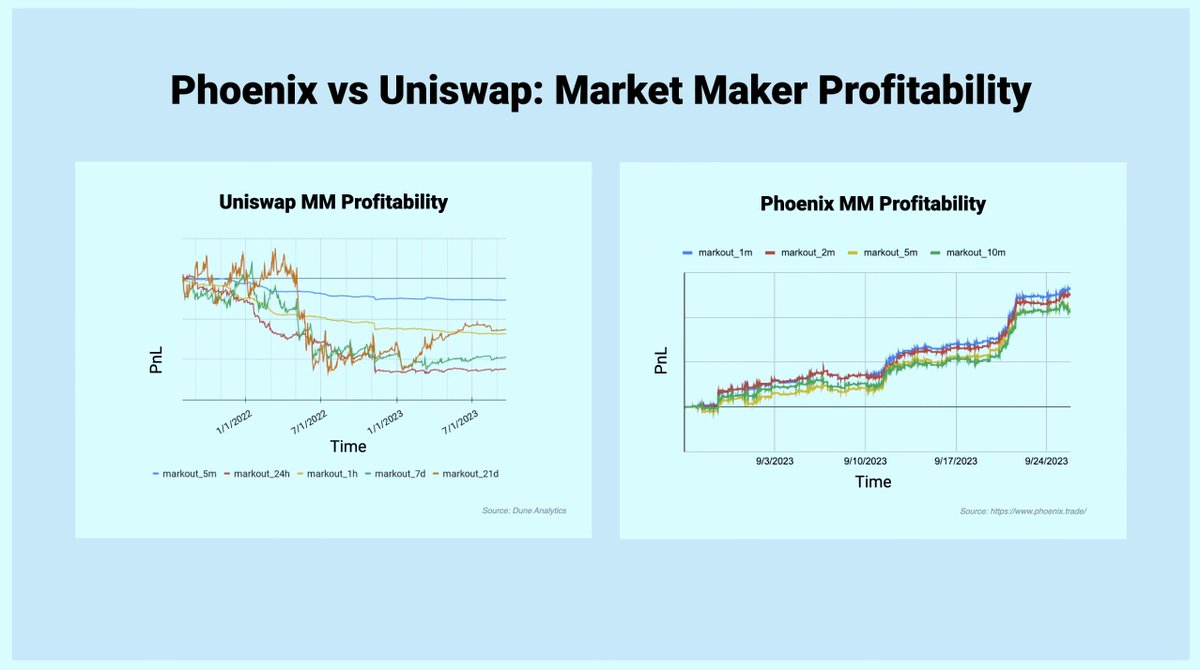

18/30 DeFi principles are extremely important as transparency and permissionless innovation in an open competitive environment can produce better long-term outcomes for the users. But still, as well put by @0xShitTrader in his presentation, decentralization in itself is not enough to provide value to market participants. DeFi needs to out-compete TradFi on quality of liquidity, sustainability and user experience.

The problem is that we are not there yet. DeFi today is broken, with traditional on-chain AMMs being worse both for makers and takers. Takers suffer from low liquidity and high spreads, and makers lose money over time. How to fix it?

A possible approach consists of moving some components off-chain: off-chain RFQ, off-chain order books, consolidation of order flow and so on. The risk here is not only added complexity but, above all, trading off the benefits of decentralization for performance.

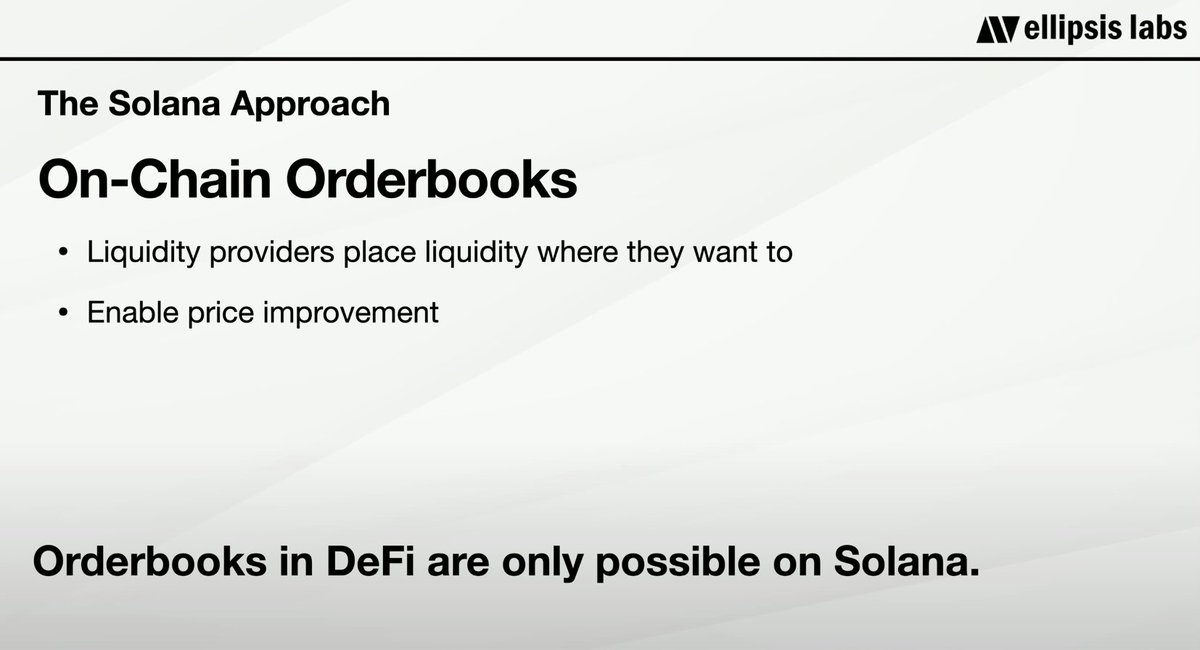

The @ellipsis_labs team follows a different approach: @PhoenixTrade is a fully on-chain limit order book on Solana, only possible due to low network fees. Phoenix market makers are profitable thanks to active liquidity, and takers benefit from tight spreads on par with, if not better than, CEXes. Big centralized exchanges still have the benefit of allowing large trades due to higher liquidity, but this is the way forward.

@solana developers should take advantage of Solana's unique features rather than replicating designs born on different systems.

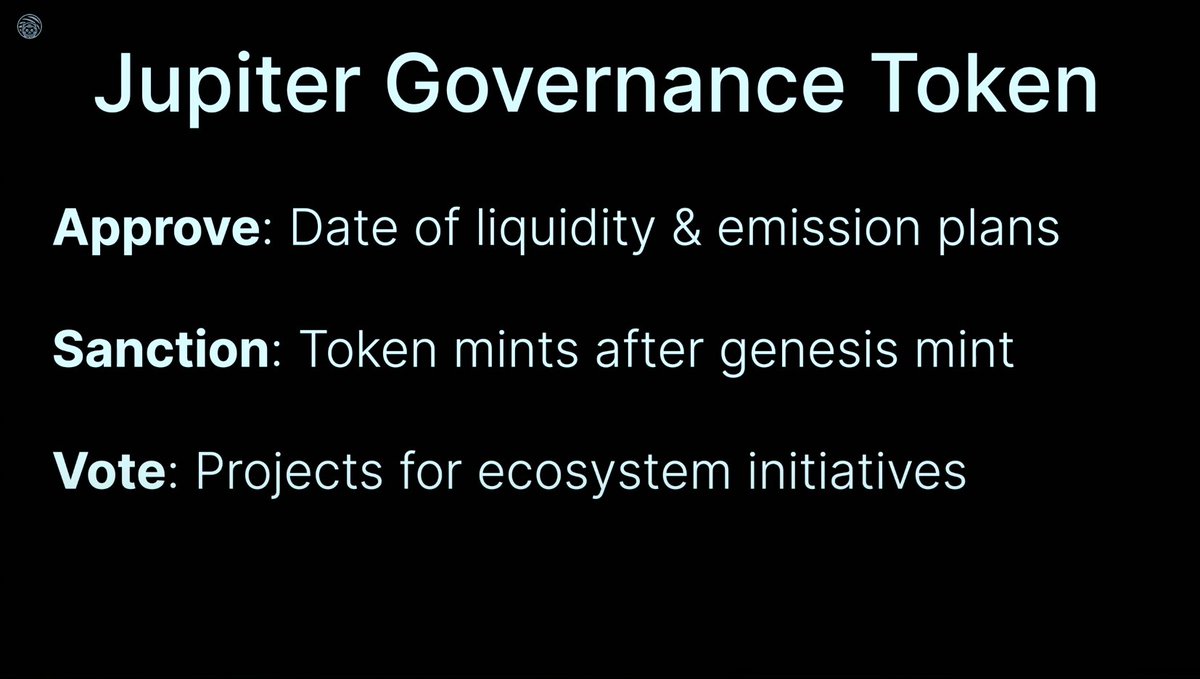

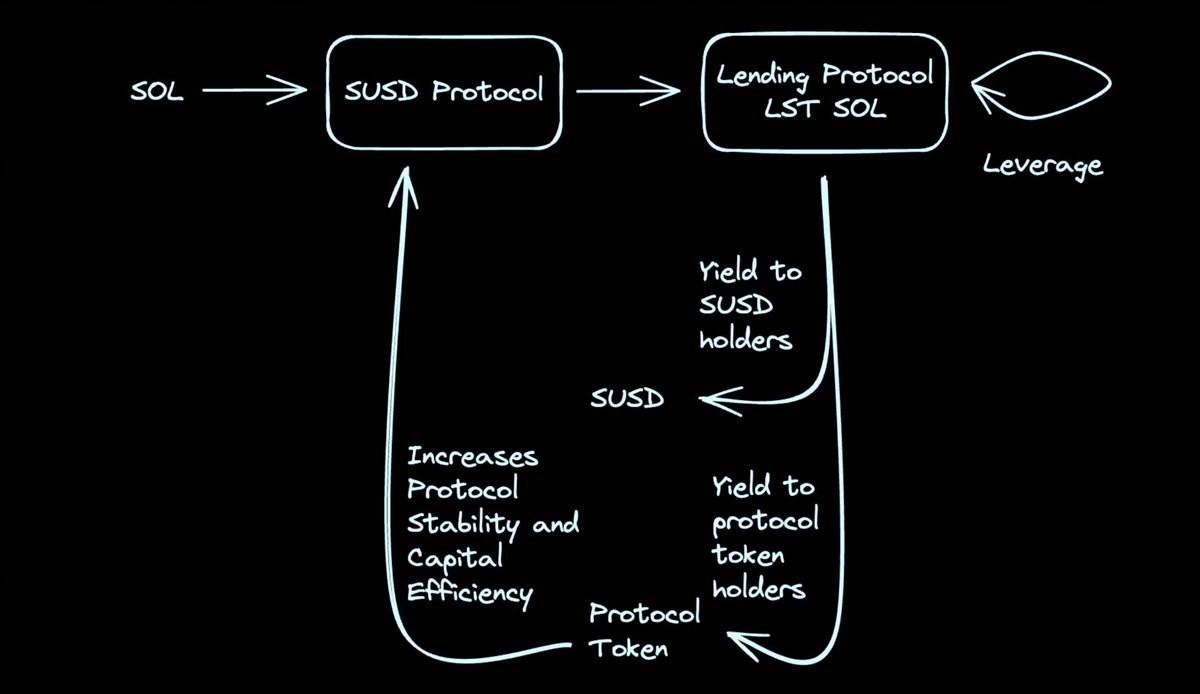

19/30 The @JupiterExchange governance token was announced at Breakpoint 2023. 40% of the total supply of $JUP will be airdropped to Jupiter users. In addition, the team is developing a protocol for a yield-bearing stablecoin $SUSD backed by leveraged $SOL LSTs.

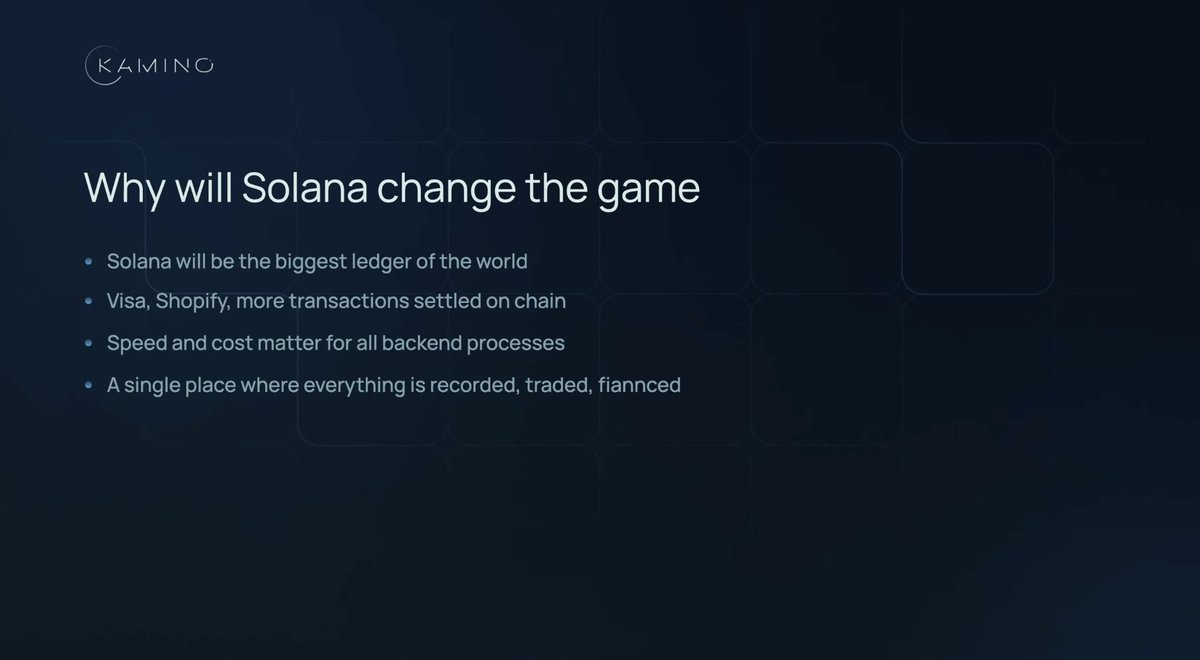

20/30 We know about the potential and benefits of decentralized finance, but DeFi has not really delivered on its promise yet: we don't have many interesting assets beyond native tokens, we lack liquidity and/or on-chain operations are expensive. We are still early in terms of infrastructure, but this is not the end state of DeFi.

@y2kappa knows that finance on @solana is about to go exponential. Solana, a global replicated state machine synchronized at the speed of light, is the best ledger for recording, trading, financing any asset.

@HubbleProtocol is helping bring more assets on chain, and @Kamino_Finance unifies financial activity, offering a platform to borrow, lend, leverage and provide liquidity on Solana.

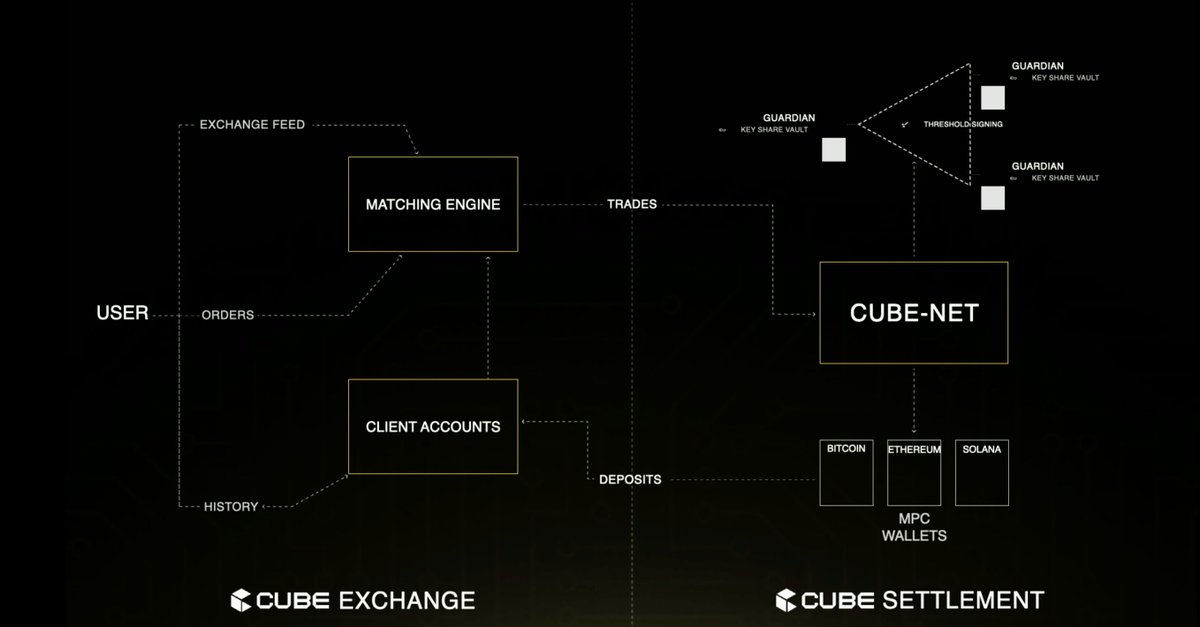

21/30 @baalazamon introduced @cubexch, an exchange aiming to be on par with centralized exchanges in terms of performance and convenience, without the centralized counterparty risk in terms of custody of the funds.

DEXes struggle to gain adoption as self-custody is hard and liquidity is bad. Cube exchange fixes this. User funds are secured by a whole SVM-based network of guardians, who collectively control MPC wallets deployed on different chains. This allows swapping native $BTC for $ETH or $SOL without incurring bridging risks or dealing with wrapped assets.

Trades are handled by an off-chain matching engine, providing a seamless trading experience. Only the MPC wallets are on-chain for decentralized custody.

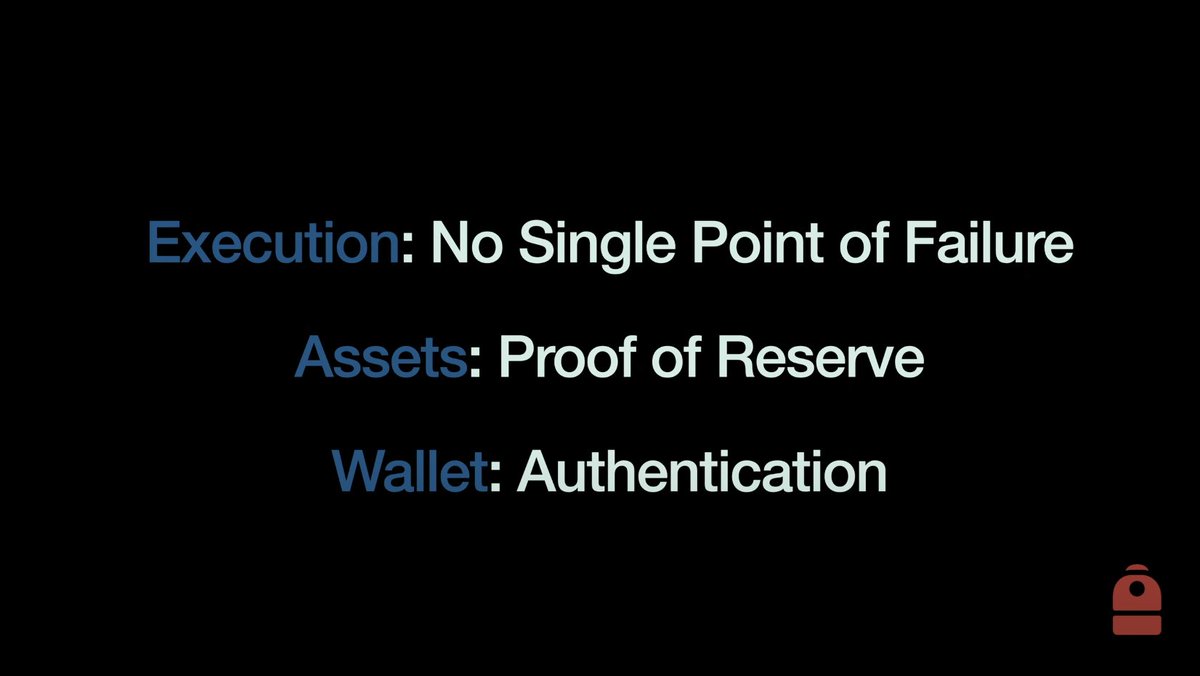

22/30 @armaniferrante announced the @xNFT_Backpack Exchange, a next-generation regulated exchange relying on cryptographic verifiability. It's time to learn from the failures of other centralized exchanges and do it right. For instance, there is no excuse not to have proof of reserve. Backpack users will be able to verify directly from their wallet. Execution won't have a single point of failure, relying on state machine replication.

The exchange will be well integrated with the wallet app. Users will be able to convert fiat from anywhere in the world to gas tokens ready to interact with decentralized applications. This is in contrast with the user experience today involving way too many steps and apps. At the same time, self-custody on the Backpack wallet is still fully private - the wallet is fully client side and stays private, no interference with the regulated exchange.

23/30 @KyleSamani tackles the modular vs integrated debate from first principles. What are blockchains and why are they useful? At their core, blockchains are asset ledgers, a tool to keep track of who owns what. The natural application of such a system is trading and payments.

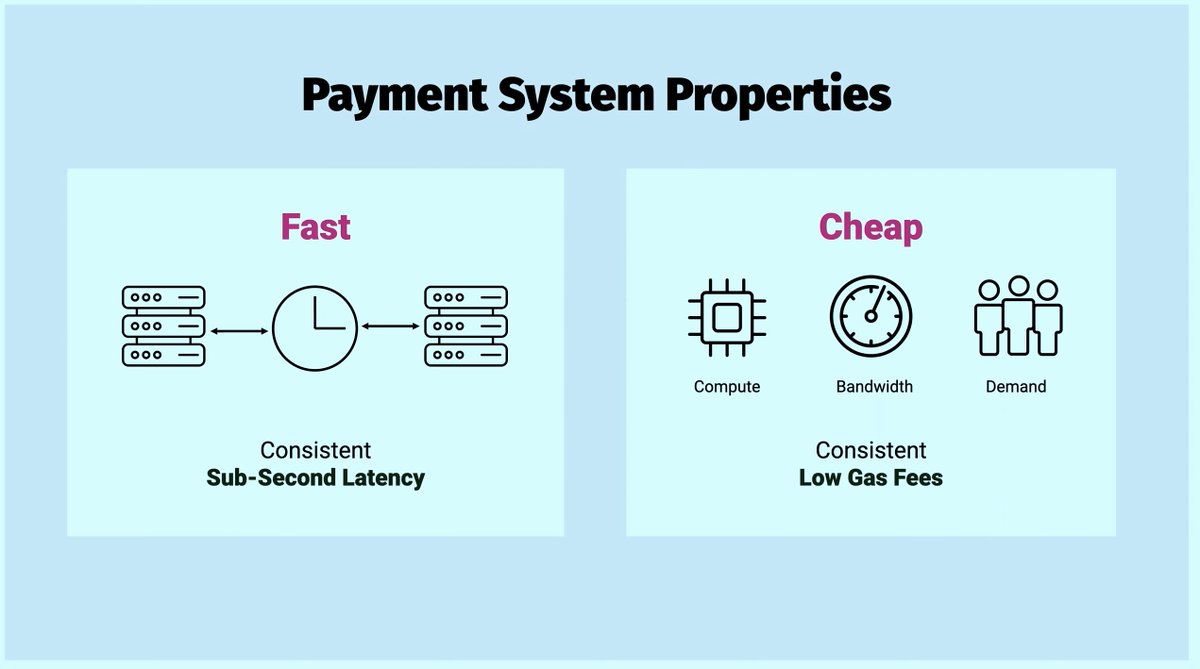

The question on how to optimize a blockchain-based network then becomes a question on how to optimize it for trading and payments... Starting from enabling fast and cheap transactions. Fast means fast confirmations, low latency. Cheap means that gas fees should be consistently low.

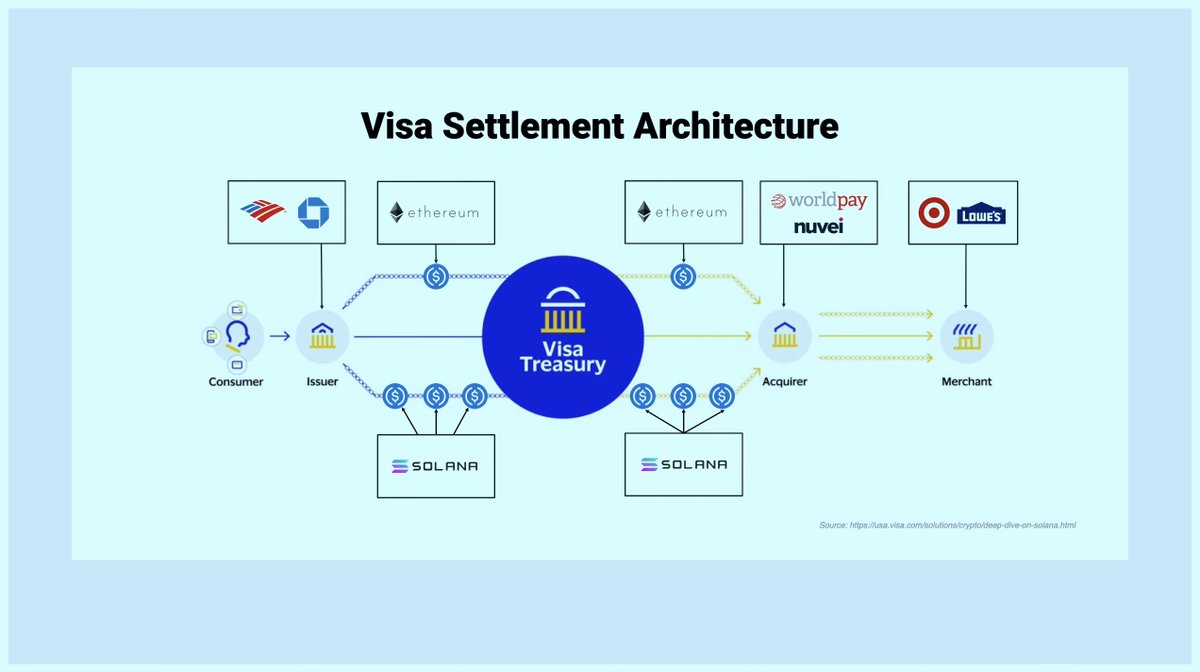

High performance, integrated systems are better for trading and payments, and we have practical evidence of this, with @Visa and @Worldpay_Global adopting USDC on @Solana payment settlements and @PhoenixTrade making market makers profitable with a fully on-chain order book exchange.

24/30 @mhudack presented @SlingMoney, an app to send money to anyone in the world, fast, cheap and easy to use. No crypto knowledge is required. After passing KYC, you can fund your wallet and use a third-party service for encrypted key backup. Critically, you can also send to someone who's not on Sling. They can just withdraw to their bank. Sling is already working in 30 countries with more coming up over time.

25/30 @helio_pay is built on the conviction that payments are the number one use case for blockchain, but there is a huge barrier in terms of UX and adoption of wallets. Helio helps merchants accept any kind of crypto payment and eventually off-ramp without knowing anything about blockchain. Helio is built on @Solana as it is ideal for payments, being super fast and cheap. Alternatives would include the Lightning network and Ethereum layer 2 solutions.

26/30 @at_mwagner gave a huge update on what to expect from @staratlas in the near future, with new game modes, improved graphics, a new companion app for leveling up characters. Can't say much about the game without actually trying it (it's currently in early access), but I will keep following the development.

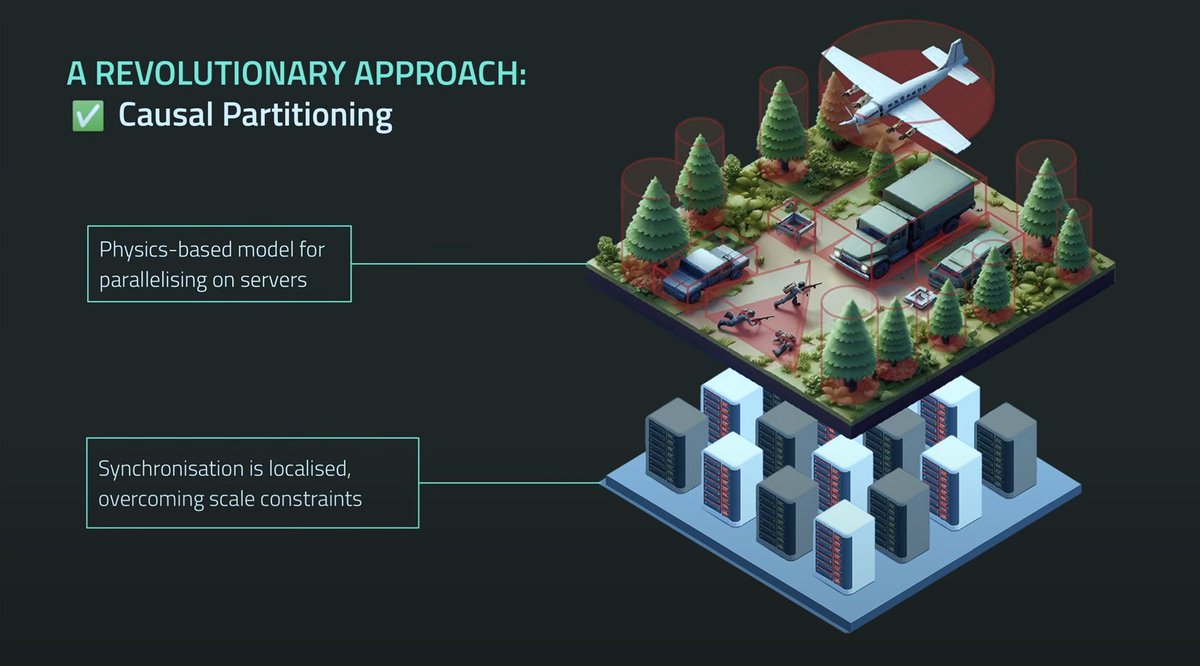

Very interesting to see progress from @MetaGravity_ on scaling up to tens of thousands of concurrent players in a 3D environment with physics enabled. This is enabled by using causal partitioning instead of traditional spatial sharding.

27/30 @PeterMooreLFC summarized the history of monetization in gaming based on his experience as an executive at Sega, Xbox and EA. The gaming industry is a 200 billion industry today and it won't stop growing any time soon.

Different monetization models have found success over time even though they weren't obvious or even met strong opposition when first proposed: free to play, DLC and expansion packs, mobile gaming, live services, subscription and cloud gaming.

Peter believes a decentralized infrastructure can bring benefits both to developers and gamers.

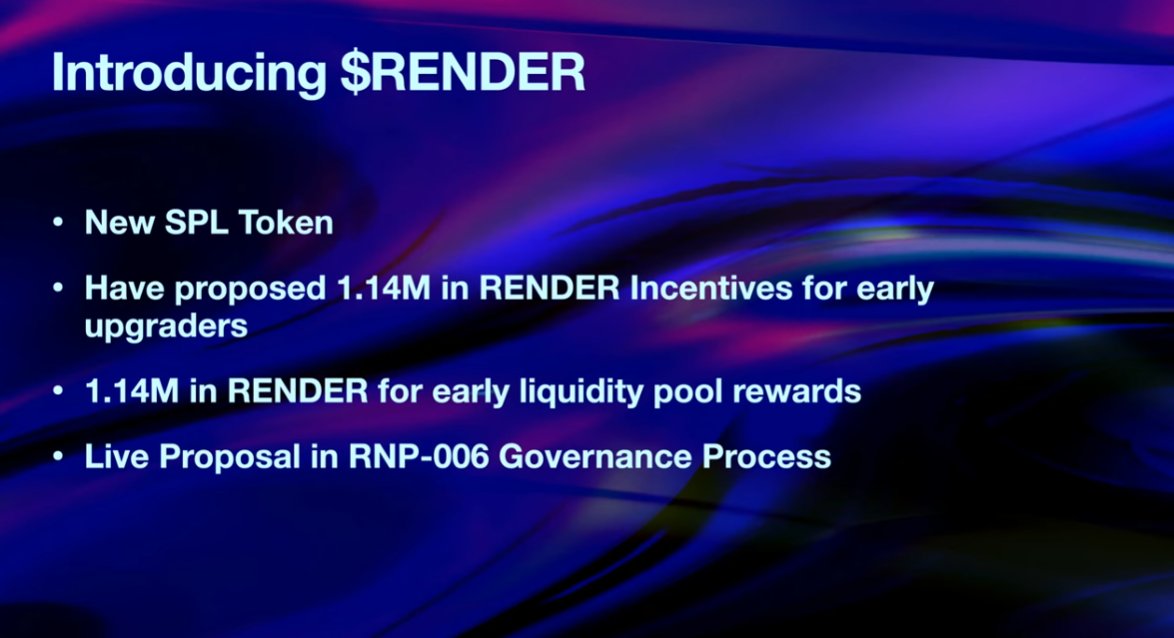

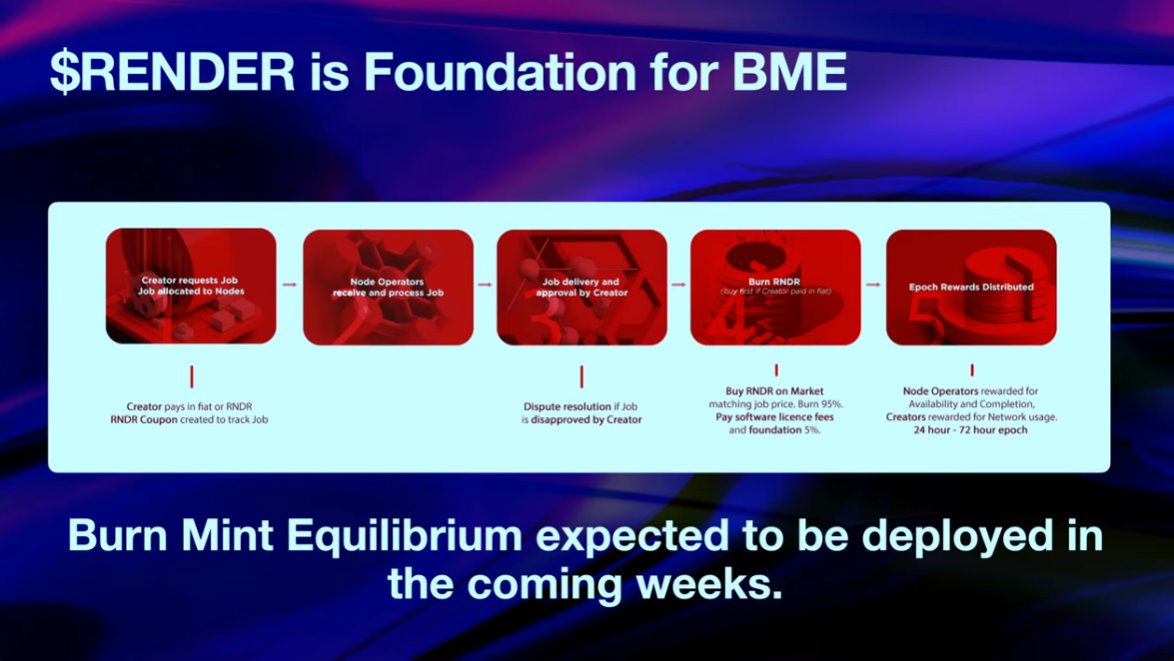

28/30 @drjonessf announced that the @rendernetwork infrastructure has upgraded to Solana, and introduced the $RNDR token migration to $RENDER on Solana. A burn-mint equilibrium model will be detailed in the coming weeks.

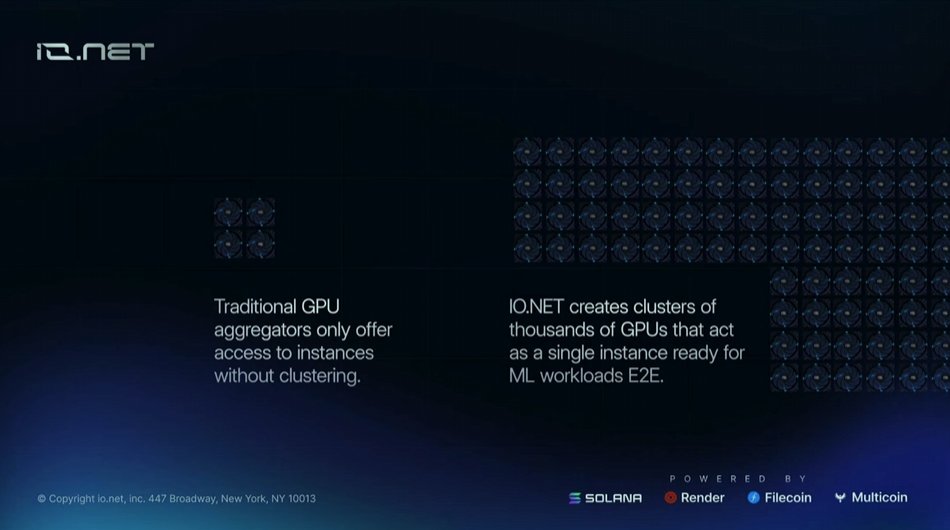

29/30 @MTorygreen presented how @ionet_official is addressing the GPU shortage on the cloud by providing the world's largest AI compute cloud on Solana. GPUs are provided from different sources including @rendernetwork. A critical differentiator from other providers is that Ionet can create clusters of GPUs, combining the processing power required for heavy AI workloads.

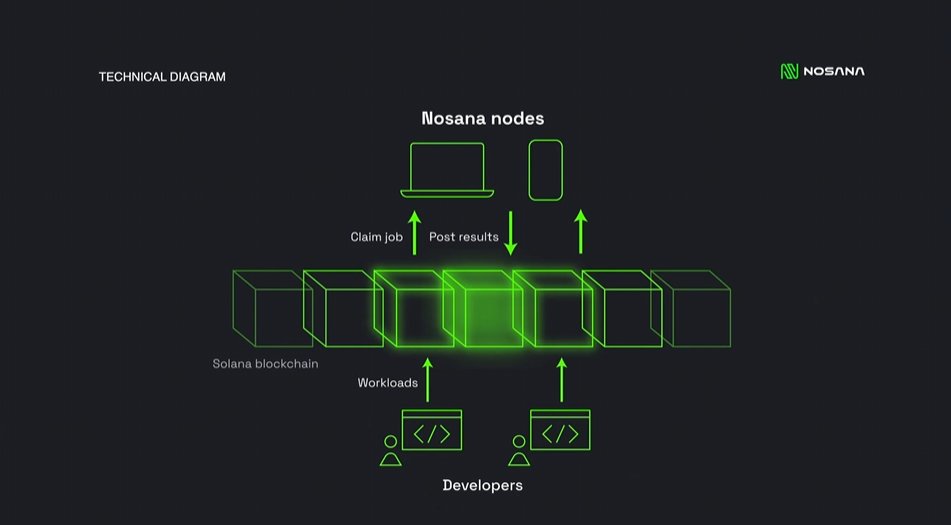

30/30 @nosana_ci is leveraging Solana to provide the world's largest compute grid. We are facing a problem of GPU shortage on the cloud. At the same time, consumer GPUs that are well suited for AI inferencing jobs may offer a way to address the increasing demand. Nosana is a decentralized open marketplace to connect users to GPU owners that are rewarded for solving jobs posted on Solana.

And that's all I have, even though I am sure I missed something important - the conference was great and @SolanaFndn did a great job organizing and providing video recordings immediately. Next year the conference moves to Singapore. See you there? :)
