“Animate Anyone” was released last night for making pose-guided videos. Let's dive in.

Paper: arxiv.org

Project: humanaigc.github.io

🧵1/

humanaigc.github.io/animate-anyone/

Animate Anyone

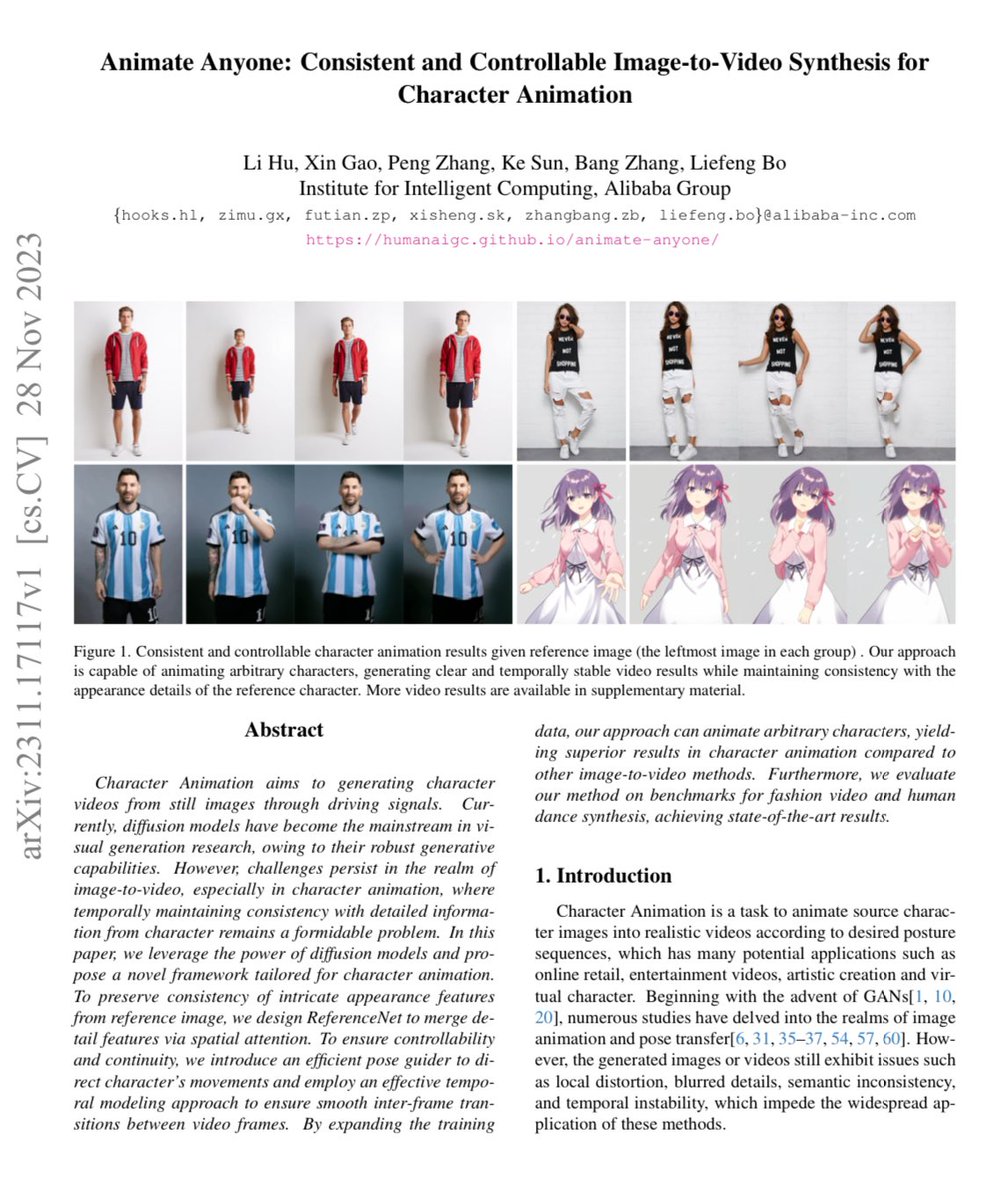

Animate Anyone: Consistent and Controllable Image-to-Video Synthesis for Character Animation

arxiv.org/abs/2311.17117

Animate Anyone: Consistent and Controllable Image-to-Video...

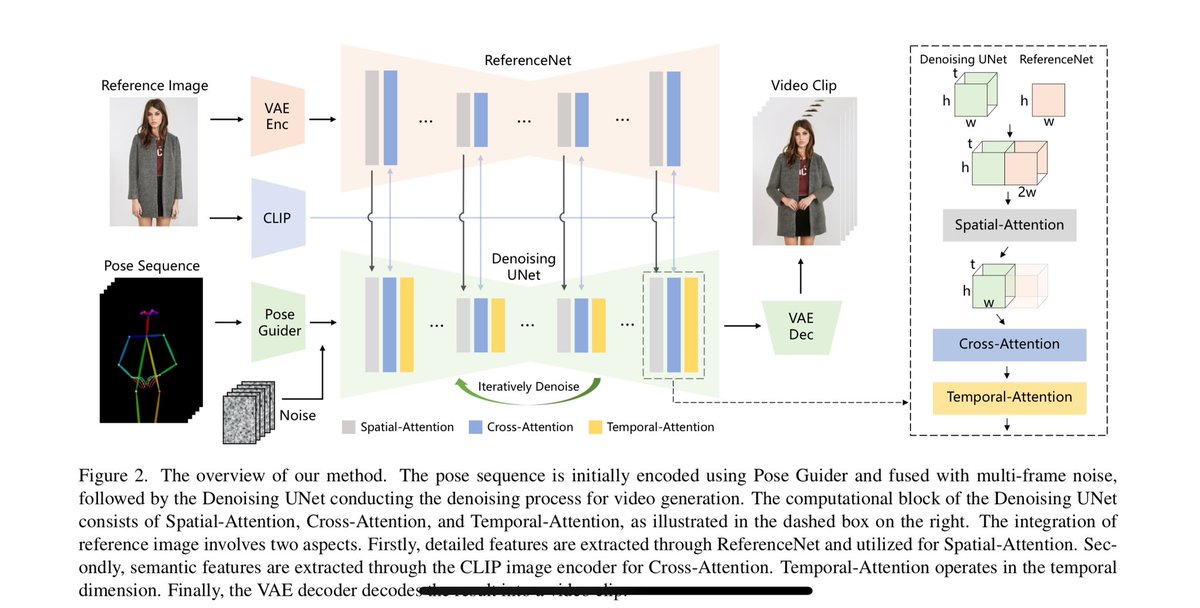

Character Animation aims to generating character videos from still images through driving signals. C...

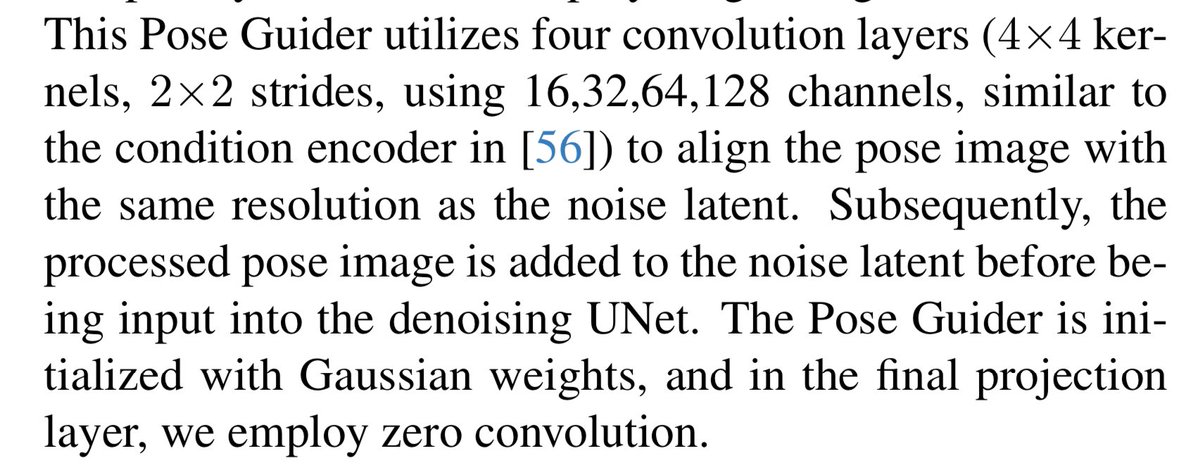

Then they train the temporal attention in isolation as is standard for AnimateDiff training.
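
To make "in isolation" concrete, here is a minimal sketch of picking which weights get gradients. The parameter names and the `temporal` keyword are hypothetical, made up for illustration; they are not taken from the paper or from any released code.

```python
def select_trainable(param_names, keyword="temporal"):
    """Map each parameter name to True if it should be trained.

    Only names containing `keyword` are marked trainable; everything
    else is treated as frozen.
    """
    return {name: keyword in name for name in param_names}


# Hypothetical parameter names, for illustration only.
names = [
    "unet.down.0.spatial_attn.to_q.weight",
    "unet.down.0.temporal_attn.to_q.weight",
    "pose_guider.conv.weight",
]
flags = select_trainable(names)

for name, trainable in flags.items():
    print(f"{'train ' if trainable else 'freeze'} {name}")
```

In PyTorch the same flags would typically be applied with `param.requires_grad_(flag)` while looping over `model.named_parameters()`.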

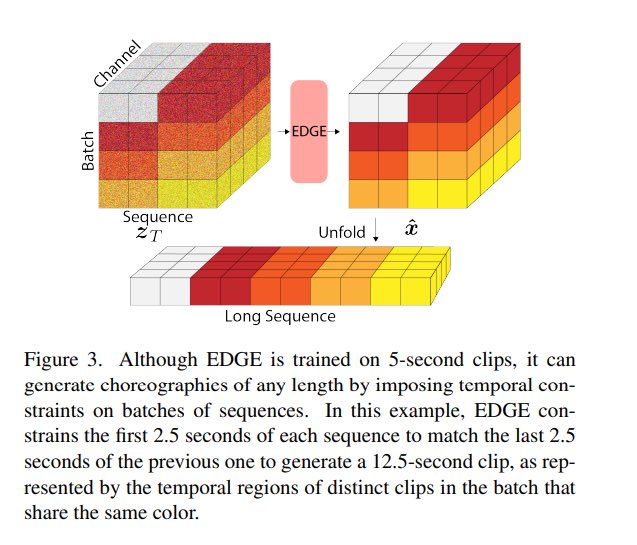

During inference they resize the poses to the size of the input character. For longer generation they use a trick from “Editable Dance Generation From Music” (arxiv.org) 9/
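
A rough sketch of what the pose resizing could look like, assuming poses are lists of 2D keypoints and that "size of the input character" means matching bounding-box height. Both are my assumptions, and `fit_pose_to_reference` is a hypothetical helper, not the authors' code.

```python
def bbox(points):
    """Axis-aligned bounding box of (x, y) keypoints."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return min(xs), min(ys), max(xs), max(ys)


def fit_pose_to_reference(pose, ref_pose):
    """Uniformly scale and shift `pose` so its bounding box has the same
    height, top edge, and horizontal centre as `ref_pose`."""
    px0, py0, px1, py1 = bbox(pose)
    rx0, ry0, rx1, ry1 = bbox(ref_pose)
    if py1 == py0:
        raise ValueError("driving pose has zero height")
    scale = (ry1 - ry0) / (py1 - py0)
    pose_cx = (px0 + px1) / 2
    ref_cx = (rx0 + rx1) / 2
    return [
        (ref_cx + (x - pose_cx) * scale, ry0 + (y - py0) * scale)
        for x, y in pose
    ]


# Toy keypoints: the reference character is twice as tall as the driving pose.
ref_pose = [(100, 50), (90, 150), (110, 250)]
driving_pose = [(10, 0), (5, 50), (15, 100)]
fitted = fit_pose_to_reference(driving_pose, ref_pose)
```

A uniform scale keeps limb proportions intact; only the overall size and position change.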

I’m still unclear on that final bit about long-duration animations. The examples run longer than 24 frames and are incredibly coherent, so it evidently works well.

Hopefully the code comes out soon, but if not the paper is detailed enough to repro. 10/

Digging into the EDGE paper more, I think they are using in-painting to generate longer animations. I've tried an in-painting-like approach with AnimateDiff to make longer animations, but it doesn't work well in general. Maybe it works here because of the extra conditioning 🤷‍♂️ 11/
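
If it really is in-painting, the frame bookkeeping might look something like the sketch below: each window after the first re-uses the last few frames of the previous window as fixed context and only generates the rest. The 24-frame window echoes the clip length mentioned in the thread; the overlap of 8 and the `plan_windows` helper are my own assumptions, not values from either paper.

```python
def plan_windows(total_frames, window=24, overlap=8):
    """Split a long clip into overlapping generation windows.

    Returns (start, end, n_fixed) tuples, where frames start..end-1 are
    produced together and the first `n_fixed` of them are held fixed
    (in-painted around) because the previous window already made them.
    """
    if total_frames <= 0:
        raise ValueError("total_frames must be positive")
    if not 0 <= overlap < window:
        raise ValueError("overlap must satisfy 0 <= overlap < window")
    plans = []
    start = 0
    while True:
        end = min(start + window, total_frames)
        n_fixed = 0 if start == 0 else overlap
        plans.append((start, end, n_fixed))
        if end >= total_frames:
            break
        start = end - overlap  # next window begins inside this one
    return plans


for start, end, n_fixed in plan_windows(56):
    print(f"frames {start:2d}..{end - 1:2d}: {n_fixed} fixed, {end - start - n_fixed} new")
```

A larger overlap gives each window more context at the cost of more windows per clip.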
