2. Range

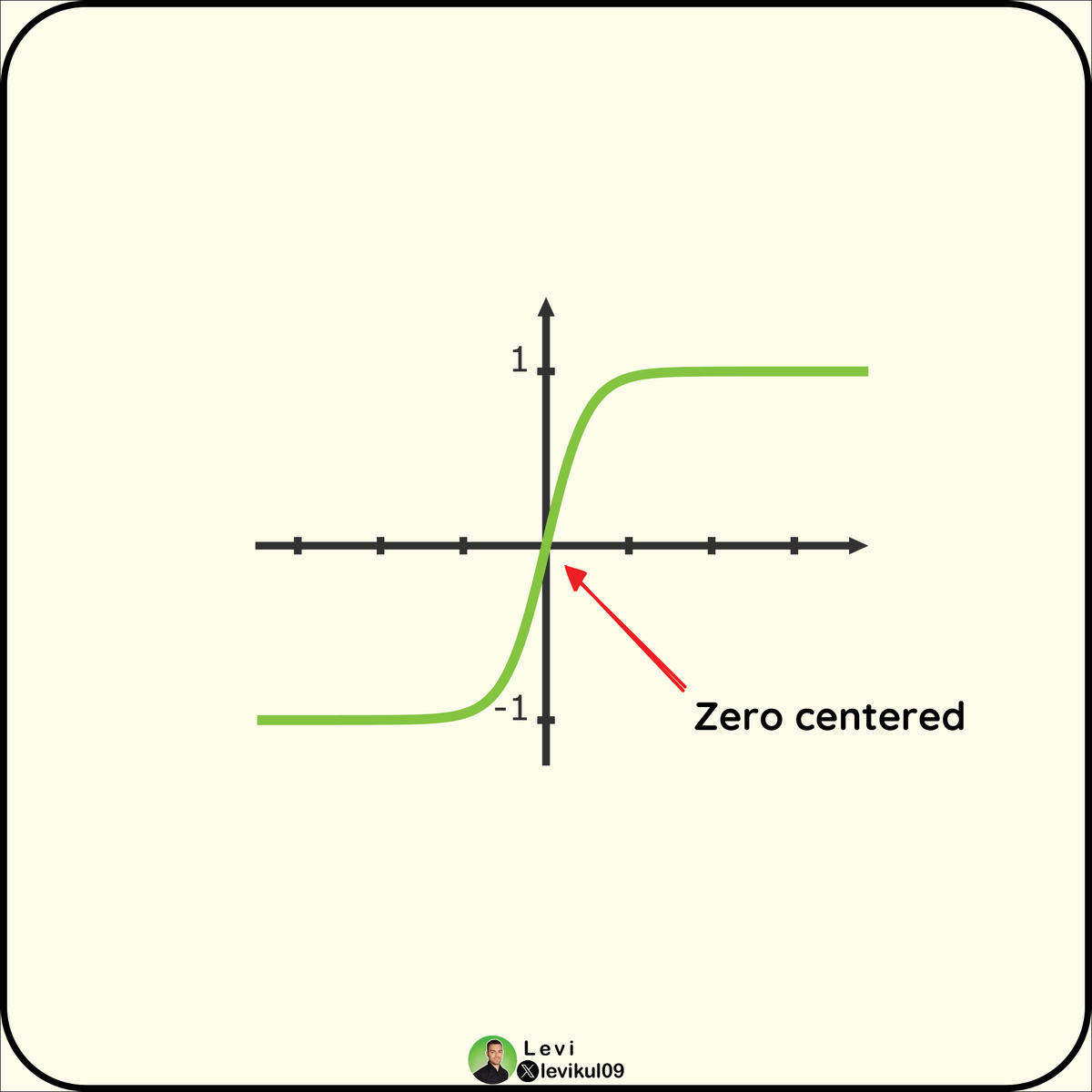

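The output of the tanh function lies strictly between -1 and 1.

Sigmoid, by comparison, outputs only positive values (between 0 and 1), while tanh can also be negative.

This can be an advantage in some neural networks: tanh outputs are centered around zero, which often helps gradient-based training.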
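To make the difference in range concrete, here is a minimal sketch that evaluates both functions on a few sample inputs. The `sigmoid` helper and the input list `xs` are illustrative assumptions, not part of the original thread; only Python's standard `math` module is used.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid, 1 / (1 + e^-x). Illustrative helper, not from the thread."""
    return 1.0 / (1.0 + math.exp(-x))


# Hypothetical sample inputs, chosen only for illustration.
xs = [-3.0, -1.0, 0.0, 1.0, 3.0]

tanh_out = [math.tanh(x) for x in xs]
sigmoid_out = [sigmoid(x) for x in xs]

# Print the two activations side by side for each input.
for x, t, s in zip(xs, tanh_out, sigmoid_out):
    print(f"x={x:+.1f}  tanh={t:+.4f}  sigmoid={s:.4f}")
```

For negative inputs tanh returns negative values, while sigmoid stays above 0. At x = 0, tanh gives 0 and sigmoid gives 0.5, which is what "centered around zero" refers to. Note that in floating point, very large inputs can round tanh to exactly 1.0 or -1.0, even though the mathematical function never reaches those values.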

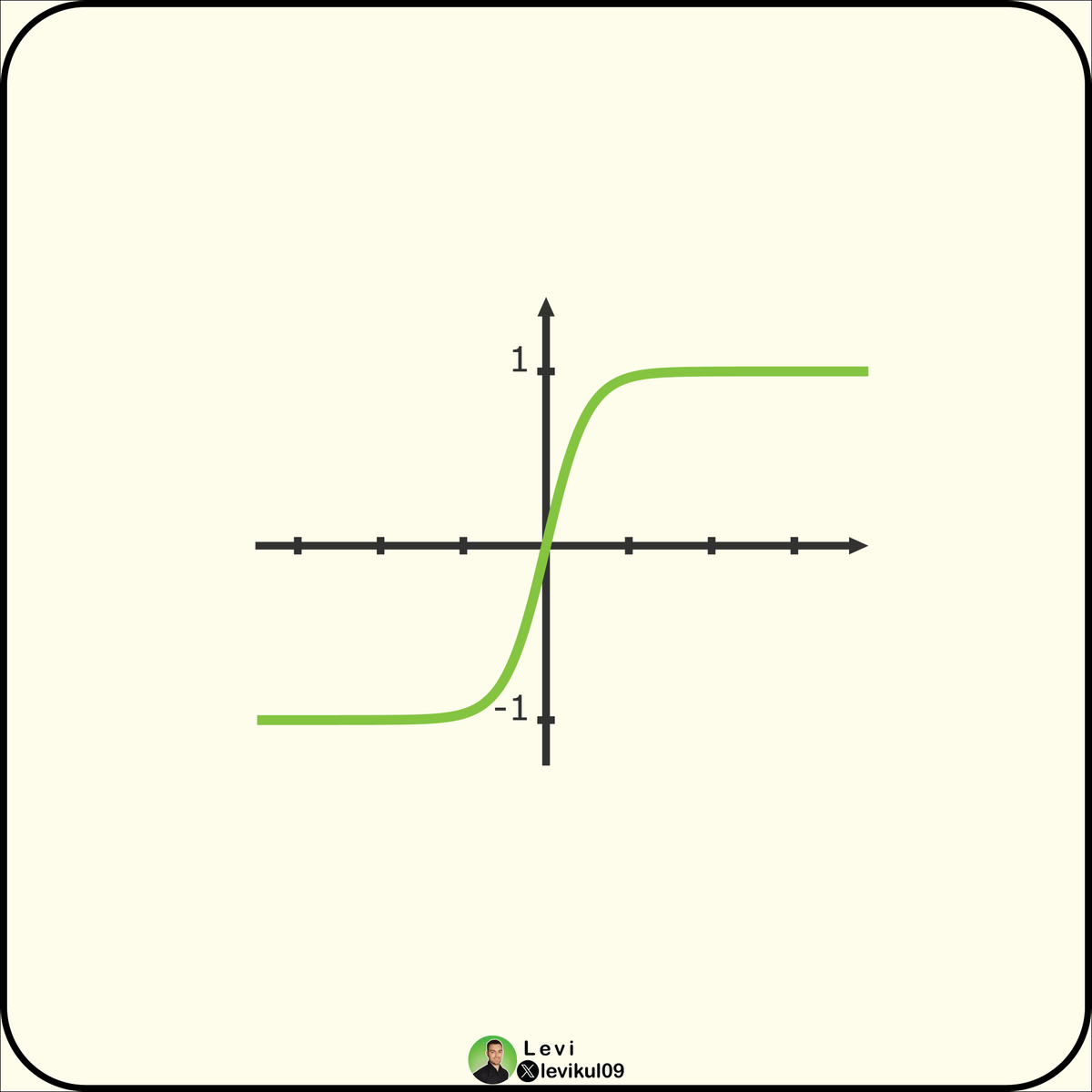

That's it for today.

I hope you've found this thread helpful.

Like/Retweet the first tweet below for support and follow @levikul09 for more Data Science threads.

Thanks 😉

If you haven't already, join our newsletter DSBoost.

We share:

• Interviews

• Podcast notes

• Learning resources

• Interesting collections of content

dsboost.dev
