NNs with a large number of parameters are powerful.

But

• They tend to overfit since they also learn the noise.

• They are complex, hence slow.

Dropout is a regularization technique that tackles overfitting without the cost of training and combining many large networks.

How does it work?

2/7

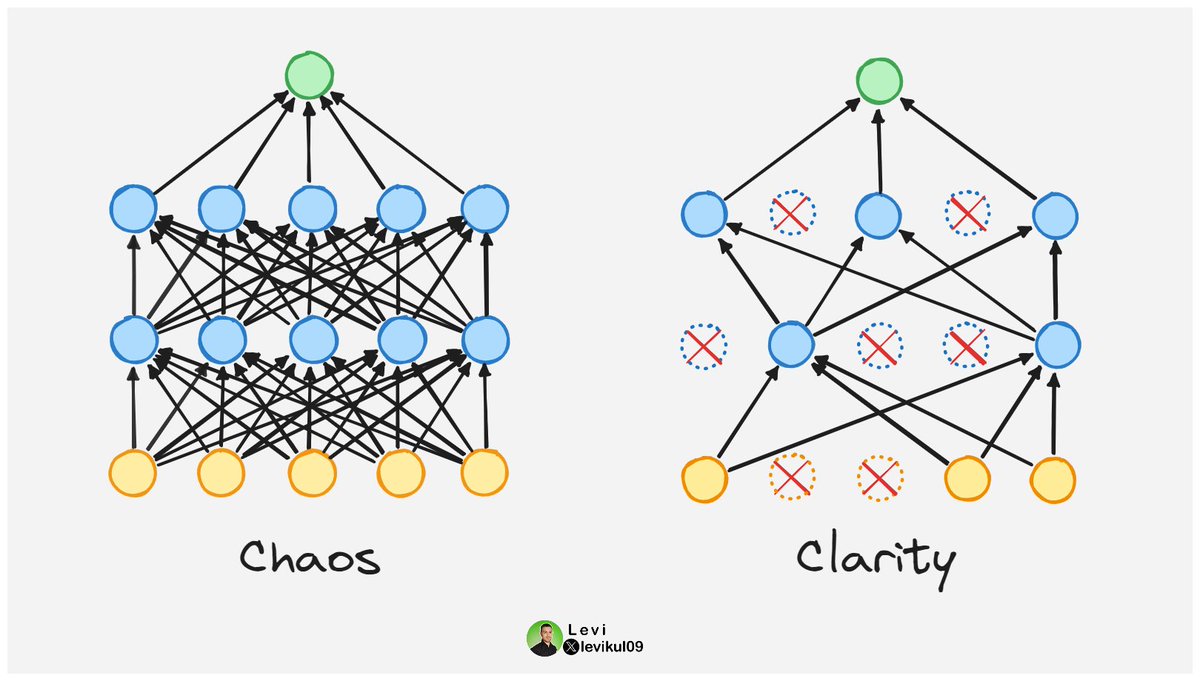

During training, it randomly selects some nodes and temporarily turns them off together with their connections (drops them out).
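The random "turning off" can be sketched with a 0/1 mask. This is a minimal NumPy illustration, not a library implementation: the names `dropout_mask`, `apply_dropout`, and `keep_prob` are hypothetical, and the keep probability of 0.5 is just an example value.

```python
import numpy as np

def dropout_mask(shape, keep_prob, rng):
    """Return a 0/1 mask: each unit is kept with probability keep_prob."""
    return (rng.random(shape) < keep_prob).astype(np.float64)

def apply_dropout(activations, keep_prob, rng):
    """Zero out a random subset of activations (training time only)."""
    mask = dropout_mask(activations.shape, keep_prob, rng)
    # A dropped unit outputs 0, so its outgoing connections carry no signal.
    return activations * mask, mask

rng = np.random.default_rng(0)   # seeded for reproducibility
h = np.ones((4, 6))              # toy activations: 4 examples, 6 hidden units
dropped, mask = apply_dropout(h, keep_prob=0.5, rng=rng)
print(mask)                      # a fresh mask is drawn on every call
```

Calling `apply_dropout` again draws a different mask, which is what makes each training step see a different sub-network.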

The result:

• We get a thinner network: each training step uses only a subset of the nodes.

3/7

• Because a different subset of neurons is dropped in each iteration, the remaining neurons cannot rely on any particular neighbor being present. They must learn features that generalize well, so they become more robust.

• The network becomes less sensitive to any individual weight, so the model generalizes better.

4/7

Ensemble learning achieves similar results, but training many separate networks requires a lot of compute.

Dropout simulates ensembling by randomly zeroing out different neurons during training and using a single network with scaled-down weights at test time.
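Why scaling down at test time approximates the ensemble can be checked numerically. The sketch below is an assumption-laden toy: a single ReLU layer with made-up sizes, hypothetical helper names `layer_train` and `layer_test`, and `keep_prob = 0.8` chosen only for illustration. It averages many randomly thinned forward passes and compares the result with one pass through the full layer scaled by `keep_prob`. Scaling a unit's output by `keep_prob` is equivalent to scaling its outgoing weights.

```python
import numpy as np

def layer_train(x, W, keep_prob, rng):
    """Training-time pass: ReLU layer followed by a random 0/1 dropout mask."""
    h = np.maximum(0.0, x @ W)
    mask = rng.random(h.shape) < keep_prob
    return h * mask

def layer_test(x, W, keep_prob):
    """Test-time pass: keep every unit, scale outputs by keep_prob."""
    h = np.maximum(0.0, x @ W)
    return h * keep_prob

rng = np.random.default_rng(0)
x = rng.normal(size=(1, 8))      # one toy input with 8 features
W = rng.normal(size=(8, 5))      # toy weights for 5 hidden units
keep_prob = 0.8

# Average the outputs of many randomly thinned networks (the implicit "ensemble").
samples = np.stack([layer_train(x, W, keep_prob, rng) for _ in range(20000)])
avg_train = samples.mean(axis=0)
test_out = layer_test(x, W, keep_prob)

print(avg_train)
print(test_out)                  # close to the averaged training output
```

Many libraries use the equivalent "inverted" variant instead: they divide the kept activations by `keep_prob` during training, so the network needs no change at test time.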

5/7

Did you like this post?

Hit that follow button for me and pay back with your support.

It literally takes 1 second for you but makes me 10x happier.

Thanks 😉

6/7

If you haven't already, join our newsletter DSBoost.

We share:

• Interviews

• Podcast notes

• Learning resources

• Interesting collections of content

dsboost.dev

7/7
