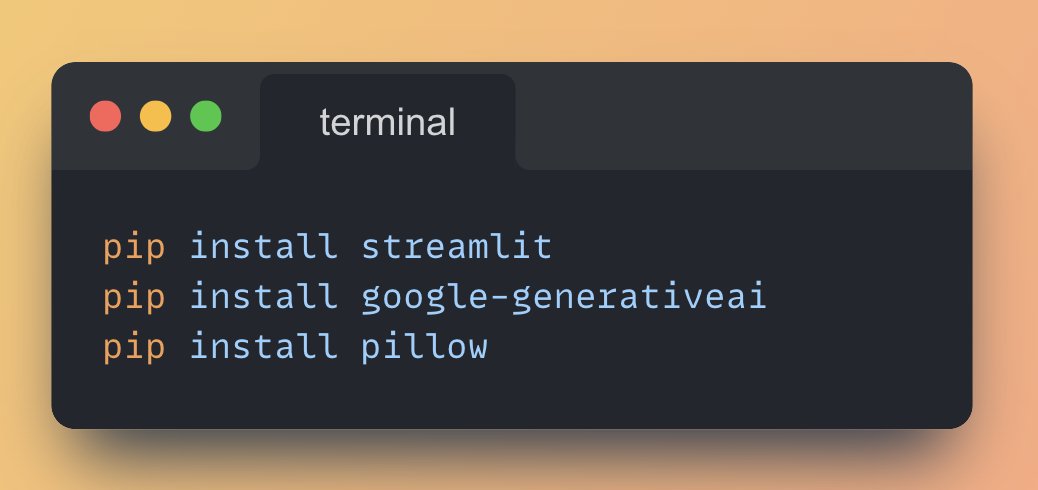

Build a multimodal LLM chatbot using Gemini Flash in just 30 lines of Python code (step-by-step instructions):
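
Here's a minimal sketch of what the core of such a chatbot could look like. It is not the exact code from the demo: it assumes the `google-generativeai` SDK and the `gemini-1.5-flash` model name, and `build_parts`, `Chatbot`, and `make_gemini_model` are illustrative names made up for this example.

```python
from dataclasses import dataclass, field
from typing import Any, List, Optional, Tuple


def build_parts(prompt: str, image: Optional[Any] = None) -> List[Any]:
    """Combine an optional image and a text prompt into one multimodal request."""
    parts: List[Any] = []
    if image is not None:
        parts.append(image)  # e.g. a PIL.Image.Image
    parts.append(prompt)
    return parts


@dataclass
class Chatbot:
    # Any object exposing generate_content(parts) -> response with a .text attribute.
    model: Any
    # Transcript kept for display only; each request here is single-turn.
    history: List[Tuple[str, str]] = field(default_factory=list)

    def ask(self, prompt: str, image: Optional[Any] = None) -> str:
        """Send the prompt (and optional image) to the model and record the exchange."""
        response = self.model.generate_content(build_parts(prompt, image))
        self.history.append(("user", prompt))
        self.history.append(("assistant", response.text))
        return response.text


def make_gemini_model(api_key: str, name: str = "gemini-1.5-flash") -> Any:
    """Create a Gemini model client (assumes `pip install google-generativeai`)."""
    import google.generativeai as genai  # imported lazily so the rest runs without the SDK

    genai.configure(api_key=api_key)
    return genai.GenerativeModel(name)
```

To try it, you would create the bot with `Chatbot(make_gemini_model(api_key))` and call `bot.ask("Describe this picture", image)`, where `image` is a Pillow image you opened yourself. A UI layer (for example a Streamlit chat page) would sit on top of `ask` and render `history`.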

Find all the awesome LLM app demos with RAG in the following GitHub repo.

P.S: Don't forget to star the repo to show your support 🌟

github.com

If you find this useful, RT to share it with your friends.

Don't forget to follow me @Saboo_Shubham_ for more LLM tips and tutorials like this.

x.com
